This post is intended for beginners who want to understand how event-driven architectures communicate in AWS. The repository holding the Python scripts used here is linked at the end of the post.

Log in to your AWS console with your IAM credentials, select the region closest to you & follow the steps below:

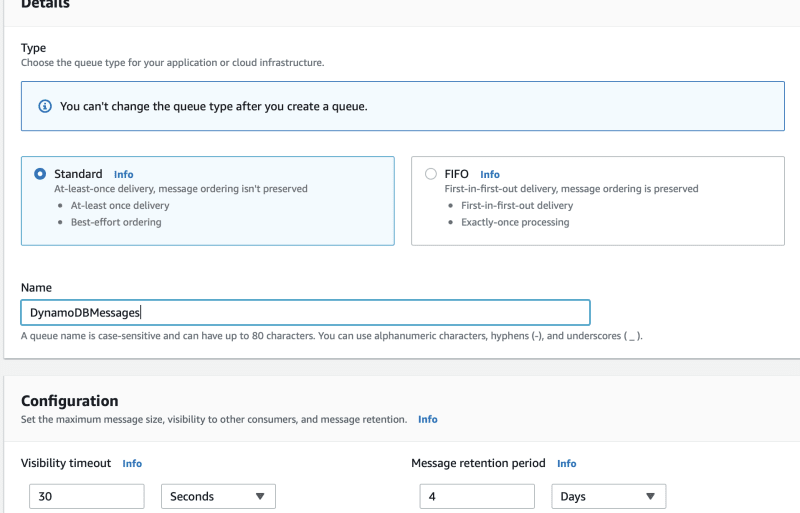

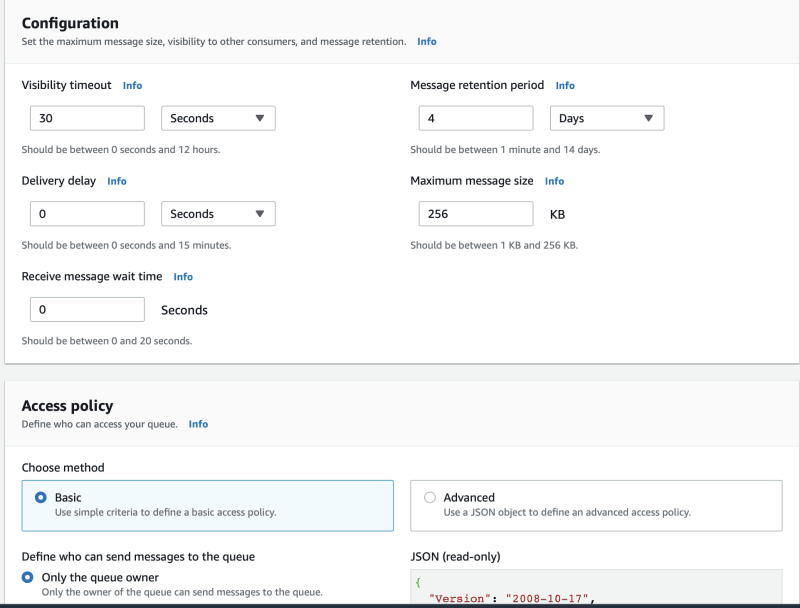

1. Create an SQS (Simple Queue Service) queue:

Create a simple SQS queue called "DynamoDBMessages" which will receive a number of messages from the EC2 instance running in our account.

There is no need to change the access policy or the configuration; the defaults are fine.
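For readers who prefer scripting over the console, the same queue can be created with boto3. This is only a minimal sketch, assuming boto3 is installed and credentials are already configured; the helper name `create_queue` is made up for illustration and is not part of the repository.

```python
def create_queue(name="DynamoDBMessages", sqs_client=None):
    """Create a standard SQS queue with default settings and return its URL."""
    if sqs_client is None:
        # Imported lazily so the helper can be exercised with a stub client.
        import boto3
        sqs_client = boto3.client("sqs")
    # No Attributes are passed, so the queue keeps the default configuration.
    response = sqs_client.create_queue(QueueName=name)
    return response["QueueUrl"]
```

Calling `create_queue()` with no arguments uses the default boto3 SQS client for your configured region.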

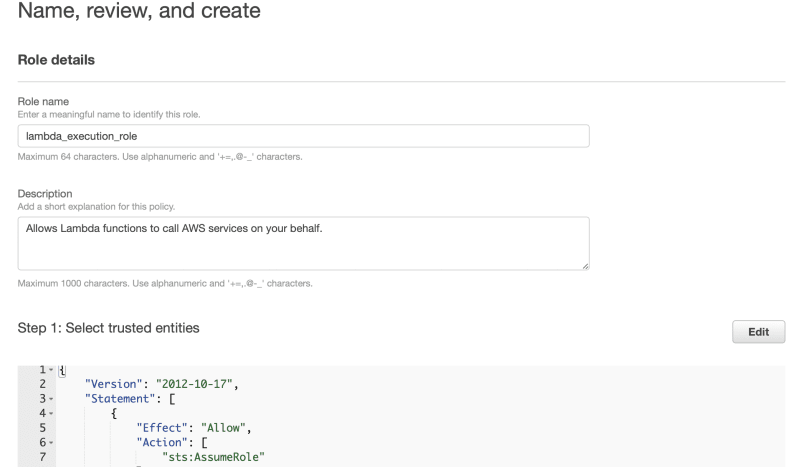

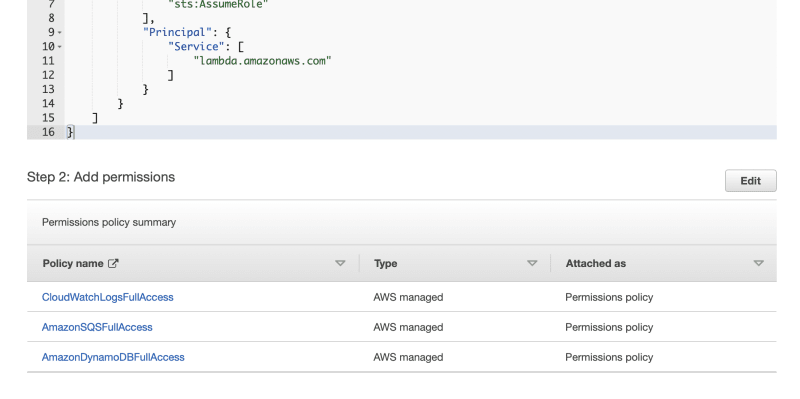

2. Lambda execution role:

We need to create an execution role for the Lambda service so that the function created in the next step can read messages from the SQS queue, write those messages to the DynamoDB table & write to CloudWatch Logs.
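To make the three permissions concrete, here is a rough sketch of a policy document that such a role might carry, built as a Python dictionary. The account ID `123456789012` and region `us-east-1` in the ARNs are placeholders, and the exact action list is an assumption (the console screenshot may use broader managed policies).

```python
import json


def build_lambda_policy(queue_arn, table_arn):
    """Return an IAM policy document covering SQS reads, DynamoDB writes and logging."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {   # Let the SQS trigger poll the queue and delete processed messages.
                "Effect": "Allow",
                "Action": [
                    "sqs:ReceiveMessage",
                    "sqs:DeleteMessage",
                    "sqs:GetQueueAttributes",
                ],
                "Resource": queue_arn,
            },
            {   # Let the function write items into the table.
                "Effect": "Allow",
                "Action": ["dynamodb:PutItem"],
                "Resource": table_arn,
            },
            {   # Let the function write its logs to CloudWatch Logs.
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                "Resource": "*",
            },
        ],
    }


# Placeholder ARNs: replace the region and account ID with your own.
policy = build_lambda_policy(
    "arn:aws:sqs:us-east-1:123456789012:DynamoDBMessages",
    "arn:aws:dynamodb:us-east-1:123456789012:table/Messages",
)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the policy editor when creating the role.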

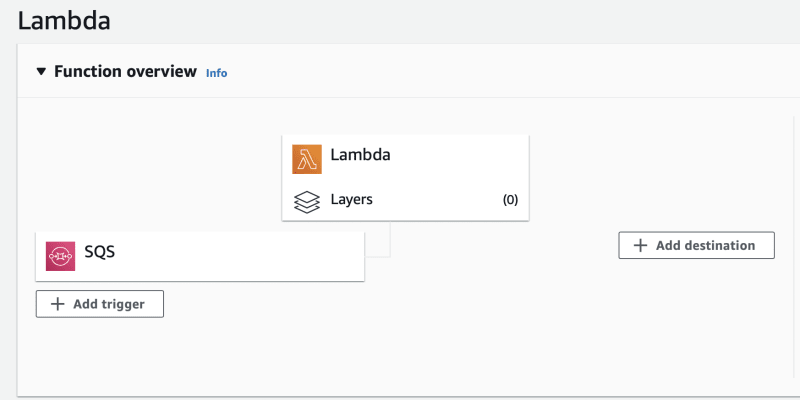

3. Lambda function:

This function will be triggered by an event whenever messages are placed on the DynamoDBMessages queue created in step 1.

Provide the function name & select Python 3.9 as the runtime, since the function is written in Python.

The architecture can be left at the default, x86_64. Click on Create Function.

Once the Function Overview screen opens, click on "Add Trigger", select SQS in the trigger configuration & select the queue we created above.

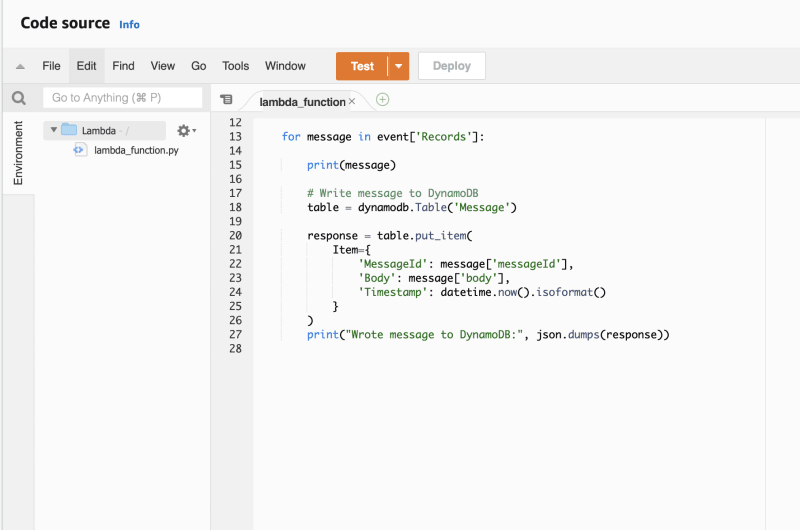

Select the Code tab & replace the contents of lambda_function.py with the code from the file of the same name (lambda_function.py) in the repository linked at the end of this post.

Next, deploy the code by clicking on Deploy.
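To give an idea of what an SQS-triggered handler does, here is a minimal sketch. It is not the repository's code: the attribute names `MessageId` and `Body` and the optional `table` parameter are assumptions made for illustration. The event shape (`Records`, each with `messageId` and `body`) is the one Lambda passes for SQS triggers.

```python
import json

TABLE_NAME = "Messages"  # table created in step 4


def record_to_item(record):
    """Map one SQS record to a DynamoDB item (attribute names are hypothetical)."""
    return {"MessageId": record["messageId"], "Body": record["body"]}


def lambda_handler(event, context, table=None):
    """Write every SQS record in the event to the DynamoDB table."""
    if table is None:
        # Imported lazily so the handler can be tried locally with a stub table.
        import boto3
        table = boto3.resource("dynamodb").Table(TABLE_NAME)
    records = event.get("Records", [])
    for record in records:
        table.put_item(Item=record_to_item(record))
    return {"statusCode": 200, "body": json.dumps({"written": len(records)})}
```

Lambda only ever passes `event` and `context`, so the extra `table` argument stays unused in production and exists purely to make local testing easier.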

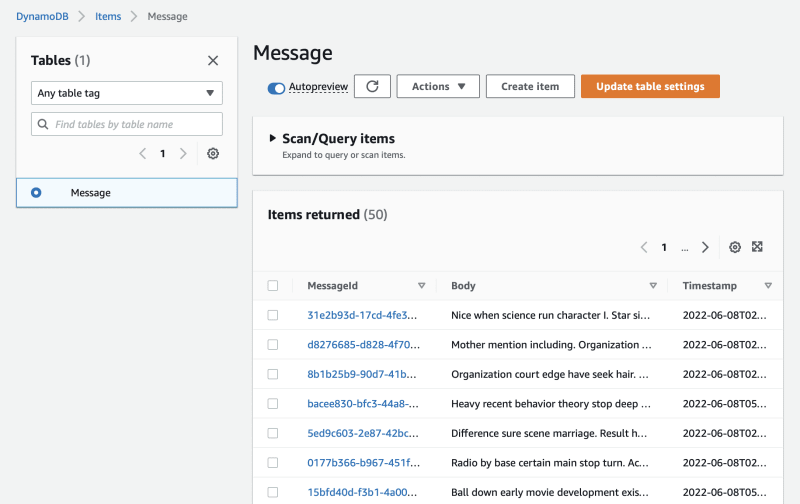

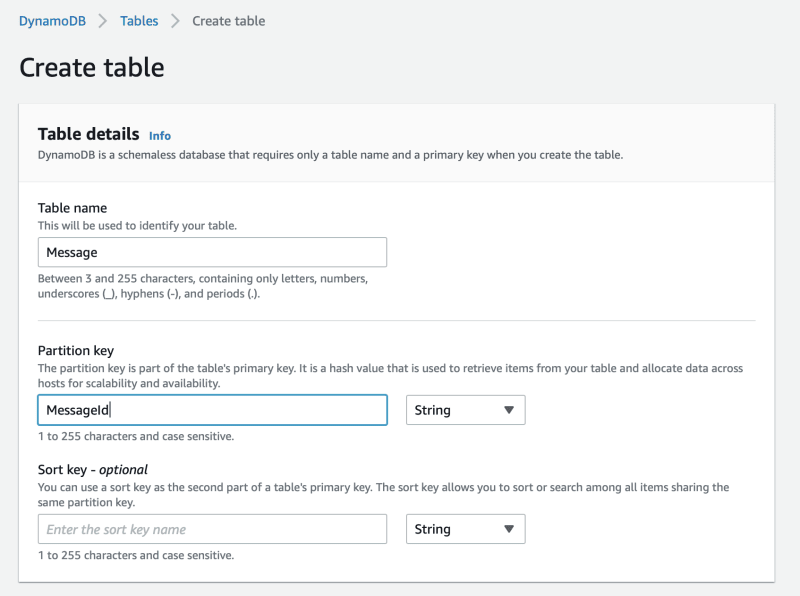

4. Create a DynamoDB table:

Create a table named Messages as shown below:
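The table can also be created from a script. The sketch below assumes a single string partition key called `MessageId` and on-demand billing; both are assumptions, so match the key name and settings to what the screenshot shows in your own setup.

```python
def create_messages_table(table_name="Messages", key_name="MessageId", dynamodb_client=None):
    """Create a DynamoDB table with one string partition key and on-demand billing."""
    if dynamodb_client is None:
        # Imported lazily so the helper can be exercised with a stub client.
        import boto3
        dynamodb_client = boto3.client("dynamodb")
    return dynamodb_client.create_table(
        TableName=table_name,
        AttributeDefinitions=[{"AttributeName": key_name, "AttributeType": "S"}],
        KeySchema=[{"AttributeName": key_name, "KeyType": "HASH"}],  # HASH = partition key
        BillingMode="PAY_PER_REQUEST",  # no capacity planning needed for a demo
    )
```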

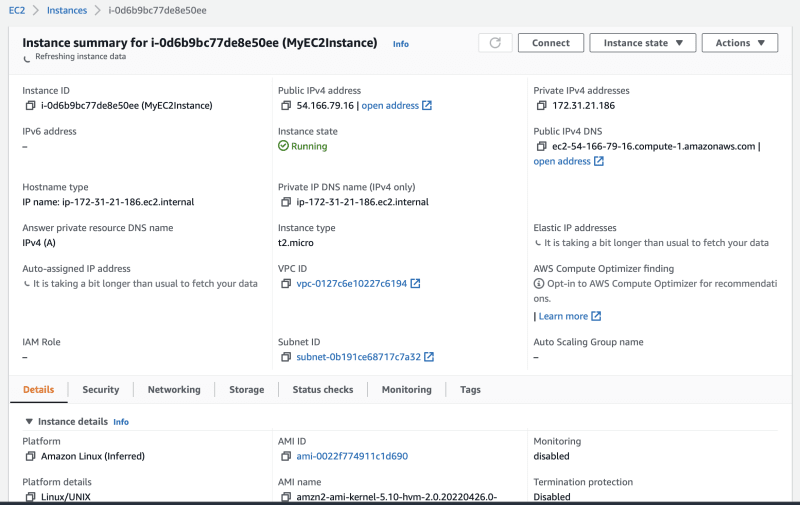

5. Create an EC2 instance:

This instance will execute the code which will send messages to SQS. Be sure to select instance type t2.micro, which is free-tier eligible.

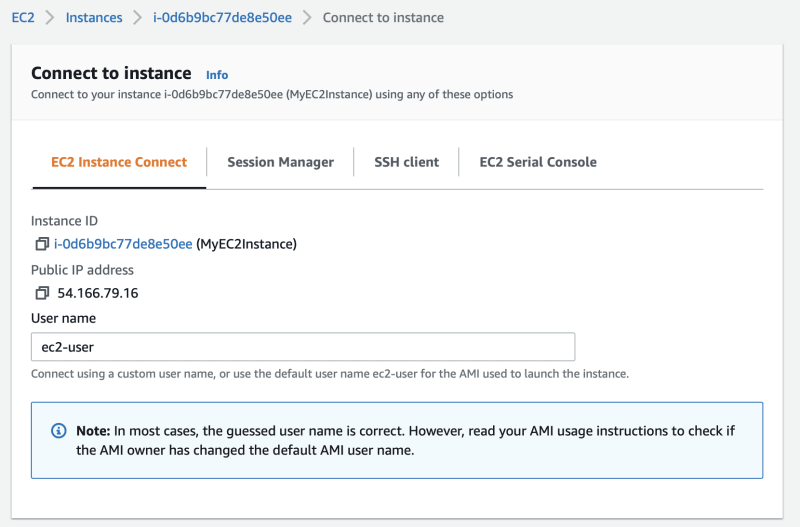

Select the EC2 instance once it's in the running state & click on Connect; alternatively, we can also use the AWS CLI. Once you are able to SSH into the instance, you can run the scp command from your local machine to copy the send_message.py file to the instance (this requires the key pair .pem file created at the time of instance creation).

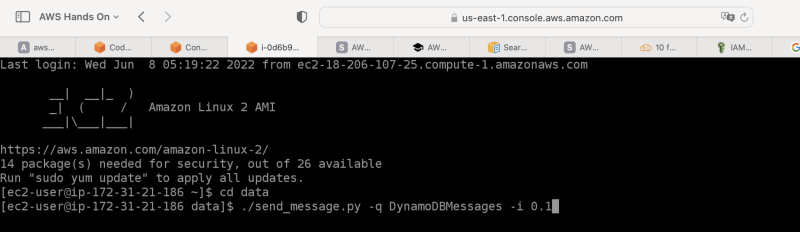

Before running the script, install the boto3 & faker packages on the EC2 instance, as they are not pre-installed. Next, you might face a "NoCredentialsError" thrown by the boto3 package. To overcome this, there are three options:

- Run aws configure & provide the access key ID, secret access key, region & output format.

- Edit the credentials file in the ~/.aws folder. Create the folder/file if not present & then enter the above access key & region details.

- Use the STS service to get temporary credentials for package execution on EC2.

The first two options are not safe, as any other user with access to this EC2 instance can get hold of these credentials.
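As a rough illustration of the STS route, the sketch below exchanges a role for short-lived credentials and builds an SQS client from them. The role ARN you pass in and the helper names are hypothetical. Note that the `assume_role` call itself still needs some base identity that is allowed to assume the role, for example an IAM role attached to the instance.

```python
def assume_role_client_kwargs(sts_client, role_arn, session_name="send-message-session"):
    """Call STS AssumeRole and return keyword arguments for boto3.client()."""
    response = sts_client.assume_role(RoleArn=role_arn, RoleSessionName=session_name)
    creds = response["Credentials"]  # temporary, expires automatically
    return {
        "aws_access_key_id": creds["AccessKeyId"],
        "aws_secret_access_key": creds["SecretAccessKey"],
        "aws_session_token": creds["SessionToken"],
    }


def temporary_sqs_client(role_arn, region_name):
    """Build an SQS client backed by temporary credentials (role_arn is a placeholder)."""
    import boto3  # imported lazily; only needed when talking to AWS for real
    kwargs = assume_role_client_kwargs(boto3.client("sts"), role_arn)
    return boto3.client("sqs", region_name=region_name, **kwargs)
```

Because the credentials expire on their own, nothing long-lived has to be stored on the instance.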

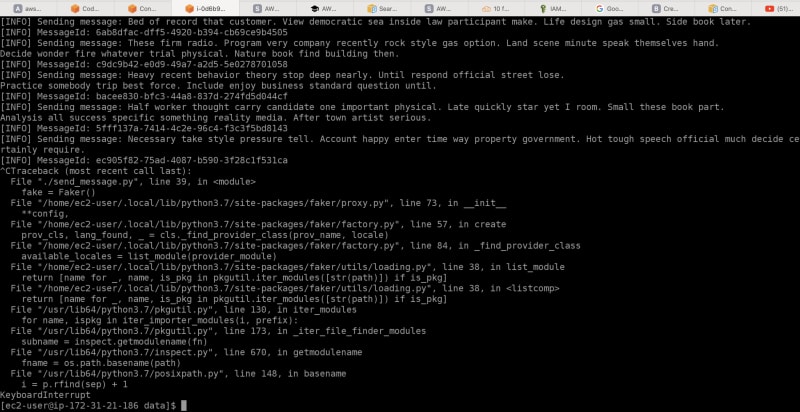

Once the above steps are complete, run the send_message.py script on the instance to start sending messages to the DynamoDBMessages queue.

The name of the SQS queue you created should follow the -q option in the command.

Press Ctrl+C once you see an adequate number of messages being sent.
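For orientation, a sender script along these lines could look like the sketch below. It is not the repository's send_message.py: the payload fields are invented, random data comes from the standard library rather than faker to keep the example dependency-free, and the only command-line option modelled is `-q`.

```python
import argparse
import json
import random
import time
import uuid


def parse_args(argv=None):
    """Parse the -q option that carries the SQS queue name."""
    parser = argparse.ArgumentParser(description="Send sample messages to an SQS queue")
    parser.add_argument("-q", dest="queue_name", required=True, help="name of the SQS queue")
    return parser.parse_args(argv)


def make_message():
    """Build a small JSON payload (hypothetical fields, stand-in for faker data)."""
    return json.dumps({"id": str(uuid.uuid4()), "value": random.randint(1, 100)})


def send_messages(sqs_client, queue_url, count=None, interval=1.0):
    """Send messages until `count` is reached, or until Ctrl+C when count is None."""
    sent = 0
    try:
        while count is None or sent < count:
            sqs_client.send_message(QueueUrl=queue_url, MessageBody=make_message())
            sent += 1
            time.sleep(interval)
    except KeyboardInterrupt:
        pass  # Ctrl+C stops the loop cleanly
    return sent


def main(argv=None):
    args = parse_args(argv)
    import boto3  # imported lazily; needs credentials configured as described above
    sqs = boto3.client("sqs")
    queue_url = sqs.get_queue_url(QueueName=args.queue_name)["QueueUrl"]
    print(f"Sent {send_messages(sqs, queue_url)} messages")
```

To use it as a script, call `main()` at the bottom of the file and pass the queue name after `-q`.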

Check the DynamoDB table "Messages" for the messages that travelled EC2 -> SQS -> Lambda -> DynamoDB.

For any errors, you can also check CloudWatch Logs, under the Lambda function's log group.

GitHub Repository:

https://github.com/neetu-mallan/SQSLambdaDynamoDB/tree/master
