Software testing is a broad discipline that covers many types of tests. To build a scalable system, it is important to exercise as many edge cases as practical and confirm that the system handles them.

While some tests are run directly against the system's code (unit testing, integration testing), others are run against the running system in different environments and conditions (stress testing).

End-to-end (E2E) testing takes the widest view: it exercises complete workflows through the fully integrated system, and an E2E strategy typically combines code-based and non-code-based tests.

In this article, we focus on non-code-based tests, specifically performance testing, and on how these tests can provide insights that help you improve your codebase.

Why Performance Testing?

Before getting to the “why”, let’s first answer the question “What is performance testing?” Performance testing checks how well software performs when it is used as part of an integrated system. It is a form of non-functional testing. The term load testing is often used interchangeably with it, although load testing is strictly one type of performance testing.

Performance testing is used to evaluate the program's efficiency and speed. It examines the system's performance under the specified load and determines the overall speed, stability, and responsiveness of the system.

Performance testing is less prescriptive than code-based testing. In unit testing, for example, the code is split into modules and each unit is tested individually.

In performance testing, the flow and the approach vary from application to application, because different applications need different kinds of tests to assess their performance. To keep the work manageable, teams can follow a standard process.

The Process of Performance Testing

The first step in performance testing is identifying the test environment. Here you get to know your production environment, your physical test environment, and the testing tools that are available. Before you start testing, make sure you thoroughly understand the hardware, software, and network configurations in use. This makes your tests more effective and helps identify problems that testers might run into during the testing process.

The next step is to identify performance acceptance criteria. This means setting goals and constraints for parameters such as throughput, resource utilization, and response time. Beyond these goals and constraints, additional project success criteria should be defined. Because project specifications frequently do not include a wide enough range of performance benchmarks, testers should be given the authority to set performance criteria and goals.
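As a rough illustration, acceptance criteria can be written down as data and checked automatically against measured results. The sketch below is a minimal example under stated assumptions: the `AcceptanceCriteria` class, the `evaluate` function, and every threshold value are hypothetical placeholders, not recommended targets.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class AcceptanceCriteria:
    """Hypothetical performance goals; the numbers used below are placeholders."""
    max_p95_response_ms: float  # response-time goal
    min_throughput_rps: float   # throughput goal, in requests per second
    max_cpu_percent: float      # resource-usage limit


def evaluate(criteria: AcceptanceCriteria, measured: Dict[str, float]) -> List[str]:
    """Return a list of violated criteria; an empty list means the run passed."""
    violations = []
    if measured["p95_response_ms"] > criteria.max_p95_response_ms:
        violations.append("p95 response time above goal")
    if measured["throughput_rps"] < criteria.min_throughput_rps:
        violations.append("throughput below goal")
    if measured["cpu_percent"] > criteria.max_cpu_percent:
        violations.append("CPU utilization above limit")
    return violations


# Example values are invented for illustration only.
criteria = AcceptanceCriteria(
    max_p95_response_ms=500, min_throughput_rps=100, max_cpu_percent=75
)
measured = {"p95_response_ms": 620.0, "throughput_rps": 140.0, "cpu_percent": 60.0}
print(evaluate(criteria, measured))
```

Keeping the criteria in one place like this makes it easier to agree on them before testing starts and to fail a test run automatically when a goal is missed.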

The next step is designing the tests. This involves identifying the key scenarios so that the important use cases are covered, and working out how usage is likely to differ among end users. You need to simulate a range of end users, plan the performance test data, and specify the metrics to be measured.
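One possible way to capture a range of end users is a weighted scenario mix, where each simulated user is assigned a scenario in proportion to its expected share of traffic. The sketch below assumes three made-up scenarios and weights; `SCENARIO_WEIGHTS` and `plan_virtual_users` are hypothetical names, and real weights would come from usage data.

```python
import random
from typing import Dict

# Hypothetical scenarios and traffic shares, for illustration only.
SCENARIO_WEIGHTS = {"browse": 0.6, "search": 0.3, "checkout": 0.1}


def plan_virtual_users(total_users: int, weights: Dict[str, float], seed: int = 0) -> Dict[str, int]:
    """Assign each simulated user a scenario, sampled according to the weights."""
    rng = random.Random(seed)  # fixed seed so the plan is reproducible
    names = list(weights)
    picks = rng.choices(names, weights=[weights[n] for n in names], k=total_users)
    return {name: picks.count(name) for name in names}


plan = plan_virtual_users(100, SCENARIO_WEIGHTS)
print(plan)  # counts per scenario; they always add up to 100
```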

Once the test design is in place, set up the test environment accordingly, and configure the tools and other resources you need before running the tests.

After the setup is complete, implement the test design: create the performance tests in accordance with your design, then run them.

Lastly, compile, analyze, and share the test results. Then tune the application and retest to see whether performance has improved or worsened. Because the gains typically shrink with each retest, stop once the CPU becomes the bottleneck. At that point, you may want to consider adding CPU power.
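To make the execution step more concrete, here is a minimal sketch of a load run using only the Python standard library. It is not a substitute for a dedicated load-testing tool. The `fake_request` function is a stand-in (an assumption) for a real call to the system under test, and the request count and concurrency level are arbitrary.

```python
import time
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def fake_request(i: int) -> None:
    """Stand-in for a real request to the system under test (assumption)."""
    time.sleep(0.01)  # pretend the system needs about 10 ms to answer


def timed_call(i: int) -> Tuple[float, bool]:
    """Run one request and return (duration in seconds, success flag)."""
    start = time.perf_counter()
    ok = True
    try:
        fake_request(i)
    except Exception:
        ok = False
    return time.perf_counter() - start, ok


def run_load(num_requests: int, concurrency: int) -> Tuple[List[Tuple[float, bool]], float]:
    """Send num_requests requests using `concurrency` worker threads."""
    wall_start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        results = list(pool.map(timed_call, range(num_requests)))
    return results, time.perf_counter() - wall_start


results, wall = run_load(num_requests=50, concurrency=10)
print(f"{len(results)} requests completed in {wall:.2f} s")
```

The per-request durations and success flags collected here are the raw material for metrics such as throughput, response time, and error rate.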

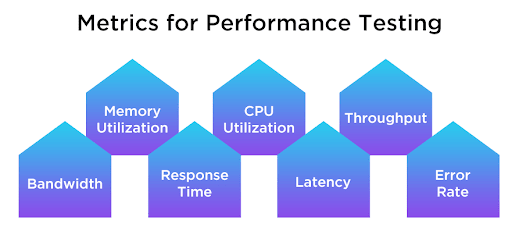

Metrics for Performance Testing

To better assess performance, one should consider a few system-level factors that play a vital role in performance.

The first is memory utilization, the percentage of primary memory (RAM) consumed while performing operations. Closely related is CPU utilization, the percentage of processing capacity used to handle requests.
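System-wide memory and CPU figures usually come from operating-system or monitoring tools. As a rough, process-level approximation, the sketch below uses Python's standard `time` and `tracemalloc` modules to estimate how much CPU time and memory a single workload consumes. The `measure` and `sample_workload` functions are hypothetical names, and the figures describe only the current Python process, not the whole machine.

```python
import time
import tracemalloc
from typing import Callable, Tuple


def measure(workload: Callable[[], object]) -> Tuple[float, int]:
    """Return (approximate CPU utilization in percent, peak traced memory in bytes)."""
    tracemalloc.start()
    wall_start = time.perf_counter()
    cpu_start = time.process_time()

    workload()

    cpu_used = time.process_time() - cpu_start    # CPU seconds used by this process
    wall_used = time.perf_counter() - wall_start  # elapsed wall-clock seconds
    _, peak_bytes = tracemalloc.get_traced_memory()
    tracemalloc.stop()

    cpu_percent = 100.0 * cpu_used / wall_used if wall_used > 0 else 0.0
    return cpu_percent, peak_bytes


def sample_workload() -> int:
    """Placeholder workload: build a list of squares and sum it."""
    return sum([i * i for i in range(200_000)])


cpu_percent, peak_bytes = measure(sample_workload)
print(f"CPU: {cpu_percent:.0f}%  peak memory: {peak_bytes / 1_000_000:.1f} MB")
```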

To fine-tune the system for best performance, you need to measure throughput. It gauges how many transactions an application can process per second, or how many requests a network or computer can handle per second. Based on the result, various system components can be adjusted to improve throughput.
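The calculation itself is simple: divide the number of completed requests by the elapsed time. A minimal sketch, with a hypothetical `throughput` helper and invented sample numbers:

```python
def throughput(completed_requests: int, elapsed_seconds: float) -> float:
    """Requests (or transactions) processed per second."""
    if elapsed_seconds <= 0:
        raise ValueError("elapsed_seconds must be positive")
    return completed_requests / elapsed_seconds


# Example: 1,200 requests completed during a 60-second test window.
print(throughput(1200, 60))  # 20.0 requests per second
```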

Another metric to focus on is connection pooling, measured as the number of user requests served by pooled connections. The more requests that are served from the pool rather than by opening new connections, the better the performance.

Bandwidth is the amount of data that can be transmitted per second. Higher bandwidth allows faster data transfer, and lower bandwidth slows it down.

Response time is commonly used to check whether an application is meeting its service-level agreement (SLA). It measures how long it takes to process a user request and return a response. A lower response time implies better performance, while a high response time signifies poor performance.

The final two metrics are latency and error rate. Latency is the delay between sending a request and receiving the first part of the response, so it is one component of response time. Low latency means the system starts responding quickly, while high latency can point to a problem in the system or the network. The error rate is the percentage of requests that result in errors.
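SLA checks on response time are often expressed as percentiles rather than averages, since a few slow requests can hide behind a good mean. The sketch below uses the nearest-rank method. The `percentile` helper, the sample measurements, and the 500 ms threshold are all assumptions made for illustration.

```python
import math
from typing import Sequence


def percentile(samples: Sequence[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least pct% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Invented response times in milliseconds from a hypothetical test run.
response_times_ms = [120, 135, 150, 160, 180, 210, 240, 300, 450, 900]

p50 = percentile(response_times_ms, 50)
p95 = percentile(response_times_ms, 95)

SLA_P95_MS = 500  # hypothetical SLA threshold
meets_sla = p95 <= SLA_P95_MS

print(p50, p95, meets_sla)  # 180 900 False
```

In this made-up data set the median looks healthy, yet the 95th percentile breaks the assumed SLA because of a single very slow request.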
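For the error rate, a minimal sketch is to count failed requests and divide by the total. The example below assumes HTTP-style status codes and treats only 5xx responses as errors. That cutoff is an assumption, and some teams also count 4xx responses or timeouts.

```python
from typing import Sequence


def error_rate(status_codes: Sequence[int]) -> float:
    """Percentage of requests that failed; here a failure is any status code >= 500."""
    if not status_codes:
        return 0.0
    errors = sum(1 for code in status_codes if code >= 500)
    return 100.0 * errors / len(status_codes)


# Invented sample: 95 successes and 5 server errors.
codes = [200] * 95 + [500] * 3 + [503] * 2
print(error_rate(codes))  # 5.0
```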

All these metrics are important for performance testing and should be considered while assessing the performance of an application or system.

Conclusion

Organizations need to understand performance testing KPIs so that they can determine how effective performance testing is across the enterprise. The metrics discussed above will help you assess the progress and success of your performance testing. Load and performance testing services from independent QA providers are one way to work toward high-performing, scalable, and robust software.
