What is Latency?

In computing, "latency" is the delay between starting an operation and getting its result. It usually refers to delays in transmitting or processing data, and those delays can have many different causes.
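To make the definition concrete, here is a minimal sketch of how latency is typically measured: read a high-resolution clock before and after an operation and take the difference. The helper name `measure_latency_ns` is hypothetical. It repeats the operation and reports the median, assuming that occasional slow runs (interrupts, scheduling) should not skew the result. In Python, interpreter and timer overhead are usually far larger than a cache or memory reference, so this cannot reproduce the hardware figures listed below; it only illustrates the idea.

```python
import statistics
import time


def measure_latency_ns(operation, repeats=1000):
    """Run `operation` `repeats` times and return the median latency in ns.

    The median is used so that a few unusually slow runs (e.g. caused by
    interrupts or OS scheduling) do not distort the result.
    """
    samples = []
    for _ in range(repeats):
        start = time.perf_counter_ns()  # high-resolution monotonic clock
        operation()
        samples.append(time.perf_counter_ns() - start)
    return statistics.median(samples)


if __name__ == "__main__":
    table = {i: i * i for i in range(1000)}
    # Hypothetical workload: a single dictionary lookup.
    print(measure_latency_ns(lambda: table[500]), "ns")
```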

Note: 1 ns = 10^-9 s (one billionth of a second).
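Some of the values below, such as 640,000,000 ns, are easier to read in larger units. A small sketch of the conversion, using 1 s = 10^9 ns, 1 ms = 10^6 ns and 1 µs = 10^3 ns (the function name `format_ns` is hypothetical):

```python
def format_ns(ns):
    """Convert a duration in nanoseconds to the largest convenient unit."""
    units = [("s", 1e9), ("ms", 1e6), ("µs", 1e3)]  # nanoseconds per unit
    for name, size in units:
        if ns >= size:
            return f"{ns / size:g} {name}"
    return f"{ns:g} ns"


print(format_ns(640_000_000))  # 1990 disk read of 1 MB -> "640 ms"
print(format_ns(500_000))      # datacenter round trip -> "500 µs"
```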

In 1990:

- L1 cache reference: 181 ns

- L2 cache reference: 784 ns

- Branch mispredict: 603 ns

- Main memory reference: 207 ns

- Compress 1K bytes with Zippy: 362,000 ns

- Send 2K bytes over commodity network: 1,448 ns

- Read 1 MB sequentially from memory: 3,038,000 ns

- Round trip within same datacenter: 500,000 ns

- Disk seek: 20,000,000 ns

- Read 1 MB sequentially from disk: 640,000,000 ns

- Read 1 MB sequentially from SSD: 50,000,000 ns

In 2000:

- L1 cache reference: 6 ns

- L2 cache reference: 25 ns

- Branch mispredict: 19 ns

- Mutex lock/unlock: 94 ns

- Main memory reference: 100 ns

- Compress 1K bytes with Zippy: 11,000 ns

- Send 2K bytes over commodity network: 45,000 ns

- Read 1 MB sequentially from memory: 301,000 ns

- Round trip within same datacenter: 500,000 ns

- Disk seek: 10,000,000 ns

- Read 1 MB sequentially from disk: 20,000,000 ns

- Read 1 MB sequentially from SSD: 5,000,000 ns

In 2010:

- L1 cache reference: 1 ns

- L2 cache reference: 4 ns

- Branch mispredict: 3 ns

- Mutex lock/unlock: 17 ns

- Main memory reference: 100 ns

- Compress 1K bytes with Zippy: 2,000 ns

- Send 2K bytes over commodity network: 1,000 ns

- Read 1 MB sequentially from memory: 30,000 ns

- Round trip within same datacenter: 500,000 ns

- Disk seek: 5,000,000 ns

- Read 1 MB sequentially from disk: 3,000,000 ns

- Read 1 MB sequentially from SSD: 494,000 ns
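The "Read 1 MB sequentially" entries can also be read as throughput: if reading 1 MB takes t ns, the implied rate is 10^9 / t MB per second. A minimal sketch using the 2010 figures from the list above (the names `READ_1MB_NS_2010` and `throughput_mb_per_s` are hypothetical, and "MB" means whatever unit the source uses for 1 MB):

```python
# 2010 figures from the list above: time to read 1 MB sequentially, in ns.
READ_1MB_NS_2010 = {
    "memory": 30_000,
    "SSD": 494_000,
    "disk": 3_000_000,
}


def throughput_mb_per_s(ns_per_mb):
    """Sequential throughput implied by the time needed to read 1 MB."""
    return 1e9 / ns_per_mb  # 1 s = 1e9 ns


for medium, ns in READ_1MB_NS_2010.items():
    print(f"{medium}: {throughput_mb_per_s(ns):,.0f} MB/s")
```

With these inputs the loop prints roughly 33,333 MB/s for memory, 2,024 MB/s for SSD and 333 MB/s for disk, which shows why sequential access patterns matter so much for slower storage.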

In 2020:

- L1 cache reference: 1 ns

- L2 cache reference: 4 ns

- Branch mispredict: 3 ns

- Mutex lock/unlock: 17 ns

- Main memory reference: 100 ns

- Compress 1K bytes with Zippy: 2,000 ns

- Send 2K bytes over commodity network: 44 ns

- Read 1 MB sequentially from memory: 3,000 ns

- Round trip within same datacenter: 500,000 ns

- Disk seek: 2,000,000 ns

- Read 1 MB sequentially from disk: 825,000 ns

- Read 1 MB sequentially from SSD: 49,000 ns
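Nanosecond figures are hard to compare intuitively. A common trick is to rescale them so that one L1 cache reference takes one second. The sketch below applies that to a few of the 2020 values above; the helper names `human_scale` and `describe` are hypothetical.

```python
# A subset of the 2020 figures from the list above, in ns.
LATENCY_NS_2020 = {
    "L1 cache reference": 1,
    "Main memory reference": 100,
    "Read 1 MB sequentially from SSD": 49_000,
    "Round trip within same datacenter": 500_000,
    "Disk seek": 2_000_000,
}


def human_scale(latencies_ns, base_ns):
    """Rescale latencies so that `base_ns` becomes one second."""
    return {name: ns / base_ns for name, ns in latencies_ns.items()}


def describe(seconds):
    """Express a number of seconds in the largest convenient unit."""
    for unit, size in (("days", 86_400), ("h", 3_600), ("min", 60)):
        if seconds >= size:
            return f"{seconds / size:.1f} {unit}"
    return f"{seconds:.0f} s"


scaled = human_scale(LATENCY_NS_2020, LATENCY_NS_2020["L1 cache reference"])
for name, seconds in scaled.items():
    print(f"{name}: {describe(seconds)}")
```

On this scale a main memory reference takes about 1.7 minutes, a round trip within the same datacenter about 5.8 days, and a disk seek about 23.1 days.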

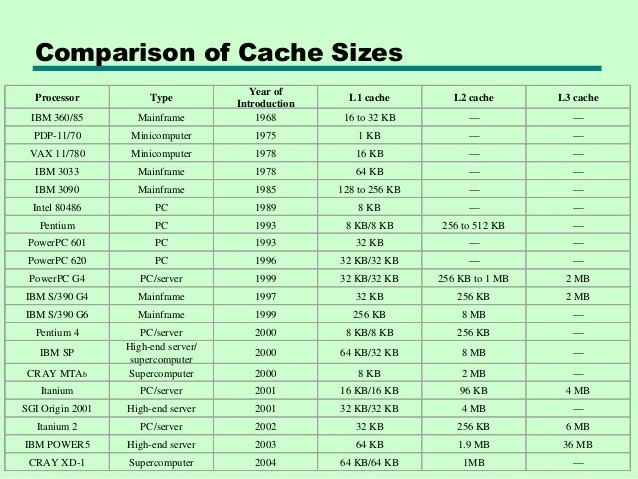

Comparison of Cache Sizes

Reference: berkeley.edu
