You might wonder why I added a picture of a blue alien as my cover image. It's a character from one of my favourite movies "Home" and his name is Oh. Oh like Big "Oh" notation. Get it 😂?

Let us get serious now.

A long time ago, before the emergence of 10x engineers, software engineers sought ways to solve real-world problems with their programming skills. Different engineers came up with different solutions/algorithms to solve these problems. There was a need to compare these solutions based on their complexity and efficiency. This need gave birth to the Big O notation. What is the Big O notation then?

What is the Big O notation?

Big O notation is used in computer science to describe the performance or complexity of an algorithm.

Algorithms are to computer programs what recipes are to dishes. Different recipes can help you to make a particular meal but they don't always yield the same results. They don't always have the same steps, ingredients nor take the same amount of time to follow. Some recipes are faster and produce better results than others.

In the same way, different algorithms can achieve a particular computer program. However, they are not all equally efficient or take the same amount of time to run. We use Big O to measure the efficiency of an algorithm.

For example, let's consider sorting. There are many sorting algorithms e.g. mergeSort, bubble sort, quicksort, and insertion sort. How do you know which is more efficient or less complex? This is why the Big O notation exists.

You might wonder, why do we need a notation? Why don't we just consider the time it takes to run the algorithm? Here are two of many reasons:

Different computers have different processors and thus some computers will spend less time running an algorithm than others.

Some programming languages are faster than others.

It will be stressful taking all these factors into consideration when trying to analyze an algorithm. Rather than do that, the Big O notation uses something more standard - the input. It considers how the runtime of the algorithm grows in relation to the size of the input. It is also good to note that the Big O notation considers the worst-case scenario for its analysis.

Hopefully, you get the sense of it now. Next question that might come to your mind is "Why should I know this?"

Why should you care?

For a small app that processes little data, such analysis might be unnecessary. This is because the difference in the runtime of algorithms might have little impact on your app. For large applications that manipulate a large amount of data, this analysis is crucial. Because inefficient algorithms will create a significant impact on the processing time. With a good knowledge of Big-O notation, you can design algorithms for efficiency. Thus, you'll build apps that scale and save yourself a lot of potential headaches.

For your coding interviews. Yeah, you heard me right. You are likely to get asked by an interviewer the runtime complexity of your solution. So it's good to have an understanding of the Big O notation.

Let look at some common examples of Big O Notation

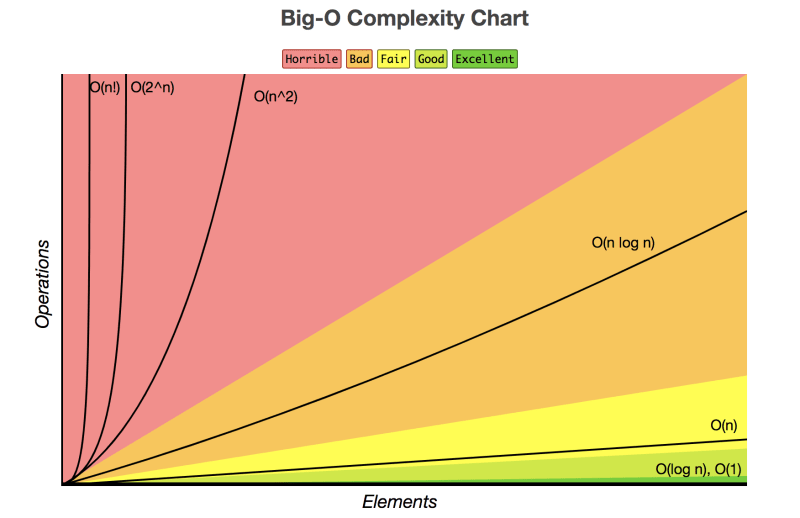

Common Runtime Complexities

1. O(1) - Constant Runtime

In this case, your algorithm runs the same time, regardless of the given input data set.

An example of this is returning the first element in the given data like in the example below.

function returnFirst(elements) {

return elements[0]

}

The runtime is constant no matter the size of the input given.

2. O(n) - Linear Runtime

Linear runtime occurs when the runtime grows in proportion with the size of the input data set. n is the size of the input data set.

A good example of this is searching for a particular value in a data set using an iteration like in the example below.

function constainsValue(elements, value) {

for (let element in elements) {

if (element === value) return true;

}

return false

}

We see that the time taken to loop through all elements in the array grows with an increase in the size of the array. But what if the element is found before it reaches the last element in the array? Does the runtime complexity change?

Remember that the Big O notation considers the worst-case scenario. In this instance, it's the case where the loops run through all elements in the array. So that is what determines the runtime complexity of the algorithm.

3. O(n^2 ) - Quadratic Runtime

O(n^2 ) denotes an algorithm whose runtime is directly proportional to the square of the size of the input data set.

An example of this is a nested iteration or loop to check if the data set contains duplicates.

function constainsDuplicate(elements) {

for (let element in elements) {

for (let item in elements){

if (element === item) return true;

}

}

return false

}

Deeper nested iterations will produce runtime complexities of O(n^3 ), O(n^4 ) etc

4. O(log n) - Logarithmic runtime

In this case, the runtime it takes for the algorithm to run will plateau no matter the size of the input data set.

A common example of this is a search algorithm like the binary search. The idea of a binary search is not to work with the entire data. Rather, reduce the amount of work done by half with each iteration. The number of operations required to arrive at the desired result will be log base 2 of the input size.

For further information on this runtime complexity, you can check some of the resources at the end of the article.

5. O(n log n) - Linearithmic runtime

Here, the runtime of the algorithm depends on running a logarithm operation n times.

Most sorting algorithms have a runtime complexity of O(n log n)

6. O(2^n ) - Exponential runtime

This occurs in algorithms where for each increase in the size of the data set, the runtime is doubled. For a small data set, this might not look bad. But as the size of the data increase, the time taken to execute this algorithm increases rapidly.

A common example of this is a recursive solution for finding Fibonacci numbers.

function fibonacci(num) {

if (num <= 1) return 1;

return fibonacci(num - 2) + fibonacci(num - 1)

}

7 O(n!) - Factorial runtime

In this case, the algorithm runs in factorial time. The factorial of a non-negative integer (n!) is the product of all positive integers less than or equal to n. This is a pretty terrible runtime.

Any algorithm that performs permutation on a given data set is an example of O(n!)

Conclusion

Hopefully, this article has helped you to grasp the concept of Big O notation.

Here are some resources where you can find more info on this topic.

- Big-O Notation: A primer for beginning devs

- A beginner's guide to Big O notation

- A Simplified Explanation of the Big O Notation

- All you need to know about “Big O Notation” to crack your next coding interview

- A story of Big O

- Big O cheat sheet

Got any addition or question? Please leave a comment.

Thanks for reading😊.

Top comments (23)

Thanks for this article, those are good and easy examples!

Still, because you mentioned it, I wonder if I would just walk out the room if sometimes someone asks me about big O notation in a job interview.

Not because I don’t understand it, but because I’ve never needed it a single time in the last 20 years.

I once was asked to reduce the execution time of a MySQL procedure. That query could take between 2 to 3 minutes to execute using a recursion-ish call that could, at worst, make it an O(n^3). My solution was to change the data structure, which was very strange, to a JSON one and use the Generated Column feature, we could reduce to an O(n^2) for the procedure and the time was at the 10ms. The only drawback was a little increase in insertion time.

I don't think people say "hey look, this triple-loop through all the dataset is an O(n^3)", you just know something is wrong and go up to a better solution right away.

Last but not least, knowing the kind of task you want to do with the data, how it will be managed, grow and access can help you select the proper type of data structure, may it be a set, a queue, a stack, a linked list, etc.

It depends on the job though. I do Java backend transaction processing so our team always needs to understand a basic understanding of runtime (and memory usage) when we code. It's mostly basic things like why something is 'faster' then O(n2). Sometimes to reduce runtime, we cache, but then we have to consider the size of the cache, etc

That being said, for front-end web dev, I don't think I've ever had to ever think about it.

Yes, I think the key point is that after some good or bad experiences, most developers will have an implicit knowledge that helps deciding on how to program something and when something’s very slow to find out why.

That said, I’ve probably used it a lot of times. But I never wrote down or talked explicitly about O().

And by the way, I was a java Backend developer for most of my time and just recently started in the frontend as well.

I ask this to senior devs pretty frequently, about how often they use Big O. They respond like you. I asked because I still struggle with learning it.

I think about that time I build a reporting tool in python, and it took 50 seconds to compile the report. I was still learning, and the thing worked so :shrug:.

Now that I 'sort' of understand Big O- if I had to refactor that code, I'm more likely to think about performance and when to use specific loops/nested loops.

I still won't be able to go, "AH - that's a O(n log n)" or whatever. But that background understanding does change how I will approach the problem.

true :)

great article, sarah!

might i add, 'grokking algorithms by aditya bhargava' is a great resource for getting up to speed with big o notation and algorithms in general (when and which to use).

github repo containing algorithms in different languages from grokking's masterpiece: github.com/egonSchiele/grokking_al...

Thank you for this useful information. Just placed an order for the book. It's exactly what I needed.

Great overview, although re: O(n log n) it may be worth noting that complexity is the average time complexity for most sorting algorithms as best and worst can range from O(n) to O(n2) for many of them.

I rarely use big o notation, but it is solid knowledge to have since it makes us think about function complexity and the cost’ around things like disk io, or something silly like asking a html element about its dimensions.

In that context, i find it remarkably relevant.

Nice article! Thank you Sarah :D

Thank you Pablo

Awesome article, Sarah!

Thanks Friday

Thank you

Thanks For this article

Thanks for reading it. I'm glad you like it.

Could you give an example for O(n!) kind of felt like it wasn’t explained well enough..👍 for the rest of them :)

Very nice article, Sarah!

Thank you Bruno

A bit easier way to explain #BigONotation :)

twitter.com/dr_dimaka/status/11555...

LOL!

Immediately I saw the topic, Oh the Boov came to mind and I mentioned his name. Opening the link then seeing him gave me joy!😂😂. My best animation yet! Amazing helpful post! Learnt more.😊