In the previous post, Istio: an overview and running Service Mesh in Kubernetes, we started Istio in AWS Elastic Kubernetes Service and got an overview of its main components.

The next task is to add an AWS Application Load Balancer (ALB) in front of the Istio Ingress Gateway, because the Istio Gateway Service with its default LoadBalancer type creates an AWS Classic LoadBalancer, to which we can attach only one SSL certificate from AWS Certificate Manager.

Content

- Updating Istio Ingress Gateway

- Istio Ingress Gateway and AWS Application LoadBalancer health checks

- Create an Ingress and its AWS Application LoadBalancer

- A testing application

- Istio Gateway configuration

- Istio VirtualService configuration

The task

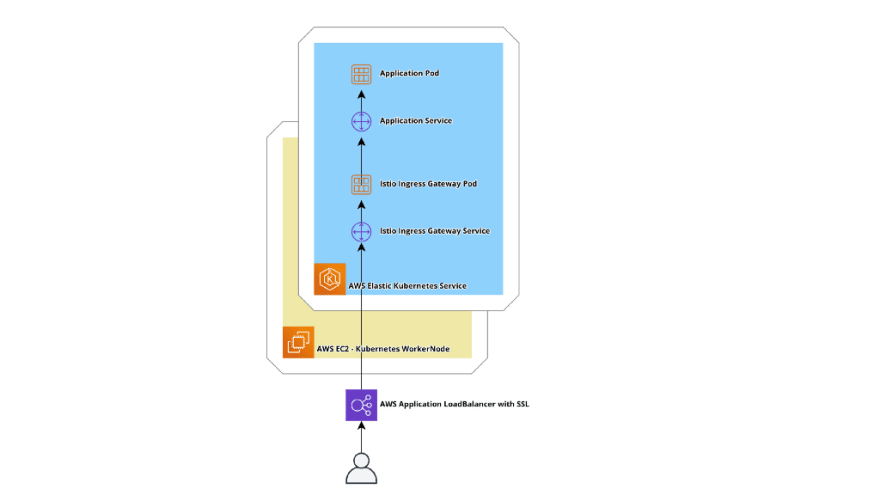

Currently, traffic to our applications follows this flow:

- the application’s Helm chart defines an Ingress and a Service; the Ingress creates an AWS Application LoadBalancer with SSL

- a packet from the ALB is sent to the application’s Service

- via this Service, it is sent to a Pod with the application

Now, let’s add Istio here.

The idea is as follows:

- install Istio; it creates the Istio Ingress Gateway (its Service and Pod)

- the application’s Helm chart will have an Ingress, a Service, and a Gateway with a VirtualService for the Istio Ingress Gateway

- the application’s Ingress will create an ALB that does SSL termination; traffic inside the cluster will be sent via HTTP

- a packet from the ALB will be sent to the Istio Ingress Gateway Pod

- from the Istio Ingress Gateway, following the rules defined in the application’s Gateway and VirtualService, it will be sent to the application’s Service

- and from this Service to the application’s Pod

To do so, we need to:

- add an Ingress to create an ALB with the ALB Ingress Controller

- update the Istio Ingress Gateway’s Service, changing its type from LoadBalancer to NodePort, so the AWS ALB Ingress Controller can create a TargetGroup to be used with the ALB

- deploy a test application with a regular Kubernetes Service

- create a Gateway and a VirtualService for the test application, which configure the Envoy of the Istio Ingress Gateway to route traffic to the application’s Service

Let’s go.

Updating Istio Ingress Gateway

We installed Istio in the previous post, so now we have an Istio Ingress Gateway whose Service has the LoadBalancer type:

```
$ kubectl -n istio-system get svc istio-ingressgateway
NAME                   TYPE           CLUSTER-IP       EXTERNAL-IP                             PORT(S)                                                                     AGE
istio-ingressgateway   LoadBalancer   172.20.112.213   a6f***599.eu-west-3.elb.amazonaws.com   15021:30218/TCP,80:31246/TCP,443:30486/TCP,15012:32445/TCP,15443:30154/TCP   25h
```

We need to change the Service type to NodePort. This can be done with istioctl and --set:

```
$ istioctl install --set profile=default --set values.gateways.istio-ingressgateway.type=NodePort -y
✔ Istio core installed
✔ Istiod installed
✔ Ingress gateways installed
✔ Installation complete
```
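istioctl treats each `--set` key as a dotted path inside the `spec` of an IstioOperator resource. To make that mapping visible, here is a minimal sketch with a hypothetical helper (not istioctl's own parser: it only splits on dots, keeps every value as a string, and ignores list indexes and escaped dots) that turns the two flags above into the equivalent nested overlay:

```python
from __future__ import annotations

import json


def set_flags_to_overlay(flags: list[str]) -> dict:
    """Turn istioctl-style "a.b.c=value" flags into a nested IstioOperator overlay.

    Hypothetical illustration: keys are split on dots, values stay strings.
    """
    spec: dict = {}
    for flag in flags:
        path, value = flag.split("=", 1)
        keys = path.split(".")
        node = spec
        for key in keys[:-1]:
            # Create intermediate mappings on demand.
            node = node.setdefault(key, {})
        node[keys[-1]] = value
    return {
        "apiVersion": "install.istio.io/v1alpha1",
        "kind": "IstioOperator",
        "spec": spec,
    }


if __name__ == "__main__":
    overlay = set_flags_to_overlay([
        "profile=default",
        "values.gateways.istio-ingressgateway.type=NodePort",
    ])
    print(json.dumps(overlay, indent=2))
```

Since JSON is valid YAML, such an overlay saved to a file is the kind of input `istioctl install -f` accepts, which keeps the setting in version control instead of on the command line.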

Check the Service:

```
$ kubectl -n istio-system get svc istio-ingressgateway
NAME                   TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)                                                                     AGE
istio-ingressgateway   NodePort   172.20.112.213   <none>        15021:30218/TCP,80:31246/TCP,443:30486/TCP,15012:32445/TCP,15443:30154/TCP   25h
```

The type is NodePort now, so we are done here.
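Each entry in the PORT(S) column has the form port:nodePort/protocol, so port 80 of the Service is exposed on every node as 31246 and the status port 15021 as 30218. A minimal sketch of a hypothetical helper that extracts this mapping from the column text:

```python
from __future__ import annotations


def parse_ports(column: str) -> dict[int, int]:
    """Map Service port -> nodePort from the PORT(S) column of `kubectl get svc`."""
    mapping: dict[int, int] = {}
    for entry in column.split(","):
        ports, _protocol = entry.split("/")
        if ":" not in ports:
            continue  # ports without a nodePort (e.g. ClusterIP) are skipped
        port, node_port = ports.split(":")
        mapping[int(port)] = int(node_port)
    return mapping


if __name__ == "__main__":
    column = "15021:30218/TCP,80:31246/TCP,443:30486/TCP,15012:32445/TCP,15443:30154/TCP"
    print(parse_ports(column)[15021])  # → 30218
```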

Istio Ingress Gateway and AWS Application LoadBalancer health checks

But here is a question: how can the AWS Application LoadBalancer perform health checks, given that the Istio Ingress Gateway uses separate TCP ports: 80 for incoming traffic and 15021 for its status checks?

If we set the alb.ingress.kubernetes.io/healthcheck-port annotation on our Ingress, the ALB Ingress Controller silently ignores it, without any message in its logs. The Ingress is created, but the corresponding AWS LoadBalancer is not.

A solution was found in the GitHub issue Health Checks do not work if using multiple pods on routes: move the health-check-related annotations to the Service of the Istio Ingress Gateway.

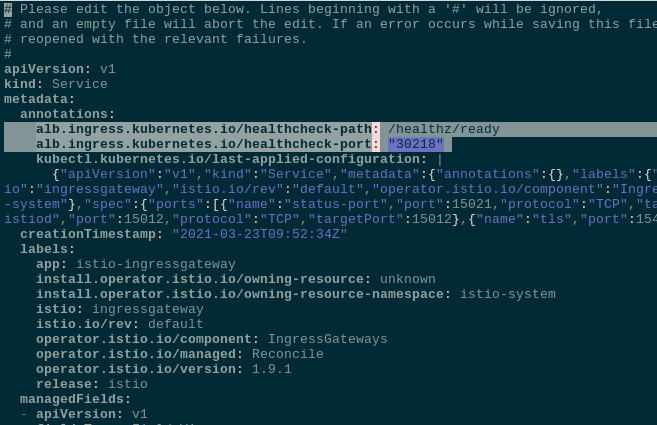

So, edit the istio-ingressgateway Service:

```
$ kubectl -n istio-system edit svc istio-ingressgateway
```

In its spec.ports find the status-port and its nodePort:

```yaml
...
spec:
  clusterIP: 172.20.112.213
  externalTrafficPolicy: Cluster
  ports:
  - name: status-port
    nodePort: 30218
    port: 15021
    protocol: TCP
    targetPort: 15021
...
```

To configure the alb.ingress.kubernetes.io/healthcheck-path annotation, take the path from the readinessProbe of the Deployment that creates the istio-ingressgateway Pods:

```
$ kubectl -n istio-system get deploy istio-ingressgateway -o yaml
…
readinessProbe:
  failureThreshold: 30
  httpGet:
    path: /healthz/ready
…
```

Set annotations on the istio-ingressgateway Service: in healthcheck-port, set the nodePort of the status-port, and in healthcheck-path, the path from the readinessProbe:

```yaml
...
metadata:
  annotations:
    alb.ingress.kubernetes.io/healthcheck-path: /healthz/ready
    alb.ingress.kubernetes.io/healthcheck-port: "30218"
...
```

Now, when the Ingress is created, the ALB Ingress Controller will find the Service specified in the backend.serviceName of the Ingress manifest, read its annotations, and apply them to the TargetGroup attached to the ALB.

When Istio is deployed with Helm, these annotations can be set via values.gateways.istio-ingressgateway.serviceAnnotations.
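The two annotation values come from two different objects, so they are easy to get out of sync after a reinstall. A minimal sketch that derives them, assuming `service` and `deployment` are the parsed JSON of `kubectl -n istio-system get svc|deploy istio-ingressgateway -o json`; the function name is hypothetical:

```python
from __future__ import annotations

import json


def alb_healthcheck_annotations(service: dict, deployment: dict,
                                port_name: str = "status-port") -> dict[str, str]:
    """Build the ALB health-check annotations for the istio-ingressgateway Service."""
    # The health-check port is the nodePort of the Service's status port.
    node_port = next(
        p["nodePort"] for p in service["spec"]["ports"] if p["name"] == port_name
    )
    # The health-check path is the readinessProbe path of the gateway container.
    containers = deployment["spec"]["template"]["spec"]["containers"]
    path = next(
        c["readinessProbe"]["httpGet"]["path"] for c in containers if "readinessProbe" in c
    )
    return {
        "alb.ingress.kubernetes.io/healthcheck-path": path,
        # Annotation values must be strings, hence str() (and the quotes in the YAML).
        "alb.ingress.kubernetes.io/healthcheck-port": str(node_port),
    }


if __name__ == "__main__":
    # Sample objects trimmed down to the fields shown in this post.
    svc = {"spec": {"ports": [
        {"name": "status-port", "nodePort": 30218, "port": 15021},
        {"name": "http2", "nodePort": 31246, "port": 80},
    ]}}
    deploy = {"spec": {"template": {"spec": {"containers": [
        {"name": "istio-proxy",
         "readinessProbe": {"httpGet": {"path": "/healthz/ready", "port": 15021}}},
    ]}}}}
    print(json.dumps(alb_healthcheck_annotations(svc, deploy), indent=2))
```

The resulting dict is exactly what would go under serviceAnnotations in the Helm values.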

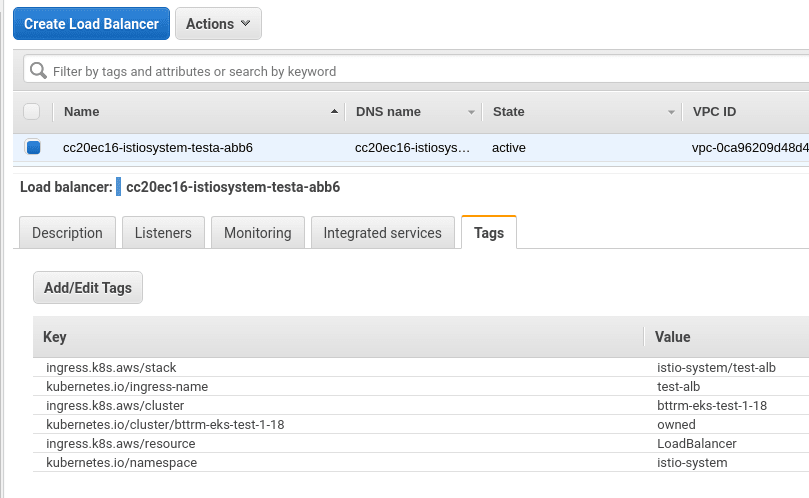

Create an Ingress and its AWS Application LoadBalancer

Next, add an Ingress: this will be the application’s primary LoadBalancer, with SSL termination.

In it, set the ARN of the SSL certificate from AWS Certificate Manager. The Ingress must be created in the istio-system namespace: an Ingress can only use Services from its own namespace as backends, and this one needs the istio-ingressgateway Service:

```yaml
---
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: test-alb
  namespace: istio-system
  annotations:
    # create AWS Application LoadBalancer
    kubernetes.io/ingress.class: alb
    # external type
    alb.ingress.kubernetes.io/scheme: internet-facing
    # AWS Certificate Manager certificate's ARN
    alb.ingress.kubernetes.io/certificate-arn: "arn:aws:acm:eu-west-3:***:certificate/fcaa9fd2-1b55-48d7-92f2-e829f7bafafd"
    # open ports 80 and 443
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS":443}]'
    # redirect all HTTP to HTTPS
    alb.ingress.kubernetes.io/actions.ssl-redirect: '{"Type": "redirect", "RedirectConfig": { "Protocol": "HTTPS", "Port": "443", "StatusCode": "HTTP_301"}}'
    # ExternalDNS settings: https://rtfm.co.ua/en/kubernetes-update-aws-route53-dns-from-an-ingress/
    external-dns.alpha.kubernetes.io/hostname: "istio-test-alb.example.com"
spec:
  rules:
  - http:
      paths:
      - path: /*
        backend:
          serviceName: ssl-redirect
          servicePort: use-annotation
      - path: /*
        backend:
          serviceName: istio-ingressgateway
          servicePort: 80
```
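From these annotations and rules, the controller creates an HTTP:80 and an HTTPS:443 listener on the ALB. The sketch below is a simplified, hypothetical model of what each listener does with a request; it assumes the self-redirect is left out of the HTTPS:443 listener (which the working HTTPS responses in this post imply) and that the `/*` pattern matches every path:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Decision:
    action: str          # "redirect" or "forward"
    status: int | None   # HTTP status code of a redirect, None for forwards
    target: str          # redirect URL, or "service:port" for forwards


def alb_decide(scheme: str, host: str, path: str) -> Decision:
    """Simplified model of the listeners created from the test-alb Ingress."""
    if scheme == "http":
        # HTTP:80: the first "/*" rule is the ssl-redirect action (HTTP_301 to HTTPS).
        return Decision("redirect", 301, f"https://{host}{path}")
    if scheme == "https":
        # HTTPS:443: SSL is terminated on the ALB, and the request continues as plain
        # HTTP to the istio-ingressgateway Service (its TargetGroup uses the NodePort).
        return Decision("forward", None, "istio-ingressgateway:80")
    raise ValueError(f"the ALB has no listener for {scheme!r}")


if __name__ == "__main__":
    print(alb_decide("http", "istio-test-alb.example.com", "/"))
    print(alb_decide("https", "istio-test-alb.example.com", "/"))
```

This is also why the order of the two `/*` paths matters: the redirect has to come first so plain HTTP never reaches the gateway.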

Deploy it:

```
$ kubectl apply -f test-ingress.yaml
ingress.extensions/test-alb created
```

Check the Ingress in the istio-system namespace:

```
$ kubectl -n istio-system get ingress
NAME       CLASS    HOSTS   ADDRESS                                 PORTS   AGE
test-alb   <none>   *       cc2***514.eu-west-3.elb.amazonaws.com   80      2m49s
```

And our AWS ALB is created.

In the Health checks settings of its TargetGroup, we can see our TCP port and URI.

And the targets are Healthy.

Check the domain that was created from the external-dns.alpha.kubernetes.io/hostname annotation of the Ingress (see the Kubernetes: update AWS Route53 DNS from an Ingress post for more details):

```
$ curl -I https://istio-test-alb.example.com
HTTP/2 502
server: awselb/2.0
```

Great! It’s working; we just have no application running behind the Gateway yet, so the gateway does not even have a listener for port 80:

```
$ istioctl proxy-config listeners -n istio-system istio-ingressgateway-d45fb4b48-jsz9z
ADDRESS PORT  MATCH DESTINATION
0.0.0.0 15021 ALL   Inline Route: /healthz/ready*
0.0.0.0 15090 ALL   Inline Route: /stats/prometheus*
```

But port 15021 is already open, and the health checks are working.
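To check for the HTTP listener in a script rather than by eye, a hypothetical helper can map each listener port to its destination. It assumes the ADDRESS PORT MATCH DESTINATION layout shown above, which may differ between istioctl versions; once a Gateway for port 80 exists, a `Route: http.80` listener should appear (on port 8080 inside the Pod in this installation):

```python
from __future__ import annotations


def parse_listeners(output: str) -> dict[int, str]:
    """Map listener port -> destination from `istioctl proxy-config listeners` text."""
    listeners: dict[int, str] = {}
    for line in output.strip().splitlines()[1:]:  # skip the header row
        fields = line.split(None, 3)
        if len(fields) < 4:
            continue  # blank or wrapped lines
        _address, port, _match, destination = fields
        listeners[int(port)] = destination
    return listeners


if __name__ == "__main__":
    sample = """ADDRESS PORT MATCH DESTINATION
0.0.0.0 15021 ALL Inline Route: /healthz/ready*
0.0.0.0 15090 ALL Inline Route: /stats/prometheus*"""
    ports = parse_listeners(sample)
    print("HTTP listener present:", 8080 in ports)
```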

A testing application

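Describe a regular application: a namespace, a Deployment with two Pods running the nginxdemos/hello image, and a Service. The namespace is labeled istio-injection: enabled, so Istio injects an Envoy sidecar into its Pods: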

```yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: test-ns
  labels:
    istio-injection: enabled
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: test-deploy
  namespace: test-ns
  labels:
    app: test-app
    version: v1
spec:
  replicas: 2
  selector:
    matchLabels:
      app: test-app
  template:
    metadata:
      labels:
        app: test-app
        version: v1
    spec:
      containers:
      - name: web
        image: nginxdemos/hello
        ports:
        - containerPort: 80
        resources:
          requests:
            cpu: 100m
            memory: 100Mi
        readinessProbe:
          httpGet:
            path: /
            port: 80
      nodeSelector:
        role: common-workers
---
apiVersion: v1
kind: Service
metadata:
  name: test-svc
  # must be the same namespace as the Deployment's Pods
  namespace: test-ns
spec:
  selector:
    app: test-app
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
```
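A Service only gets Endpoints for Pods in its own namespace whose labels contain every key/value pair of its selector, so test-svc has to live in test-ns next to test-deploy. A minimal sketch of that check on the manifests parsed into dicts (a hypothetical helper that assumes "default" when metadata.namespace is omitted, although kubectl would actually use the current context's namespace):

```python
from __future__ import annotations


def service_selects_pods(service: dict, deployment: dict) -> bool:
    """Return True if the Service would get the Deployment's Pods as Endpoints."""
    selector = service["spec"].get("selector")
    if not selector:
        # Without a selector, Kubernetes creates no Endpoints automatically.
        return False
    svc_ns = service["metadata"].get("namespace", "default")
    pod_ns = deployment["metadata"].get("namespace", "default")
    # A Service only selects Pods from its own namespace...
    if svc_ns != pod_ns:
        return False
    pod_labels = deployment["spec"]["template"]["metadata"].get("labels", {})
    # ...whose labels contain every key/value pair of the selector.
    return all(pod_labels.get(key) == value for key, value in selector.items())


if __name__ == "__main__":
    deploy = {"metadata": {"name": "test-deploy", "namespace": "test-ns"},
              "spec": {"template": {"metadata": {"labels": {"app": "test-app", "version": "v1"}}}}}
    svc = {"metadata": {"name": "test-svc", "namespace": "test-ns"},
           "spec": {"selector": {"app": "test-app"}}}
    print(service_selects_pods(svc, deploy))
```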

Deploy it:

```
$ kubectl apply -f test-ingress.yaml
ingress.extensions/test-alb configured
namespace/test-ns created
deployment.apps/test-deploy created
service/test-svc created
```

But our ALB still returns 502 errors, as we haven’t configured the Istio Ingress Gateway yet.

Istio Gateway configuration

Describe a Gateway and a VirtualService.

In the Gateway, set the port to listen on (80) and, via its selector, the Istio Ingress Gateway to configure (the Pods labeled istio: ingressgateway). In the spec.servers.hosts field, set our testing domain:

```yaml
---
apiVersion: networking.istio.io/v1alpha3
kind: Gateway
metadata:
  name: test-gateway
  namespace: test-ns
spec:
  selector:
    istio: ingressgateway
  servers:
  - port:
      number: 80
      name: http
      protocol: HTTP
    hosts:
    - "istio-test-alb.example.com"
```

Deploy:

```
$ kubectl apply -f test-ingress.yaml
ingress.extensions/test-alb configured
namespace/test-ns unchanged
deployment.apps/test-deploy configured
service/test-svc unchanged
gateway.networking.istio.io/test-gateway created
```

Check the listeners of the Istio Ingress Gateway one more time:

```
$ istioctl proxy-config listeners -n istio-system istio-ingressgateway-d45fb4b48-jsz9z
ADDRESS PORT  MATCH DESTINATION
0.0.0.0 8080  ALL   Route: http.80
0.0.0.0 15021 ALL   Inline Route: /healthz/ready*
0.0.0.0 15090 ALL   Inline Route: /stats/prometheus*
```

The listener for port 80 is here now (inside the Pod, Envoy binds it to 8080, the targetPort of the Service’s port 80), but its traffic is routed nowhere:

```
$ istioctl proxy-config routes -n istio-system istio-ingressgateway-d45fb4b48-jsz9z
NOTE: This output only contains routes loaded via RDS.
NAME        DOMAINS     MATCH                  VIRTUAL SERVICE
http.80     *           /*                     404
            *           /healthz/ready*
            *           /stats/prometheus*
```

If we access our domain now, we get a 404, but this time from istio-envoy rather than from awselb/2.0, as the request now reaches the Ingress Gateway Pod:

```
$ curl -I https://istio-test-alb.example.com
HTTP/2 404
date: Fri, 26 Mar 2021 11:02:57 GMT
server: istio-envoy
```
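The status code together with the Server header tells which hop answered. A rough, hypothetical heuristic built only from the responses seen in this walkthrough:

```python
def diagnose(status: int, server: str) -> str:
    """Guess which hop produced a response from its status code and Server header."""
    if server.startswith("awselb"):
        # The ALB answered itself; here a 502 meant the gateway had no port 80 listener yet.
        return "alb-no-backend" if status == 502 else "alb"
    if server == "istio-envoy":
        if status == 404:
            # The request reached the Ingress Gateway, but no VirtualService route matched.
            return "gateway-no-route"
        if 200 <= status < 400:
            return "routed"
        return "gateway-error"
    return "unknown"


if __name__ == "__main__":
    for status, server in [(502, "awselb/2.0"), (404, "istio-envoy")]:
        print(status, server, "->", diagnose(status, server))
```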

Istio VirtualService configuration

In the VirtualService, specify the Gateway to apply the routes to, and the route itself: send all traffic to our application’s Service:

```yaml
---
apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: test-virtualservice
  namespace: test-ns
spec:
  hosts:
  - "istio-test-alb.example.com"
  gateways:
  - test-gateway
  http:
  - match:
    - uri:
        prefix: /
    route:
    - destination:
        host: test-svc
        port:
          number: 80
```
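The Gateway opens the port, and the VirtualService decides where requests for its hosts go. As a simplified model (hypothetical classes, exact host matches only, no wildcards, and assuming the Gateway already accepts the host), the Ingress Gateway’s decision looks roughly like this; a short destination host such as test-svc, without a dot, is resolved relative to the VirtualService’s namespace:

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class HttpRoute:
    prefix: str  # match.uri.prefix
    host: str    # route.destination.host, as written in the VirtualService
    port: int    # route.destination.port.number


@dataclass
class VirtualService:
    namespace: str
    hosts: list[str]
    gateways: list[str]
    routes: list[HttpRoute]


def resolve_host(host: str, namespace: str) -> str:
    # Names without a dot are short names, expanded within the rule's namespace.
    if "." in host:
        return host
    return f"{host}.{namespace}.svc.cluster.local"


def route_request(vss: list[VirtualService], gateway: str, host: str, path: str) -> str:
    """Return "upstream-host:port" for a request on the gateway, or "404"."""
    for vs in vss:
        if gateway not in vs.gateways or host not in vs.hosts:
            continue
        for route in vs.routes:
            if path.startswith(route.prefix):
                return f"{resolve_host(route.host, vs.namespace)}:{route.port}"
    # Nothing matched: Envoy answers with 404 itself.
    return "404"


if __name__ == "__main__":
    vs = VirtualService(
        namespace="test-ns",
        hosts=["istio-test-alb.example.com"],
        gateways=["test-gateway"],
        routes=[HttpRoute(prefix="/", host="test-svc", port=80)],
    )
    print(route_request([vs], "test-gateway", "istio-test-alb.example.com", "/"))
```

With no VirtualService bound to test-gateway, the list is empty and every request falls through to the 404 seen above.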

Deploy it, and check the Istio Ingress Gateway routes again:

```
$ istioctl proxy-config routes -n istio-system istio-ingressgateway-d45fb4b48-jsz9z
NOTE: This output only contains routes loaded via RDS.
NAME        DOMAINS                        MATCH     VIRTUAL SERVICE
http.80     istio-test-alb.example.com     /*        test-virtualservice.test-ns
```

Now there is a route to our testing application, and requests reach the testing Pods:

```
$ curl -I https://istio-test-alb.example.com
HTTP/2 200
date: Fri, 26 Mar 2021 11:06:52 GMT
content-type: text/html
server: istio-envoy
```

All done.

Originally published at RTFM: Linux, DevOps, and system administration.
