Link to video: https://www.youtube.com/watch?v=mKH18sKUg5M

Docker simplified - its definition, benefits, Architecture and a quick demo of how easy it is to use

For a long time, Software Development has been isolated from Software Deployment, Maintenance and Operations.

However, with the advent of Docker, Developers now have an easy way to learn about deployment, configuration and operation, which has helped give rise to what we today know as DevOps - one of the most sought-after and well-paid roles in the industry.

Docker Containers have also helped popularize many technology trends that are now considered industry standards, such as automated CI/CD Pipelines, Microservices and Serverless architectures, to name a few.

But what actually is Docker anyway!?!

Docker Definition

Docker is a platform that allows us to package our applications, together with all their necessary OS libraries and dependencies, into deployable units called containers.

This basically means we can separate our applications from the underlying infrastructure/hardware being used - enabling rapid development, testing and deployment.

"But how?" Or more importantly, "why?"

Because Docker Containers provide easy and efficient OS-level Virtualization.

"Now what the heck is Virtualization?" you ask. It might sound like a daunting topic, and it can definitely get quite complex.

Lucky for you, I have written an entire blog on Virtualization, aiming to make it as simple as possible. I highly recommend you go through it first before continuing.

What is Virtualization? | Bare Metal vs Virtual Machines vs Containers

Just in case you're in a hurry or need a refresher, I'll summarize -

Virtualization is the technology that allows us to create & manage virtual resources that are isolated from the underlying hardware.

It is what powers Cloud Computing, and it is used by almost every production system today.

The main benefits of Virtualization are:

- Better hardware-resource utilization

- Application isolation

- Improved scalability & availability

- Centralized administration & security

There are several types of Virtualization, among which Virtual Machines (VMs) and Containers are the most relevant to our discussion.

Alright, with this in mind, we can now ask…

Why Docker?

The main differentiating factor of (Docker) Containers vs Virtual Machines is that Containers virtualize just the operating system, instead of virtualizing the entire physical machine like VMs do.

This means that Containers themselves do not include an entire Guest OS, making them substantially lighter, faster, more portable and more flexible than VMs.

Docker itself provides the platform and tooling to manage the entire lifecycle of Containers.

To better understand why Docker is so widely adopted and is worth learning, let's look at -

The main benefits of Docker:

Simplicity

Docker removes much of the enormous complexity of integrating software development with operations by handling all the heavy lifting of virtualization on its own and packaging everything your application needs into a single unit.

Speed

You can get your application up and running in seconds or minutes, EVERY TIME, instead of spending hours or days setting up the environment only to do it all over again on a different machine or environment. A faster lifecycle means that your team can be much more agile.

Portability

Since Docker Images (i.e. the blueprint of a Container) are immutable once built, you can deploy them on any environment that supports Docker (and the Image's CPU architecture), and they will run the same way they run on your local machine. This means you can deploy your application seamlessly to Cloud providers, on-premises or a hybrid environment. No more "but it works on my machine" headache!

NOTE: Even though Images are immutable, you can change a Container's behavior by providing runtime configuration, such as environment variables or command arguments.
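To see what runtime configuration looks like from the application's side, here is a minimal Python sketch of a hypothetical app that reads its settings from environment variables. GREETING, PORT, load_config and greet are illustrative names, not part of Docker. The point is that one immutable Image can behave differently depending on what you pass when you start the Container.

```python
import os
from collections.abc import Mapping


def load_config(environ: Mapping[str, str] = os.environ) -> dict:
    """Read runtime settings from environment variables, falling back to defaults.

    GREETING and PORT are hypothetical setting names used for illustration.
    """
    return {
        "greeting": environ.get("GREETING", "Hello"),
        "port": int(environ.get("PORT", "8080")),
    }


def greet(name: str, config: dict) -> str:
    """Build a greeting using the runtime-configured greeting word."""
    return f"{config['greeting']}, {name}!"


if __name__ == "__main__":
    # Inside a container, these values would come from `docker run -e ...`.
    config = load_config()
    print(greet("Docker", config), "on port", config["port"])
```

Suppose this app were packaged into a hypothetical Image called my-app. Running docker run -e GREETING=Hola my-app would then greet with "Hola" instead of "Hello", without rebuilding the Image.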

Efficiency

Since containers share the host OS kernel, running multiple containers requires far fewer resources than running the same number of VMs. Thus, hardware resources are utilized much more efficiently.

Horizontal Scalability

One of the primary benefits of designing your system with containers is that its components are isolated by default. This isolation (loose coupling) between components means you can develop and deploy them independently. If some components are more load-intensive than others, simply add more machines, run more containers and load-balance across them to scale out.

This is how giants like Google, Netflix and Uber scale their systems to handle massive traffic!
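The "load-balance across them" step can be illustrated with a simple round-robin balancer, which hands each request to the next container replica in turn. This is a minimal Python sketch using hypothetical replica addresses (web-1:8080 and so on). In practice this job is usually done by a reverse proxy or an orchestrator rather than hand-written code.

```python
class RoundRobinBalancer:
    """Send each request to the next container replica in turn (round-robin)."""

    def __init__(self, replicas: list[str]) -> None:
        self.replicas = list(replicas)  # e.g. hypothetical "web-1:8080" addresses
        self._counter = 0

    def add_replica(self, address: str) -> None:
        """Scale out: register one more container replica."""
        self.replicas.append(address)

    def pick(self) -> str:
        """Return the replica that should handle the next request."""
        if not self.replicas:
            raise RuntimeError("no replicas available")
        address = self.replicas[self._counter % len(self.replicas)]
        self._counter += 1
        return address
```

Scaling out then amounts to starting another container and calling add_replica with its address. Subsequent requests are spread across all replicas.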

Freedom of Tech Stack

Docker is stack-agnostic, meaning it doesn't care about or restrict the languages, libraries or frameworks you use in your Containers. So you, as the developer, can innovate with your choice of tools and frameworks.

This really opens up the whole world for tech enthusiasts like us - if you love learning new technologies and being able to actually use them in projects, you need to learn Docker! 🙌

Open Source & Community Support

Being open source and easy to use helped Docker become widely adopted, bringing in collaboration and community support. Its upstream open source project is Moby.

"Docker sounds amazing. But what's the catch?"

Even though containers are awesome, they are not a one-size-fits-all solution.

Since containers on the same host share its OS kernel instead of running on virtualized hardware, they provide weaker isolation than VMs. This could give rise to security or compliance issues in some niche projects, which might be an important consideration.

But that is not an issue for most projects, so… chill out!

Okay, now for the most exciting part…

Docker Architecture

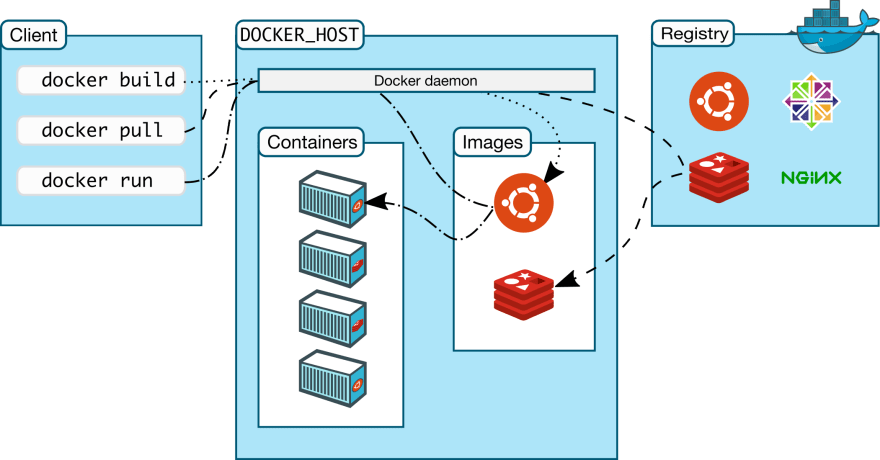

To understand Docker, we need to know about the following concepts:

Docker Daemon: The 'brain' of Docker - a background process (dockerd) that serves API requests and manages Docker objects such as Images, Containers, Networks and Volumes. It can also communicate with other Docker Daemons if required (e.g. in a Swarm cluster).

Docker Client: The (docker) command line program that communicates with the Docker Daemon using a REST API.

Docker Host: The (physical or virtual) machine that runs the Daemon.

Container: A runnable instance of an Image. It is an isolated process (or group of processes) that provides OS-level virtualization: it carries the required libraries and dependencies and is isolated from the host and other containers.

Image: A read-only 'blueprint' or template for creating a Container.

Registry: A service that stores and distributes Images, e.g. Docker Hub.
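To make the Client/Daemon split concrete, here is a minimal Python sketch of a "client" that sends a REST request straight to the Daemon, using only the standard library. It assumes a Linux host where the Daemon listens on the default Unix socket /var/run/docker.sock and your user is allowed to access it (root or the docker group). GET /version is a real Docker Engine API endpoint. UnixHTTPConnection and docker_get are hypothetical helpers written for this example.

```python
import http.client
import json
import socket
from typing import Any

# Default Daemon socket on Linux (an assumption; other setups may differ).
DOCKER_SOCKET = "/var/run/docker.sock"


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that speaks HTTP over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path: str, timeout: float = 5.0) -> None:
        # "localhost" only fills the Host header; the socket path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self) -> None:
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_get(path: str, socket_path: str = DOCKER_SOCKET) -> Any:
    """Send one GET request to the Docker Engine API and decode its JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"Docker API returned {resp.status}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

With the Daemon running, docker_get("/version")["ApiVersion"] should return the Engine API version, and docker_get("/containers/json") lists running containers. These are the same endpoints that docker version and docker ps call under the hood.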

The main Docker Workflow is as follows:

- Install Docker on your system

- Get the Docker Images you need: pull Images from a Registry (via docker pull) and either use them directly OR build your own Image on top of existing ones (i.e. using a Dockerfile and docker build)

- Run Containers using those Images (via docker run / docker-compose / docker swarm etc.)

NOTE:

- you don't need to log in to pull public Images from Docker Hub; logging in (via docker login) is required for private Images and gives you a higher pull rate limit

- if you try to run a Container using an Image that doesn't exist locally, Docker will automatically attempt to pull the Image from its Registry (Docker Hub by default), if it exists there.
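A short name like redis doesn't say which Registry or tag to use, so Docker fills in defaults before pulling. The simplified Python sketch below mimics that normalization: the registry defaults to docker.io, official Images get the library/ namespace, and a missing tag becomes latest. normalize_image_ref is a hypothetical helper, and it skips edge cases that Docker's real reference parser handles.

```python
def normalize_image_ref(ref: str) -> str:
    """Expand a short image reference into registry/namespace/name:tag (simplified)."""
    # Split off an optional digest (e.g. "@sha256:...") so it isn't mistaken for a tag.
    name, at, digest = ref.partition("@")

    # The first path component is a registry host only if it looks like one.
    first, slash, rest = name.partition("/")
    if slash and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name

    # Official Docker Hub images live under the implicit "library/" namespace.
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path

    # No tag and no digest means the implicit "latest" tag.
    last_component = path.rsplit("/", 1)[-1]
    if ":" not in last_component and not at:
        path += ":latest"

    return f"{registry}/{path}" + (f"@{digest}" if at else "")
```

For example, normalize_image_ref("redis") gives docker.io/library/redis:latest. Docker actually looks up and pulls that fully qualified name when you type docker run redis.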

To grasp the workflow properly I think it's best to look at…

A quick demo

Suppose you have Docker installed on your system. Now, to run a Redis Container, for example, just open up your terminal and execute:

docker run redis

"Wait what? You have Redis running in just 3 words!?!"

Yup, that's how simple it is!

"What about downloading archives, extracting, building binaries, managing configuration & setting permissions etc.? How does Docker achieve this?"

When you run the command, Docker performs these steps:

- The CLI (Client) turns the command you typed into a REST request which is made to the Daemon

- The Daemon checks whether the redis Image tagged 'latest' (the default tag when you don't specify one) exists locally

- If not, the Daemon will pull the Image from the Docker Hub Registry & save it locally (it might take a few seconds/minutes to download the Image depending on your internet speed)

- Then the Daemon uses the Image to create a Container - since the Image already contains all the information required for doing so

- And VOILA! You now have a Redis server running on your system.
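The steps above can be condensed into a small in-memory toy model in Python. ToyDaemon is hypothetical: one dict stands in for Docker Hub, another for the host's local image store, and "creating a container" just records an ID. It is not how dockerd is implemented. It only shows why the first docker run redis downloads the Image while later runs reuse the local copy.

```python
from dataclasses import dataclass, field


@dataclass
class ToyDaemon:
    """Toy model of the `docker run` steps; not how dockerd is implemented."""

    registry: dict[str, str]  # stands in for Docker Hub: image ref -> image data
    local_images: dict[str, str] = field(default_factory=dict)  # local image cache
    containers: list[str] = field(default_factory=list)
    pulls: int = 0  # how many times an image had to be downloaded

    def run(self, image: str) -> str:
        # Add the default 'latest' tag when none is given (simplified: ignores
        # registry ports and digests).
        ref = image if ":" in image else f"{image}:latest"

        # Check the local image store first; pull from the registry only if missing.
        if ref not in self.local_images:
            if ref not in self.registry:
                raise LookupError(f"Unable to find image '{ref}'")
            self.local_images[ref] = self.registry[ref]
            self.pulls += 1

        # Create a "container" from the now-local image and return its ID.
        container_id = f"{ref}#{len(self.containers) + 1}"
        self.containers.append(container_id)
        return container_id
```

Calling run("redis") twice on the same ToyDaemon increments pulls only once. This mirrors how a second real docker run redis reuses the cached Image instead of downloading it again.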

This was just an example. You can similarly run many other verified & official pre-built Images, such as MySQL, Ubuntu, Nginx and the Apache HTTP Server, to name a few.

You can also build your own Image by extending from existing ones; i.e. add your own code in your desired language, libraries or frameworks, and configure them as you like.

But let's keep it simple for this first blog in the series. In the upcoming ones, we shall get more hands-on with Docker!

Conclusion

By simplifying virtualization, Docker has made containers the de facto standard for modern software teams. It has also empowered developers who were once wary of operations to manage and automate it themselves.

There is no doubt that anyone in the IT industry will benefit from learning about Docker and Containers.

I hope it excites you as much as it excites me! 🎊

So, let's continue to learn more about Docker together!

In the next blog in this series, we'll learn more about how Images work, how to use them properly and build your own custom Images using a Dockerfile.

Docker Made Easy #2 is coming soon… so keep an eye (and notifications) out. ;)

I suggest you install Docker on your system and start getting your hands dirty, if you haven't already.

See you in the next one. 👋

Till then, keep learning!

But most importantly…

Tech care!