Introduction

Testing is a key concept in modern software development. It's hard to imagine any large, modern web application being developed or maintained without the support and assurance of automated tests in some form.

Automated tests are effective because they empower developers to "move fast and break things". It's a bit like running on a suspended rope knowing that there's a net below you that will catch you if you fall. You end up focusing more on the actual running and balance, because the fear of dire consequences if you fall is much, much lower.

The same applies to writing software: you feel much more confident making a large refactor, reworking a core module in your application, or making a large change in the interaction between several modules if your tests will warn you when you break things.

Note that applications, and entire businesses, can also be successful and well maintained without any automated tests. In those places, however, there is usually a team of QAs and, additionally, "domain-expert users" who test and use your applications to ensure correctness at a technical level as well as from the "deliverables" perspective (e.g. if your domain is medical sciences, having medical researchers or doctors use your application and tell you that "drug X cannot be used when the list of medical conditions contains A, B or C").

The key takeaway is that having automated tests in place gives developers assurance: if and when code reaches staging and/or the QA teams, it does so with a very high certainty that the work produced is technically correct.

We need all types of tests

There are many articles online about how important it is to have multiple types of tests. This is for a good reason. Let's look at some of the characteristics of the different types of tests:

Unit tests: these are by far the most popular tests to write. They are supposed to be fast, self-contained, simple and numerous. Because they exercise our code at its lowest level of complexity (a unit here is defined as a single method that is part of the public interface of a class or module), these tests tend to be very simple and very technical. They exercise the inner logic of a specific class to assert the behavior of a specific unit of code, so developers can write a lot of them very quickly.
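To make the idea of a "unit" concrete, here is a minimal sketch using Python's built-in `unittest` module. The `PrescriptionChecker` class, its method name and the condition codes are all hypothetical, loosely borrowed from the drug X example in the introduction; the point is only to show one public method being tested in complete isolation.

```python
import unittest

# Hypothetical rule, loosely based on the medical example from the introduction:
# drug X must not be prescribed when any of these conditions is present.
CONTRAINDICATIONS = frozenset({"A", "B", "C"})


class PrescriptionChecker:
    """A tiny class whose single public method is the 'unit' under test."""

    def can_prescribe_drug_x(self, conditions):
        # Allowed only if none of the given conditions is a contraindication.
        return CONTRAINDICATIONS.isdisjoint(conditions)


class PrescriptionCheckerTest(unittest.TestCase):
    def setUp(self):
        self.checker = PrescriptionChecker()

    def test_allows_drug_without_contraindications(self):
        self.assertTrue(self.checker.can_prescribe_drug_x(["D", "E"]))

    def test_rejects_drug_with_a_contraindication(self):
        self.assertFalse(self.checker.can_prescribe_drug_x(["D", "B"]))

    def test_allows_drug_for_empty_condition_list(self):
        self.assertTrue(self.checker.can_prescribe_drug_x([]))
```

Saved to a file, these tests can be run with `python -m unittest <file>`. Notice that nothing outside the class is involved: no database, no network, no other service.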

Integration tests: these sit a level above unit tests, and their main purpose is to make sure that the multiple pieces of software that form our application work well together. They also test the integration of our application with all the parts that live outside of it. These are very important because they go one level above the assurance we get from unit tests: now that we know the internals work, how do the various pieces play together?

Since these tests are of a different nature, they are usually harder to write and slower to run, and developers tend to write far fewer of them, if any at all. I'd argue that in the microservices paradigm most applications rely on these days, these are the most important tests. They strike the perfect balance between useful feedback and development speed/maintenance cost.

E2E tests: these are yet another kind of test, and they tend to be driven "backwards". Instead of testing our application from the inside to assert that it will work well from "the outside", we do the opposite here: starting from the UI (using tools like Selenium and/or human QAs interacting with the app under several scenarios), does everything work as we expect "inside"?

These are yet another level of tests that we can use to be sure that our application works. We can argue that these are the most important/useful tests. After all, users interact with our application through the UI.

However, these tests are hard to write and maintain, and the feedback they offer during the development process is very limited, so they are often overlooked or forgotten.

It is desirable to have all kinds of tests in place if we want to ensure that our applications work correctly but, even more importantly:

Tests are all about balance and development assurance: write the tests you need today to drive both your application's architecture and its correctness to where you want them to be. You can add or change tests later.

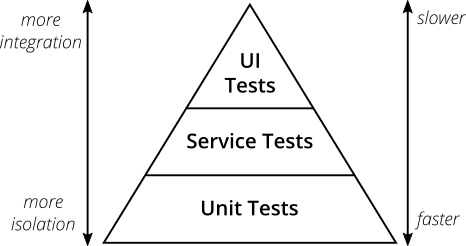

The popular test pyramid highlights the various types of tests we can rely on, together with their effectiveness and ROI. Unit tests sit at the bottom of the pyramid: they are the fastest to write and run, but they work in very high isolation from the rest of the application.

This was a great view on how to write tests, but let's not forget it first appeared in 2009 (11 years ago!!). Over the course of those 11 years, the way we build applications has changed a lot, and so should our view on how and why we write tests. Let's explore some alternatives, while always keeping one thing in mind: we always need all types of tests.

Tests in modern software development

Over the course of the last 11 years, the way we view and build software has changed dramatically. So, it's only natural to think that the way we view application testing has changed with it. And it has.

As applications evolve to become more decentralized and more distributed, the code flow within an application depends more on the interaction between multiple services and, most likely, on integrations with external APIs as well.

This means that, for a modern application, the assurance that each standalone service works well on its own is no longer sufficient to ensure that the application as a whole works correctly.

In fact, the best way to test a modern application depends on its architecture: a microservice-oriented architecture ends up driving the new ways that testing is done.

This type of architecture lends itself naturally to more integration tests and E2E tests instead of fully relying on simple unit tests as the main harness for correctness of inner logic. Why? Because in this architecture certain features behave differently depending on the state of other (dependent) microservices.

So, it makes sense to have tests that pull in other services, test database access with certain users and/or roles, write fake data, or even spin up fully dedicated "TestApplications" whose purpose is to inject mock services, or services using a test configuration, so that the application flow can be tested as a whole.
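A rough sketch of that "TestApplication" idea, in plain Python with only the standard library: a real repository talking to a real (in-memory SQLite) database, a fake stand-in for an external service, and a small factory that wires them together with a test configuration. Every class and function name here (`UserRepository`, `FakeNotificationService`, `ReportService`, `build_test_application`) is made up for illustration and is not taken from any framework.

```python
import sqlite3
import unittest


class UserRepository:
    """Data access layer backed by a real SQL database connection."""

    def __init__(self, connection):
        self.connection = connection
        self.connection.execute(
            "CREATE TABLE IF NOT EXISTS users (name TEXT PRIMARY KEY, role TEXT)"
        )

    def add(self, name, role):
        self.connection.execute("INSERT INTO users VALUES (?, ?)", (name, role))

    def role_of(self, name):
        row = self.connection.execute(
            "SELECT role FROM users WHERE name = ?", (name,)
        ).fetchone()
        return row[0] if row else None


class FakeNotificationService:
    """Stand-in for an external service: records calls instead of sending."""

    def __init__(self):
        self.sent = []

    def notify(self, recipient, message):
        self.sent.append((recipient, message))


class ReportService:
    """Feature under test: only admins may publish, and publishing notifies."""

    def __init__(self, users, notifications):
        self.users = users
        self.notifications = notifications

    def publish(self, author, title):
        if self.users.role_of(author) != "admin":
            return False
        self.notifications.notify(author, f"Published: {title}")
        return True


def build_test_application():
    """Wire the app with a test configuration: in-memory DB, fake notifier."""
    users = UserRepository(sqlite3.connect(":memory:"))
    return ReportService(users, FakeNotificationService())


class ReportFlowIntegrationTest(unittest.TestCase):
    def setUp(self):
        self.app = build_test_application()
        # Fake data, written through the real repository and SQL engine.
        self.app.users.add("alice", "admin")
        self.app.users.add("bob", "viewer")

    def tearDown(self):
        self.app.users.connection.close()

    def test_admin_can_publish_and_is_notified(self):
        self.assertTrue(self.app.publish("alice", "Q3 results"))
        self.assertEqual(
            self.app.notifications.sent, [("alice", "Published: Q3 results")]
        )

    def test_viewer_cannot_publish(self):
        self.assertFalse(self.app.publish("bob", "Q3 results"))
        self.assertEqual(self.app.notifications.sent, [])
```

The outcome of `publish` depends on state held by another component (the user's role in the database), which is exactly the kind of behavior a unit test with everything stubbed out would not exercise.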

With this testing strategy, we get several benefits:

Tests that pull in other dependent services can provide much better correctness feedback and assurance, at the expense of a slightly more expensive start-up/setup time;

With Docker, it becomes possible to essentially simulate a complete environment within a certain test class or method, which can provide essential correctness feedback before moving to staging or production environments;

Tests are now closer to real-world scenarios than more isolated tests, like unit ones.

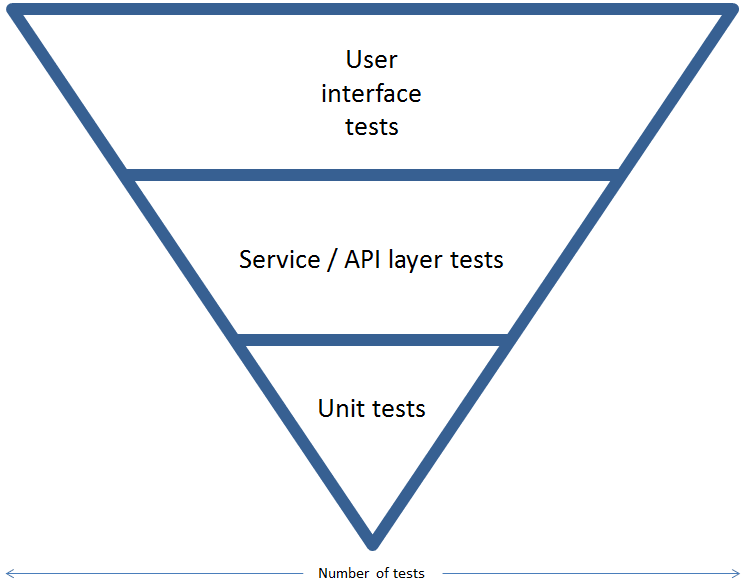

With this in mind, a new trend has emerged recently (last 5 years or so) called the "inverted testing pyramid":

And while some people have argued that inverting the test pyramid is an anti-pattern (see here for a link and a useful discussion about this specific topic), I like to think that it's not an anti-pattern. It's a reflection of modern software development practices, which makes it as good as it needs to be for the current needs of modern teams.

Frameworks like Spring Boot make writing integration tests really simple. They work based on annotations and configuration files that allow us to configure environments as close to production as we want. So, when writing endpoints, the go-to test should be a simple integration test that covers the base logic of the endpoint together with authentication, response expectations, request bodies, etc., using other services as needed (for example, a UserService to inject fake credentials into a request that requires special authentication). Only if the service itself contains some very complex inner logic should we add unit tests to cover it as well.
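Spring Boot has its own test support for this, and the snippet below is not that API. It is only a minimal Python sketch of the same shape of test, using nothing but the standard library: start the endpoint on a free local port, inject a fake user service that knows one test credential, and assert on status codes and response bodies. The `/profile` path, the `test-token` value and the `FakeUserService` class are all assumptions made up for the example.

```python
import json
import threading
import unittest
import urllib.error
import urllib.request
from http.server import BaseHTTPRequestHandler, HTTPServer


class FakeUserService:
    """Hypothetical stand-in that knows a single test credential."""

    def __init__(self):
        self.tokens = {"test-token": "alice"}

    def user_for(self, token):
        return self.tokens.get(token)


def make_handler(user_service):
    """Build a request handler class with the given user service injected."""

    class ProfileHandler(BaseHTTPRequestHandler):
        def do_GET(self):
            header = self.headers.get("Authorization", "")
            prefix = "Bearer "
            token = header[len(prefix):] if header.startswith(prefix) else ""
            user = user_service.user_for(token)
            if self.path != "/profile":
                self._reply(404, {"error": "not found"})
            elif user is None:
                self._reply(401, {"error": "unauthorized"})
            else:
                self._reply(200, {"user": user})

        def _reply(self, status, payload):
            body = json.dumps(payload).encode("utf-8")
            self.send_response(status)
            self.send_header("Content-Type", "application/json")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the test output quiet

    return ProfileHandler


class ProfileEndpointIntegrationTest(unittest.TestCase):
    def setUp(self):
        # Port 0 asks the operating system for any free port.
        self.server = HTTPServer(("127.0.0.1", 0), make_handler(FakeUserService()))
        self.thread = threading.Thread(target=self.server.serve_forever, daemon=True)
        self.thread.start()
        self.url = f"http://127.0.0.1:{self.server.server_port}/profile"
        # Ignore any system proxy settings for these local calls.
        self.opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))

    def tearDown(self):
        self.server.shutdown()
        self.server.server_close()
        self.thread.join()

    def test_valid_token_returns_profile(self):
        request = urllib.request.Request(
            self.url, headers={"Authorization": "Bearer test-token"}
        )
        with self.opener.open(request, timeout=5) as response:
            self.assertEqual(response.status, 200)
            self.assertEqual(json.load(response), {"user": "alice"})

    def test_missing_token_is_rejected(self):
        with self.assertRaises(urllib.error.HTTPError) as caught:
            self.opener.open(self.url, timeout=5)
        self.assertEqual(caught.exception.code, 401)
        caught.exception.close()
```

The test goes through real HTTP, real headers and real JSON serialization, so a mistake in any of those layers shows up here, while the only thing replaced is the source of credentials.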

The idea for modern software testing seems to be:

Write fewer but more representative tests, i.e. a few unit tests, mostly integration tests, and some E2E tests.

However, as always, it all depends on your current codebase, the technologies being used, the amount of untested legacy code, etc.

Use the level of testing that is right for your application, and focus on writing only the most important tests.

Kent C. Dodds has popularized what is now known as the test trophy, applied to JS:

Obviously, using a statically typed language already gives us the base of the trophy "for free", but the basic concepts still apply: we need to write fewer, but more meaningful and useful, tests.

Integration tests are essential to ensure that units collaborate correctly and, in this case, a unit can be a specific endpoint that requires the work of multiple services to fulfil its purpose.

Unit tests sit at the lowest level of abstraction, closest to the code itself. They are small, self-contained and fast, and we can use them sparingly to ensure the correct implementation of business logic or of some complex inner logic "hidden" inside a certain service.

E2E tests can be seen as less valuable, especially when we have QA teams or domain experts testing our applications much like real users would, but it is very important to do them, whether automated or not.

Conclusion

Modern software development has changed the role and importance of testing. We looked at several types of tests and weighed their costs against their benefits, and we saw that there has been a paradigm shift in how modern companies approach testing.

With the tools at our disposal, we can rely on more representative and meaningful tests to ensure we deliver the maximum possible value.
