Managing generative AI (GenAI) projects involves tracking training data, model parameters and training runs to improve model performance. Without a reliable system, comparing experiments, identifying optimal configurations and managing deployments can quickly become overwhelming. These challenges slow progress across machine learning (ML), from supervised and unsupervised learning to the advanced neural networks behind large language models (LLMs), and create inefficiencies throughout the GenAI and ML workflow.

MLflow, an open-source platform licensed under Apache 2.0, addresses these issues with tools and APIs for tracking experiments, logging parameters, recording metrics and managing model versions. Beyond tracking, it helps teams manage and deploy ML models and keep workflows consistent across different ML tasks. Amazon SageMaker offers MLflow as a fully managed capability, adding secure collaboration, automated life cycle management and scalable infrastructure.

Why Use Managed MLflow?

Simplified infrastructure: Removes the need to set up and maintain tracking infrastructure yourself, freeing time for experimentation and model refinement.

Streamlined model tracking: Simplifies logging essential information across experiments, improving consistency.

Cost-effective resource allocation: Frees team capacity, so engineers can spend more time improving model quality and less time managing operations.

Core components of managed MLflow on SageMaker

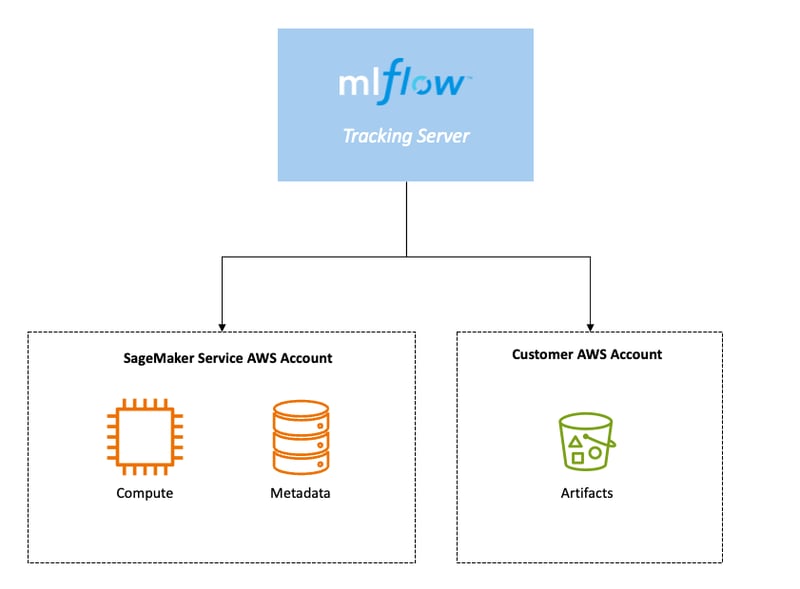

The fully managed MLflow capability on SageMaker is built around three core components:

MLflow Tracking Server: With just a few steps, you can create an MLflow Tracking Server through the SageMaker Studio UI. This stand-alone HTTP server serves multiple REST API endpoints for tracking runs and experiments, enabling you to begin monitoring your ML experiments efficiently.

MLflow backend metadata store: The metadata store is a critical part of the MLflow Tracking Server; it persists all metadata related to experiments, runs, and artefacts.

MLflow artefact store: This component provides a storage location for all artefacts generated during ML experiments, such as trained models, datasets, logs, and plots. It uses an Amazon Simple Storage Service (Amazon S3) bucket in your own (customer-managed) AWS account, so artefacts are stored securely and remain under your control.
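The Studio UI is one way to create the tracking server; the same can be done through the SageMaker API, which also makes the link between the server and its S3 artefact store explicit. The sketch below assumes boto3's `create_mlflow_tracking_server` method (the SDK call for the `CreateMlflowTrackingServer` API). The server name, bucket and role ARN used with it are hypothetical placeholders, and the SageMaker client is passed in as a parameter so the functions can be tried with a stub before pointing them at a real account.

```python
from typing import Any, Dict

VALID_SIZES = {"Small", "Medium", "Large"}  # TrackingServerSize values accepted by the API


def build_tracking_server_request(
    name: str, artifact_store_uri: str, role_arn: str, size: str = "Small"
) -> Dict[str, Any]:
    """Assemble keyword arguments for the CreateMlflowTrackingServer API."""
    if size not in VALID_SIZES:
        raise ValueError(f"Unsupported tracking server size: {size!r}")
    if not artifact_store_uri.startswith("s3://"):
        raise ValueError("The artefact store must be an S3 URI")
    return {
        "TrackingServerName": name,
        "ArtifactStoreUri": artifact_store_uri,  # S3 location used as the artefact store
        "RoleArn": role_arn,  # IAM role the server uses to access that bucket
        "TrackingServerSize": size,
    }


def create_tracking_server(sagemaker_client: Any, request: Dict[str, Any]) -> str:
    """Call CreateMlflowTrackingServer and return the new server's ARN."""
    response = sagemaker_client.create_mlflow_tracking_server(**request)
    return response["TrackingServerArn"]


# Usage against a real account (requires AWS credentials; all values hypothetical):
#   import boto3
#   request = build_tracking_server_request(
#       "my-tracking-server",
#       "s3://my-mlflow-artifacts/",
#       "arn:aws:iam::111122223333:role/MyMlflowRole",
#   )
#   arn = create_tracking_server(boto3.client("sagemaker"), request)
```

Creation is asynchronous, so the server may take some time to become available; the returned ARN is the value you would later pass to `mlflow.set_tracking_uri`.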

Benefits of Amazon SageMaker with MLflow

Using Amazon SageMaker with MLflow can streamline and enhance your machine learning workflows:

Comprehensive Experiment Tracking: Track experiments in MLflow across local integrated development environments (IDEs), managed IDEs in SageMaker Studio, SageMaker training jobs, SageMaker processing jobs, and SageMaker Pipelines.

Unified Model Governance: Models registered in MLflow automatically appear in the SageMaker Model Registry. This offers a unified model governance experience that helps you deploy MLflow models to SageMaker inference without building custom containers.

Efficient Server Management: SageMaker handles the scaling, patching, and ongoing maintenance of your tracking servers, so you don't need to manage the underlying infrastructure.

Effective Monitoring and Governance: Monitor activity on your MLflow Tracking Servers with Amazon EventBridge and AWS CloudTrail to support effective governance.
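On the CloudTrail side, a minimal sketch of an audit query might look like the following. It assumes CloudTrail event history is available in your Region, that tracking server API calls are recorded with the event source `sagemaker.amazonaws.com`, and that their event names contain `MlflowTrackingServer` (matching API names such as `CreateMlflowTrackingServer`). The CloudTrail client is injected so the function can be tested with a stub; EventBridge rules are not covered here.

```python
from typing import Any, Dict, List

SAGEMAKER_EVENT_SOURCE = "sagemaker.amazonaws.com"


def tracking_server_events(cloudtrail_client: Any, max_pages: int = 5) -> List[Dict[str, Any]]:
    """Collect recent CloudTrail events for SageMaker MLflow tracking server API calls."""
    request: Dict[str, Any] = {
        "LookupAttributes": [
            {"AttributeKey": "EventSource", "AttributeValue": SAGEMAKER_EVENT_SOURCE}
        ]
    }
    events: List[Dict[str, Any]] = []
    for _ in range(max_pages):
        response = cloudtrail_client.lookup_events(**request)
        for event in response.get("Events", []):
            name = event.get("EventName", "")
            # Keep only calls whose names contain "MlflowTrackingServer",
            # e.g. CreateMlflowTrackingServer or StopMlflowTrackingServer.
            if "MlflowTrackingServer" in name:
                events.append(
                    {"name": name, "user": event.get("Username"), "time": event.get("EventTime")}
                )
        next_token = response.get("NextToken")
        if not next_token:
            break
        request["NextToken"] = next_token  # continue with the next page of results
    return events


# Usage against a real account (requires AWS credentials):
#   import boto3
#   for e in tracking_server_events(boto3.client("cloudtrail")):
#       print(e["time"], e["user"], e["name"])
```

Filtering on the client side keeps the request to a single lookup attribute, which is all that `LookupEvents` accepts per call.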

For a more in-depth look at the topic, read the full blog post: https://devmar.short.gy/mlflow
