In this post we'll look at some non-scientific experiments I ran to measure the latency added by the Hybrid Connection Manager (HCM) in Azure.

What is the Hybrid Connection Manager (HCM)?

Do you need to connect an on-premises resource to a service in Azure? Does the idea of setting up VPNs or ExpressRoute circuits, asking IT for dedicated IP addresses, and opening inbound ports make you queasy? Then Hybrid Connection Manager may be the solution. You can read all about HCM in the Azure documentation.

HCM is built specifically for App Service, Azure's Platform-as-a-Service offering for web apps and APIs. You can use it to connect an App Service app to an on-premises database or other service, and its tight integration with App Service makes it very easy to set up.

HCM is built on top of the Azure Relay Hybrid Connections feature. HCM itself is specific to App Service, but you can use Azure Relay directly to build your own hybrid connections between other Azure services and services running on-premises.

The Experiments

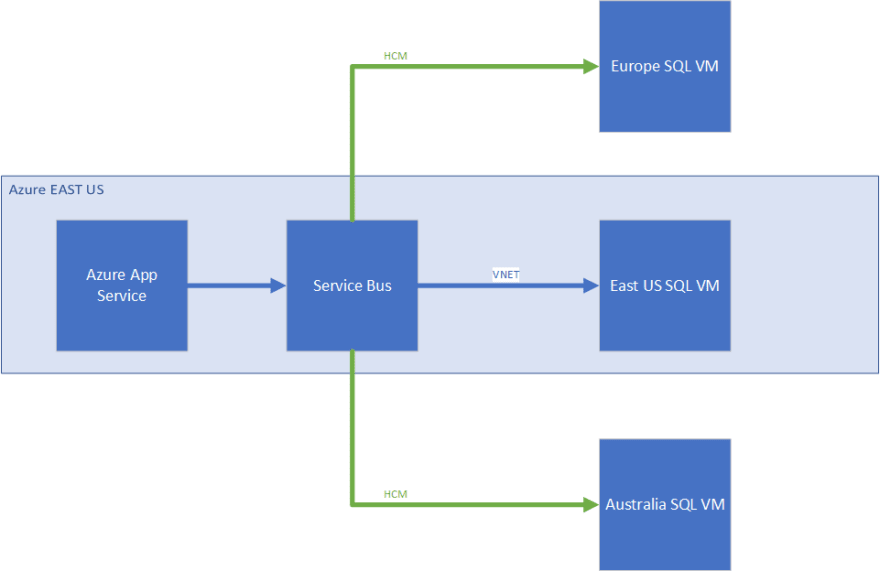

I wanted to see how much latency HCM introduces. In theory it should not add much. HCM communicates through Azure Relay (formerly part of Azure Service Bus), so as long as the App Service and the Relay namespace are in the same region, that hop should add very little latency. Of course, there will still be latency over the internet to the on-premises location. Optimizing for that is outside the scope of this post.

I ran two experiments:

- How much latency is there when connecting to servers in different parts of the world?

- How much latency is there when connecting to servers in the same region as the App Service?

In these experiments I set up VMs in different Azure regions to effectively "mock" on-premises environments. I don't have hardware for testing lying around all over the world. ;)

Experiment 1 Set Up

I created three VMs in Azure with identical configurations (SQL Server 2019 on Windows Server 2019, size A4 v2, running as spot instances) and installed the Northwind sample database on each:

- One in Europe, with HCM installed.

- One in East US.

- One in Australia, with HCM installed.

I created a simple Web API that sends the same query to any of these databases.

- The Web API is deployed to Azure App Service in East US.

- The App Service uses HCM to talk to the Australia and Europe VMs. Those SQL Servers are not exposed to the internet.

- The App Service is connected, through VNet integration, to the virtual network that hosts the East US SQL Server, so that call goes over the network to the VM's private IP address. This database is not exposed to the internet either.

Experiment 1 Test

I set up a JMeter test plan with 100 users, a 1-second ramp-up, and 100 loops per SQL Server, for a total of 10,000 requests (100 × 100) per SQL Server. Every request ran the same query against its database. The whole run took a few minutes.
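The three thread group settings (users, ramp-up, loops) interact in a way that is easy to misread, so here is a minimal Python sketch of what they mean. It is only an illustration, not JMeter's implementation and not what I used for these tests. It starts `users` threads spaced `ramp_up_s / users` seconds apart, and each thread sends `loops` requests and records their latency. `run_thread_group` and `fake_request` are hypothetical names; you would swap `fake_request` for a real HTTP call to your own Web API.

```python
import threading
import time
from typing import Callable, List


def run_thread_group(
    send_request: Callable[[], None],
    users: int = 100,
    ramp_up_s: float = 1.0,
    loops: int = 100,
) -> List[float]:
    """Mimic a JMeter-style thread group: start `users` threads spread evenly
    over `ramp_up_s` seconds, have each thread call `send_request` `loops`
    times, and return every request's wall-clock latency in milliseconds."""
    latencies_ms: List[float] = []
    lock = threading.Lock()

    def user() -> None:
        for _ in range(loops):
            start = time.perf_counter()
            send_request()
            elapsed_ms = (time.perf_counter() - start) * 1000.0
            with lock:  # several threads append to the shared list
                latencies_ms.append(elapsed_ms)

    # A new thread starts every ramp_up_s / users seconds.
    start_interval = ramp_up_s / users if users > 0 else 0.0
    threads: List[threading.Thread] = []
    for _ in range(users):
        thread = threading.Thread(target=user)
        thread.start()
        threads.append(thread)
        time.sleep(start_interval)
    for thread in threads:
        thread.join()
    return latencies_ms


if __name__ == "__main__":
    # Hypothetical stand-in for an HTTP call to the Web API.
    def fake_request() -> None:
        time.sleep(0.005)

    results = run_thread_group(fake_request, users=10, ramp_up_s=0.1, loops=5)
    print(len(results), "requests,", round(sum(results) / len(results), 1), "ms average")
```

With the defaults (100 users, 100 loops) this sketch issues 100 × 100 = 10,000 requests, the same per-server count as the test plan.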

Experiment 1 Results

Here’s the application dependency map. Europe is on top, East US in the middle, and Australia on the bottom. Australia took the longest, which makes sense: it is the farthest from East US.

I also logged the SQL connection statistics (including `NetworkServerTime`) as a custom metric, so I could compare network time only and not latency introduced by query compute time. This Application Insights query pulls them out:

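```kusto
// Custom metric emitted by the Web API with the SQL connection statistics
customMetrics
| where name == 'SQLStatistics'
// Extract the network wait time and the target database from the custom dimensions
| extend networkTime = toint(customDimensions["NetworkServerTime"]), database = tostring(customDimensions["Database"])
| project timestamp, networkTime, ['database']
```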
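That query returns one row per request. To compare regions with a single number per database, you need an aggregation step. In Log Analytics, appending something like `| summarize avg(networkTime) by ['database']` should do it. If you export the rows instead, the minimal Python sketch below does the same grouping. It assumes you have loaded the `database` and `networkTime` columns as `(database, milliseconds)` pairs. `summarize_network_time` is a hypothetical helper, and the example rows are made up for illustration, not measured results.

```python
from collections import defaultdict
from statistics import mean, median
from typing import Dict, Iterable, List, Tuple


def summarize_network_time(
    rows: Iterable[Tuple[str, int]],
) -> Dict[str, Dict[str, float]]:
    """Group (database, networkTime in ms) rows and compute count, mean and
    median per database, similar to `summarize ... by database` in KQL."""
    samples: Dict[str, List[int]] = defaultdict(list)
    for database, network_ms in rows:
        samples[database].append(network_ms)
    return {
        database: {
            "count": len(values),
            "mean_ms": mean(values),
            "median_ms": median(values),
        }
        for database, values in samples.items()
    }


if __name__ == "__main__":
    # Hypothetical rows for illustration only, not results from the experiment.
    example_rows = [("Europe", 40), ("Europe", 44), ("Australia", 110)]
    for database, stats in summarize_network_time(example_rows).items():
        print(database, stats)
```

The median is included alongside the mean because a handful of slow requests can pull the average up noticeably under load.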

Here is the result, which is very similar to the application dependency map above.

Experiment 2 Set Up

For Experiment 2, I compared the latency to two VMs running in the same region as the App Service (East US):

- One VM is connected through a virtual network integration

- The other VM is connected via the Hybrid Connection Manager (HCM)

Remember that both VMs are the same size, with the same hardware and the same OS and SQL Server versions, running the same query in the same Azure region. So with “everything else being equal”, the difference in request time should come down to network latency alone.

Experiment 2 Test

Same as Experiment 1: 10,000 requests (100 users, 1-second ramp-up, 100 loops).

Experiment 2 Results

On average, HCM added about 9 ms of latency (13.1 ms - 4.4 ms = 8.7 ms) compared with connecting directly through the virtual network. In the image below, the SQL Server connected via HCM is on top and the one connected via the virtual network is on the bottom.

Results and Final Thoughts

Overall, I found that HCM does not add much overhead: about 9 ms of extra latency within the same region. It costs about $10 per month per listener, plus a data transfer charge for anything beyond the first 5 GB per month. More pricing information here: Azure Service Bus Pricing.
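As a rough sketch using only the figures above, the monthly cost for $L$ listeners transferring $D$ GB of data is approximately the following. Here $r$ is the per-GB overage rate, which this post does not state. Check it on the current pricing page, along with whether the 5 GB allowance applies per listener or per namespace.

$$
C \approx 10L + r \cdot \max(0,\ D - 5)
$$

For example, two listeners transferring 3 GB in a month would come to about 20 dollars, because the transfer stays within the 5 GB allowance.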

You could build something similar to HCM yourself, for instance with Inlets, but you would have to run, maintain, and pay for the server yourself. Plus, HCM is a breeze to set up and can be automated with the Azure CLI.

If anyone is interested in seeing a latency comparison between Inlets and HCM, let me know!
