AI-powered solutions are transforming businesses by automating tasks, improving customer interactions, and generating insights. While OpenAI’s GPT-4 is already a powerful tool, fine-tuning it for your specific business needs can significantly improve accuracy, efficiency, and relevance.

In this guide, we’ll cover:

✅ Prompt engineering for better responses

✅ Using embeddings to enhance search and recommendations

✅ Fine-tuning GPT-4 for domain-specific applications

🔹 Step 1: Understanding Fine-Tuning vs. Prompt Engineering

Before fine-tuning GPT-4, it's crucial to decide whether you actually need to.

| Method | Use Case | Pros | Cons |

|---|---|---|---|

| Prompt Engineering | Optimizing queries for better responses | No training required, quick results | Limited customization |

| Embeddings | Custom search, recommendation systems | Context-aware, scalable | Requires external database |

| Fine-Tuning | Industry-specific, personalized AI responses | Highly customized results | Expensive, requires training data |

💡 If your goal is improving response quality, start with prompt engineering before moving to fine-tuning.

🔹 Step 2: Optimizing GPT-4 Responses with Prompt Engineering

Fine-tuning might not always be necessary. Well-structured prompts can drastically improve GPT-4’s accuracy.

✅ Example 1: Generic Prompt vs. Engineered Prompt

❌ Basic Prompt:

"Tell me about marketing."

✅ Optimized Prompt:

"Explain digital marketing strategies for SaaS startups, focusing on SEO and paid ads, in under 300 words."

✅ Example 2: Using Few-Shot Learning

Instead of retraining GPT-4, you can provide examples in your prompt.

Input:

"Convert the following casual message into a professional email:

Casual: Hey John, can you send over that report? Need it ASAP. Thanks!"

❌ Without Example:

"Dear John, please send me the report at your earliest convenience."

✅ With Example:

Examples:

Casual: "Need that file now!"

Professional: "Could you kindly send me the requested file at your earliest convenience?"

Output:

"Dear John, I hope you're doing well. Could you kindly send over the report at your earliest convenience? Thank you!"

🔹 Step 3: Using Embeddings for Contextual AI Responses

If you need domain-specific knowledge (e.g., legal, medical, or financial data), embeddings can help GPT-4 retrieve relevant information from a knowledge base.

✅ How Embeddings Work

1️⃣ Convert your documents into vector representations.

2️⃣ Store vectors in a vector database (e.g., Pinecone, Weaviate, or FAISS).

3️⃣ When a user queries GPT-4, retrieve relevant context before generating responses.

✅ Generating Embeddings with OpenAI API

import openai

response = openai.Embedding.create(

input=["Your business data or documents"],

model="text-embedding-ada-002"

)

embedding = response["data"][0]["embedding"]

print(embedding)

🚀 Use Case: Improves search accuracy by matching queries with stored knowledge.

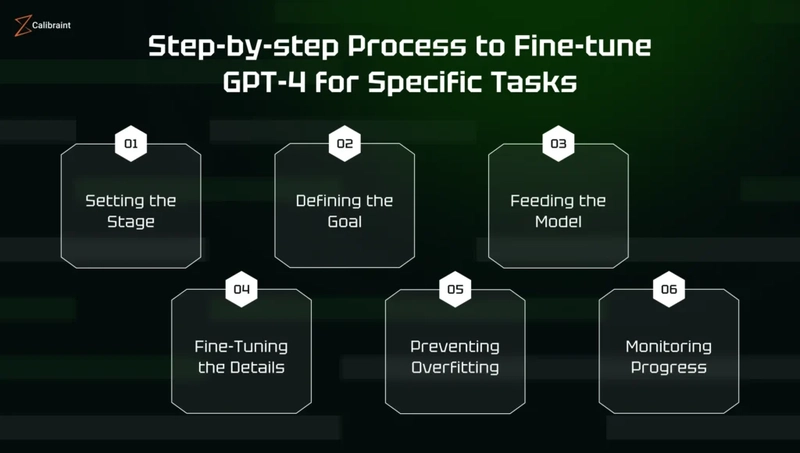

🔹 Step 4: Fine-Tuning GPT-4 for Your Business

If prompt engineering and embeddings aren’t enough, fine-tuning GPT-4 allows you to train it on your specific dataset.

✅ Step 1: Prepare Your Training Data

Fine-tuning requires a JSONL file with structured examples.

{

"messages": [

{ "role": "system", "content": "You are a legal assistant." },

{ "role": "user", "content": "What are the GDPR compliance rules?" },

{

"role": "assistant",

"content": "GDPR rules include data protection, user consent, and transparency requirements."

}

]

}

🔹 Best Practices:

- Provide at least 100+ examples for effective fine-tuning.

- Ensure clear and structured responses.

✅ Step 2: Upload Your Data to OpenAI

openai tools fine_tunes.prepare_data -f training_data.jsonl

✅ Step 3: Train Your Model

openai api fine_tunes.create -t "training_data_prepared.jsonl" -m "gpt-4"

🔹 Fine-Tuning Considerations:

✅ Best for domain-specific knowledge (e.g., legal, medical, fintech).

✅ Improves accuracy in repetitive business tasks.

❌ More expensive than embeddings (cost depends on tokens used).

🔹 Step 5: Deploying Your Fine-Tuned GPT-4 Model

Once fine-tuned, your model can be accessed via the OpenAI API like this:

import openai

response = openai.ChatCompletion.create(

model="ft:gpt-4:your-company:model-id",

messages=[{"role": "user", "content": "Summarize our latest financial report."}]

)

print(response["choices"][0]["message"]["content"])

🚀 Deploy via API in chatbots, customer support, or business analytics tools!

🔹 Summary: Which AI Strategy Should You Choose?

| Use Case | Solution |

|---|---|

| Improve general responses | Prompt Engineering |

| Add business-specific knowledge | Embeddings + Vector Database |

| Need fully customized AI | Fine-Tuning GPT-4 |

🔹 Final Thoughts

🎯 Start with Prompt Engineering → Use Embeddings for Knowledge → Fine-Tune Only if Needed

Fine-tuning GPT-4 can revolutionize your business, but it’s important to weigh cost vs. benefit. If prompt optimization and embeddings provide what you need, you can save time and money while still delivering highly relevant AI responses.

🚀 Now you’re ready to build AI solutions tailored to your business needs!

Top comments (0)