RESTful APIs are pretty common nowadays, offering a clear, well-structured, resource-oriented standard. But we often face use cases that require calling multiple APIs to collect all the necessary data. This quickly becomes a problem if your client is a mobile device on a limited network and is only interested in a subset of the data in your API responses.

A popular solution to this problem is GraphQL, a query language from Facebook. Although GraphQL is a powerful tool, it still requires you to define all the schemas and functions before the client can use it. In this post, I will show you a different approach: a Batch Request API that can be integrated quickly into your REST service without the need to define any schema.

The code

For a Batch Request API, we'll need an engine that is able to build and execute requests sequentially, then collect all the responses. For the hard part, I will use the JsonBatch library, which provides many out-of-the-box features such as building and extracting JSON objects, conditional and looping requests, and aggregate functions. You can test out its features here
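To make "build, execute sequentially, collect" a bit more concrete, here is a minimal sketch of the idea in plain Java. It is not how JsonBatch is implemented: the class `SequentialBatchSketch`, its `execute` method, and the use of plain strings for requests and responses are all made up for illustration, and the dispatcher is a stand-in that does no real HTTP.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.List;
import java.util.function.Function;

// Hypothetical illustration only; not part of JsonBatch.
public class SequentialBatchSketch {

    // Runs the steps in order. Each step builds its request from the
    // responses collected so far, then the dispatcher executes it.
    public static List<String> execute(List<Function<List<String>, String>> steps,
                                       Function<String, String> dispatcher) {
        List<String> responses = new ArrayList<>();
        for (Function<List<String>, String> step : steps) {
            String request = step.apply(Collections.unmodifiableList(responses));
            responses.add(dispatcher.apply(request));
        }
        return responses;
    }

    public static void main(String[] args) {
        // Stand-in dispatcher: fakes a response instead of doing real HTTP.
        Function<String, String> dispatcher = url -> "response of " + url;

        List<Function<List<String>, String>> steps = Arrays.asList(
                previous -> "/oauth/payload",
                // The second request depends on the first response.
                previous -> "/report/users built from [" + previous.get(0) + "]");

        execute(steps, dispatcher).forEach(System.out::println);
    }
}
```

The point of the sketch is the ordering: because every step can see the earlier responses, a later request can reuse values (such as a user id) returned by an earlier one.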

Below is the code to set up a Batch Engine:

    @Bean
    public BatchEngine getBatchEngine(ObjectMapper objectMapper, RequestDispatcherService dispatcherService) {
        Configuration conf = Configuration.builder()
                .options(Option.SUPPRESS_EXCEPTIONS)
                .jsonProvider(new JacksonJsonProvider(objectMapper))
                .mappingProvider(new JacksonMappingProvider(objectMapper))
                .build();
        JsonBuilder jsonBuilder = new JsonBuilder(Functions.basic());
        return new BatchEngine(conf, jsonBuilder, dispatcherService);
    }

Next is the controller. The logic is quite simple: we only need to build the original request and pass it to the Batch Engine along with the batch template. After the Batch Engine returns a response, we just wrap it in a ResponseEntity and return it.

    @RequestMapping(value = "/batch", method = RequestMethod.POST, produces = MediaType.APPLICATION_JSON_UTF8_VALUE)
    public ResponseEntity<Object> batchRequests(@RequestHeader MultiValueMap<String, String> headers, @RequestBody BatchRequest batchRequest) {
        // Wrap the incoming headers and input data as the "original" request
        Request request = buildOriginalRequest(headers, batchRequest.getData());
        // Let the engine build and execute every request in the template
        Response response = batchEngine.execute(request, batchRequest.getTemplate());
        return new ResponseEntity<>(response.getBody(), HttpStatus.resolve(response.getStatus()));
    }

    private Request buildOriginalRequest(MultiValueMap<String, String> headers, Object body) {
        Request request = new Request();
        request.setBody(body);
        // Copy the Spring headers into a plain map for the engine
        HashMap<String, List<String>> headerMap = new HashMap<>();
        headers.forEach(headerMap::put);
        request.setHeaders(headerMap);
        return request;
    }

BatchRequest is a simple JSON object:

    {
      "data": { input data needed to build requests },
      "template": { batch template to instruct how to build & execute requests }
    }
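The `BatchRequest` class itself is not shown in this post. A minimal sketch of what it could look like is below; only `getData()` and `getTemplate()` are taken from the controller code, everything else is an assumption. In particular, the `Map` type for the template is a stand-in so the sketch compiles on its own; in the real service this field would presumably be the library's own template type, since it is passed straight to the engine.

```java
import java.util.Map;

// Assumed shape of the request body; the original class is not shown.
public class BatchRequest {

    // Free-form input data; the controller passes it on as the body
    // of the original request.
    private Object data;

    // Stand-in type: in the real service this would presumably be the
    // library's template class rather than a plain Map.
    private Map<String, Object> template;

    public Object getData() {
        return data;
    }

    public void setData(Object data) {
        this.data = data;
    }

    public Map<String, Object> getTemplate() {
        return template;
    }

    public void setTemplate(Map<String, Object> template) {
        this.template = template;
    }
}
```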

We also need to implement a simple RequestDispatcher:

    @Service
    public class RequestDispatcherService implements RequestDispatcher {

        @Autowired
        private RestTemplate restTemplate;

        @Override
        public Response dispatch(Request request, JsonProvider jsonProvider, DispatchOptions options) {
            HttpHeaders requestHeaders = new HttpHeaders();
            requestHeaders.putAll(request.getHeaders());
            HttpEntity<Object> httpEntity = request.getBody() != null
                    ? new HttpEntity<>(request.getBody(), requestHeaders)
                    : new HttpEntity<>(requestHeaders);
            ResponseEntity<Object> responseEntity = restTemplate.exchange(
                    "Your service host" + request.getUrl(),
                    HttpMethod.resolve(request.getHttpMethod()),
                    httpEntity,
                    Object.class);
            return buildResponse(responseEntity);
        }

        private Response buildResponse(ResponseEntity<Object> responseEntity) {
            Response response = new Response();
            Map<String, List<String>> headers = new HashMap<>();
            responseEntity.getHeaders().forEach(headers::put);
            response.setHeaders(headers);
            response.setStatus(responseEntity.getStatusCodeValue());
            response.setBody(responseEntity.getBody());
            return response;
        }
    }

And it's done. Now let's test it.

A real scenario

Currently, in our system (a fintech product), we have this scenario: after a user logs in, we retrieve all of the user's information, which includes:

- User profile

- User’s company profile

- User’s wallet information

Normally we have to call 4 APIs to collect all the responses, even though we only need a subset of the data. With the Batch API, we only have to make a single call with a request body like this:

    {
      "data": {
        "token": "user's token"
      },
      "template": {
        "requests": [
          {
            "http_method": "GET",
            "url": "/oauth/payload",
            "headers": {
              "Authorization": "Bearer @{$.original.body.token}@"
            },
            "body": null,
            "requests": [
              {
                "http_method": "POST",
                "url": "/report/users",
                "headers": {
                  "Authorization": "Bearer @{$.original.body.token}@"
                },
                "body": {
                  "id": "$.responses[0].body.data.user_id"
                },
                "requests": [
                  {
                    "http_method": "POST",
                    "url": "/report/wallets",
                    "headers": {
                      "Authorization": "Bearer @{$.original.body.token}@"
                    },
                    "body": {
                      "user_id": "$.responses[0].body.data.user_id"
                    },
                    "requests": [
                      {
                        "http_method": "POST",
                        "url": "/report/companies",
                        "headers": {
                          "Authorization": "Bearer @{$.original.body.token}@"
                        },
                        "body": {
                          "id": "$.responses[1].body.data.users[0].company_id"
                        }
                      }
                    ]
                  }
                ]
              }
            ]
          }
        ],
        "responses": [
          {
            "body": {
              "id": "$.responses[1].body.data.users[0].id",
              "full_name": "@{$.responses[1].body.data.users[0].first_name}@ @{$.responses[1].body.data.users[0].last_name}@",
              "email": "$.responses[1].body.data.users[0].email",
              "company": {
                "id": "$.responses[3].body.data.companies[0].id",
                "name": "$.responses[3].body.data.companies[0].name"
              },
              "wallets": [
                {
                  "id": "$.id",
                  "balance": "$.balance",
                  "currency": "$.currency",
                  "__array_schema": "$.responses[2].body.data.wallets"
                }
              ]
            }
          }
        ]
      }
    }

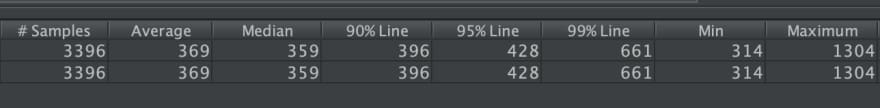
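In the template above, some values are a bare path (like `$.responses[1].body.data.users[0].email`), while others embed paths inside a larger string using `@{...}@` (like the `Authorization` header and `full_name`). The sketch below shows one way such inline placeholders could be substituted. It is only an illustration, not JsonBatch's actual code: the class `InlinePlaceholderSketch` and its `resolve` method are hypothetical, and a simple lookup map stands in for real JSON path evaluation against the collected requests and responses.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Hypothetical illustration only; not part of JsonBatch.
public class InlinePlaceholderSketch {

    // Matches @{ ... }@ and captures the path between the markers.
    private static final Pattern INLINE = Pattern.compile("@\\{(.+?)\\}@");

    // Replaces every @{path}@ with the value registered for that path.
    // Unknown paths are replaced with an empty string (an arbitrary choice).
    public static String resolve(String template, Map<String, String> valuesByPath) {
        Matcher matcher = INLINE.matcher(template);
        StringBuffer out = new StringBuffer();
        while (matcher.find()) {
            String value = valuesByPath.getOrDefault(matcher.group(1), "");
            matcher.appendReplacement(out, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, String> values = new HashMap<>();
        values.put("$.original.body.token", "abc123");
        // prints: Bearer abc123
        System.out.println(resolve("Bearer @{$.original.body.token}@", values));
    }
}
```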

I ran a performance test on both approaches. Below is the result when running multiple requests:

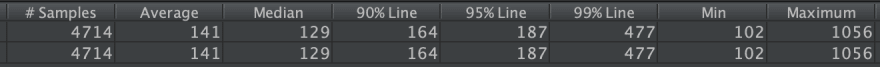

And next is the result when running a batch request:

As you can see, the batch request took ~141 ms on average, while the multiple-request approach took ~369 ms, so the batch call was roughly 2.6 times faster.

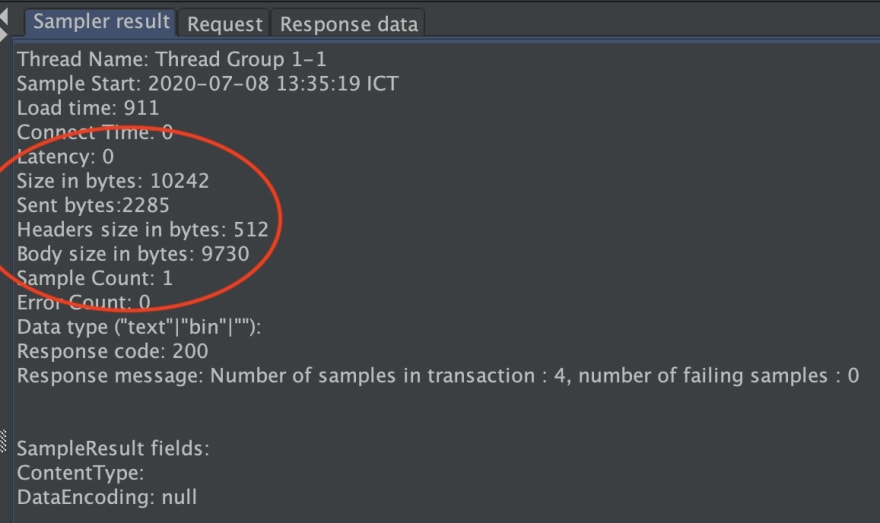

Next, let’s look at the size of the response. Below is the response size when running multiple requests:

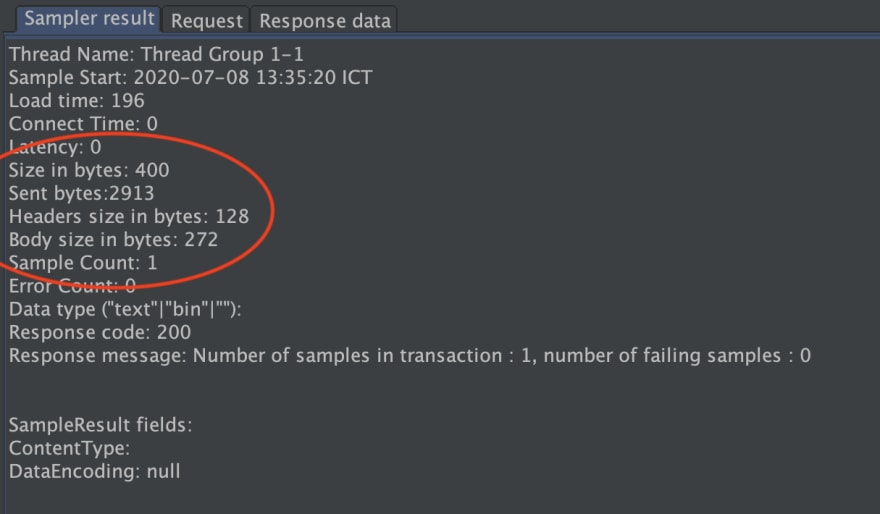

And here is the response size when running a batch request:

By stripping all the unnecessary data out of the response, we are able to reduce the response size by more than 30 times (from 9730 to 272).
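Most of that saving comes from keeping only the fields the client asked for, as the `wallets` entry in the template does with `id`, `balance` and `currency`. As a rough sketch of that idea in plain Java (not the library's code; `FieldProjectionSketch`, `project`, and the sample wallet fields `created_at` and `owner_note` are invented for illustration):

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration only; not part of JsonBatch.
public class FieldProjectionSketch {

    // Returns a copy of each item that keeps only the requested keys,
    // in the order the keys were requested.
    public static List<Map<String, Object>> project(List<Map<String, Object>> items,
                                                    List<String> keys) {
        List<Map<String, Object>> result = new ArrayList<>();
        for (Map<String, Object> item : items) {
            Map<String, Object> trimmed = new LinkedHashMap<>();
            for (String key : keys) {
                if (item.containsKey(key)) {
                    trimmed.put(key, item.get(key));
                }
            }
            result.add(trimmed);
        }
        return result;
    }

    public static void main(String[] args) {
        // Made-up wallet with more fields than the client needs.
        Map<String, Object> wallet = new LinkedHashMap<>();
        wallet.put("id", 1);
        wallet.put("balance", 250);
        wallet.put("currency", "USD");
        wallet.put("created_at", "2020-01-01");
        wallet.put("owner_note", "internal only");

        List<Map<String, Object>> trimmed = project(
                Collections.singletonList(wallet),
                Arrays.asList("id", "balance", "currency"));
        System.out.println(trimmed);
    }
}
```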

Conclusion

As you can see, with a Batch API we can achieve almost the same result as GraphQL without the limitation of a predefined schema.

Originally published at rey5137.com
