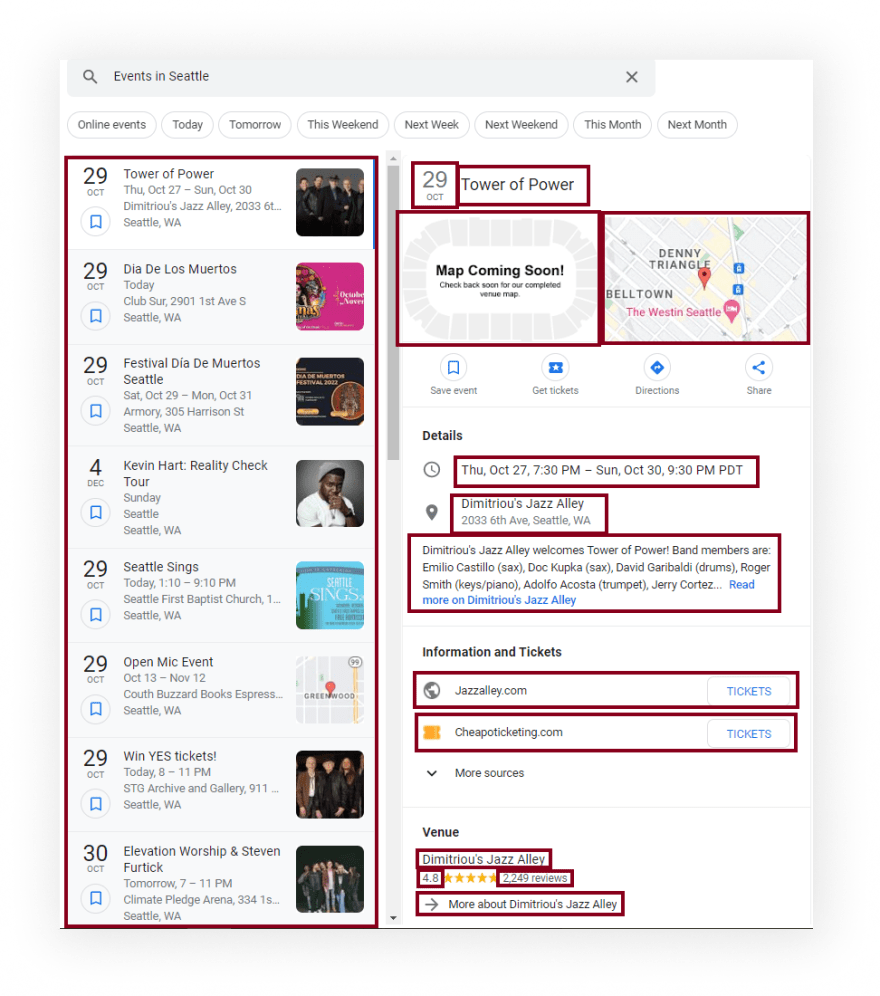

What will be scraped

Full code

If you don't need an explanation, have a look at the full code example in the online IDE

const cheerio = require("cheerio");

const axios = require("axios");

const searchString = "Events in Seattle"; // what we want to search

const AXIOS_OPTIONS = {

headers: {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36",

}, // adding the User-Agent header as one way to prevent the request from being blocked

params: {

q: searchString, // our search string (axios URL-encodes it for us)

hl: "en", // parameter defines the language to use for the Google search

ibp: "htl;events", // parameter defines the use of Google Events results

},

};

function getResultsFromPage() {

return axios.get("https://www.google.com/search", AXIOS_OPTIONS).then(function ({ data }) {

let $ = cheerio.load(data);

if (!$("li[data-encoded-docid]").length) return null;

const imagesPattern = /var _u='(?<url>[^']+)';var _i='(?<id>[^']+)/gm; //https://regex101.com/r/qBLEPN/1

const images = [...data.matchAll(imagesPattern)].map(({ groups }) => ({

id: groups.id,

url: decodeURIComponent(groups.url.replaceAll("\\x", "%")),

}));

return Array.from($("li[data-encoded-docid]")).map((el) => ({

title: $(el).find(".dEuIWb").text(),

date: {

startDate: `${$(el).find(".FTUoSb").text()} ${$(el).find(".omoMNe").text()}`,

when: $(el).find(".Gkoz3").text(),

},

address: Array.from($(el).find(".ov85De span")).map((el) => $(el).text()),

link: $(el).find(".zTH3xc").attr("href"),

eventLocationMap: {

image: `https://www.google.com${$(el).find(".lu_vs").attr("data-bsrc")}`,

link: `https://www.google.com${$(el).find(".ozQmAd").attr("data-url")}`,

},

description: $(el).find(".PVlUWc").text(),

ticketInfo: Array.from($(el).find(".RLN0we[jsname='CzizI'] div[data-domain]")).map((el) => ({

source: $(el).attr("data-domain"),

link: $(el).find(".SKIyM").attr("href"),

linkType: $(el).find(".uaYYHd").text(),

})),

venue: {

name: $(el).find(".RVclrc").text(),

rating: parseFloat($(el).find(".UIHjI").text()),

reviews: parseInt($(el).find(".z5jxId").text().replaceAll(",", ""), 10), // strip every thousands separator, parse as base 10

link: `https://www.google.com${$(el).find(".pzNwRe a").attr("href")}`,

},

thumbnail: images.find((innerEl) => innerEl.id === $(el).find(".H3ReNc .YQ4gaf").attr("id"))?.url,

image: images.find((innerEl) => innerEl.id === $(el).find(".XiXcOd .YQ4gaf").attr("id"))?.url,

}));

});

}

async function getGoogleEventsResults() {

const events = [];

while (true) {

const resultFromPage = await getResultsFromPage();

if (resultFromPage) {

events.push(...resultFromPage);

AXIOS_OPTIONS.params.start = (AXIOS_OPTIONS.params.start ?? 0) + 10; // offset of the next page

} else break;

}

return events;

}

getGoogleEventsResults().then((result) => console.dir(result, { depth: null }));

Preparation

First, we need to create a Node.js* project and add two npm packages: cheerio, to parse parts of the HTML markup, and axios, to make requests to a website.

To do this, open the command line in the project directory and enter:

$ npm init -y

And then:

$ npm i cheerio axios

*If you don't have Node.js installed, you can download it from nodejs.org and follow the installation documentation.

Process

First of all, we need to extract data from HTML elements. Finding the right CSS selectors is fairly easy with the SelectorGadget Chrome extension, which lets us grab CSS selectors by clicking on the desired element in the browser. However, it does not always work perfectly, especially when the website relies heavily on JavaScript.

We have a dedicated Web Scraping with CSS Selectors blog post at SerpApi if you want to know a little more about them.

The GIF below illustrates the approach of selecting different parts of the results.

Code explanation

Declare constants for the cheerio and axios libraries:

const cheerio = require("cheerio");

const axios = require("axios");

Next, we write what we want to search for and the request options: HTTP headers with a User-Agent, which makes the request look like a "real" user visit, and the parameters needed to make the request:

const searchString = "Events in Seattle"; // what we want to search

const AXIOS_OPTIONS = {

headers: {

"User-Agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/101.0.4951.64 Safari/537.36",

}, // adding the User-Agent header as one way to prevent the request from being blocked

params: {

q: searchString, // our search string (axios URL-encodes it for us)

hl: "en", // parameter defines the language to use for the Google search

ibp: "htl;events", // parameter defines the use of Google Events results

},

};

📌Note: The default axios User-Agent is axios/<axios_version>, so websites can tell that a script is sending the request and might block it. Check what your user-agent is.
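To get a feel for the URL these options produce, the params can be serialized by hand. The sketch below uses Node's built-in URLSearchParams as a stand-in for axios's own serializer, so the exact escaping may differ slightly from the real request. buildSearchUrl is a hypothetical helper and not part of the scraper.

```javascript
// Hypothetical helper: approximates how the params end up in the query string.
// Assumption: URLSearchParams escaping is close to, but not necessarily
// identical to, what axios actually sends.
function buildSearchUrl(params) {
  const query = new URLSearchParams(params).toString();
  return `https://www.google.com/search?${query}`;
}

const exampleUrl = buildSearchUrl({
  q: "Events in Seattle", // spaces are escaped for us
  hl: "en",
  ibp: "htl;events",
});

console.log(exampleUrl);
```

Printing the URL this way is a quick check that the search string and the ibp parameter are escaped rather than sent raw.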

Next, we write a function that makes the request and returns the data received from the page. The axios response has a data key, which we destructure and parse with cheerio:

function getResultsFromPage() {

return axios

.get("https://www.google.com/search", AXIOS_OPTIONS)

.then(function ({ data }) {

let $ = cheerio.load(data);

...

})

}

Next, we check whether the page contains any "events" results; if it doesn't, we return null. We do that to stop our scraper when there are no more pages left:

if (!$("li[data-encoded-docid]").length) return null;

Then, we need to get the image data from the script tags, because when the page loads, thumbnails and images are 1x1 px placeholders, and the real thumbnails and images are set by JavaScript in the browser.

First, we define imagesPattern; then, using spread syntax, we make an array from the iterator of matches returned by the matchAll method.

Next, we take the match results and make objects with the image id and url. To get a valid url, we replace every "\x" sequence with "%" (using the replaceAll method) and decode the result (using the decodeURIComponent method). These objects make up the images array:

//https://regex101.com/r/qBLEPN/1

const imagesPattern = /var _u='(?<url>[^']+)';var _i='(?<id>[^']+)/gm;

const images = [...data.matchAll(imagesPattern)].map(({ groups }) => ({

id: groups.id,

url: decodeURIComponent(groups.url.replaceAll("\\x", "%")),

}));
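To see the decoding step in isolation, here is a minimal sketch that runs the same pattern over a made-up string. The sample markup, the example.com URL, and the dimg_1 id are assumptions for illustration only; the real page content will differ.

```javascript
// Made-up markup that mimics the inline script format the pattern expects.
const sampleData =
  "<script>var _u='https://example.com/images?q\\x3dtbn:ABC\\x26s\\x3d10';var _i='dimg_1';</script>";

const imagesPattern = /var _u='(?<url>[^']+)';var _i='(?<id>[^']+)/gm;

const sampleImages = [...sampleData.matchAll(imagesPattern)].map(({ groups }) => ({
  id: groups.id,
  // "\x3d" becomes "%3d", which decodeURIComponent then turns into "="
  url: decodeURIComponent(groups.url.replaceAll("\\x", "%")),
}));

console.log(sampleImages);
```

The id is what later ties each decoded url back to the placeholder element in the markup.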

Next, we get the different parts of each result using the following methods:

title: $(el).find(".dEuIWb").text(),

date: {

startDate: `${$(el).find(".FTUoSb").text()} ${$(el).find(".omoMNe").text()}`, // kept on one line so no newlines end up in the string

when: $(el).find(".Gkoz3").text(),

},

address: Array.from($(el)

.find(".ov85De span"))

.map((el) => $(el).text()),

link: $(el).find(".zTH3xc").attr("href"),

eventLocationMap: {

image:

`https://www.google.com${$(el).find(".lu_vs").attr("data-bsrc")}`,

link:

`https://www.google.com${$(el).find(".ozQmAd").attr("data-url")}`,

},

description: $(el).find(".PVlUWc").text(),

ticketInfo: Array.from($(el).find(".RLN0we[jsname='CzizI'] div[data-domain]"))

.map((el) => ({

source: $(el).attr("data-domain"),

link: $(el).find(".SKIyM").attr("href"),

linkType: $(el).find(".uaYYHd").text(),

})),

venue: {

name: $(el).find(".RVclrc").text(),

rating: parseFloat($(el).find(".UIHjI").text()),

reviews: parseInt($(el).find(".z5jxId").text().replaceAll(",", ""), 10), // strip every thousands separator, parse as base 10

link: `https://www.google.com${$(el).find(".pzNwRe a").attr("href")}`,

},

thumbnail: images

.find((innerEl) => innerEl.id === $(el).find(".H3ReNc .YQ4gaf").attr("id"))

?.url,

image: images

.find((innerEl) => innerEl.id === $(el).find(".XiXcOd .YQ4gaf").attr("id"))

?.url,
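One thing to watch for with these extractors: when an element is missing, cheerio's text() gives an empty string and attr() gives undefined, so parseInt yields NaN and the template literals yield strings like "https://www.google.comundefined". The sketch below shows one possible way to guard against that. Both helpers are hypothetical and not part of the original scraper.

```javascript
// Hypothetical helpers, not part of the original scraper.

// "2,249" -> 2249; returns null instead of NaN when no digits are present
function parseCount(text) {
  const digits = String(text ?? "").replace(/\D/g, "");
  return digits ? parseInt(digits, 10) : null;
}

// Prefixes a relative Google path; returns null when the attribute is missing
function toGoogleUrl(path) {
  return path ? `https://www.google.com${path}` : null;
}

console.log(parseCount("2,249"));
console.log(toGoogleUrl(undefined));
```

Whether null is the right placeholder depends on how the results are consumed; the point is only to make missing data explicit.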

Next, we write a function in which we get the results from each page (using a while loop), check whether results are present, add them to the events array (push method), and set a new start value in the request params (the offset from which we want to see results on the next page).

When there are no more results on the page (else statement), we stop the loop and return the events array:

async function getGoogleEventsResults() {

const events = [];

while (true) {

const resultFromPage = await getResultsFromPage();

if (resultFromPage) {

events.push(...resultFromPage);

AXIOS_OPTIONS.params.start = (AXIOS_OPTIONS.params.start ?? 0) + 10; // offset of the next page

} else break;

}

return events;

}
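The loop above changes the shared AXIOS_OPTIONS object and keeps going until a page comes back empty. As an alternative sketch, the same idea can be written so that the offset is passed as an argument and the number of pages is capped. collectAllPages, the fetchPage callback, and the default maxPages of 20 are all assumptions for illustration, and the stub stands in for the real request so that nothing is sent over the network.

```javascript
// Hypothetical generic paginator: fetchPage(start) resolves to an array of
// results, or to null when the page has none.
async function collectAllPages(fetchPage, { step = 10, maxPages = 20 } = {}) {
  const results = [];
  for (let page = 0; page < maxPages; page++) {
    const pageResults = await fetchPage(page * step);
    if (!pageResults) break; // no more results
    results.push(...pageResults);
  }
  return results;
}

// Stub that stands in for the real request: two pages, then nothing.
const fakePages = { 0: ["event 1", "event 2"], 10: ["event 3"] };
const fakeFetchPage = async (start) => fakePages[start] ?? null;

const allPagesPromise = collectAllPages(fakeFetchPage);
allPagesPromise.then((events) => console.log(events));
```

In the scraper, fetchPage could wrap getResultsFromPage with the start value merged into the request params.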

Now we can launch our parser:

$ node YOUR_FILE_NAME # YOUR_FILE_NAME is the name of your .js file

Output

[

{

"title":"Tower of Power",

"date":{

"startDate":"29 Oct",

"when":"Thu, Oct 27, 7:30 PM – Sun, Oct 30, 9:30 PM PDT"

},

"address":[

"Dimitriou's Jazz Alley",

"2033 6th Ave, Seattle, WA"

],

"link":"https://www.jazzalley.com/www-home/artist.jsp?shownum=6377",

"eventLocationMap":{

"image":"https://www.google.com/maps/vt/data=OmPOWRffaJ5QzQJzj_uJm9rhXFYS6C1W0lRoW6r_BArGhlrBB-5S3BDjaSpWtzFtkC2hXFY3JZP_L5gkPrVhFrhYgkYNXe4IWGdzx4Qz3bqn0IBRo6I",

"link":"https://www.google.com/maps/place//data=!4m2!3m1!1s0x5490154b9ece636b:0x67dcbe766e371a09?sa=X&hl=en"

},

"description":"Dimitriou's Jazz Alley welcomes Tower of Power! Band members are: Emilio Castillo (sax), Doc Kupka (sax), David Garibaldi (drums), Roger Smith (keys/piano), Adolfo Acosta (trumpet), Jerry Cortez...",

"ticketInfo":[

{

"source":"Jazzalley.com",

"link":"https://www.jazzalley.com/www-home/artist.jsp?shownum=6377",

"linkType":"TICKETS"

},

{

"source":"Cheapoticketing.com",

"link":"https://www.cheapoticketing.com/events/5307844/tower-of-power-tickets",

"linkType":"TICKETS"

},

{

"source":"Feefreeticket.com",

"link":"https://www.feefreeticket.com/tower-of-power-dimitrious-jazz-alley/5307844",

"linkType":"TICKETS"

},

{

"source":"Visit Seattle",

"link":"https://visitseattle.org/events/tower-of-power-3/",

"linkType":"MORE INFO"

},

{

"source":"Facebook",

"link":"https://m.facebook.com/events/612485650587298/",

"linkType":"MORE INFO"

}

],

"venue":{

"name":"Dimitriou's Jazz Alley",

"rating":4.8,

"reviews":2249,

"link":"https://www.google.com/search?hl=en&q=Dimitriou%27s+Jazz+Alley&ludocid=7484066096647445001&ibp=gwp%3B0,7"

},

"thumbnail":"https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcRmK8jQnWyhw2p5LAL5XEEQxRwjd7Gpyc9FgPOod4DVzL1jLAxdTuPUSoA&s",

"image":"https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcT8lisCKUpEb3Nf0-dvbWup-FQFFJS6aEbH0ST6YFMnANDwTCcpWavkGmlcEw&s=10"

},

... and other events results

]
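Once the results are collected, they are plain objects and can be processed like any other array. As a small sketch, the helper below pulls out the sources that sell tickets for one event. The sample is a trimmed copy of the output above, getTicketSellers is a hypothetical name, and the comparison ignores letter case as a precaution.

```javascript
// Trimmed copy of one event from the output above.
const sampleEvent = {
  title: "Tower of Power",
  ticketInfo: [
    {
      source: "Jazzalley.com",
      link: "https://www.jazzalley.com/www-home/artist.jsp?shownum=6377",
      linkType: "TICKETS",
    },
    {
      source: "Visit Seattle",
      link: "https://visitseattle.org/events/tower-of-power-3/",
      linkType: "MORE INFO",
    },
  ],
};

// Hypothetical helper: keeps only the entries that sell tickets.
function getTicketSellers(event) {
  return (event.ticketInfo ?? [])
    .filter(({ linkType }) => linkType.toLowerCase() === "tickets")
    .map(({ source }) => source);
}

console.log(getTicketSellers(sampleEvent));
```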

Using Google Events API from SerpApi

This section compares the DIY solution with our solution.

The biggest difference is that you don't need to create the parser from scratch and maintain it.

There's also a chance that Google will block the request at some point. We handle that on our backend, so there's no need to figure out how to do it yourself or which CAPTCHA solver or proxy provider to use.

First, we need to install google-search-results-nodejs:

npm i google-search-results-nodejs

Here's the full code example, if you don't need an explanation:

const SerpApi = require("google-search-results-nodejs");

const search = new SerpApi.GoogleSearch(process.env.API_KEY); //your API key from serpapi.com

const searchQuery = "Events in Seattle";

const params = {

q: searchQuery, // what we want to search

engine: "google_events", // search engine

hl: "en", // parameter defines the language to use for the Google search

};

const getJson = () => {

return new Promise((resolve) => {

search.json(params, resolve);

});

};

const getResults = async () => {

const eventsResults = [];

while (true) {

const json = await getJson();

if (json.events_results) {

eventsResults.push(...json.events_results);

params.start = (params.start ?? 0) + 10; // offset of the next page

} else break;

}

return eventsResults;

};

getResults().then((result) => console.dir(result, { depth: null }));

Code explanation

First, we need to declare SerpApi from the google-search-results-nodejs library and define a new search instance with your API key from SerpApi:

const SerpApi = require("google-search-results-nodejs");

const search = new SerpApi.GoogleSearch(process.env.API_KEY); // your API key from serpapi.com

Next, we write what we want to search (searchQuery constant) and the necessary parameters for making a request:

const searchQuery = "Events in Seattle";

const params = {

q: searchQuery, // what we want to search

engine: "google_events", // search engine

hl: "en", // parameter defines the language to use for the Google search

};

Next, we wrap the search method from the SerpApi library in a promise to further work with the search results:

const getJson = () => {

return new Promise((resolve) => {

search.json(params, resolve);

});

};
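The wrapping pattern itself does not depend on SerpApi. As a rough sketch, here it is applied to a stand-in object whose json method reports its result through a callback. fakeSearch and getJsonFor are hypothetical names, and no request is made. Like getJson above, this wrapper only ever resolves, so error handling would have to be added separately.

```javascript
// Hypothetical stand-in for a client whose json(params, callback) method
// reports its result through a callback; no network request is made.
const fakeSearch = {
  json(params, callback) {
    setTimeout(() => callback({ events_results: [{ title: `Result for ${params.q}` }] }), 0);
  },
};

// Same wrapping idea as getJson, but with the client and params passed in.
const getJsonFor = (client, params) =>
  new Promise((resolve) => {
    client.json(params, resolve);
  });

const wrappedPromise = getJsonFor(fakeSearch, { q: "Events in Seattle" });
wrappedPromise.then((json) => console.log(json.events_results));
```

Passing the params as an argument instead of reading a shared object makes each call independent of the others.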

And finally, we declare the getResults function, which gets data from each page and returns it:

const getResults = async () => {

...

};

In this function, we get the json with results from each page (using a while loop), check whether events_results are present, add them to the eventsResults array (push method), and set a new start value in the request params (the offset from which we want to see results on the next page).

When there are no more results on the page (else statement), we stop the loop and return the eventsResults array:

const eventsResults = [];

while (true) {

const json = await getJson();

if (json.events_results) {

eventsResults.push(...json.events_results);

params.start = (params.start ?? 0) + 10; // offset of the next page

} else break;

}

return eventsResults;

Afterwards, we run the getResults function and print all the received information in the console with the console.dir method, which accepts an options object that changes the default output settings:

getResults().then((result) => console.dir(result, { depth: null }));
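To see why depth: null matters, the sketch below compares the default formatting with the full one. It uses util.inspect, which Node's console.dir relies on for formatting, and a made-up nested object as sample data.

```javascript
const util = require("util");

// Made-up nested object, deep enough to hit the default depth limit.
const nested = { event: { venue: { location: { city: "Seattle" } } } };

// The default depth (2) collapses deeper levels into [Object]
const truncated = util.inspect(nested);
// depth: null prints every level, like console.dir(result, { depth: null })
const full = util.inspect(nested, { depth: null });

console.log(truncated);
console.log(full);
```

Without the option, deeply nested fields such as the ticket and venue data could be hidden behind [Object] or [Array] in the console.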

Output

[

{

"title":"Elevation Worship & Steven Furtick",

"date":{

"start_date":"Oct 30",

"when":"Sun, Oct 30, 7 – 11 PM"

},

"address":[

"Climate Pledge Arena, 334 1st Ave N",

"Seattle, WA"

],

"link":"https://www.songkick.com/concerts/40548998-elevation-worship-at-climate-pledge-arena",

"event_location_map":{

"image":"https://www.google.com/maps/vt/data=TSHcoPf0jiU-kXXoAgZTWPVPPnQjq7wHkR9cBuWJM6kQ7JYhbWG5RIkTWU09eeFOCznkgTEvgAL_bXHHUtRRfSJtyYlmFSCYRX4TS-sWW9T5FQajwP0",

"link":"https://www.google.com/maps/place//data=!4m2!3m1!1s0x5490154471be8ed3:0xde04af6753ca2e27?sa=X&hl=en",

"serpapi_link":"https://serpapi.com/search.json?data=%214m2%213m1%211s0x5490154471be8ed3%3A0xde04af6753ca2e27&engine=google_maps&google_domain=google.com&hl=en&q=Events+in+Seatle&type=place"

},

"description":"Get ready for a full worship experience as Steven Furtick preaches and Elevation Worship leads some of their hit songs including \"See A Victory\", \"Great Are You Lord\", new hit song \"Same God\", and...",

"ticket_info":[

{

"source":"Ticketmaster.com",

"link":"https://ticketmaster.evyy.net/c/253185/264167/4272?u=https%3A%2F%2Fwww.ticketmaster.com%2Felevation-nights-tour-seattle-washington-10-30-2022%2Fevent%2F0F005CF4B5353529",

"link_type":"tickets"

},

{

"source":"Festivaly.eu",

"link":"https://festivaly.eu/en/elevation-nights-tour-climate-pledge-arena-seattle-2022",

"link_type":"tickets"

},

{

"source":"Rateyourseats.com",

"link":"https://www.rateyourseats.com/mobile/tickets/events/elevation-nights-tour-tickets-seattle-climate-pledge-arena-october-30-2022-4035222",

"link_type":"tickets"

},

{

"source":"Songkick",

"link":"https://www.songkick.com/concerts/40548998-elevation-worship-at-climate-pledge-arena",

"link_type":"more info"

},

{

"source":"Live Nation",

"link":"https://www.livenation.com/event/vvG1HZ923guKft/elevation-worship-steven-furtick",

"link_type":"more info"

}

],

"venue":{

"name":"Climate Pledge Arena",

"rating":4.5,

"reviews":4285,

"link":"https://www.google.com/search?hl=en&q=Climate+Pledge+Arena&ludocid=15998104634649095719&ibp=gwp%3B0,7"

},

"thumbnail":"https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcRSLAz2tc5y7qinf8ohwnJF2Nj61Je4n_1XPAXwADU&s",

"image":"https://encrypted-tbn0.gstatic.com/images?q=tbn:ANd9GcR5D0FqE7I45LfG6t1JoHy3xzdCScBAzbyh0AR1WUX2q5Xq&s=10"

},

... and other events results

]

Links

If you want to see some projects made with SerpApi, write me a message.

Add a Feature Request💫 or a Bug🐞
