Introduction:

In this article, we will explore how to set up a load balancing infrastructure using Ansible on three Azure instances. The manager instance will serve as the load balancer, while the other two instances will act as web servers. To keep things modular and easy to manage, we will split the work into several playbooks and tie them together in a single master playbook using Ansible's import_playbook feature.

Setting Up Azure Instances:

To begin, we provision three Azure instances: one as the manager and the other two as web servers. The manager instance will be responsible for handling the load balancing, while the web server instances will serve the application.

Installing Ansible on the Manager:

Once the instances are up and running, we install Ansible on the manager instance. Ansible provides a simple and efficient way to automate the configuration and management of our infrastructure.

Ansible is available from the Extra Packages for Enterprise Linux (EPEL) repository, so we enable EPEL first and then install Ansible:

sudo yum install epel-release

sudo yum install ansible

Configuring the Ansible Inventory:

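Next, we configure the Ansible inventory so that Ansible knows which hosts to target for each task. The playbooks below refer to the two web servers through a group named nodes. Because the update playbook targets all three instances, the manager must be listed in the inventory as well.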
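The article does not show its inventory file. The following is a minimal sketch in Ansible's YAML inventory format. The file name inventory.yml, the host names manager, web1 and web2, the lb group, the user azureuser and the private IPs are all hypothetical placeholders. Only the nodes group name comes from the playbooks in this article.

```yaml
# inventory.yml: hypothetical host names, IPs and user; replace with your own Azure VMs
all:
  children:
    lb:
      hosts:
        manager:
          ansible_connection: local   # Ansible runs on the manager itself, no SSH needed
    nodes:                            # group name used by setup-app.yml and the LB template
      hosts:
        web1:
          ansible_host: 10.0.0.5      # placeholder private IP of web server 1
        web2:
          ansible_host: 10.0.0.6      # placeholder private IP of web server 2
      vars:
        ansible_user: azureuser       # hypothetical admin user created with the VMs
```

The load balancer template reads the ansible_host value of each host in nodes. Use addresses the manager can actually reach, for example the VMs' private IPs inside the same virtual network.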

Organizing Playbooks:

To keep our playbooks organized and modular, we create a master playbook named "all-playbooks.yml." This file contains no tasks of its own. It imports the other playbooks with Ansible's import_playbook keyword, and they run in the order they are listed.

# all-playbooks.yml
---
- import_playbook: update.yml
- import_playbook: install-services.yml
- import_playbook: setup-app.yml
- import_playbook: setup-lb.yml

Package Management:

In our "all-playbooks.yml" file, the first imported playbook is "update.yml." This playbook is responsible for updating the system packages on all three instances. It ensures that our instances have the latest updates before proceeding with the configuration.

# update.yml
---
- name: updating nodes
  hosts: all
  become: true
  tasks:
    - name: updating
      yum:
        name: '*'
        state: latest

Installing Apache and PHP:

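Next, "all-playbooks.yml" imports the "install-services.yml" playbook. This playbook installs Apache and PHP on all three instances and makes sure Apache is running and enabled at boot. The web servers need Apache and PHP to serve the application, and the manager needs Apache to act as the load balancer. The manager does not need the application itself, which is why the next playbook uploads it only to the web servers.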

# install-services.yml
---
- hosts: all
  become: true
  tasks:
    - name: install apache and php
      yum:
        name:
          - httpd
          - php
        state: present

    - name: ensure apache is started and enabled at boot
      service:
        name: httpd
        state: started
        enabled: yes

Uploading Application Files:

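The "setup-app.yml" playbook then uploads the application file "index.php" to the two web servers (the nodes group). To restart Apache only when that file actually changes, the copy task notifies a handler. Handlers run at the end of the play, and only if a task that notifies them reported a change.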

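# setup-app.yml
---
- hosts: nodes
  become: true
  tasks:
    - name: upload application file
      copy:
        src: ../index.php
        dest: /var/www/html/index.php
        mode: '0755'   # quoted so YAML keeps it as an octal mode string
      notify: restart apache

  handlers:            # must be "handlers", not "handler"
    - name: restart apache
      service:
        name: httpd
        state: restarted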

Load Balancer Configuration:

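To set up the manager as a load balancer, we use a Jinja template, which Ansible fills in with data from the inventory. In the "setup-lb.yml" playbook, the template loops over the hosts in the nodes group and writes one BalancerMember line per web server. These lines go inside an Apache Proxy section with a balancer:// URL, which is provided by mod_proxy_balancer. This configuration lets the manager distribute incoming requests across the two web server instances. With lbmethod=byrequests, the requests are shared between them by count.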

Here is the Jinja template:

ProxyRequests Off

<Proxy balancer://webcluster>
{% for host in groups['nodes'] %}
    BalancerMember http://{{ hostvars[host]['ansible_host'] }}
{% endfor %}
    ProxySet lbmethod=byrequests
</Proxy>

# Optional: status and management page for the balancer
<Location /balancer-manager>
    SetHandler balancer-manager
</Location>

# Keep the manager page out of the proxy, then send everything else to the cluster
ProxyPass /balancer-manager !
ProxyPass / balancer://webcluster/
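The contents of "setup-lb.yml" itself are not shown. What follows is a minimal sketch, not the author's file. It assumes the template is saved as templates/lb.conf.j2 next to the playbook, that the manager sits in an inventory group named lb, and that the rendered file goes to /etc/httpd/conf.d/lb.conf. All three names are hypothetical. The playbook renders the template with the template module and restarts Apache through a handler.

```yaml
# setup-lb.yml: sketch; the lb group, lb.conf.j2 and lb.conf names are assumptions
---
- hosts: lb
  become: true
  tasks:
    - name: render the load balancer config from the Jinja template
      template:
        src: lb.conf.j2                     # looked up in templates/ next to this playbook
        dest: /etc/httpd/conf.d/lb.conf     # httpd on RHEL/CentOS loads conf.d/*.conf
        mode: '0644'
      notify: restart apache

  handlers:
    - name: restart apache
      service:
        name: httpd
        state: restarted
```

The template can look up the web servers' addresses through hostvars even though this play only targets the manager, because inventory variables are available for every host. On RHEL/CentOS with SELinux enforcing, Apache may also need the httpd_can_network_connect boolean enabled before it is allowed to proxy to the web servers.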

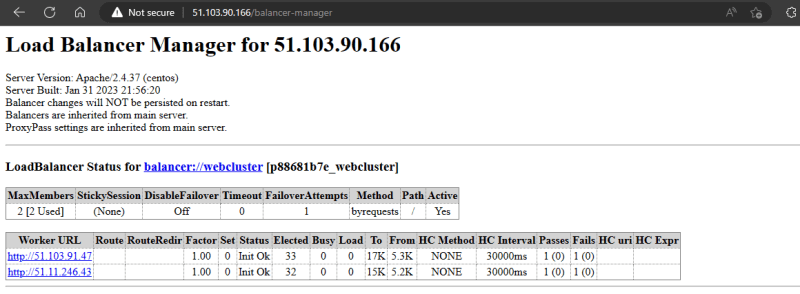

Now, let's take a look at the result:
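Besides checking in a browser, the balancer can be exercised from the command line with a small extra playbook. This is a hypothetical sketch and not part of all-playbooks.yml. The file name check-lb.yml and the lb group are assumptions, and it uses Ansible's uri and debug modules. Because the tasks run on the manager itself, http://localhost/ goes through the load balancer. If index.php prints something host-specific, such as the server's hostname, the responses should be spread across both web servers.

```yaml
# check-lb.yml: hypothetical verification playbook, run against the manager
---
- hosts: lb
  gather_facts: false
  tasks:
    - name: send a few requests through the load balancer
      uri:
        url: "http://localhost/"   # the task runs on the manager, so this hits Apache's proxy
        return_content: true
      register: lb_responses
      loop: "{{ range(4) | list }}"

    - name: show what each request returned
      debug:
        msg: "{{ item.content }}"
      loop: "{{ lb_responses.results }}"
      loop_control:
        label: "request {{ item.item }}"
```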

Conclusion:

By following the steps outlined in this article, we have configured a load balancing infrastructure using Ansible and three Azure instances. The manager instance acts as the load balancer, while the other two instances serve as web servers. Tying the playbooks together through a single master playbook with import_playbook keeps the setup modular and easier to maintain. Because the balancer configuration is generated from the nodes group, adding another web server only requires adding it to the inventory and rerunning the playbooks. The manager itself is still a single point of failure, so full high availability would also require redundancy at the load balancer.
