Introduction

I’m currently working on a video-processing project that requires lots of computational power. To run it, I’ll need to spin up GPU-powered EC2 instances in AWS, process the videos and shut the instances down afterwards to keep costs low. I need a way to store the videos and trigger the processing service as soon as they are uploaded to S3.

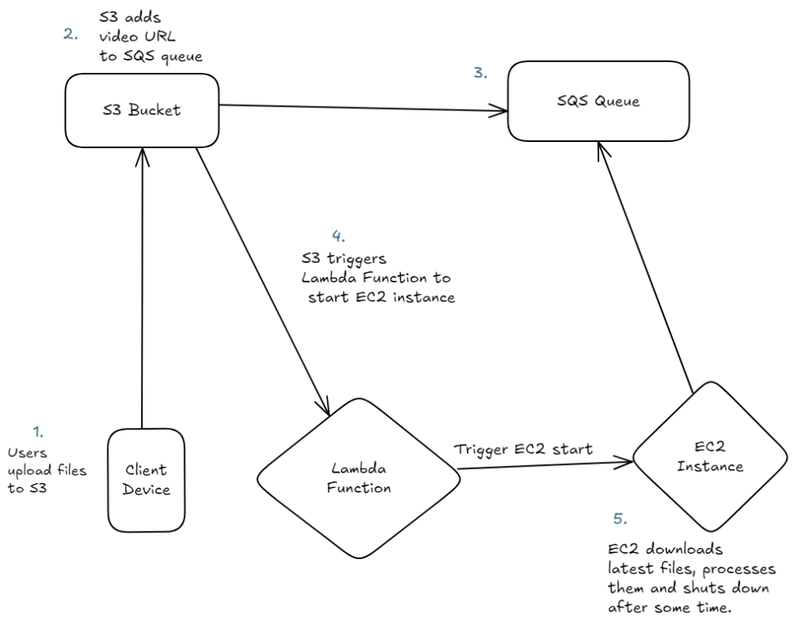

My solution to the problem is to use S3 for storage and have it send out notifications to AWS Lambda and Amazon Simple Queue Service (SQS) whenever new videos are uploaded. S3 will ping Lambda to start an EC2 instance to process the videos if one isn’t already running. Once started, the EC2 instance will download the videos from S3, process them and upload the results back to the bucket. Eventually, I’ll set this up to handle multiple videos concurrently and scale automatically based on the number of videos that need processing.

This diagram explains what I’m working towards:

In this post, I’ll share how I solved steps 1 to 3 of the image above: sending notifications to an SQS queue whenever a file is uploaded to S3.

Step 1: Creating an S3 Bucket

S3 plays a crucial role in this architecture: it stores user uploads and notifies other services about them. If you’d like to learn more about it, read this article I wrote covering what it is and how it works. To start, I created a standard S3 bucket and gave it a descriptive name, e.g. video-processing-bucket. Next, I created an SQS queue and configured the bucket to send notifications to it whenever new files are uploaded to a specific directory in the bucket. I’ll cover how to do that shortly; first, let me show you how to set up and configure an SQS queue.

Step 2: Creating and configuring SQS

SQS is a managed cloud service that helps different applications communicate smoothly by passing messages between them. It is similar to RabbitMQ and Apache Kafka. It makes sure that messages don’t get lost and allows services to process the messages at their own pace, even if they’re temporarily offline.
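To make the "process at their own pace" part concrete, here is a minimal sketch of what a consumer could look like. It is not the code from my project: drain_queue and handler are hypothetical names, and I'm assuming sqs_client is a boto3 SQS client (for example boto3.client("sqs")).

```python
from typing import Any, Callable


def drain_queue(sqs_client: Any, queue_url: str, handler: Callable[[str], None]) -> int:
    """Handle messages until a poll comes back empty; return how many were handled.

    Assumption: sqs_client behaves like a boto3 SQS client.
    """
    handled = 0
    while True:
        response = sqs_client.receive_message(
            QueueUrl=queue_url,
            MaxNumberOfMessages=10,  # SQS returns at most 10 messages per call
            WaitTimeSeconds=20,      # long polling: wait up to 20 seconds for messages
        )
        messages = response.get("Messages", [])
        if not messages:
            return handled
        for message in messages:
            handler(message["Body"])
            # Delete only after the handler returns. If it raises, the message
            # stays in the queue and becomes visible again after its
            # visibility timeout, so another attempt can pick it up.
            sqs_client.delete_message(
                QueueUrl=queue_url,
                ReceiptHandle=message["ReceiptHandle"],
            )
            handled += 1
```

Because a message is only deleted once it has been handled, a consumer that crashes or goes offline doesn't lose work; the messages simply wait in the queue.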

SQS queues come in two flavours: Standard and FIFO. Standard queues offer at-least-once delivery with best-effort ordering, while FIFO (First In, First Out) queues preserve message order and deduplicate messages. I chose a Standard queue because it’s cheaper than FIFO and because I don’t need messages to be ordered. After creating the queue, I added the following access policy to it, replacing the queue ARN (Resource), the source bucket ARN (aws:SourceArn) and the bucket owner’s account ID (aws:SourceAccount):

    {
      "Version": "2012-10-17",
      "Id": "example-ID",
      "Statement": [
        {
          "Sid": "example-statement-ID",
          "Effect": "Allow",
          "Principal": {
            "Service": "s3.amazonaws.com"
          },
          "Action": [
            "SQS:SendMessage"
          ],
          "Resource": "SQS-queue-ARN",
          "Condition": {
            "ArnLike": {
              "aws:SourceArn": "arn:aws:s3:*:*:awsexamplebucket1"
            },
            "StringEquals": {
              "aws:SourceAccount": "bucket-owner-account-id"
            }
          }
        }
      ]
    }

This gives the specified bucket permission to send event notifications to this SQS Queue.
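I set the policy by hand in the console, but the same thing can be scripted. The sketch below is one way to do it, assuming boto3; build_queue_policy and attach_queue_policy are hypothetical helper names, and the ARNs and account ID you pass in are your own values.

```python
import json
from typing import Any


def build_queue_policy(queue_arn: str, bucket_arn: str, account_id: str) -> dict:
    """Build an SQS access policy that lets one S3 bucket send messages to one queue."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": ["SQS:SendMessage"],
                "Resource": queue_arn,
                "Condition": {
                    # Only notifications originating from this bucket...
                    "ArnLike": {"aws:SourceArn": bucket_arn},
                    # ...and only if the bucket belongs to this account.
                    "StringEquals": {"aws:SourceAccount": account_id},
                },
            }
        ],
    }


def attach_queue_policy(sqs_client: Any, queue_url: str, policy: dict) -> None:
    """Attach the policy to the queue.

    Assumption: sqs_client is a boto3 SQS client. The Policy attribute
    must be passed as a JSON string, not a dict.
    """
    sqs_client.set_queue_attributes(
        QueueUrl=queue_url,
        Attributes={"Policy": json.dumps(policy)},
    )
```

Keeping the builder separate from the AWS call makes it easy to print and review the policy before attaching it.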

Step 3: Configuring S3

Next, I enabled event notifications in the S3 bucket I created in Step 1. To enable event notifications in an S3 bucket, navigate to:

Buckets –> Select your bucket –> Properties –> Event notifications –> Create event notification.

In the Event notification menu, I specified a prefix to limit the notifications the queue receives. So, instead of enabling notifications for the entire bucket, I configured S3 to only send out notifications for file uploads to a specific directory in the bucket. When you set this up, S3 sends a test message to the queue to verify that it can send messages to it.
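For anyone who prefers code over the console, this is roughly what the same configuration might look like with boto3. Treat it as a sketch: the helper names are hypothetical, the "s3:ObjectCreated:*" event type is my assumption for "file uploads", and the prefix is whatever directory you want to watch.

```python
from typing import Any


def build_notification_config(queue_arn: str, prefix: str) -> dict:
    """Describe a notification that fires for new objects under one prefix."""
    return {
        "QueueConfigurations": [
            {
                "QueueArn": queue_arn,
                # Assumption: notify on every kind of object creation.
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [{"Name": "prefix", "Value": prefix}]
                    }
                },
            }
        ]
    }


def enable_notifications(s3_client: Any, bucket: str, config: dict) -> None:
    """Apply the configuration to the bucket.

    Assumption: s3_client is a boto3 S3 client. This call replaces the
    bucket's whole notification configuration, so any existing
    notifications need to be included in config as well.
    """
    s3_client.put_bucket_notification_configuration(
        Bucket=bucket,
        NotificationConfiguration=config,
    )
```

The queue's access policy has to be in place first, otherwise S3 can't deliver to the queue and the call is rejected.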

I uploaded some files to different directories in the bucket and confirmed that notification messages were only sent for the directory I had specified. Each notification message contains information about the uploaded file and its location, which I’ll need to process the files later.
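As a rough illustration of how that information can be pulled out of a message body, here is a small parser. extract_uploads is a hypothetical name; the field layout follows the S3 event notification format, in which object keys arrive URL-encoded (a space shows up as "+").

```python
import json
from typing import List, Tuple
from urllib.parse import unquote_plus


def extract_uploads(message_body: str) -> List[Tuple[str, str]]:
    """Return (bucket, key) pairs for every record in an S3 notification.

    Messages without a "Records" list, such as the test message S3 sends
    when notifications are first enabled, yield an empty list.
    """
    event = json.loads(message_body)
    uploads = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Keys are URL-encoded in the notification, so decode them
        # before using them to download the object.
        key = unquote_plus(record["s3"]["object"]["key"])
        uploads.append((bucket, key))
    return uploads
```

The processing service can feed each (bucket, key) pair straight into an S3 download.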

Conclusion and next steps

Steps 1 through 3 of my plan are done, and notifications are sent to SQS whenever files are uploaded to S3. The next thing for me to do is to set up a similar notification from S3 to trigger starting an EC2 instance. Once that’s done, I’ll write the video-processing code to run on the EC2 instance.
