Business Use Application/Relevance:

Banking apps, streaming services (like Netflix), music apps (like Spotify), and weather apps process millions of real-time events every day. How do they do it? Many of them rely on an event-streaming architecture such as Apache Kafka. This project simulates how a shopping app could process a large number of orders in a day without lag or breakdown.

Challenges I Faced and How I Solved Them:

- My applications kept going offline. I fixed this by starting each application in its own tmux session, which kept running when I refreshed the page or stepped away for 10 minutes.

- My warehouse application was not picking up my orders: the Kafka server configuration in my warehouse application was wrong. Once I fixed it, orders were picked up.

How do large companies handle massive website traffic without crashes or extreme slowness? Enter Apache Kafka. Kafka sits between applications, letting them send and receive messages in real time. Think of how millions of people get their Amazon packages, from order to delivery. How does that happen on the application side without incredible lag? Thank Kafka. Here I simulate a real-time, event-driven architecture by showing how multiple applications can function with a large user base. One example is how banks serve many clients through their apps, showing real-time account updates, logins, and fraud checks without significant lag. This architecture also shows how companies like Amazon handle millions of purchases, each triggered by an event, without crashing.
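To make the "Kafka sits between applications" idea concrete, here is a toy in-memory model in Python. It is not Kafka and uses no Kafka library; `TopicLog`, `append`, and `read_from` are names I made up for this sketch. It only illustrates the core idea: producers append events to a log, and each consumer reads at its own pace by remembering its own offset.

```python
from dataclasses import dataclass, field


@dataclass
class TopicLog:
    """Toy stand-in for a single-partition topic: an append-only list."""
    events: list = field(default_factory=list)

    def append(self, event: dict) -> int:
        """Producer side: add an event and return its offset."""
        self.events.append(event)
        return len(self.events) - 1

    def read_from(self, offset: int) -> list:
        """Consumer side: return every event at or after the given offset."""
        return self.events[offset:]


if __name__ == "__main__":
    log = TopicLog()
    # The shop keeps appending orders without waiting for the warehouse.
    for item in ("robot", "puzzle", "kite"):
        log.append({"item": item})

    # The warehouse reads later and remembers how far it has got.
    warehouse_offset = 0
    batch = log.read_from(warehouse_offset)
    warehouse_offset += len(batch)
    print(f"warehouse processed {len(batch)} orders, next offset {warehouse_offset}")
```

Because the shop never calls the warehouse directly, either side can slow down or restart without blocking the other, which is the property the rest of this project relies on.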

Project Steps:

Creating an EC2 Instance to Host Apache Kafka

- For simplicity and speed, I will use the EC2 console to create an instance with the following specifications: us-east-1, t2.medium, Ubuntu image:

- I will then launch my EC2 instance and connect to it over SSH using EC2 Instance Connect:

Setting Up Apache Kafka 3.0.0 (Scala 2.13) on the Kafka Server

- I will navigate to the Kafka website to find the download link. You can use any version that works for your setup: https://kafka.apache.org/downloads

- Under binary downloads, I will copy the link for the Scala 2.13 build

- I will then run the following commands in my terminal:

wget https://archive.apache.org/dist/kafka/3.0.0/kafka_2.13-3.0.0.tgz

tar -xvf kafka_2.13-3.0.0.tgz

- I will then install Java (Amazon Corretto), since Kafka requires Java. Follow the instructions for your operating system and CPU architecture. On Ubuntu I used the following commands:

# Navigate to https://docs.aws.amazon.com/corretto/latest/corretto-8-ug/downloads-list.html

wget -O - https://apt.corretto.aws/corretto.key | sudo gpg --dearmor -o /usr/share/keyrings/corretto-keyring.gpg && \

echo "deb [signed-by=/usr/share/keyrings/corretto-keyring.gpg] https://apt.corretto.aws stable main" | sudo tee /etc/apt/sources.list.d/corretto.list

sudo apt-get update; sudo apt-get install -y java-1.8.0-amazon-corretto-jdk

java -version

Setting Up Kafka With KRaft:

- I will navigate to the directory of the extracted Kafka folder and run the following commands:

tmux new -s kafka

cd kafka_2.13-3.0.0

bin/kafka-storage.sh random-uuid # generates a random UUID (the cluster ID) that is used in the next step to format Kafka's storage

- Make sure to copy the randomly generated UUID:

- I will format my Kafka storage using that UUID:

bin/kafka-storage.sh format -t <randomly-generated-uuid> -c config/kraft/server.properties
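If you are curious why the ID printed by random-uuid is a 22-character string: as far as I can tell, Kafka renders a 128-bit UUID as URL-safe base64 without padding. The sketch below reproduces that format in Python. It is an illustration only (the function name is made up), and you should still use the value that kafka-storage.sh prints.

```python
import base64
import uuid


def kafka_style_id() -> str:
    """Encode a random 128-bit UUID as unpadded URL-safe base64 (22 chars)."""
    raw = uuid.uuid4().bytes  # 16 bytes
    return base64.urlsafe_b64encode(raw).rstrip(b"=").decode("ascii")


if __name__ == "__main__":
    print(kafka_style_id())
```

The topic ID that appears in the broker log later in this post has the same 22-character shape.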

- I will edit the server properties using the following command:

vim config/kraft/server.properties

- I will set the listeners to the following:

- I will set the advertised listeners to the IP address of my EC2 instance:

- I will then save the server.properties file
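The two listener edits are easy to get wrong, so here is a small Python sketch that prints what the lines might look like. It assumes the stock `config/kraft/server.properties` layout of a PLAINTEXT listener on 9092 and a CONTROLLER listener on 9093, which matches the ports used in this project. The helper name and the example IP (203.0.113.10, a documentation address) are hypothetical, so compare the output against your own file before copying anything.

```python
def listener_lines(public_ip: str, client_port: int = 9092, controller_port: int = 9093) -> list:
    """Build the listeners / advertised.listeners lines for a single-node setup."""
    return [
        # Bind on all interfaces so remote clients can reach the broker.
        f"listeners=PLAINTEXT://:{client_port},CONTROLLER://:{controller_port}",
        # Address the broker hands back to clients; it must be reachable by them.
        f"advertised.listeners=PLAINTEXT://{public_ip}:{client_port}",
    ]


if __name__ == "__main__":
    for line in listener_lines("203.0.113.10"):  # placeholder IP, not a real host
        print(line)
```

The advertised address matters because clients first connect to the bootstrap address and are then told to use whatever the broker advertises.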

- In my security group, Kafka needs two ports: 9092 (the port that applications producing or consuming data connect to) and 9093 (the port used for intra-cluster communication with the KRaft controller):

- I have opened ports 9092 and 9093 to the internet in my security group. This is bad security practice, but since this is a personal project I have opened access wide for convenience:

- I will now start my kafka service:

bin/kafka-server-start.sh ~/kafka_2.13-3.0.0/config/kraft/server.properties

# Use CTRL+b, then d to detach session. keeps kafka running in background

- If you see output like this with no error message, your Kafka server has started:

- I will create a new tmux shell for my shopping application and clone this repo, which contains a sample shopping application:

git clone https://github.com/kodekloudhub/kafka.git

cd kafka

- In the final_project directory you will find two applications, toy-shop and warehouse-ui:

ls final_project/

Configuring Applications to Send Traffic To Kafka

- I will navigate to the toy shop application and run the following commands:

cd final_project/toy-shop

tmux new -s shop

- I will create a Python virtual environment. If you get an error about a missing venv module when creating it, install this package and then create the environment again:

sudo apt install python3.12-venv -y

python3 -m venv .venv # create the virtual environment

source .venv/bin/activate # activate the virtual environment

- I will install python dependencies for this application in the virtual environment:

pip3 install -r requirements.txt

- I will point the application at the Kafka server by editing app.py:

vim app.py

- Modify the following in app.py and save:

# Kafka producer configuration

conf = {

'bootstrap.servers': 'localhost:9092', # Modify to localhost

'client.id': socket.gethostname()

}
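For context, here is a minimal sketch of how a conf dictionary like the one above could be used to publish an order event. I am assuming the app uses the confluent-kafka Python client (the key names suggest it, but check requirements.txt), and I am taking the topic name `cartevent` from the broker log shown later. The helper names and the event fields are hypothetical and are not copied from the repo's app.py.

```python
import json
import socket


def build_conf(bootstrap: str = "localhost:9092") -> dict:
    """Producer settings in the same shape as the conf dict above."""
    return {"bootstrap.servers": bootstrap, "client.id": socket.gethostname()}


def encode_event(event: dict) -> bytes:
    """Kafka message values are bytes, so serialize the event as UTF-8 JSON."""
    return json.dumps(event).encode("utf-8")


def send_cart_event(producer, event: dict, topic: str = "cartevent") -> None:
    """Queue one event on the topic, then wait for the send queue to drain."""
    producer.produce(topic, value=encode_event(event))
    producer.flush()


def main() -> None:
    # Imported here so the helpers above work without the library installed.
    from confluent_kafka import Producer  # assumed client library

    send_cart_event(Producer(build_conf()), {"item": "robot", "qty": 1})
```

Calling `main()` on the EC2 instance, with the broker running and the library installed, should send a single event to the topic.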

- For ease, I will open port 5000 to the internet. This is not recommended in enterprise settings:

- I will start my application with the command:

python3 app.py --host=0.0.0.0 --port=5000

- I will detach this shell so that it keeps running:

# CTRL+b, then d

- To view the application, navigate to:

http://<ec2-public-ip-address>:5000

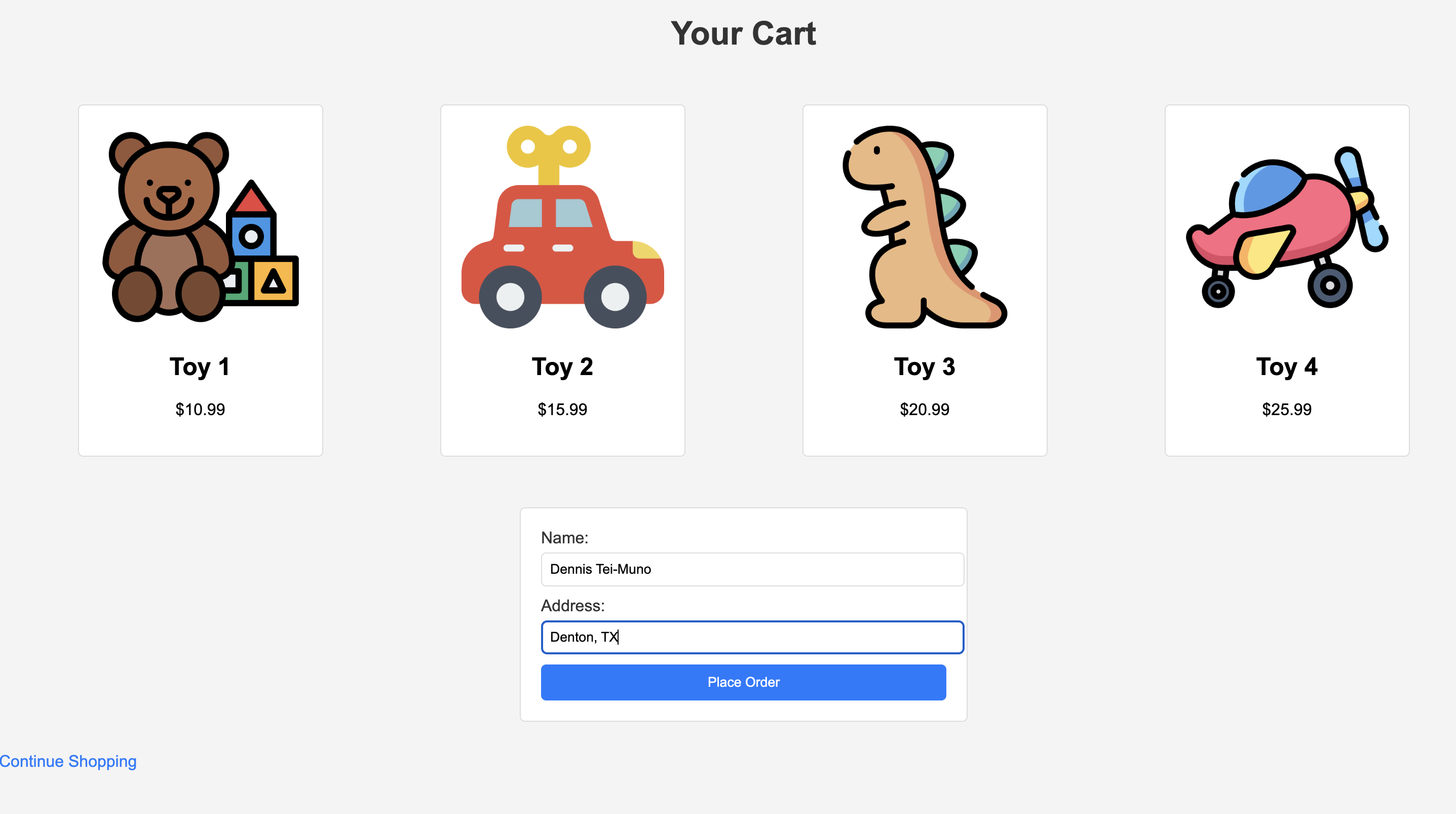

- I will add a couple of items to my cart and then click "view cart" at the bottom of the page:

- After placing my orders, the Kafka server log showed the broker reacting in real time: it auto-created the cartevent topic and its partition when the first order message arrived:

(kafka.server.KafkaConfig)

[2025-10-25 10:00:16,231] INFO [SocketServer listenerType=BROKER, nodeId=1] Starting socket server acceptors and processors (kafka.network.SocketServer)

[2025-10-25 10:00:16,235] INFO [SocketServer listenerType=BROKER, nodeId=1] Started data-plane acceptor and processor(s) for endpoint : ListenerName(PLAINTEXT) (kafka.network.SocketServer)

[2025-10-25 10:00:16,235] INFO [SocketServer listenerType=BROKER, nodeId=1] Started socket server acceptors and processors (kafka.network.SocketServer)

[2025-10-25 10:00:16,236] INFO Kafka version: 3.0.0 (org.apache.kafka.common.utils.AppInfoParser)

[2025-10-25 10:00:16,237] INFO Kafka commitId: 8cb0a5e9d3441962 (org.apache.kafka.common.utils.AppInfoParser)

[2025-10-25 10:00:16,237] INFO Kafka startTimeMs: 1761386416236 (org.apache.kafka.common.utils.AppInfoParser)

[2025-10-25 10:00:16,237] INFO [Controller 1] The request from broker 1 to unfence has been granted because it has caught up with the last committed metadata offset 9. (org.apache.kafka.controller.BrokerHeartbeatManager)

[2025-10-25 10:00:16,239] INFO Kafka Server started (kafka.server.KafkaRaftServer)

[2025-10-25 10:00:16,242] INFO [Controller 1] Unfenced broker: UnfenceBrokerRecord(id=1, epoch=8) (org.apache.kafka.controller.ClusterControlManager)

[2025-10-25 10:00:16,270] INFO [BrokerLifecycleManager id=1] The broker has been unfenced. Transitioning from RECOVERY to RUNNING. (kafka.server.BrokerLifecycleManager)

[2025-10-25 10:04:01,528] INFO Sent auto-creation request for Set(cartevent) to the active controller. (kafka.server.DefaultAutoTopicCreationManager)

[2025-10-25 10:04:01,544] INFO [Controller 1] createTopics result(s): CreatableTopic(name='cartevent', numPartitions=1, replicationFactor=1, assignments=[], configs=[]): SUCCESS (org.apache.kafka.controller.ReplicationControlManager)

[2025-10-25 10:04:01,545] INFO [Controller 1] Created topic cartevent with topic ID iZpPpV8XR8e7AoLiwX6jGg. (org.apache.kafka.controller.ReplicationControlManager)

[2025-10-25 10:04:01,545] INFO [Controller 1] Created partition cartevent-0 with topic ID iZpPpV8XR8e7AoLiwX6jGg and PartitionRegistration(replicas=[1], isr=[1], removingReplicas=[], addingReplicas=[], leader=1, leaderEpoch=0, partitionEpoch=0). (org.apache.kafka.controller.ReplicationControlManager)

[2025-10-25 10:04:01,581] INFO [ReplicaFetcherManager on broker 1] Removed fetcher for partitions Set(cartevent-0) (kafka.server.ReplicaFetcherManager)

[2025-10-25 10:04:01,598] INFO [LogLoader partition=cartevent-0, dir=/tmp/kraft-combined-logs] Loading producer state till offset 0 with message format version 2 (kafka.log.Log$)

[2025-10-25 10:04:01,604] INFO Created log for partition cartevent-0 in /tmp/kraft-combined-logs/cartevent-0 with properties {} (kafka.log.LogManager)

[2025-10-25 10:04:01,607] INFO [Partition cartevent-0 broker=1] No checkpointed highwatermark is found for partition cartevent-0 (kafka.cluster.Partition)

[2025-10-25 10:04:01,608] INFO [Partition cartevent-0 broker=1] Log loaded for partition cartevent-0 with initial high watermark 0 (kafka.cluster.Partition)

Viewing the Receiving Application: Letting the Warehouse-UI Application Receive Real-Time Orders for Processing

- I will open up port 5003 on my security group:

- I will open another tmux terminal:

tmux new -s warehouse

# I will create a virtual environment

python3 -m venv .venv

source .venv/bin/activate

# change directory to the toy-shop application to reuse its requirements.txt

cd /root/kafka-project/kafka/final_project/toy-shop

# install Python requirements for the app:

pip3 install -r requirements.txt

# change directory to warehouse-ui application:

cd ../warehouse-ui

vim app.py

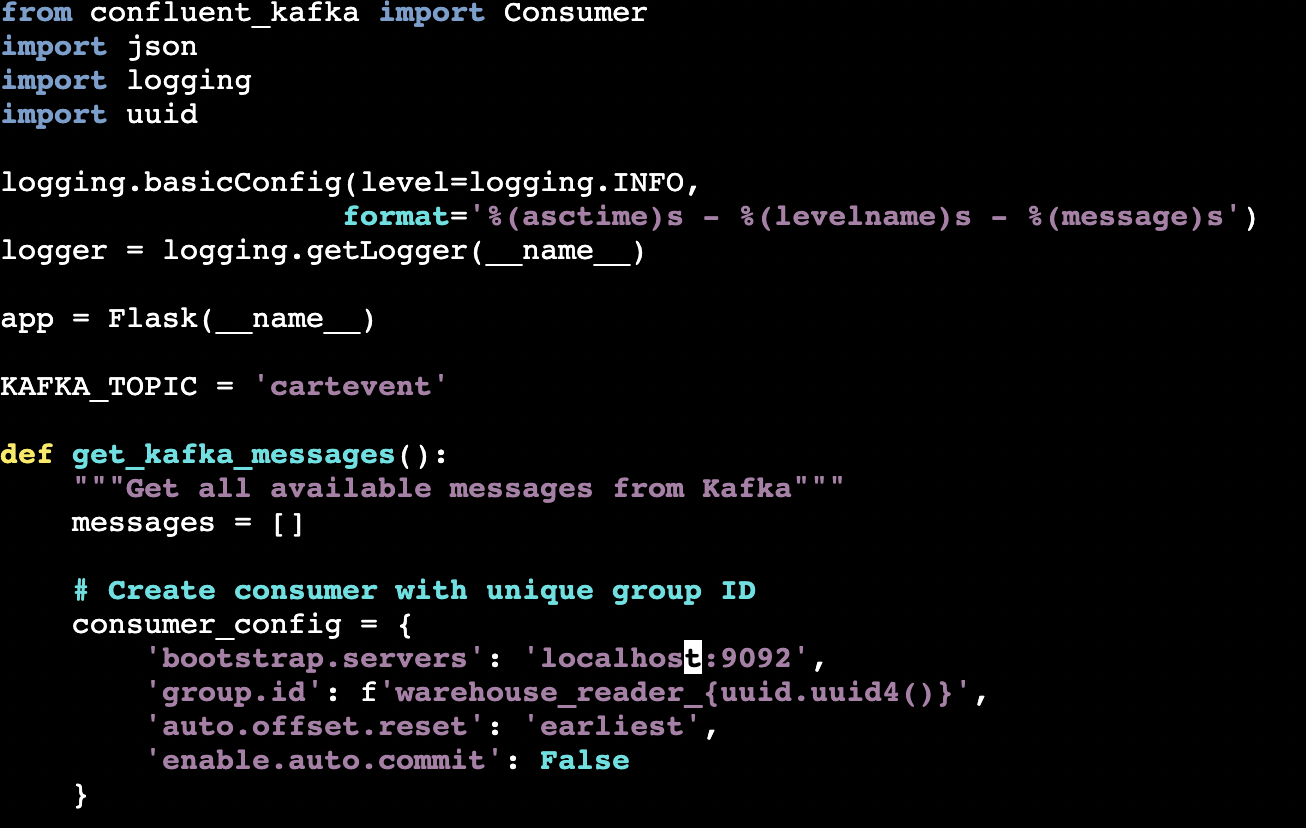

- Change the bootstrap server in app.py to localhost:9092:

- I will then run my application using:

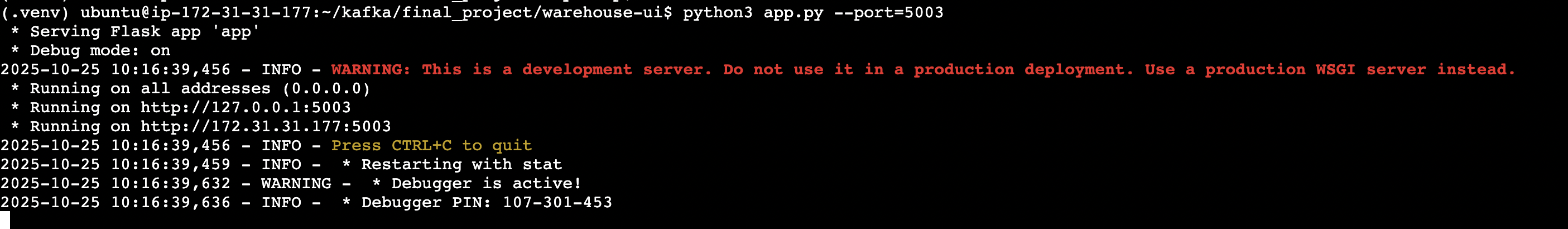

python3 app.py --port=5003
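On the receiving side, the warehouse app needs a consumer pointed at the same broker. The sketch below shows roughly what that could look like, again assuming the confluent-kafka Python client and JSON-encoded messages on the `cartevent` topic. The group name `warehouse-ui`, the helper names, and the payload format are my assumptions, not code from the repo.

```python
import json


def build_consumer_conf(bootstrap: str = "localhost:9092") -> dict:
    """Consumer settings; bootstrap.servers must match the running broker."""
    return {
        "bootstrap.servers": bootstrap,
        "group.id": "warehouse-ui",       # hypothetical consumer group name
        "auto.offset.reset": "earliest",  # start from the oldest event if no offset is stored
    }


def read_orders(consumer, max_polls: int = 10, timeout: float = 1.0) -> list:
    """Poll up to max_polls times and return the decoded events that arrived."""
    orders = []
    for _ in range(max_polls):
        msg = consumer.poll(timeout)
        if msg is None:   # nothing arrived within the timeout
            continue
        if msg.error():   # skip error events in this sketch
            continue
        orders.append(json.loads(msg.value().decode("utf-8")))
    return orders


def main() -> None:
    # Imported here so the helpers above work without the library installed.
    from confluent_kafka import Consumer  # assumed client library

    consumer = Consumer(build_consumer_conf())
    consumer.subscribe(["cartevent"])
    try:
        print(read_orders(consumer))
    finally:
        consumer.close()
```

A wrong `bootstrap.servers` value here is exactly the kind of mistake that kept my warehouse application from picking up orders, so it is the first thing to check if nothing arrives.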

- I will visit my warehouse-ui application using:

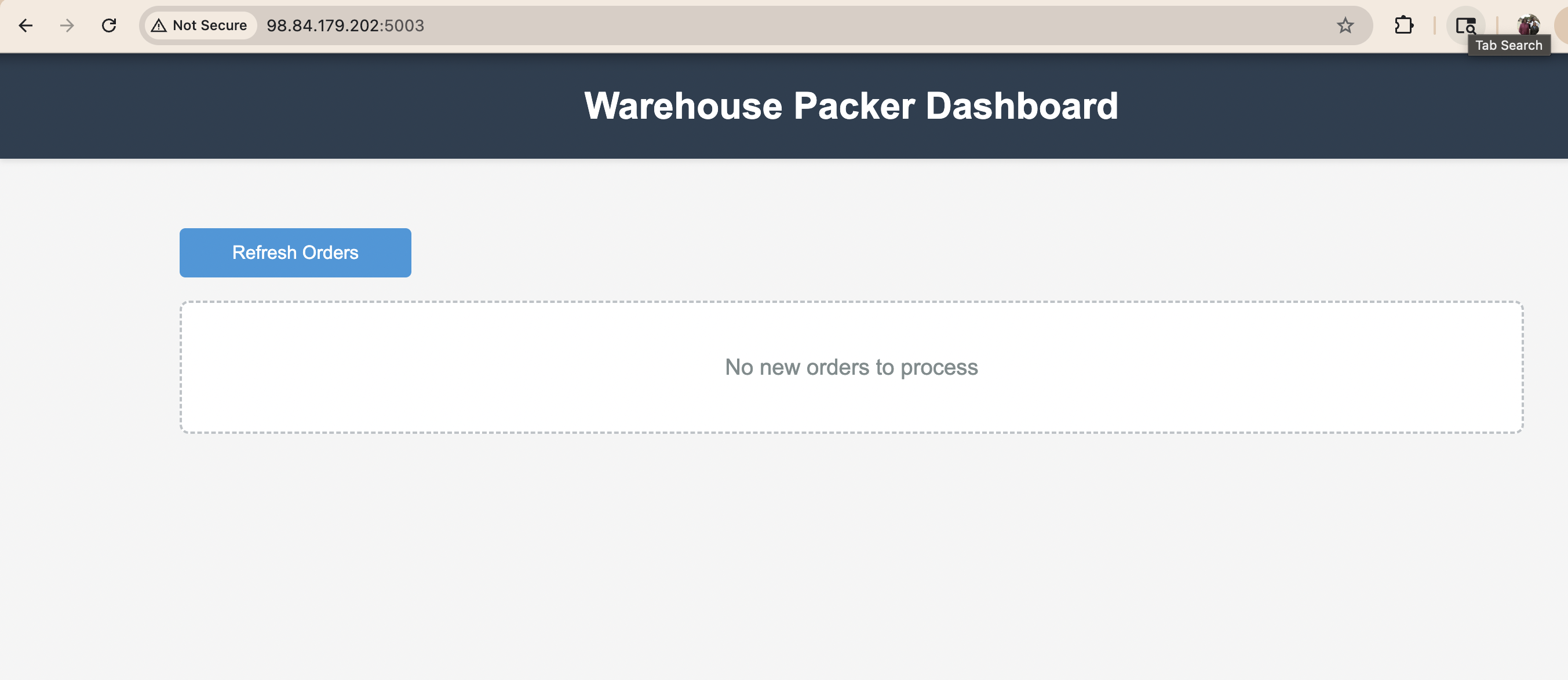

http://<ec2-public-ip-address>:5003

## Testing my Kafka Setup

- Ensure your kafka server is running:

ss -tulnp | grep 9092
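The ss command only works on the server itself. If you want to run the same check from an application host, a small Python sketch like the one below tries to open a TCP connection to the broker port. The function name is made up for this example, and a successful connection only proves the port is open, not that Kafka is healthy.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("broker reachable:", port_open("localhost", 9092))
```

Swap "localhost" for the EC2 public IP to confirm that the security group rule for port 9092 is working from outside the instance.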

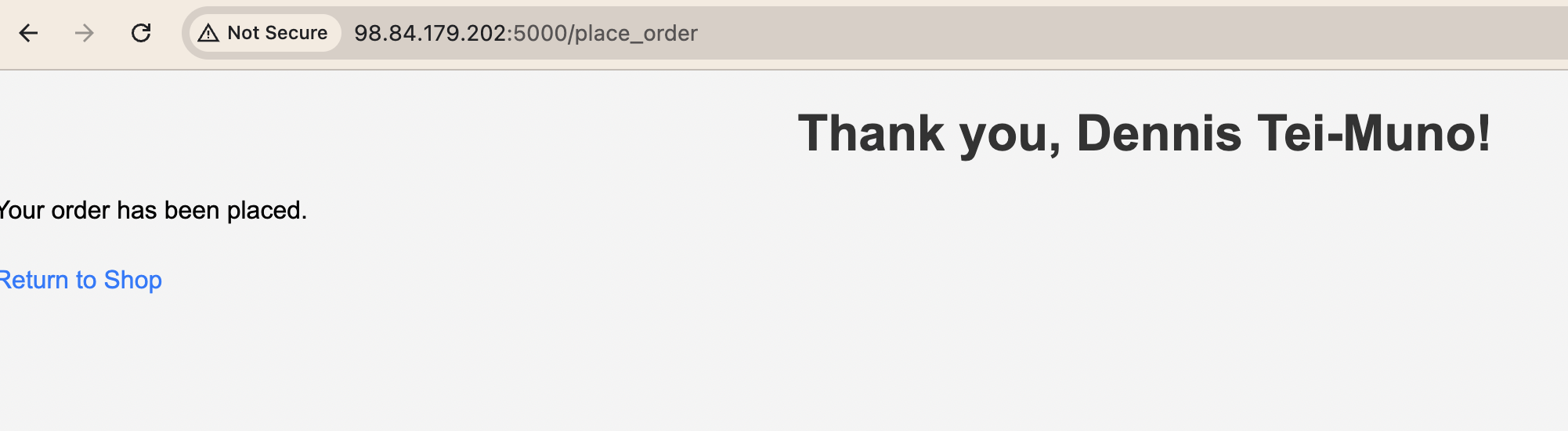

- In my toy-shop UI I will add a couple of items, go to my cart, and place my order:

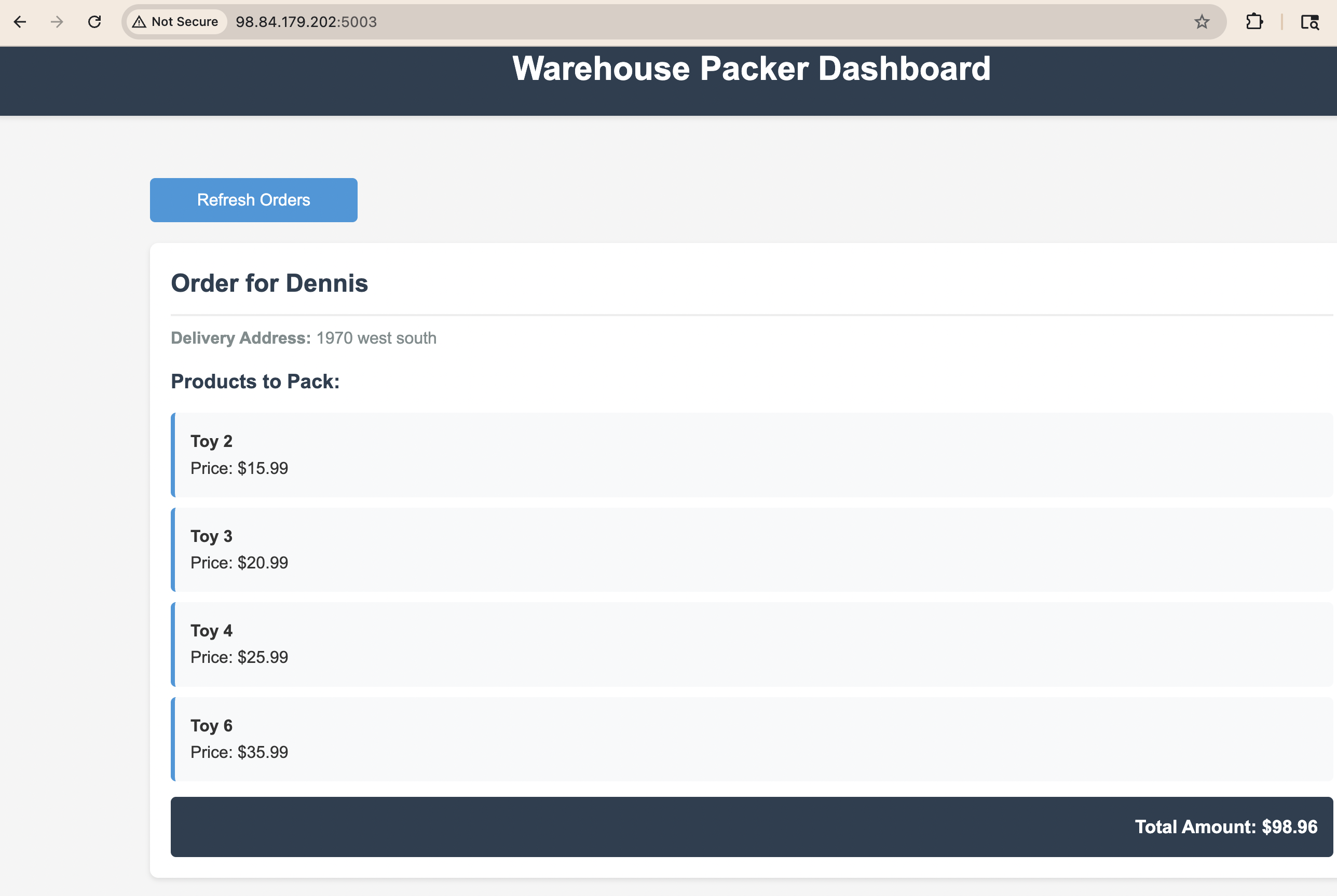

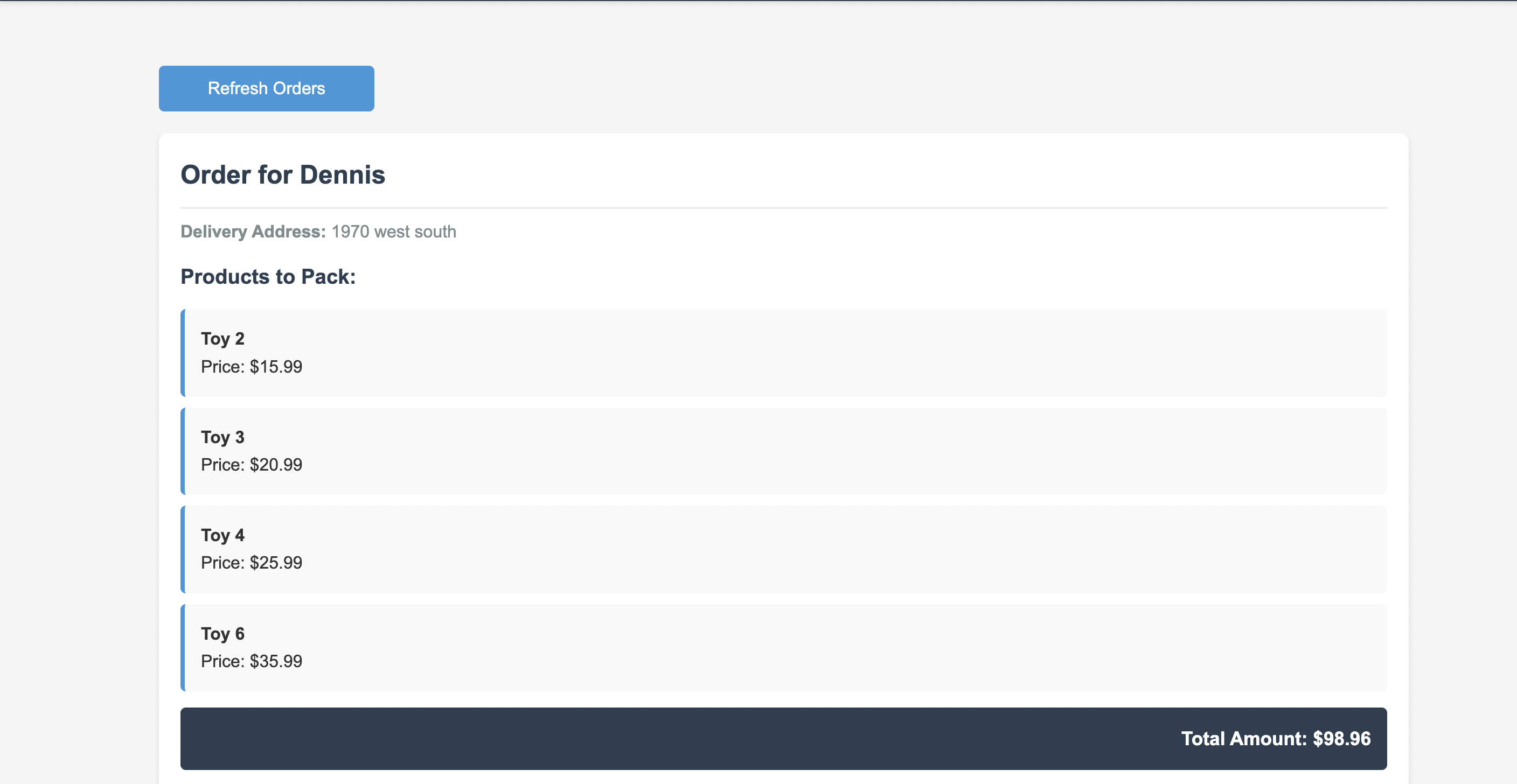

- Boom! My orders have been picked up in the warehouse picker application:

- This shows the power of Kafka: important real-time transactions and events are coordinated between applications. This example is a shopping application, but think of weather applications, banking applications, and more.

Real-time communication between applications, courtesy of Kafka!
