In today's post, I'll walk through how to build a multi-service application that shares a common database layer. This architecture pattern is particularly useful when you're breaking a monolith into microservices or when you want to separate concerns while maintaining data consistency. All code examples and the complete implementation are available on GitHub at https://github.com/callezenwaka/database.

Project Structure

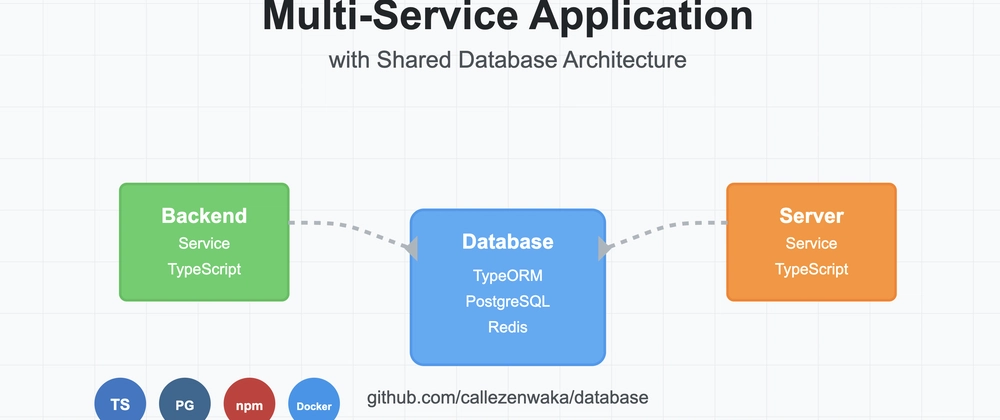

Let's look at the architecture we'll be building:

.

├── LICENSE

├── README.md

├── backend

│ ├── Dockerfile

│ ├── README.md

│ ├── src

│ └── tsconfig.json

├── database

│ ├── Dockerfile

│ ├── README.md

│ ├── src

│ └── tsconfig.json

├── docker-compose.yml

└── server

    ├── Dockerfile

    ├── README.md

    ├── src

    └── tsconfig.json

This structure follows a clean separation of concerns:

- database: Contains shared database functionality

- backend: Application backend service

- server: Application server service

The Shared Database Package

The core of our architecture is the shared database package which provides:

- A unified connection mechanism

- Migration management

- Consistent APIs for database operations

- Entity definitions shared across services

Database Package Overview

The database package is designed to be consumed by other services within our application. It's not published to npm but referenced locally by other packages.

Here's what makes it special:

- Automatically builds TypeScript code when installed by other services

- Provides a consistent interface for database operations

- Handles connection pooling and lifecycle management

- Centralizes migration and seeding logic
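
To make the "connection pooling and lifecycle management" point concrete, here is a minimal sketch of one way a shared package can hand every caller the same connection. It is not the repository's code: `Connection`, `createConnection`, and `openedCount` are hypothetical stand-ins for the TypeORM `DataSource` setup, and only the names `getDataSource` and `closeDatabase` come from this post.

```typescript
// Hypothetical stand-in for a TypeORM DataSource; not the package's real type.
interface Connection {
  isOpen: boolean;
  close(): Promise<void>;
}

let opened = 0; // how many real connections were created (illustration only)

function openedCount(): number {
  return opened;
}

// Assumed factory: the real package would initialize a DataSource here.
async function createConnection(): Promise<Connection> {
  opened++;
  const conn: Connection = {
    isOpen: true,
    async close() {
      conn.isOpen = false;
    },
  };
  return conn;
}

// Cache the in-flight promise so concurrent callers share one connection.
let cached: Promise<Connection> | null = null;

function getDataSource(): Promise<Connection> {
  if (cached === null) {
    cached = createConnection().catch((err) => {
      cached = null; // a failed attempt must not block later retries
      throw err;
    });
  }
  return cached;
}

async function closeDatabase(): Promise<void> {
  if (cached === null) return;
  const conn = await cached;
  cached = null; // the next getDataSource() call opens a fresh connection
  await conn.close();
}
```

Caching the promise rather than the resolved connection means two services calling `getDataSource()` at the same moment still trigger only one initialization.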

Implementation Details

Setting Up the Database Package

The database package uses TypeORM to interface with PostgreSQL. Here's how to use it:

import { getDataSource, closeDatabase } from '@database/database';

// Connect to the database

async function connectToDatabase() {

const dataSource = await getDataSource();

// Use the dataSource for database operations

// When done, close the connection

await closeDatabase();

}

Creating a Resilient Service

Let's examine a real-world implementation from our backend service's entry point:

// backend/src/index.ts

// ... other code omitted

async function bootstrap() {

try {

// Start the server first

const server: Server = app.listen(PORT, () => {

logger.info(`Backend running on http://localhost:${PORT}`);

});

// Check Redis connection

try {

const pingResult = await redisClient.ping();

app.locals.redisAvailable = pingResult === 'PONG';

logger.info('Redis connection established:', app.locals.redisAvailable);

} catch (redisError) {

app.locals.redisAvailable = false;

logger.warn('Redis connection failed:',

redisError instanceof Error ? redisError.message : String(redisError));

// Redis will automatically attempt reconnection based on its config

redisClient.on('connect', () => {

app.locals.redisAvailable = true;

logger.info('Redis connection restored');

});

redisClient.on('error', () => {

app.locals.redisAvailable = false;

});

}

// Then attempt database connection

try {

await getDataSource('backend');

logger.info('Database connection established. Full functionality available.');

app.locals.databaseAvailable = true;

} catch (dbError) {

logger.error(String(dbError));

const databaseError = dbError instanceof Error ? dbError.message : String(dbError);

logger.warn('Database connection failed. Operating in limited functionality mode:', databaseError);

app.locals.databaseAvailable = false;

// Attempt periodic reconnection with attempt tracking

let reconnectAttempts = 0;

const MAX_RECONNECT_ATTEMPTS = 10; // Set to -1 for unlimited attempts

const reconnectInterval = setInterval(async () => {

reconnectAttempts++;

try {

await getDataSource('backend');

logger.info(`Database connection established after ${reconnectAttempts} attempts. Full functionality restored.`);

app.locals.databaseAvailable = true;

app.locals.reconnectAttempts = reconnectAttempts;

clearInterval(reconnectInterval);

} catch (error) {

// Log with attempt count

logger.debug(`Database reconnection attempt ${reconnectAttempts} failed`);

// Check if we've reached the max attempts (if not unlimited)

if (MAX_RECONNECT_ATTEMPTS > 0 && reconnectAttempts >= MAX_RECONNECT_ATTEMPTS) {

logger.error(`Maximum reconnection attempts (${MAX_RECONNECT_ATTEMPTS}) reached. Giving up on database connection.`);

app.locals.dbReconnectionExhausted = true;

clearInterval(reconnectInterval);

}

}

}, 30000); // try every 30 seconds

}

// Handle graceful shutdown

const shutdown = async () => {

logger.info('Shutting down server...');

// Shutdown logic for server, Redis, and database

// ...

};

// Handle termination signals

process.on('SIGTERM', shutdown);

process.on('SIGINT', shutdown);

} catch (error) {

logger.error('Failed to start server:',

error instanceof Error ? error.message : String(error));

process.exit(1);

}

}

bootstrap();
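
The flags that `bootstrap` writes to `app.locals` can also be folded into a single health summary, for example to back a health-check route. The sketch below is an assumption about how that might look, not code from the repository: `ServiceFlags`, `HealthStatus`, and `summarizeHealth` are hypothetical names, and only the three flag names come from the listing above.

```typescript
// Hypothetical shape of the flags the bootstrap code stores on app.locals.
interface ServiceFlags {
  databaseAvailable: boolean;
  redisAvailable: boolean;
  dbReconnectionExhausted?: boolean;
}

type HealthStatus = "ok" | "degraded" | "down";

function summarizeHealth(flags: ServiceFlags): { status: HealthStatus; detail: string } {
  if (flags.databaseAvailable && flags.redisAvailable) {
    return { status: "ok", detail: "all dependencies reachable" };
  }
  // Once reconnection has been abandoned, the service cannot recover on its own.
  if (!flags.databaseAvailable && flags.dbReconnectionExhausted) {
    return { status: "down", detail: "database unreachable, reconnection attempts exhausted" };
  }
  const missing = [
    flags.databaseAvailable ? null : "database",
    flags.redisAvailable ? null : "redis",
  ].filter((name): name is string => name !== null);
  return { status: "degraded", detail: `unavailable: ${missing.join(", ")}` };
}
```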

Using the Database Package in Services

To use the database package in your services, add it as a local dependency:

{

"dependencies": {

"@database/database": "file:../database"

}

}

This creates a symbolic link to the database package, allowing your service to import its functionality.

Local Development

Starting the Development Environment

Our project includes Docker configurations for easy local development:

# Recreate the stack (down -v also deletes volumes, so existing database data is wiped)

docker-compose down -v && docker-compose up -d

# Confirm database is running

docker exec -it database-app-postgres-1 psql -U app_user -d app_db

# Check service logs

docker logs --tail 20 database-service-name

Running Services

To run a service locally instead of in Docker, use:

# Start server

npm run dev # npm run start:dev - for initialization

Database Management

Running Migrations

The database package includes scripts for managing database migrations, which are defined in the package.json file:

{

"name": "@database/database",

"version": "1.0.0",

"scripts": {

"migrate": "ts-node src/run-migrations.ts",

"typeorm": "typeorm-ts-node-commonjs",

"migration:run": "typeorm-ts-node-commonjs migration:run -d src/index.ts",

"migration:generate": "typeorm-ts-node-commonjs migration:generate -d src/index.ts",

"migration:create": "typeorm-ts-node-commonjs migration:create src/migrations/",

"seed": "ts-node src/seeds/run-seeds.ts",

"reset": "npm run migration:run && npm run seed"

}

}

These scripts make database management convenient:

# Run all pending migrations

npm run migration:run

# Generate a new migration based on entity changes

# (TypeORM 0.3+ takes the output path as a positional argument; the older -n flag was removed)

npm run migration:generate -- src/migrations/MigrationName

# Create a new empty migration

npm run migration:create
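
For readers who have not written a TypeORM migration before, an empty migration is a class with an `up` method and a `down` method that you fill in. The sketch below shows the general shape under stated assumptions: the class name, table, and columns are hypothetical, and `QueryRunnerLike` is a local stand-in so the snippet runs on its own. In the real package you would import `MigrationInterface` and `QueryRunner` from `typeorm` instead.

```typescript
// Local stand-in for TypeORM's QueryRunner so the sketch is self-contained.
// In the real package: import { MigrationInterface, QueryRunner } from "typeorm";
interface QueryRunnerLike {
  query(sql: string): Promise<unknown>;
}

// Hypothetical migration; the table and columns are illustrative only.
class CreateUsersTable1700000000000 {
  name = "CreateUsersTable1700000000000";

  // Executed when pending migrations are run.
  async up(queryRunner: QueryRunnerLike): Promise<void> {
    await queryRunner.query(
      `CREATE TABLE "users" (
        "id" SERIAL PRIMARY KEY,
        "username" VARCHAR(255) NOT NULL,
        "email" VARCHAR(255) NOT NULL UNIQUE
      )`,
    );
  }

  // Undoes exactly what `up` did so the migration can be reverted.
  async down(queryRunner: QueryRunnerLike): Promise<void> {
    await queryRunner.query(`DROP TABLE "users"`);
  }
}
```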

Seeding Data

For development and testing, you can seed your database using the predefined scripts:

# Run seed scripts

npm run seed

# Reset database (run migrations and seeds)

npm run reset

The reset command is particularly useful for local development as it runs all migrations and then seeds the database with test data in one step.

Resilient Connection Management

A robust multi-service application needs to handle connection issues gracefully. Let's break down the key components of our resilient connection management:

Connection States

Our backend service tracks connection states in the application:

app.locals.databaseAvailable = true; // Database connection status

app.locals.redisAvailable = true; // Redis connection status

This allows the application to provide limited functionality even when connections fail.
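
One way to act on these flags is a small guard in front of database-backed routes. The following is a sketch, not the repository's middleware: `requireDatabase` is a hypothetical name, and the `Req`, `Res`, and `Next` types are minimal stand-ins for Express's `Request`, `Response`, and `NextFunction` so the snippet runs without Express installed.

```typescript
// Minimal structural types; with Express, its own request/response types replace these.
interface Req {
  app: { locals: { databaseAvailable: boolean; redisAvailable: boolean } };
}
interface Res {
  statusCode: number;
  body?: unknown;
  status(code: number): Res;
  json(payload: unknown): Res;
}
type Next = () => void;

// Hypothetical guard: answers 503 while the database is down, otherwise continues.
function requireDatabase(req: Req, res: Res, next: Next): void {
  if (!req.app.locals.databaseAvailable) {
    res.status(503).json({ error: "Database temporarily unavailable" });
    return;
  }
  next();
}
```

Routes that do not touch the database can skip the guard and keep working, which is what "limited functionality" means in practice.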

Automatic Reconnection

For database connections:

- The service retries the connection on a fixed 30-second interval

- Reconnection attempts are tracked and can be limited

- The application maintains state awareness throughout

For Redis:

- We rely on the Redis client's built-in reconnection behavior

- Event listeners track connection state changes

- The application adapts functionality based on availability
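
The bounded-retry idea can be pulled out of `bootstrap` into a reusable helper. This is a sketch under my own assumptions rather than the repository's code: `connectWithRetry`, `RetryOptions`, and `sleep` are hypothetical names. With the default `factor` of 1 the delay stays fixed, as in the timer-based code earlier in this post; a `factor` greater than 1 would turn it into exponential backoff.

```typescript
interface RetryOptions {
  maxAttempts: number; // values <= 0 mean "retry forever"
  delayMs: number; // wait before the first retry
  factor?: number; // 1 keeps the delay fixed; > 1 grows it after each failure
}

const sleep = (ms: number): Promise<void> =>
  new Promise((resolve) => setTimeout(resolve, ms));

// Calls `connect` until it succeeds or the attempt budget is spent.
// Resolves with the number of attempts used; rethrows the last error on exhaustion.
async function connectWithRetry(
  connect: () => Promise<unknown>,
  { maxAttempts, delayMs, factor = 1 }: RetryOptions,
): Promise<number> {
  let delay = delayMs;
  for (let attempt = 1; ; attempt++) {
    try {
      await connect();
      return attempt;
    } catch (error) {
      if (maxAttempts > 0 && attempt >= maxAttempts) throw error;
      await sleep(delay);
      delay *= factor;
    }
  }
}
```

A call such as `connectWithRetry(() => getDataSource('backend'), { maxAttempts: 10, delayMs: 30000 })` would roughly mirror the limits used in the backend entry point.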

Graceful Shutdown

The shutdown process:

- Stops accepting new requests

- Closes the HTTP server

- Terminates Redis connections

- Closes database connections

- Exits the process with appropriate status code

This ensures all resources are properly released when the application terminates.
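
Since the `shutdown` body is elided in the listing above, here is a hedged sketch of how the ordered steps could be run. `runShutdown` and `ShutdownStep` are hypothetical names, and the steps are passed in as plain async functions so the snippet does not depend on Express, Redis, or TypeORM.

```typescript
type ShutdownStep = { name: string; run: () => Promise<void> };

// Runs each step in order; a failing step is logged but does not block later steps.
// Returns a process exit code: 0 when every step succeeded, 1 otherwise.
async function runShutdown(
  steps: ShutdownStep[],
  log: (message: string) => void = console.log,
): Promise<number> {
  let exitCode = 0;
  for (const step of steps) {
    try {
      await step.run();
      log(`${step.name}: closed`);
    } catch (error) {
      exitCode = 1;
      const reason = error instanceof Error ? error.message : String(error);
      log(`${step.name}: failed (${reason})`);
    }
  }
  return exitCode;
}
```

In a real service the steps would wrap closing the HTTP server, quitting the Redis client, and calling `closeDatabase()`, in that order, and the returned code would be passed to `process.exit`.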

Architecture Benefits

This architecture offers several advantages:

- Code reuse: Database logic is written once and shared across services

- Consistency: All services interact with the database in the same way

- Maintenance: Database changes affect a single package, not multiple services

- Separation of concerns: Each service focuses on its core functionality

- Resilience: Services can handle connection failures gracefully

- Self-healing: Automatic reconnection attempts restore full functionality

Testing

To test the full implementation, start the stack with docker-compose down -v && docker-compose up -d.

Then use a tool such as Postman to make API calls. To add a new user, send a POST request to the backend service's user endpoint with a body like this:

{

"username": "User Test",

"email": "user@test.com",

"password": "password"

}

To add a new blog post, send a POST request to the server service's blog endpoint:

{

"title": "Getting Started with Coding",

"description": "A new beginner's guide to TypeScript and its powerful features.",

"author": "User Test",

"isActive": true

}
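
If you prefer a script over Postman, the same two requests can be prepared in TypeScript. This is only a sketch: the hosts, ports, and paths below are hypothetical placeholders (check each service's routes for the real ones), and `buildJsonPost` is a helper name I made up for illustration.

```typescript
interface JsonPost {
  url: string;
  init: { method: "POST"; headers: { "Content-Type": string }; body: string };
}

// Builds a JSON POST request description without sending it.
function buildJsonPost(baseUrl: string, path: string, payload: unknown): JsonPost {
  return {
    // Normalize slashes so "host/" + "/path" does not produce "host//path".
    url: `${baseUrl.replace(/\/+$/, "")}/${path.replace(/^\/+/, "")}`,
    init: {
      method: "POST",
      headers: { "Content-Type": "application/json" },
      body: JSON.stringify(payload),
    },
  };
}

// Hypothetical base URLs and paths, for illustration only.
const createUser = buildJsonPost("http://localhost:3000", "/api/users", {
  username: "User Test",
  email: "user@test.com",
  password: "password",
});

const createBlog = buildJsonPost("http://localhost:4000/", "api/blogs", {
  title: "Getting Started with Coding",
  description: "A new beginner's guide to TypeScript and its powerful features.",
  author: "User Test",
  isActive: true,
});
```

On Node 18 or later, `await fetch(createUser.url, createUser.init)` would send the first request using the built-in `fetch`.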

Troubleshooting

If you encounter issues importing from the database package, ensure:

- The package has been built (the dist directory exists)

- The service has the correct reference in package.json

- TypeScript paths are configured correctly in the consuming service

Conclusion

A shared database layer can simplify development across multiple services while maintaining consistency and reducing duplication. By centralizing database logic, you create a more maintainable system that's easier to evolve over time.

If you would like to explore this architecture further or use it as a starting point for your own projects, check out the complete implementation at https://github.com/callezenwaka/database.

The repository includes all the code we have discussed in this post, plus additional examples and configurations that can help you get started quickly.

Have you implemented a similar architecture? I'd love to hear about your experiences in the comments!
