Introduction

Ok, so you have been developing a nice script or program, and it's now working just the way you want it to. That's great! But it's also not enough... you need to host it somewhere!

I'll show you here how to deploy your program (be it a script, a scraper, or whatever) to a Linux VM in Google Cloud Platform's Compute Engine service.

If you don't have a Linux VM in GCP, you can follow this other tutorial of mine on how to spin up a free VM in GCP.

If you followed that, you should have a GCP project (I'll refer to it as $PROJECT) and a VM (with a name that I'll reference as $INSTANCE) running in some zone (which I'll call $ZONE). Of course, you may already have all that without having followed my other post; that's just as good.

NOTE: This tutorial assumes that you are also working from a Linux machine (as I do). If you use Windows (please don't) or Mac, the same concepts apply but some commands may differ. Don't worry, there will be links to the official documentation where you can see the exact differences and adapt to your case.

Set up gcloud locally

If you haven't done this yet, it's important to have gcloud installed locally to be able to connect your local machine to all your GCP services (in this case, it will be just the VM).

To do so, just follow the official docs for the Google Cloud SDK (gcloud CLI). All the steps are very easy to follow, and the default configuration is good enough.

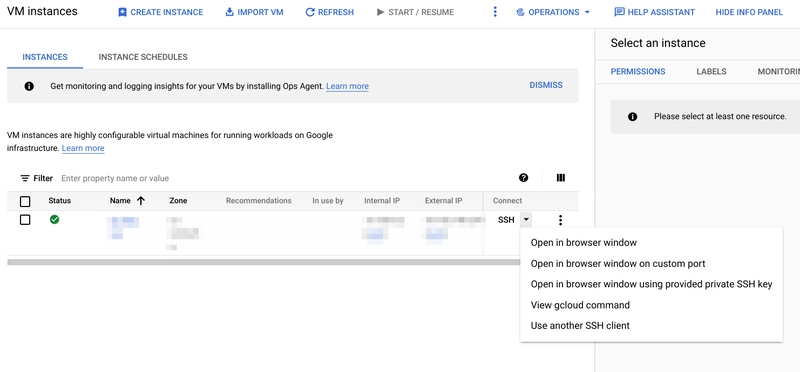

To test that it's working properly, let's try to SSH into our VM. To get the gcloud command for it, go to the Compute Engine service in the [GCP console], find your VM in the list, click on the three dots and then on "View gcloud command".

This will open a dialog that displays the command we need to connect to the VM.

Copy that command and run it in a local terminal. If everything is set up correctly, you should end up with a shell prompt on the VM.

And that's it! Now we're ready to deploy our code to our VM using the gcloud command.

Deploy the code

I'll assume a simple structure for the code, because I want to focus on the concept of deploying it rather than on the nitty-gritty details of production-ready code (which are incredibly important, but not essential to explain how a deployment works, and would get in the way of the concept I want to explain here).

.
├── src
│   └── ...
├── docs
│   └── ...
├── tests
│   └── ...
├── main.py
├── deploy.sh
└── ...

This means that there is a src directory where the code lives, some other directories that are not essential but usually present, like docs and tests, an entry point main.py (the file you execute to run your program; in this case it's a Python file, but it could be any other kind), and a deployment script deploy.sh.
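Since the deployment relies on this layout, a small guard at the top of deploy.sh can stop it early when it's run from the wrong directory. This is a minimal sketch; the helper name `check_layout` is hypothetical and not part of the original script:

```shell
# Hypothetical helper: verify that every path given as an argument exists
# relative to the current directory, reporting each missing one on stderr.
check_layout() {
  local missing=0 path
  for path in "$@"; do
    if [ ! -e "$path" ]; then
      echo "Missing: $path" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_layout src main.py || exit 1` aborts the deployment if either path is missing.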

We'll focus, naturally, on the deployment script.

Copy the code over to the VM

The first part is to copy the code over to the VM, so it runs there instead of on our local machine.

Let's assume we have our program contained in the directory /path/to/local/app on our local machine, and we want to put it in the directory /path/to/remote/app in the VM.

First, we compress the needed files into a temporary tarball, /tmp/temp.tar.gz:

$ cd /path/to/local/app

$ tar czf /tmp/temp.tar.gz src main.py requirements.txt

In this case I'm only taking the src directory, the main.py entry point, and the requirements.txt file we'll need later to install the dependencies, but of course you can include more directories and/or files if your program needs them to run properly.
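Before uploading, it's worth checking that the archive contains what you expect; `tar tzf` lists the contents of a gzip-compressed archive. A minimal sketch that wraps both steps in a hypothetical `make_bundle` helper:

```shell
# Hypothetical helper: pack the given paths into a gzip-compressed tarball,
# then list the archive's contents so they can be reviewed before uploading.
make_bundle() {
  local archive="$1"
  shift
  tar czf "$archive" "$@" && tar tzf "$archive"
}
```

Run it from the project root, e.g. `make_bundle /tmp/temp.tar.gz src main.py`, adding any other files your program needs.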

After doing so, we can send that temporary file to the VM (you'll need to have $ZONE, $PROJECT, and $INSTANCE as environment variables or simply replace them in the command):

$ gcloud compute scp --zone $ZONE --project $PROJECT /tmp/temp.tar.gz "$INSTANCE:/tmp/"
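If $ZONE, $PROJECT, or $INSTANCE is empty, these commands may fail with confusing errors, so it can help to check them up front. A sketch using a hypothetical `require_env` helper; note that `printenv` only sees exported variables:

```shell
# Hypothetical helper: return non-zero (and say which) if any of the named
# environment variables is unset or empty. printenv only sees exported ones.
require_env() {
  local status=0 name
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "Environment variable $name is not set" >&2
      status=1
    fi
  done
  return "$status"
}
```

For example, `require_env ZONE PROJECT INSTANCE || exit 1` before the gcloud commands.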

And finally, extract the contents to the correct directory in the VM:

$ gcloud compute ssh --zone $ZONE $INSTANCE --project $PROJECT --command="mkdir -p /path/to/remote/app && cd /path/to/remote/app && tar xzf /tmp/temp.tar.gz"

And done! Now the code is in the VM ready to run there.

Set up the VM so the code can run

Ok, so... the code is in the VM ready to be executed, but it will need some configuration first. For instance, if it's a Python program, the VM needs a Python interpreter of the right version and the program's requirements installed with pip.

To do so, we need to SSH into our VM and apply the proper configuration. As an example, I'll assume our program uses Python 3.8 and its requirements are listed in a requirements.txt file.

First we connect via SSH:

$ gcloud compute ssh --zone $ZONE $INSTANCE --project $PROJECT

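Now we install Python 3.8 and pip in the VM using apt. Package availability depends on the VM's image: on Ubuntu 20.04, for example, Python 3.8 is the default python3, so python3-pip provides pip for it; on other images the package names may differ.

$ sudo apt update

$ sudo apt install python3.8 python3-pip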
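If you later script these steps, it helps to fail with a clear message when the interpreter isn't installed. A minimal sketch; the helper name `require_cmd` is hypothetical:

```shell
# Hypothetical helper: check that a command (such as python3.8) is on the
# PATH before relying on it, printing a hint to stderr if it isn't.
require_cmd() {
  if ! command -v "$1" >/dev/null 2>&1; then
    echo "$1 not found; install it before running the program" >&2
    return 1
  fi
}
```

On the VM you could then run `require_cmd python3.8 && python3.8 main.py`.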

Then move to the app directory and install the requirements:

$ cd /path/to/remote/app

$ python3.8 -m pip install -r requirements.txt

And finally, test our program:

$ python3.8 main.py

And done! If it runs (and it should, if it was running locally), the VM is ready to execute our program.

Set up a cronjob

Now the program runs whenever we execute it, but that's hardly useful, since we are still running it manually, just as we did on our local machine.

The whole idea is to have it run periodically to do whatever it does hourly, or daily, or every 20 minutes, or whenever you need it to.

For instance, I sometimes need some information from a website as soon as it's published, so I set up a simple scraper, have it run hourly, and have it notify me via email and Telegram whenever there is a change.

To achieve that, we'll set up a cronjob, which is quite easy to do. First we SSH into the VM and then run the following command:

$ crontab -e

This will open an editor, where we add the following line:

0 * * * * cd /path/to/remote/app && python3.8 main.py

In this case it will run hourly (at minute 0 of every hour), according to the cron expression we used. If you're not familiar with cron expressions, they're quite simple, and you can understand them quickly here.
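For reference, the five fields of a cron expression are minute, hour, day of month, month, and day of week, and times are interpreted in the VM's time zone. A few crontab lines matching the schedules mentioned earlier (crontab only accepts comments on their own lines, not after a command):

```text
# Every hour, at minute 0 (the example above):
0 * * * * cd /path/to/remote/app && python3.8 main.py
# Every day at 09:00:
0 9 * * * cd /path/to/remote/app && python3.8 main.py
# Every 20 minutes:
*/20 * * * * cd /path/to/remote/app && python3.8 main.py
```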

Finally, we can check that the new cronjob is set up correctly by looking at the syslog:

$ tail -f /var/log/syslog

You should see CRON entries whenever your code is executed. If you're not sure, temporarily change the cron expression so it runs every minute (*/1 * * * *) and inspect the syslog again.
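Since the syslog contains messages from every service, filtering for cron's entries makes them easier to spot. A small sketch, assuming the log lives in /var/log/syslog as in the command above; `recent_cron_runs` is a hypothetical name:

```shell
# Hypothetical helper: print the last N (default 5) cron entries from a
# syslog-style file (default /var/log/syslog, as used above).
recent_cron_runs() {
  local logfile="${1:-/var/log/syslog}" count="${2:-5}"
  grep -F 'CRON[' "$logfile" | tail -n "$count"
}
```

For example, `recent_cron_runs /var/log/syslog 10` shows the last ten cron entries.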

And done!

Now your code is running periodically in a Linux VM in GCP Compute Engine.
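If you'd rather not open an editor on the VM (for example, to register the cronjob from a script), one common approach is to pipe the output of `crontab -l` through a filter and back into `crontab -`, which replaces the whole crontab with whatever it reads on standard input. A minimal sketch of such a filter, with the hypothetical name `add_cron_line`, that keeps the existing entries and skips the new one if it's already there:

```shell
# Hypothetical helper: read an existing crontab on stdin and print it back
# with the given line appended, unless an identical line is already present,
# so running it on every deployment doesn't create duplicate entries.
add_cron_line() {
  local line="$1" existing
  existing=$(cat)
  if [ -n "$existing" ]; then
    printf '%s\n' "$existing"
  fi
  if ! printf '%s\n' "$existing" | grep -qxF -- "$line"; then
    printf '%s\n' "$line"
  fi
}
```

On the VM: `crontab -l 2>/dev/null | add_cron_line "0 * * * * cd /path/to/remote/app && python3.8 main.py" | crontab -`. When there's no crontab yet, `crontab -l` fails; the redirect silences its message and the filter simply receives empty input.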

Deployment script

With all the steps explained, it's easy to put them together into the deployment script (deploy.sh). The only addition is that we remove the current files in the remote directory before copying over the new ones:

#!/bin/bash

set -e

# Local project path and remote destination.
LOCAL_PATH="/path/to/local/app"
REMOTE_PATH="/path/to/remote/app"

# Files and directories to deploy (requirements.txt is needed by pip below).
FILES="src main.py requirements.txt"

# Fill in your own values.
ZONE=...
INSTANCE=...
PROJECT=...

# Run from the project root so the relative paths in $FILES resolve.
cd "$LOCAL_PATH"

# Delete the current remote files (creating the directory on the first deploy).
echo "Deleting remote files."
gcloud compute ssh --zone "$ZONE" "$INSTANCE" --project "$PROJECT" \
    --command="mkdir -p $REMOTE_PATH && cd $REMOTE_PATH && rm -rf $FILES"

# Send the current files ($FILES is intentionally unquoted to split it).
echo "Sending new files."
tar czf /tmp/temp.tar.gz $FILES
gcloud compute scp --zone "$ZONE" --project "$PROJECT" \
    /tmp/temp.tar.gz "$INSTANCE:/tmp/"
gcloud compute ssh --zone "$ZONE" "$INSTANCE" --project "$PROJECT" \
    --command="cd $REMOTE_PATH && tar xzf /tmp/temp.tar.gz"

# Install the latest requirements.
echo "Installing requirements."
gcloud compute ssh --zone "$ZONE" "$INSTANCE" --project "$PROJECT" \
    --command="cd $REMOTE_PATH && python3.8 -m pip install -r requirements.txt"

And then whenever we want to deploy our code we simply execute our deployment script:

$ cd /path/to/local/app

$ bash deploy.sh
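If you want to review what deploy.sh would do before it touches the VM, one option is a small dry-run wrapper. A sketch, assuming a hypothetical `run` helper and `DRY_RUN` variable that are not part of the original script:

```shell
# Hypothetical helper: run the given command, or only print it (prefixed
# with "+") when DRY_RUN=1, so a deployment can be reviewed first.
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}
```

Prefix each gcloud call in deploy.sh with `run` (e.g. `run gcloud compute scp ...`) and then execute `DRY_RUN=1 bash deploy.sh`. Keep in mind that `echo "+ $*"` prints the arguments without their original quoting.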

Conclusion

I showed you how to copy your code from your local machine to a remote Linux VM in GCP Compute Engine, set the VM up to run the code properly, and then set up a cronjob to execute it periodically.

All the steps are quite simple and easy to follow, but if I overlooked anything or you are having trouble with any step, feel free to leave a comment and I'll reply as soon as I can.

Cheers!
