Introduction

I’ve been a developer for over fifteen years. I probably belong to the “past” generation of developers — those old-timers who manually wrote code in high-level languages like Java, PHP, JavaScript, and Go. I’ve noticed that I’m becoming increasingly conservative in my choice of tools and technologies for projects I’m responsible for. That’s why I approach the AI hype, copilots, agents, and the like with caution and skepticism.

But I decided to give it a try — and I’d like to share my observations: what works and what doesn’t. I’ll say upfront: overall, I liked it. I’d even say I’m impressed.

Tools

I tried for quite a while to get used to copilots, but I couldn’t. Inline suggestions mostly irritate me and slow me down. At least in my primary language, Go, I find it simpler and faster to write the code myself than to check and fix what the model generated.

Cursor didn’t work for me either — possibly because I’ve been using JetBrains IDEs for many years and don’t want to give up the familiar interface and toolset. Ultimately, for my experiments, I settled on a combination: the console-based Claude Code client and JetBrains GoLand.

The Project

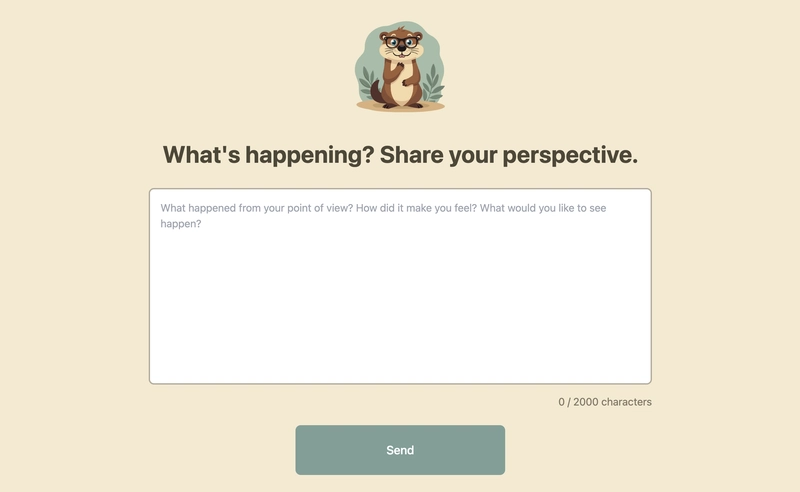

The idea for a pet project to experiment with came unexpectedly. I was chatting with an old friend — we were complaining to each other about our “life partners,” with whom it’s sometimes difficult to find common ground. And then it hit me: AI Conflict Resolution!

By Monday morning, the prototype was ready. (You can try it here: theudra.com)

My Approach

I’ll start with a disclaimer: this is my first serious experience using AI in development, so I probably did everything wrong. Feel free to comment — teach me how it should be done.

I began by creating a project in the web version of Claude. The idea was to have a “Product Manager” who generates PRDs (Product Requirements Documents) and a “Developer”, Claude Code, who writes code based on this documentation.

First, I formulated the general concept: Vision, Mission, Target Audience, etc., and saved the resulting document in the project. Then, together with Claude, we prepared a basic PRD describing the main user flow.

Iteration

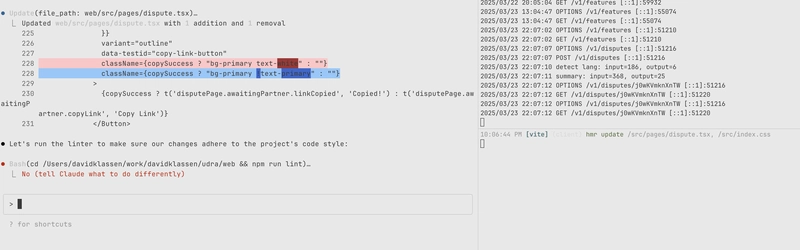

The implementation of each feature looked like this:

- In the web version of Claude, using the existing documentation, I prepare a separate document describing the feature. This document is added to the ./docs directory in the project repository.

- I ask Claude Code to create an implementation plan. Then we clarify details, the order of steps, and so on.

- Claude Code implements the plan step by step. After each step, I manually validate the result.

- In the web version of Claude, I formulate a list of test cases.

- In Claude Code, we write tests and polish the implementation.

- Commit, and move on to the next feature.

It might seem strange that tests are left until the end. I explain why I chose this approach in the Tests section below.

Challenges

Architecture and Business Logic

Once the code volume exceeds 2–3 thousand lines, LLMs start to struggle with making correct architectural decisions. Don’t expect Claude Code to understand on its own when and how to separate DTO models from the domain layer, or where logic should reside: in the repository or in the service. All of this needs to be explained manually, and then you still need to clean everything up yourself. Otherwise, you’ll end up with a mess.
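To make that separation concrete, here is a minimal Go sketch of the layering I mean. All names in it (`DisputeRequest`, `Dispute`, `DisputeRepository`, `DisputeService`, the `"en"` default) are hypothetical and chosen only for illustration; this is not the project’s actual code.

```go
package main

import (
	"errors"
	"fmt"
	"strings"
)

// DisputeRequest is a DTO: it mirrors the incoming JSON payload and nothing else.
type DisputeRequest struct {
	Title  string `json:"title"`
	Locale string `json:"locale"`
}

// Dispute is the domain model that the business logic works with.
type Dispute struct {
	ID     int
	Title  string
	Locale string
}

// DisputeRepository only stores data; it makes no business decisions.
type DisputeRepository interface {
	Save(d Dispute) (Dispute, error)
}

// memoryRepo is an in-memory stand-in for a real database.
type memoryRepo struct {
	nextID int
	items  map[int]Dispute
}

func newMemoryRepo() *memoryRepo {
	return &memoryRepo{nextID: 1, items: make(map[int]Dispute)}
}

// Save assigns the next ID and stores the dispute.
func (r *memoryRepo) Save(d Dispute) (Dispute, error) {
	d.ID = r.nextID
	r.nextID++
	r.items[d.ID] = d
	return d, nil
}

// ErrEmptyTitle is returned when a request has no usable title.
var ErrEmptyTitle = errors.New("title must not be empty")

// DisputeService owns validation and defaults, i.e. the business rules.
type DisputeService struct {
	repo DisputeRepository
}

// Create maps the DTO to the domain model, applies the rules, then persists.
func (s *DisputeService) Create(req DisputeRequest) (Dispute, error) {
	title := strings.TrimSpace(req.Title)
	if title == "" {
		return Dispute{}, ErrEmptyTitle
	}
	locale := req.Locale
	if locale == "" {
		locale = "en" // assumed default, purely for illustration
	}
	return s.repo.Save(Dispute{Title: title, Locale: locale})
}

func main() {
	svc := &DisputeService{repo: newMemoryRepo()}
	d, err := svc.Create(DisputeRequest{Title: "  Who does the dishes?  "})
	fmt.Println(d, err)
}
```

The point of the sketch is the direction of dependencies: the handler-facing DTO never reaches the repository, and the repository never validates anything.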

Complex business logic (in my case — the content translation subsystem and language detection of input data) requires an especially careful approach. If a feature involves a large number of states (for example, a combination of selected locale and input language), there’s a risk that the LLM will start generating nonsense with multiple branches, get confused in the logic, and only make the situation worse when trying to fix everything.

You need to decompose such logic by hand: think the architecture through, distribute responsibilities across components, and present each piece to the model in the most digestible form possible.
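One way to do that decomposition, sketched in Go under my own assumptions: pull every state combination into a single pure function, so the model only has to wire it in rather than invent the branching. The `Action` values and the `Decide` function are hypothetical names for illustration, not the actual translation subsystem.

```go
package main

import "fmt"

// Action is what the translation subsystem should do for one request.
type Action int

const (
	PassThrough Action = iota // input already matches the selected locale
	Translate                 // input must be translated into the locale
	AskUser                   // input language could not be detected
)

// String returns a readable name for logging and debugging.
func (a Action) String() string {
	switch a {
	case PassThrough:
		return "pass-through"
	case Translate:
		return "translate"
	case AskUser:
		return "ask-user"
	default:
		return "unknown"
	}
}

// Decide is a pure function: every combination of selected locale and
// detected input language is resolved here and nowhere else.
// An empty detected value means detection failed.
func Decide(locale, detected string) Action {
	switch {
	case detected == "":
		return AskUser
	case detected == locale:
		return PassThrough
	default:
		return Translate
	}
}

func main() {
	combos := []struct{ locale, detected string }{
		{"en", "en"},
		{"en", "de"},
		{"de", ""},
	}
	for _, c := range combos {
		fmt.Printf("locale=%q detected=%q -> %s\n", c.locale, c.detected, Decide(c.locale, c.detected))
	}
}
```

With the decisions isolated like this, the rest of the feature reduces to calling `Decide` and acting on the result, which is a much easier task to hand to an assistant.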

Tests

I’ve already touched on this. If you ask an LLM to work in the spirit of TDD and generate tests first, it won’t work. It will generate nonsense tests, and then Claude Code will enter an endless, pointless cycle of adjusting the code and the tests to each other.

If you generate tests after the code is ready, you’ll get a suite that tests a lot of implementation details. Since Claude Code sees the code in its context, the tests end up mirroring the implementation rather than the requirements.

The approach that worked for me was: preparing test cases in the web version of Claude based on the PRD, and then transforming these cases into code.
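As a rough illustration of “transforming cases into code”, here is a Go sketch in which each case carries the requirement wording and is checked only through public behaviour. The function `NormalizeLocale`, the supported locales, and the case wording are all assumptions made up for this example. In a real project the table would live in a `_test.go` file and run through the standard `testing` package; a plain checker function is used here only to keep the sketch a single runnable file.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// NormalizeLocale is a hypothetical function under test: it maps a raw
// locale tag to one of the supported locales, falling back to English.
func NormalizeLocale(tag string) string {
	tag = strings.ToLower(strings.TrimSpace(tag))
	if i := strings.IndexAny(tag, "-_"); i >= 0 {
		tag = tag[:i] // drop the region subtag
	}
	switch tag {
	case "en", "de", "uk":
		return tag
	}
	return "en"
}

// prdCase is one test case written from the requirements, not from the code.
type prdCase struct {
	name  string // requirement wording from the (hypothetical) PRD
	input string
	want  string
}

var prdCases = []prdCase{
	{"supported locale is kept", "de", "de"},
	{"tag is case-insensitive", "DE", "de"},
	{"region subtag is ignored", "de-AT", "de"},
	{"unsupported locale falls back to English", "fr", "en"},
	{"empty input falls back to English", "", "en"},
}

// failures runs every case and returns one message per failed requirement.
func failures(cases []prdCase) []string {
	var out []string
	for _, c := range cases {
		if got := NormalizeLocale(c.input); got != c.want {
			out = append(out, fmt.Sprintf("%s: NormalizeLocale(%q) = %q, want %q",
				c.name, c.input, got, c.want))
		}
	}
	return out
}

func main() {
	if f := failures(prdCases); len(f) > 0 {
		for _, msg := range f {
			fmt.Println("FAIL:", msg)
		}
		os.Exit(1)
	}
	fmt.Println("all PRD cases pass")
}
```

Because the cases name requirements rather than internal helpers, they stay valid when the implementation is refactored.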

I also had to add specific instructions in CLAUDE.md to avoid spontaneous initiatives from the assistant:

## Test Guidelines

- NEVER modify test files unless explicitly asked to do so

- Tests are used to validate code changes and ensure functionality

- Breaking tests makes it difficult to validate other changes

- If you need a test changed or updated, ask for explicit permission first

- Do not attempt to run and fix test issues yourself - wait for guidance

Without these instructions, the assistant constantly tried to “fix” the tests as soon as one of them started failing.

Result

The outcome was a fairly functional prototype: theudra.com.

There are many points that could be criticized, but overall — it’s decent.

Conclusions

The combination I tried works well for developing prototypes. Can it be used to create a full-fledged MVP? I don’t know… Perhaps, with enough experience, yes.

My feeling is that, for this task, the speedup was roughly 3–5x. I also see great potential for improving the tools. For example, Claude Code often “greps” the source code when it needs to find usages of a specific symbol. Integration with LSP or similar tools would likely improve the quality of the results significantly; this is something that immediately stands out.

And, of course, we should expect further development of the LLMs themselves. Verdict: I liked it. There’s definitely something to it.

Originally published on Medium.
