I recently coded a Gradio app from scratch for the famous BLIP-2 captioning models.

1-click auto installers with instructions are posted here: https://www.patreon.com/posts/sota-image-for-2-90744385

This post also has 1-click Windows & RunPod installers with Gradio interfaces that support batch captioning for the following vision models: LLaVA (4-bit, 8-bit, 16-bit; 7b, 13b, 34b), Qwen-VL (4-bit, 8-bit, 16-bit), and a Clip_Interrogator Gradio app that supports 115 CLIP vision models in combination with 5 caption models.

All precisions also work on Windows with our special installers.

16-bit mode is the fastest and 8-bit mode is the slowest. 4-bit mode is slower than 16-bit precision but faster than 8-bit precision.
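For readers who have not switched precisions before, here is a minimal sketch of how the three modes are commonly selected with the Hugging Face `transformers` library. It is not the code from the app or the installers. The helper names `quantization_flags` and `load_blip2` are hypothetical, and the sketch assumes `torch`, `transformers`, `accelerate` and `bitsandbytes` are installed.

```python
from typing import Dict

MODEL_ID = "Salesforce/blip2-opt-6.7b"  # one of the models tested in this post


def quantization_flags(precision: str) -> Dict[str, bool]:
    """Map a precision label to bitsandbytes flags (hypothetical helper).

    An empty dict means plain 16-bit weights with no quantization.
    """
    table = {
        "16-bit": {},
        "8-bit": {"load_in_8bit": True},
        "4-bit": {"load_in_4bit": True},
    }
    if precision not in table:
        raise ValueError(f"Unknown precision: {precision!r}")
    return dict(table[precision])


def load_blip2(model_id: str = MODEL_ID, precision: str = "16-bit"):
    """Load a BLIP-2 processor and model in the requested precision.

    Heavy imports are kept inside the function so the helper above can be
    used without a GPU environment. This is an assumption-based sketch,
    not the app's actual loading code.
    """
    import torch
    from transformers import (
        BitsAndBytesConfig,
        Blip2ForConditionalGeneration,
        Blip2Processor,
    )

    # float16 is used for non-quantized layers; device_map needs accelerate.
    kwargs = {"torch_dtype": torch.float16, "device_map": "auto"}
    flags = quantization_flags(precision)
    if flags:
        kwargs["quantization_config"] = BitsAndBytesConfig(**flags)

    processor = Blip2Processor.from_pretrained(model_id)
    model = Blip2ForConditionalGeneration.from_pretrained(model_id, **kwargs)
    return processor, model
```

Calling `load_blip2(precision="4-bit")` would download the weights on first use, so expect a large download and a GPU with enough VRAM.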

Look at all the information below.

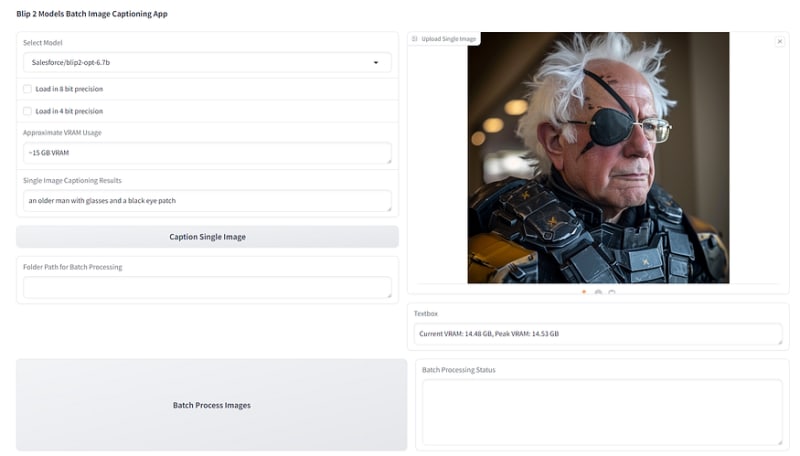

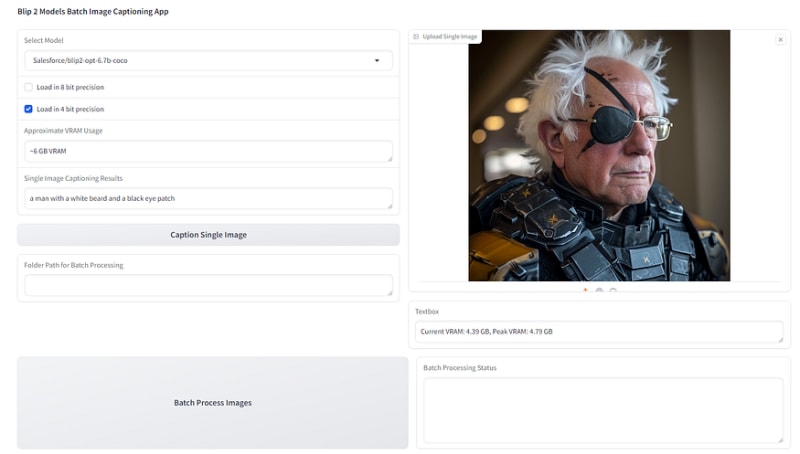

Blip 2 Models Batch Image Captioning App

The test results are below.

During batch processing, only 1 image is captioned at a time, so images were not captioned in parallel.
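To make the measurement concrete, here is a minimal sketch of a one-image-at-a-time loop that reports seconds per image. It is an illustration, not the app's code: `caption_sequentially` is a hypothetical helper, and `caption_fn` stands in for whatever function runs the model on a single image.

```python
import time
from typing import Callable, Dict, Iterable, Tuple


def caption_sequentially(
    image_paths: Iterable[str],
    caption_fn: Callable[[str], str],
) -> Tuple[Dict[str, str], float]:
    """Caption images one by one and return (captions, seconds per image)."""
    captions: Dict[str, str] = {}
    processed = 0
    start = time.perf_counter()
    for path in image_paths:
        # No parallelism: each image is finished before the next one starts.
        captions[path] = caption_fn(path)
        processed += 1
    elapsed = time.perf_counter() - start
    seconds_per_image = elapsed / processed if processed else 0.0
    return captions, seconds_per_image


if __name__ == "__main__":
    # Stub captioner so the sketch runs without a GPU or model download.
    def fake_caption(path: str) -> str:
        return f"caption for {path}"

    results, speed = caption_sequentially(["a.png", "b.png"], fake_caption)
    print(results)
    print(f"Speed: {speed:.4f} second/image")
```

With a real model, `caption_fn` would open the image, run the processor and `generate`, and decode the output.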

Salesforce/blip2-opt-6.7b — 16-bit precision

Batch processing speed on RTX A6000: 0.32 seconds/image

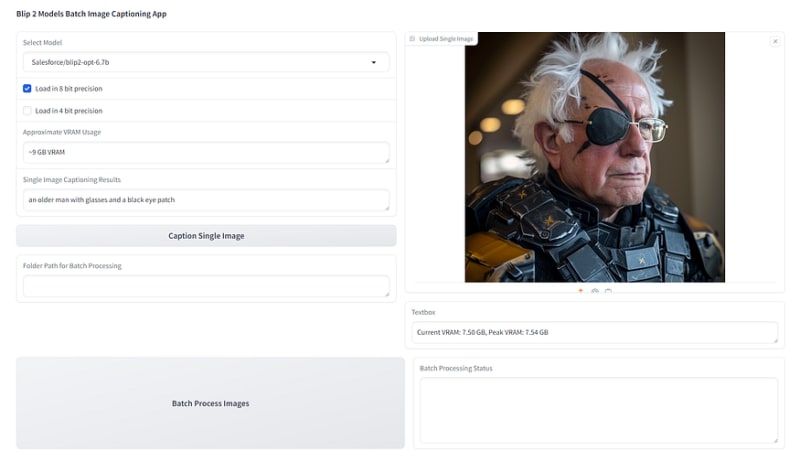

Salesforce/blip2-opt-6.7b — 8-bit precision

Batch processing speed on RTX A6000: 1.7 seconds/image

Salesforce/blip2-opt-6.7b — 4-bit precision

Batch processing speed on RTX A6000: 0.65 seconds/image

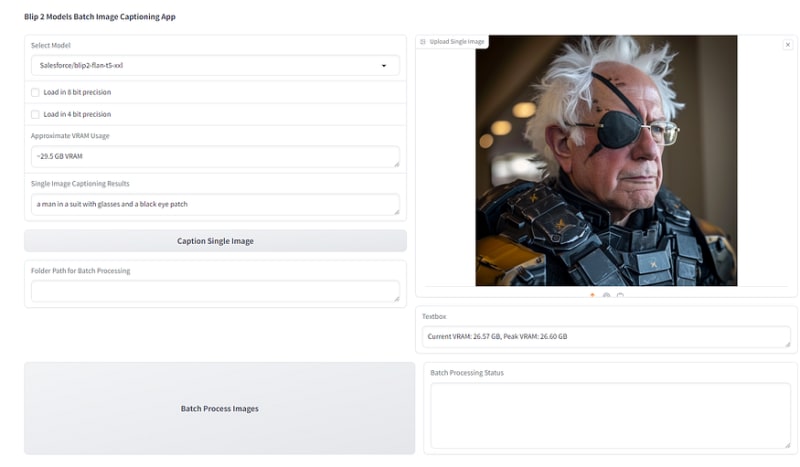

Salesforce/blip2-flan-t5-xxl — 16-bit precision

Batch processing speed on RTX A6000: 0.41 seconds/image

Salesforce/blip2-flan-t5-xxl — 8-bit precision

Batch processing speed on RTX A6000: 1.6 seconds/image

Salesforce/blip2-flan-t5-xxl — 4-bit precision

Batch processing speed on RTX A6000: 0.82 seconds/image

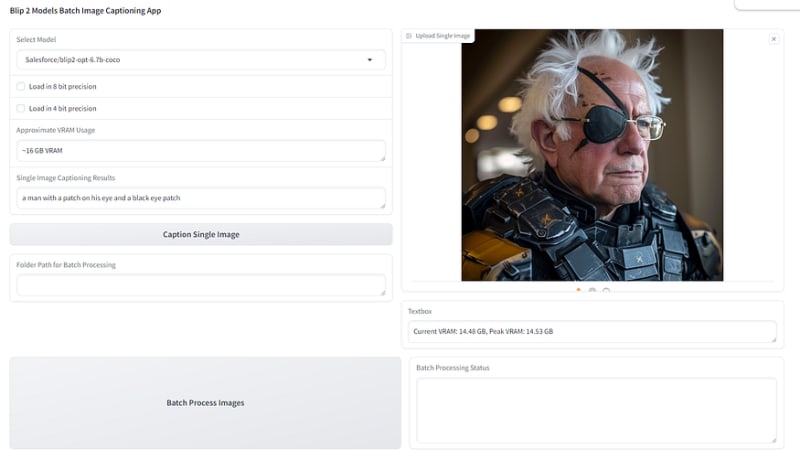

Salesforce/blip2-opt-6.7b-coco — 16-bit precision

Batch processing speed on RTX A6000: 0.39 seconds/image

Salesforce/blip2-opt-6.7b-coco — 8-bit precision

Batch processing speed on RTX A6000: 2.01 seconds/image

Salesforce/blip2-opt-6.7b-coco — 4-bit precision

Batch processing speed on RTX A6000: 0.74 seconds/image
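To compare the results at a glance, the short script below takes the per-image times reported above and computes how much slower each precision is than 16-bit for the same model. The numbers are copied from this post. The names `REPORTED` and `slowdown_vs_16bit` are just illustrative.

```python
from typing import Dict

# Seconds per image as reported in this post (RTX A6000, one image at a time).
REPORTED: Dict[str, Dict[str, float]] = {
    "Salesforce/blip2-opt-6.7b": {"16-bit": 0.32, "8-bit": 1.7, "4-bit": 0.65},
    "Salesforce/blip2-flan-t5-xxl": {"16-bit": 0.41, "8-bit": 1.6, "4-bit": 0.82},
    "Salesforce/blip2-opt-6.7b-coco": {"16-bit": 0.39, "8-bit": 2.01, "4-bit": 0.74},
}


def slowdown_vs_16bit(timings: Dict[str, float]) -> Dict[str, float]:
    """Return each precision's time divided by the 16-bit time, rounded to 2 places."""
    baseline = timings["16-bit"]
    return {precision: round(sec / baseline, 2) for precision, sec in timings.items()}


if __name__ == "__main__":
    for model, timings in REPORTED.items():
        ratios = slowdown_vs_16bit(timings)
        print(f"{model}: 8-bit is {ratios['8-bit']}x and "
              f"4-bit is {ratios['4-bit']}x the 16-bit time")
```

In these runs, 4-bit takes roughly twice as long per image as 16-bit, while 8-bit takes roughly four to five times as long.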
