As part of my job, I recently had to modify Fluentd to stream logs to our Zebrium Autonomous Log Monitoring platform. To do this, I first needed to understand how Fluentd collects Kubernetes metadata. I thought what I learned might be useful or interesting to others, so I decided to write this blog post.

If you want to play around with Fluentd in a daemonset container, you can install the version that we use here (you can also find more detail on our docs page).

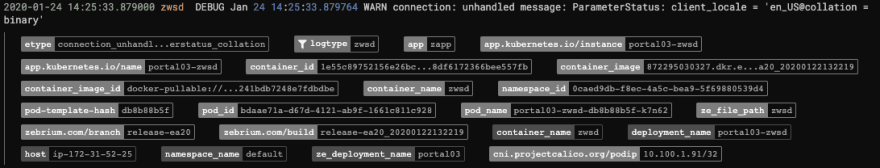

Here's a screen shot from our UI of the metadata that we collect from Kubernetes:

As you can see, there's a lot of metadata in a Kubernetes cluster, such as hostname, namespace, pod name, container name and much more. You may be wondering how all of this metadata is magically collected from your Kubernetes cluster.

To understand how it works, I will first explain the relevant Fluentd configuration sections used by the log collector (which runs inside a daemonset container). Below is an example Fluentd config file (I sanitized it a bit to remove anything sensitive):

```
<source>
  @type tail
  format json
  path "/var/log/containers/*.log"
  read_from_head true
  pos_file "/mnt/var/cache/zebrium/containers_logs.pos"
  time_format %Y-%m-%dT%H:%M:%S.%NZ
  utc true
  tag "containers.*"
  <parse>
    time_format %Y-%m-%dT%H:%M:%S.%NZ
    @type json
    localtime false
    time_type string
  </parse>
</source>

<filter containers.**>
  @type kubernetes_metadata
  @log_level "warn"
  annotation_match [".*"]
  de_dot false
  tag_to_kubernetes_name_regexp ".+?\\.containers\\.(?<pod_name>[a-z0-9]([-a-z0-9]*[a-z0-9])?(\\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*)_(?<namespace>[^_]+)_(?<container_name>.+)-(?<docker_id>[a-z0-9]{64})\\.log$"
  container_name_to_kubernetes_regexp "^(?<name_prefix>[^_]+)_(?<container_name>[^\\._]+)(\\.(?<container_hash>[^_]+))?_(?<pod_name>[^_]+)_(?<namespace>[^_]+)_[^_]+_[^_]+$"
</filter>
```
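The `<parse>` section expects each line of a container log file to be a JSON object. With Docker's json-file logging driver, the timestamp is stored in a "time" field with nanosecond precision, which is why `time_format` ends in `%N`. Below is a rough Python sketch of what the parser does with such a line. It is not Fluentd's code, and the sample line is hypothetical. Python's `%f` accepts at most six fractional digits, so the sketch truncates the timestamp to microseconds:

```python
import json
from datetime import datetime, timezone


def parse_container_line(line: str):
    """Split one json-file log line into (event_time, record), roughly like
    Fluentd's json parser does with its default time_key "time"."""
    record = json.loads(line)
    raw_time = record.pop("time")  # e.g. "2020-01-15T17:27:01.123456789Z"
    seconds, _, fraction = raw_time.rstrip("Z").partition(".")
    # %N means nanoseconds, but Python's %f takes at most 6 digits,
    # so pad or truncate the fraction to microseconds.
    fraction = (fraction + "000000")[:6]
    event_time = datetime.strptime(f"{seconds}.{fraction}", "%Y-%m-%dT%H:%M:%S.%f")
    # "utc true" / "localtime false": interpret the timestamp as UTC.
    return event_time.replace(tzinfo=timezone.utc), record


# Hypothetical sample line in Docker's json-file format.
sample = '{"log":"Starting calico-node\\n","stream":"stdout","time":"2020-01-15T17:27:01.123456789Z"}'
event_time, record = parse_container_line(sample)
print(event_time.isoformat(), record)
```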

The `<source>` section tells Fluentd to tail the Kubernetes container log files. On a Kubernetes host, the /var/log/containers directory holds one log file (actually a symbolic link) for each container, as you can see below:

```
root# ls -l
total 24
lrwxrwxrwx 1 root root 98 Jan 15 17:27 calico-node-gwmct_kube-system_calico-node-2017627b57442d7d41d39bc8c2f62fd9979cd61ca2afbd53ba2c9f712aa7f311.log -> /var/log/pods/kube-system_calico-node-gwmct_62c0dba5-36fd-432d-bb10-da7040165c33/calico-node/0.log
lrwxrwxrwx 1 root root 98 Jan 15 17:27 calico-node-gwmct_kube-system_install-cni-1e6b3d4b09c4b881f6bf837da98b41fe555332d4638339b79acb16c7b0c2478e.log -> /var/log/pods/kube-system_calico-node-gwmct_62c0dba5-36fd-432d-bb10-da7040165c33/install-cni/0.log
```

Note that each symbolic link's name encodes the pod name, namespace, container name and container ID, in the form `<pod_name>_<namespace>_<container_name>-<container_id>.log`.
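Pod names, namespaces and container names are DNS-style names and cannot contain underscores. That means the parts of a link name can be recovered with plain string splitting. Here is a minimal Python sketch of that naming convention. The function name is hypothetical, and Fluentd itself uses a regular expression rather than this code:

```python
import re


def split_log_name(filename: str) -> dict:
    """Split <pod>_<namespace>_<container>-<container_id>.log into its parts."""
    if not filename.endswith(".log"):
        raise ValueError(f"not a container log file: {filename!r}")
    stem = filename[: -len(".log")]
    # Pod, namespace and container names cannot contain "_", so two splits suffice.
    pod_name, namespace, rest = stem.split("_", 2)
    # The container ID is the 64-hex-character suffix after the last "-".
    container_name, container_id = rest.rsplit("-", 1)
    if not re.fullmatch(r"[0-9a-f]{64}", container_id):
        raise ValueError(f"unexpected container ID in {filename!r}")
    return {
        "pod_name": pod_name,
        "namespace": namespace,
        "container_name": container_name,
        "container_id": container_id,
    }


name = ("calico-node-gwmct_kube-system_install-cni-"
        "1e6b3d4b09c4b881f6bf837da98b41fe555332d4638339b79acb16c7b0c2478e.log")
print(split_log_name(name))
```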

Because the tail source sets `tag "containers.*"`, Fluentd replaces the `*` with the path of the log file being tailed (slashes turned into dots). Each container's log messages therefore carry a tag of the form `containers.var.log.containers.<log_file_name>`. These tags match the `containers.**` pattern of the next section, which is a filter of type kubernetes_metadata. The kubernetes_metadata plugin applies the tag_to_kubernetes_name_regexp regular expression to the tag to extract the pod name, namespace and container name (plus the Docker container ID). For the calico-node container above, the tag is `containers.var.log.containers.calico-node-gwmct_kube-system_calico-node-2017627b57442d7d41d39bc8c2f62fd9979cd61ca2afbd53ba2c9f712aa7f311.log`, which yields pod name calico-node-gwmct, namespace kube-system and container name calico-node.
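To make both steps concrete, here is a Python sketch. It first approximates how in_tail expands `containers.*` into a tag, then applies the filter's tag_to_kubernetes_name_regexp to that tag. The pattern is the one from the config, translated to Python syntax: Ruby's `(?<name>...)` groups become `(?P<name>...)`, and the config file's `\\.` escapes become `\.` in a raw string. The helper names are hypothetical:

```python
import re

# tag_to_kubernetes_name_regexp from the filter section, in Python syntax.
TAG_RE = re.compile(
    r".+?\.containers\.(?P<pod_name>[a-z0-9]([-a-z0-9]*[a-z0-9])?"
    r"(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*)_(?P<namespace>[^_]+)_"
    r"(?P<container_name>.+)-(?P<docker_id>[a-z0-9]{64})\.log$"
)


def expand_tail_tag(tag_pattern: str, path: str) -> str:
    # Approximates in_tail: "*" becomes the file path with the leading "/"
    # dropped and the remaining "/" separators turned into ".".
    return tag_pattern.replace("*", path.lstrip("/").replace("/", "."))


def kubernetes_names(tag: str) -> dict:
    """Return the named groups extracted from a tag, or {} if it does not match."""
    match = TAG_RE.match(tag)
    if match is None:
        return {}
    return {key: match.group(key)
            for key in ("pod_name", "namespace", "container_name", "docker_id")}


path = ("/var/log/containers/calico-node-gwmct_kube-system_calico-node-"
        "2017627b57442d7d41d39bc8c2f62fd9979cd61ca2afbd53ba2c9f712aa7f311.log")
tag = expand_tail_tag("containers.*", path)
print(tag)
print(kubernetes_names(tag))
```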

Getting additional metadata

Besides the pod name, namespace and container name, I also needed other metadata, such as the host, deployment name and namespace_id. Getting these required some extra work as part of zlog-collector (see the links at the top of this post).

This extra metadata is retrieved by calling the Kubernetes API. Since the Kubernetes API requires authentication, you may be wondering how the plugin gets permission to call it.
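As an illustration of what such an API call returns, the sketch below pulls a few of those fields out of a Pod object as returned by `GET /api/v1/namespaces/{namespace}/pods/{name}`. In that object, `spec.nodeName` is the host and `metadata.uid` identifies the pod. The namespace's own `metadata.uid`, from `GET /api/v1/namespaces/{namespace}`, can serve as a namespace ID. A pod does not record its Deployment directly, so the function assumes the usual convention that a Deployment's ReplicaSet is named `<deployment>-<pod-template-hash>`. This is a hypothetical helper with made-up sample objects, not the plugin's or zlog-collector's actual code:

```python
def extra_pod_metadata(pod: dict, namespace_obj: dict) -> dict:
    """Hypothetical helper: collect extra fields from Pod and Namespace API objects."""
    meta = pod.get("metadata", {})
    labels = meta.get("labels") or {}
    template_hash = labels.get("pod-template-hash")
    deployment_name = None
    for owner in meta.get("ownerReferences") or []:
        # Assumption: Deployment-managed ReplicaSets are named
        # <deployment>-<pod-template-hash>, and the pod carries that hash as a label.
        if owner.get("kind") == "ReplicaSet" and template_hash:
            suffix = "-" + template_hash
            if owner.get("name", "").endswith(suffix):
                deployment_name = owner["name"][: -len(suffix)]
    return {
        "host": pod.get("spec", {}).get("nodeName"),
        "pod_id": meta.get("uid"),
        "namespace_id": namespace_obj.get("metadata", {}).get("uid"),
        "labels": labels,
        "deployment_name": deployment_name,
    }


# Trimmed-down, hypothetical API objects.
pod = {
    "metadata": {
        "name": "web-5d8f7c9b6-x2k4q",
        "uid": "pod-uid-1",
        "labels": {"app": "web", "pod-template-hash": "5d8f7c9b6"},
        "ownerReferences": [{"kind": "ReplicaSet", "name": "web-5d8f7c9b6"}],
    },
    "spec": {"nodeName": "node-1"},
}
namespace_obj = {"metadata": {"name": "default", "uid": "ns-uid-1"}}
print(extra_pod_metadata(pod, namespace_obj))
```

Pods owned by a DaemonSet (like calico-node) or created directly simply get no deployment name under this heuristic.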

To understand how the log collector gets access to the Kubernetes API, we need to look at the zlog-collector deployment file, zlog-collector.yaml. At the top, this file defines a service account, a cluster role and a cluster role binding:

```yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: zlog-collector
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: zlog-collector
rules:
  - apiGroups:
      - ""
    resources:
      - namespaces
      - pods
      - events
    verbs:
      - get
      - list
      - watch
  # Deployments are served by the "apps" API group, not the core ("") group.
  - apiGroups:
      - apps
    resources:
      - deployments
    verbs:
      - get
      - list
      - watch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: zlog-collector
roleRef:
  kind: ClusterRole
  name: zlog-collector
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: zlog-collector
    namespace: default
```

As you can see in the ClusterRole section, the file creates a cluster role named zlog-collector with permission to get, list and watch namespaces, deployments, pods and events. The ClusterRoleBinding then binds the zlog-collector service account to that cluster role. Finally, the daemonset names the service account in its pod template (serviceAccountName), so the zlog-collector pods run as that account and receive its get, list and watch permissions:

```yaml
---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: zlog-collector
spec:
  selector:
    matchLabels:
      app: zlog-collector
  template:
    metadata:
      labels:
        app: zlog-collector
        zebrium.com/exclude: ""
    spec:
      serviceAccountName: zlog-collector
      containers:
        - name: zlog-collector
          # ...
```
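Conceptually, the API server allows a request if any rule in a bound role covers the request's API group, resource and verb, either by listing them or through the wildcard `*`. The Python sketch below is a simplified model of that check, not the real authorizer. It ignores resourceNames, subresources and non-resource URLs, and the rule list is a hypothetical one built from the permissions described above. It also shows why the API group matters. Deployments are served by the `apps` group, so a rule that lists `deployments` only under the core (`""`) group does not grant access to them:

```python
from dataclasses import dataclass


@dataclass
class PolicyRule:
    api_groups: list
    resources: list
    verbs: list


def _covers(allowed: list, value: str) -> bool:
    return "*" in allowed or value in allowed


def allows(rules: list, api_group: str, resource: str, verb: str) -> bool:
    """Simplified RBAC check: a request is allowed if any single rule covers it."""
    return any(
        _covers(r.api_groups, api_group)
        and _covers(r.resources, resource)
        and _covers(r.verbs, verb)
        for r in rules
    )


read_only = ["get", "list", "watch"]
rules = [
    PolicyRule([""], ["namespaces", "pods", "events"], read_only),  # core group
    PolicyRule(["apps"], ["deployments"], read_only),               # apps group
]
print(allows(rules, "", "pods", "watch"))            # True
print(allows(rules, "apps", "deployments", "get"))   # True
print(allows(rules, "", "pods", "delete"))           # False: verb not granted
```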

But how exactly do these permissions get passed on to the programs running inside the zlog-collector container? I couldn't find this explained end to end anywhere, so I spent some time hacking around to understand how it works. Now I am sharing this information for free :-) It is nothing short of Kubernetes magic!

First, use the commands below to find the zlog-collector container and inspect its configuration:

```
root# docker ps | grep zlog-collector
a197a31fc989 zebrium/zlog-collector "/usr/local/bin/entr…" 8 days ago Up 8 days k8s_zlog-collector_zlog-collector-k86td_default_e417a451-31a9-4e57-8542-5adafa734bbb_0
root# docker inspect a197a31fc989 | less
...
        "HostConfig": {
            "Binds": [
                ...
                "/etc/localtime:/etc/localtime:ro",
                "/var/lib/kubelet/pods/e417a451-31a9-4e57-8542-5adafa734bbb/volumes/kubernetes.io~secret/zlog-collector-token-9dnd8:/var/run/secrets/kubernetes.io/serviceaccount:ro",
                "/var/lib/kubelet/pods/e417a451-31a9-4e57-8542-5adafa734bbb/etc-hosts:/etc/hosts",
                "/var/lib/kubelet/pods/e417a451-31a9-4e57-8542-5adafa734bbb/containers/zlog-collector/67f8d326:/dev/termination-log"
            ],
...
```

As you can see, the credentials are bind-mounted at /var/run/secrets/kubernetes.io/serviceaccount inside the zlog-collector container. This is the default location where Kubernetes client libraries look for in-cluster credentials. Let's see what is in the directory:

```
root# ls /var/lib/kubelet/pods/e417a451-31a9-4e57-8542-5adafa734bbb/volumes/kubernetes.io~secret/zlog-collector-token-9dnd8/
ca.crt namespace token
root# cat /var/lib/kubelet/pods/e417a451-31a9-4e57-8542-5adafa734bbb/volumes/kubernetes.io~secret/zlog-collector-token-9dnd8/token
291bnQubmFtZSI6Inpsb2ctYdf429sVjdG9yIiwia3ViZXJduZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9...
```
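A client library running in the container only needs those three files: `token`, `ca.crt` and `namespace`. Here is a minimal Python sketch of that loading step. The class and function names are hypothetical, and the demo writes placeholder files to a temporary directory so it can run outside a cluster:

```python
import tempfile
from dataclasses import dataclass
from pathlib import Path

# Default mount point for a pod's service-account credentials.
SERVICE_ACCOUNT_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


@dataclass
class InClusterCredentials:
    token: str
    ca_cert_path: Path
    namespace: str


def load_credentials(secret_dir: Path = SERVICE_ACCOUNT_DIR) -> InClusterCredentials:
    """Read the token and namespace files and locate ca.crt (hypothetical helper)."""
    ca_cert_path = secret_dir / "ca.crt"
    if not ca_cert_path.is_file():
        raise FileNotFoundError(f"missing CA certificate: {ca_cert_path}")
    return InClusterCredentials(
        token=(secret_dir / "token").read_text().strip(),
        ca_cert_path=ca_cert_path,
        namespace=(secret_dir / "namespace").read_text().strip(),
    )


# Demo outside a cluster: fake the mounted directory with placeholder contents.
with tempfile.TemporaryDirectory() as tmp:
    fake_dir = Path(tmp)
    (fake_dir / "token").write_text("placeholder-token\n")
    (fake_dir / "namespace").write_text("default\n")
    (fake_dir / "ca.crt").write_text("placeholder certificate\n")
    creds = load_credentials(fake_dir)
print(creds.namespace, creds.ca_cert_path.name)
```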

When a pod runs under a service account, Kubernetes bind-mounts that account's credentials (the CA certificate, a token and the pod's namespace) into the pod's containers. Programs inside can then use the certificate and token to call the Kubernetes API. It is Kubernetes magic!
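Putting the pieces together, a program inside the container can call the API using only the mounted files and the KUBERNETES_SERVICE_HOST and KUBERNETES_SERVICE_PORT environment variables that Kubernetes sets in every container. The Python sketch below uses hypothetical function names and only the standard library. It builds the kind of request a client library sends to fetch a Pod: the token goes in an `Authorization: Bearer` header, and the server certificate is verified against the mounted ca.crt. The real kubernetes_metadata plugin is written in Ruby and uses a Ruby Kubernetes client, so treat this as an illustration of the mechanism, not the plugin's code:

```python
import json
import os
import ssl
import urllib.request
from pathlib import Path

SERVICE_ACCOUNT_DIR = Path("/var/run/secrets/kubernetes.io/serviceaccount")


def pod_request(namespace: str, pod: str, token: str, env=os.environ) -> urllib.request.Request:
    """Build an authenticated GET for one Pod object."""
    # Kubernetes injects the API server's service address into every container.
    host = env["KUBERNETES_SERVICE_HOST"]
    port = env.get("KUBERNETES_SERVICE_PORT", "443")
    if ":" in host:  # bracket IPv6 literals for the URL
        host = f"[{host}]"
    url = f"https://{host}:{port}/api/v1/namespaces/{namespace}/pods/{pod}"
    return urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}", "Accept": "application/json"}
    )


def fetch_pod(namespace: str, pod: str, secret_dir: Path = SERVICE_ACCOUNT_DIR) -> dict:
    """Only works inside a pod whose service account is allowed to get pods."""
    token = (secret_dir / "token").read_text().strip()
    # Verify the API server's certificate against the mounted cluster CA.
    context = ssl.create_default_context(cafile=str(secret_dir / "ca.crt"))
    with urllib.request.urlopen(pod_request(namespace, pod, token), context=context) as resp:
        return json.load(resp)
```

Inside the zlog-collector container, `fetch_pod("kube-system", "calico-node-gwmct")` would succeed only because the ClusterRole grants `get` on pods. Without that binding, the API server would answer 403 Forbidden.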

If you want to see all of this in practice, you can download and install zlog-collector from https://github.com/zebrium/ze-fluentd-plugin. You can also sign up for a free Zebrium account to see what all the metadata looks like here. And please stay tuned for my next blog post, which will cover a bunch of interesting tips and tricks I've learned about Fluentd!

Posted with permission of the author: Brady Zuo @ Zebrium
