Introduction

This blog post is a road trip: picking up some friends and trying out some things. So do not expect a well-formulated business or technical problem that this post solves. It is about playing around with some stuff from the Kubernetes area, namely event-driven scaling with KEDA, Kyma, and Azure Functions running on Kubernetes.

Do you want to see where the road trip took us and which parts of the ride were rough and which were surprisingly smooth? Then let’s hit the road!

All the code is available in my GitHub repository: Link

Prerequisites

In case you want to follow along, you need a (small) Kubernetes cluster with Kyma running on it.

There are basically two versions of Kyma: the open-source version that you can install on any Kubernetes cluster and the managed version that is available on the SAP Business Technology Platform (including the trial). While the latter has some advantages (you do not need to set up Kubernetes and Kyma yourself, and it is free), it does not give you the full cluster access that is needed to install KEDA. The managed offering is therefore not an option.

Okay, so let us use a Kubernetes cluster on Azure or GCP and install Kyma on top as described in the documentation. And here we face the first rough part of our journey: you can install Kyma on those Kubernetes offerings, but again you have two options: install Kyma with or without your own domain.

The installation without your own domain (see https://kyma-project.io/docs/#installation-install-kyma-on-a-cluster) relies on xip.io as wildcard DNS provider. There is one problem with this: xip.io has been down since May 2021 and will not be available again.

So you can install Kyma 1.x without your own domain, but you then have no access to the Kyma console UI. An issue is open for this (https://github.com/kyma-project/kyma/issues/11924), and according to the Kyma Slack channel the situation will improve with the upcoming release of Kyma 2.x. Until then you must provide your own domain to get access to the Kyma console UI. From my point of view this raises the entry barrier for trying out open-source Kyma, but currently this is the way.

The Kyma documentation (https://kyma-project.io/docs/#installation-install-kyma-with-your-own-domain) is quite helpful here and proposes using freenom.com to get a free domain. If you do that, be aware that you will be the user of the free domain, not the owner, so freenom can revoke the domain at any point in time without prior notice (and it is not clear if, when and why this might happen).

I was a bit luckier as I could use Gardener to provide me a Kubernetes cluster and install Kyma 1.24 on top of it.

Phew ... first obstacle overcome, and we did not even really start the trip.

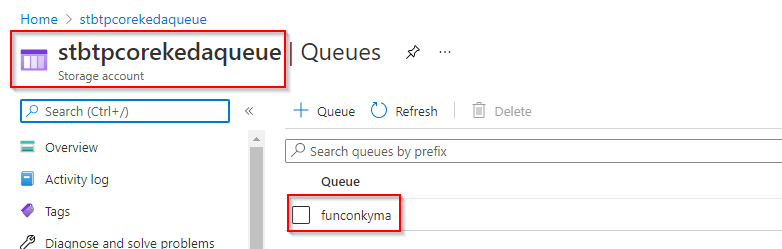

Next, I wanted to rely on the scalers that KEDA delivers out of the box (see KEDA scalers) and use an Azure Function as the scaled object. So I decided to take the easiest configuration that makes sense and used Azure Queue Storage (https://azure.microsoft.com/en-us/services/storage/queues/#overview) to provide messages as events for scaling. If you want to follow along, you consequently also need an Azure subscription (and not only for the queues, as we will see later). I created a queue named funconkyma to hold my messages.

Point of Interest No 1: The Subject to Scale aka Azure Function on K8s

In order to scale something in an event-driven way with KEDA, we need a workload in our cluster. I decided to use Kyma as an opinionated stack on top of Kubernetes, so the first idea would be to use Kyma functions for that. However, they rely either on NATS eventing or on the SAP Event Mesh, and neither is supported by the KEDA scalers.

As I "just" wanted to play around and not write a custom scaler, I decided to use another type of workload and as I am a fan of Azure Functions and you can deploy them to Kubernetes, I gave that a try.

As mentioned, I used Azure Queue Storage as trigger for the KEDA scaler as well as trigger for the Azure Function. So let's build the Azure Function.

I locally scaffolded a basic Azure Function in TypeScript that is triggered by a queue from that Azure Storage. No magic in there, I just wanted to see if things basically work. Here is the code:

    import { AzureFunction, Context } from "@azure/functions"

    const queueTrigger: AzureFunction = async function (context: Context, myQueueItem: string): Promise<void> {
        context.log('Queue trigger function processed work item: ', myQueueItem);
    };

    export default queueTrigger;

and the configuration:

    {
      "bindings": [
        {
          "name": "myQueueItem",
          "type": "queueTrigger",
          "direction": "in",
          "queueName": "funconkyma",
          "connection": "stbtpcorekedaqueue_STORAGE"
        }
      ],
      "scriptFile": "../dist/SampleQueueTriggerTS/index.js"
    }

Following my usual development loop, I first wanted to see if things work locally. Putting the storage connection string into local.settings.json, keying in npm run start and putting a message into the queue after the Function has started should be all that is needed for this check.

And here comes a small surprise: I had not specified a value for the setting AzureWebJobsStorage in my local.settings.json file, and this caused an error during start-up. Depending on the trigger, the Azure Functions runtime needs a connection to a storage account for its internal mechanics to work properly. To be precise, this is required for all triggers other than httpTrigger, kafkaTrigger and rabbitmqTrigger, as described in the Azure Functions documentation at https://docs.microsoft.com/en-us/azure/azure-functions/storage-considerations.
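
To make that rule a bit more tangible, here is a minimal TypeScript sketch that checks a set of settings against the bindings of a function.json. The helper missingSettings and the simplified Binding type are made up for illustration and are not part of the Azure Functions tooling; the list of exempt triggers is simply taken from the paragraph above.

```typescript
// Simplified shape of one entry in the "bindings" array of function.json.
interface Binding {
  name: string;
  type: string;
  direction: string;
  queueName?: string;
  connection?: string; // name of the setting that holds the connection string
}

// Triggers that work without AzureWebJobsStorage, according to the text above.
// Compared in lower case to stay independent of the exact casing.
const STORAGE_FREE_TRIGGERS = ["httptrigger", "kafkatrigger", "rabbitmqtrigger"];

// Hypothetical helper: lists the settings the bindings need but `values` lacks.
function missingSettings(
  bindings: Binding[],
  values: Record<string, string | undefined>
): string[] {
  const required: string[] = [];
  const need = (key: string): void => {
    if (required.indexOf(key) === -1) required.push(key);
  };
  for (const binding of bindings) {
    const type = binding.type.toLowerCase();
    const isTrigger = /trigger$/.test(type);
    if (isTrigger && STORAGE_FREE_TRIGGERS.indexOf(type) === -1) {
      need("AzureWebJobsStorage");
    }
    if (binding.connection) need(binding.connection);
  }
  return required.filter((key) => !values[key]);
}

// The queue binding from this post, with only its own connection configured.
const queueBindings: Binding[] = [
  {
    name: "myQueueItem",
    type: "queueTrigger",
    direction: "in",
    queueName: "funconkyma",
    connection: "stbtpcorekedaqueue_STORAGE",
  },
];
const localValues: Record<string, string | undefined> = {
  stbtpcorekedaqueue_STORAGE: "<connection string>",
};

// Contains "AzureWebJobsStorage", the setting that was missing at start-up.
const missing = missingSettings(queueBindings, localValues);
```

A check like this could run before building the container image, so that a missing setting shows up early instead of at start-up inside the cluster.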

Okay, but what does this mean for the deployment to Kubernetes? If you do not use an HTTP, Kafka or RabbitMQ trigger, you can containerize your Azure Function and deploy it to any Kubernetes cluster, but you will have implicit dependencies on Azure. In my opinion, these dependencies should be mentioned more clearly in the documentation. This is also something to take a closer look at when deploying Azure Functions with Azure Arc, as air-gapped scenarios might be an issue then (I have not investigated this further so far).

Consequently, I provided the storage (using the same account as for the messages) and things worked as expected: the function gets triggered and picks up the message from the queue.

Cool, so next let us put the Azure Function into a Docker container image. The Azure Functions CLI does a great job of supporting you via func init --docker-only, which creates the necessary Dockerfile as well as a .dockerignore file.

Nothing to change there so build the image and push it to Docker Hub. (I use a Makefile for that, as you can see in my GitHub repository).

Let's finish this part of the trip with two remarks:

- Set the auth level of the Azure Function to anonymous, as you will not deploy the app to Azure and consequently no function key will be created (see: https://docs.microsoft.com/de-de/azure/azure-functions/functions-create-function-linux-custom-image).

- If you want to switch off the Azure Function App landing page, set the parameter AzureWebJobsDisableHomepage to true.

Point of Interest No 2: Deployment to Kyma

As we have the Docker image in place, we can now deploy the Azure Function to Kyma. But wait, in Azure we have the App settings for configuring the connection to the storage account. How to do that when using a container?

The Azure Functions runtime expects the values for the settings as environment variables, so we can specify them in the env section of the container in our deployment YAML file.

I deployed the value for the connection string separately as a Kubernetes secret and referenced it in my deployment file.

Here is the content of the deployment file:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: queue-function
      labels:
        app: queue-function
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: queue-function
      template:
        metadata:
          labels:
            app: queue-function
        spec:
          containers:
            - image: <dockerhubaccount>/simple-queue-azfunc:0.0.1
              name: queue-function
              ports:
                - containerPort: 80
              env:
                - name: AzureWebJobsStorage
                  valueFrom:
                    secretKeyRef:
                      key: AzureWebJobsStorage
                      name: secretforqueuefunction
                - name: stbtpcorekedaqueue_STORAGE
                  valueFrom:
                    secretKeyRef:
                      key: stbtpcorekedaqueue_STORAGE
                      name: secretforqueuefunction
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: queue-function
      labels:
        app: queue-function
    spec:
      ports:
        - port: 80
          protocol: TCP
          targetPort: 80
      selector:
        app: queue-function
      type: ClusterIP
    status:
      loadBalancer: {}

And here is the secret:

    apiVersion: v1
    kind: Secret
    metadata:
      name: secretforqueuefunction
      labels:
        app: queue-function
    type: Opaque
    data:
      AzureWebJobsStorage: <Base64 encoded connection string>
      stbtpcorekedaqueue_STORAGE: <Base64 encoded connection string>
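
The link between the secret and the env section of the deployment can be sketched in a few lines of TypeScript. This is only an illustration under some assumptions: the helpers encodeSecretData and envFromSecret are hypothetical names, btoa is assumed to be available globally (browsers, recent Node.js versions), and the connection string is a placeholder.

```typescript
// Kubernetes expects the values under `data` of a Secret to be Base64 encoded.
// Assumption: btoa exists globally; it only handles Latin-1 input, which is
// enough for plain ASCII connection strings.
function encodeSecretData(values: Record<string, string>): Record<string, string> {
  const encoded: Record<string, string> = {};
  for (const key of Object.keys(values)) {
    encoded[key] = btoa(values[key]);
  }
  return encoded;
}

// One entry of the `env` list of a container that points to a Secret key.
interface EnvVar {
  name: string;
  valueFrom: { secretKeyRef: { name: string; key: string } };
}

// Builds one env entry per key, using the key as the variable name,
// which is the convention used in the deployment file of this post.
function envFromSecret(secretName: string, keys: string[]): EnvVar[] {
  return keys.map((key) => ({
    name: key,
    valueFrom: { secretKeyRef: { name: secretName, key } },
  }));
}

// Placeholder values; the real connection string comes from the storage account.
const settings: Record<string, string> = {
  AzureWebJobsStorage: "<connection string>",
  stbtpcorekedaqueue_STORAGE: "<connection string>",
};

const secretData = encodeSecretData(settings); // goes into `data` of the Secret
const envEntries = envFromSecret("secretforqueuefunction", Object.keys(settings));
```

Keeping the setting names identical in the secret and in the env section means the Azure Functions runtime finds them under exactly the names used in function.json.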

In addition, I created an apirule.yaml file, which is specific to Kyma, to get access to the Azure Function App landing page via HTTP.

    # Just to check that Function worker is running for queue
    apiVersion: gateway.kyma-project.io/v1alpha1
    kind: APIRule
    metadata:
      name: queue-function
    spec:
      gateway: kyma-gateway.kyma-system.svc.cluster.local
      rules:
        - path: /.*
          accessStrategies:
            - handler: noop
              config: {}
          methods:
            - GET
      service:
        host: queue-function
        name: queue-function
        port: 80

This is just to check that the app itself is up and running and to narrow down potential errors. I deleted the rule as soon as I had confirmed that.

After the successful deployment I have one pod up and running, and the connection to the Azure Storage is available via the environment variables.

We can check if a message in the queue is picked up and processed ... and it is.

So far we have an Azure Functions pod up and running that processes messages from the Azure Storage Queue. That is good, but the pod is always running (no scale to zero), and any scaling would be based on CPU and memory consumption via the basic Horizontal Pod Autoscaler, which is not a good fit for a queue-driven workload.

Let us improve this by putting some KEDA fairy dust on top and enable event-based scaling including a scale to zero.

Point of Interest No 3: KEDA on Kyma

First things first, we need to get KEDA into our cluster. The documentation describes different ways to do that (see https://keda.sh/docs/deploy/).

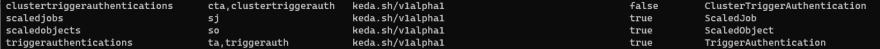

I decided to go for the installation via Helm chart, which is straightforward. The installation gives you

- a dedicated KEDA namespace

- two pods in the namespace

- several new CRDs that you can use for the event-driven scaling

The stage is set, so let’s scale our pod that contains the Azure Function.

The basis for this is the so-called ScaledObject CRD that defines which workload to scale. The definition consists of a generic part applicable to every scaler (see: https://keda.sh/docs/concepts/scaling-deployments/#scaledobject-spec). This part defines, for example, the cooldown period, i.e. how long to wait before scaling back down to the specified minimum replica count.
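
The idea behind the cooldown period can be written down as a tiny TypeScript function. This is a simplified model for illustration, not KEDA's actual implementation: the name mayScaleToZero is hypothetical, timestamps are plain milliseconds, and the default of 300 seconds is the value the KEDA documentation lists for cooldownPeriod.

```typescript
// Simplified model: the workload may go back to zero replicas only once the
// cooldown period has passed since a trigger was last reported as active.
function mayScaleToZero(
  lastActiveAtMs: number,
  nowMs: number,
  cooldownPeriodSeconds: number = 300
): boolean {
  return nowMs - lastActiveAtMs > cooldownPeriodSeconds * 1000;
}

// Example with a cooldown of 60 seconds and the last message seen at t = 0.
const cooldownSeconds = 60;
const after30s = mayScaleToZero(0, 30_000, cooldownSeconds); // false, still cooling down
const after90s = mayScaleToZero(0, 90_000, cooldownSeconds); // true, cooldown has passed
```

A shorter cooldown frees resources faster, while a longer one avoids starting and stopping pods for every small burst of messages.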

In addition, the definition contains a scaler-specific part. In our case this section follows the Azure Storage Queue specification for the scaler (see https://keda.sh/docs/scalers/azure-storage-queue/).

One parameter of the specification is the variable for the connection string that needs to be specified. But wait … we have that in our Kubernetes secret, so how can we get access to that?

KEDA provides a predefined way to access this type of data via additional CRDs, namely TriggerAuthentication (reusable within one namespace) and ClusterTriggerAuthentication (reusable across the cluster). These CRDs allow us to reference environment variables, secrets and pod authentication providers as the source for our data. The CRDs can then be referenced in the ScaledObject YAML file.

And this is exactly what I did: I referenced my Kubernetes secret in the TriggerAuthentication YAML file:

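    apiVersion: keda.sh/v1alpha1
    kind: TriggerAuthentication
    metadata:
      name: queue-trigger-auth
    spec:
      secretTargetRef:
        # "parameter" is the scaler's authentication parameter; the Azure Storage
        # Queue scaler expects the connection string under the name "connection".
        - parameter: connection
          name: secretforqueuefunction
          key: stbtpcorekedaqueue_STORAGE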
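
How the entries of secretTargetRef end up as parameters for the scaler can be modelled roughly like this in TypeScript. It is a hypothetical sketch of the mapping, not KEDA code: resolveAuthParams and the in-memory Secrets type are invented for illustration, and the parameter name connection is the one the KEDA documentation lists for connection string authentication of the Azure Storage Queue scaler.

```typescript
// One entry of spec.secretTargetRef in a TriggerAuthentication.
interface SecretTargetRef {
  parameter: string; // name of the scaler's authentication parameter
  name: string;      // name of the Kubernetes Secret
  key: string;       // key inside that Secret
}

// In-memory stand-in for the cluster: secret name -> key -> decoded value.
type Secrets = Record<string, Record<string, string>>;

// Hypothetical model: look up every referenced key and hand it to the scaler
// under the parameter name given in the TriggerAuthentication.
function resolveAuthParams(refs: SecretTargetRef[], secrets: Secrets): Record<string, string> {
  const params: Record<string, string> = {};
  for (const ref of refs) {
    const secret = secrets[ref.name];
    if (!secret || secret[ref.key] === undefined) {
      throw new Error(`Secret ${ref.name} has no key ${ref.key}`);
    }
    params[ref.parameter] = secret[ref.key];
  }
  return params;
}

const clusterSecrets: Secrets = {
  secretforqueuefunction: { stbtpcorekedaqueue_STORAGE: "<connection string>" },
};
const authParams = resolveAuthParams(
  [{ parameter: "connection", name: "secretforqueuefunction", key: "stbtpcorekedaqueue_STORAGE" }],
  clusterSecrets
);
// authParams.connection now holds the placeholder connection string.
```

The point of the indirection is that the ScaledObject never contains the secret value itself, only the name of the TriggerAuthentication.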

Then I used the TriggerAuthentication in my ScaledObject YAML file:

    apiVersion: keda.sh/v1alpha1
    kind: ScaledObject
    metadata:
      name: azure-queue-scaledobject
    spec:
      scaleTargetRef:
        name: queue-function
      cooldownPeriod: 60
      triggers:
        - type: azure-queue
          authenticationRef:
            name: queue-trigger-auth
          metadata:
            queueName: funconkyma
            connectionFromEnv: stbtpcorekedaqueue_STORAGE
            queueLength: "3"

Attention: you might google around to find some code snippets for KEDA. Be aware that the API of KEDA changed and some blog posts still reference the old one, which was keda.k8s.io/v1alpha1.
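
To get a feeling for what queueLength means, here is a simplified TypeScript model of the replica calculation. It is a sketch under assumptions, not the real algorithm: the Horizontal Pod Autoscaler additionally applies tolerances and stabilization windows, desiredReplicas is a hypothetical name, and the limits of 0 and 100 are the defaults the KEDA documentation lists for minReplicaCount and maxReplicaCount.

```typescript
interface ScalingLimits {
  minReplicaCount: number;
  maxReplicaCount: number;
}

// Simplified model: queueLength is the target number of messages per replica,
// so the wanted replica count is roughly ceil(messages / queueLength),
// clamped to the configured limits.
function desiredReplicas(
  messages: number,
  queueLength: number,
  limits: ScalingLimits = { minReplicaCount: 0, maxReplicaCount: 100 }
): number {
  if (messages <= 0) {
    return limits.minReplicaCount; // empty queue: back to the minimum (after the cooldown)
  }
  const wanted = Math.ceil(messages / queueLength);
  return Math.min(limits.maxReplicaCount, Math.max(limits.minReplicaCount, wanted));
}

// With queueLength "3" as in the ScaledObject of this post:
const forEmptyQueue = desiredReplicas(0, 3);     // 0
const forOneMessage = desiredReplicas(1, 3);     // 1
const forSevenMessages = desiredReplicas(7, 3);  // 3
const forHugeBacklog = desiredReplicas(1000, 3); // 100, capped by maxReplicaCount
```

A smaller queueLength therefore scales out more aggressively, while a larger one lets each pod work through more messages before another replica is added.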

Finally, I deployed those files to the cluster into the namespace where my Azure Function deployment lives

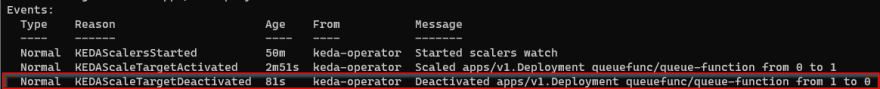

and … bahm, the pod nearly instantaneously scaled to zero! That looks good.

Next, I queued a message and the magic happened: KEDA picked up the message, scaled up the pod, and the pod processed the message as expected.

After the cooldown period, the pod scaled back down to zero.

No obstacles, this just worked. What a smooth finish of the road trip with Kyma, KEDA and Azure Functions.

Summary

In general, starting with KEDA is a really smooth experience. The concept follows a clean design and there is no big entry barrier to overcome in order to make use of it. Several scalers are available out of the box, which helps you try things out with a scaling trigger that you are already familiar with.

I would state that in case you need to handle event-driven scaling of workloads on Kubernetes, you should take a closer look at KEDA.

I think that KEDA would be a very nice addition to the opinionated stack of Kyma, covering the aspect of event-driven scaling including scale to zero. Concerning Kyma functions, there might be some work ahead as custom scalers are needed. The Prometheus scaler might also be worth a look as a feasible workaround.

Last but not least, Azure Functions on Kubernetes: due to some dependencies on Azure Storage or Microsoft/Azure-specific services, the deployment to Kubernetes comes with some flaws. So in case you want to adopt this scenario, some investigation is needed, especially with respect to the triggers and their implicit requirements.

That’s it for this road trip, let’s see where the next one will take us 😎
