Written by Ovie Okeh✏️

Ever heard the term “AI agent” and wondered what it means? Put simply, AI agents are digital bots that can interpret what we want, make autonomous choices, and complete tasks. You tell them what you need using programming languages or specific commands, and they use the tools available to them to carry out your instructions.

However, working with these AI agents often requires a sandbox environment for multiple reasons, including security, safety, and ease of deployment. This is where E2B comes into the picture.

In this post, we’ll discuss AI agents and how to use the E2B platform to build sandbox environments for them. To follow along with the code in this article, take a look at the GitHub repo.

What is E2B?

E2B is a platform that supports building autonomous AI agents. It lets you give your AI agent access to tools in a safe, sandboxed environment. With its sandbox feature, you can set up an environment with whatever tools and packages your AI agent requires for the tasks you want it to accomplish.

This is very helpful because you can effectively decouple your agent’s deployment from its working area. Any actions, like file system operations, are contained within a separate sandbox, so even if the agent takes rogue or unwanted actions, the deployment environment remains safe and stable.

Understanding AI-powered agents

So, what can these AI agents actually do? Well, almost anything, as long as it can be accomplished through code and combined with the right tools and data. A possible use case is an AI agent that periodically goes through your YouTube playlists and generates quizzes for you to test your memory and knowledge of the videos you’ve watched.

A simpler agent might watch a media folder and automatically add filenames to the images in the folder after viewing and processing the image content.

Advantages of using AI agents

Why would you build an AI agent instead of doing a task yourself or hiring someone else to do it? There are numerous situations where it might make sense to build one. A few examples are:

- Repetitive tasks: Some tasks may not necessarily be complex, but take a lot of manual effort. AI agents shine in this regard, especially if these tasks include categorization, summarization, or extracting insights

- Scalability: Since AI agents don’t need to sleep, eat, or be compensated, they can be scaled to be quite large and run for as long as needed. This means that with the right setup, you can accomplish quite a lot

- Adaptability: Some AI models can make decisions in controlled environments and process unstructured data. This opens the door for a wide variety of tasks and problems that could be solved with an agent

Building an AI agent

Now that we have established a basic understanding of AI agents and how E2B might help us build one, let’s go ahead and build a simple AI agent. This agent will:

- Watch for changes to a file containing questions

- Extract questions from the file whenever it’s updated

- Write the answers into a file that can be downloaded as long as the sandbox is running

It’s quite simple, but the goal is to show an example of how an agent might be built and to show how E2B augments this process.

Prerequisites

To follow along with this tutorial, you will need the following:

- Node.js v≥18

- OpenAI’s Node package and API keys

- An E2B API key (Grab a key here)

Setting up your environment

To start, initialize a Node.js project by running the following commands in your terminal:

mkdir scholar-agent && cd scholar-agent
npm init -y
npm install @e2b/sdk dotenv openai
mkdir lib
touch .env lib/index.js

So far, we’ve:

- Created a new directory (scholar-agent) and navigated into it

- Initialized a new Node.js project and installed the @e2b/sdk, dotenv, and openai packages

- Created a lib directory and two empty files:

  - .env (in the project root), where we store our API keys

  - lib/index.js, the main source code of the project

Adding agent functionality

With the project structure set up, it’s time to add the functionality. Add your OpenAI and E2B API keys to the .env file as OPENAI_API_KEY and E2B_API_KEY, and then open up lib/index.js so we can start building out the agent:

// lib/index.js
require("dotenv").config()

const { OpenAI } = require("openai")
const { Sandbox } = require("@e2b/sdk")
// ...continues below

Start by setting up the environment and importing the necessary modules:

- require("dotenv").config() initializes the environment variables from a .env file

- OpenAI for interacting with OpenAI's API

- Sandbox from @e2b/sdk for interacting with E2B’s sandboxes
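Since both API keys are read from the environment, it can help to fail early when one of them is missing. Here is a minimal sketch of such a check. The findMissingKeys helper is a hypothetical name of ours, not part of dotenv or either SDK:

```javascript
// Hypothetical helper: returns the names of required keys that are
// missing or blank in the given environment object.
function findMissingKeys(requiredKeys, env = process.env) {
  return requiredKeys.filter((key) => {
    const value = env[key]
    return typeof value !== "string" || value.trim() === ""
  })
}

// Example usage with the two variable names used in this article.
const missing = findMissingKeys(["OPENAI_API_KEY", "E2B_API_KEY"])
if (missing.length) {
  console.warn(`Missing environment variables: ${missing.join(", ")}`)
}
```

Passing the environment in as an argument keeps the helper easy to test without touching the real process.env.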

// ...continues from above
const INPUT_FOLDER = "input"
const INPUT_FILE = `${INPUT_FOLDER}/topics.txt`
const OUTPUT_FOLDER = "explanations"

async function fileSystemSetup(sandbox) {
  const directories = [OUTPUT_FOLDER, INPUT_FOLDER]

  for (const dir of directories) {
    try {
      await sandbox.filesystem.makeDir(dir)
    } catch (error) {
      console.error(`Error setting up directory ${dir}:`, error.message)
    }
  }
}
// ...continues below

We continue with more setup operations and define a bunch of variables to represent the files and folders the agent will operate on.

Then, we add a fileSystemSetup function that creates the necessary directories (INPUT_FOLDER and OUTPUT_FOLDER) using the sandbox.filesystem.makeDir method. We then catch and log any errors that might occur during this process.

Note how, instead of using the native Node fs library to create the directory, we’re creating the directory using the E2B Filesystem API. This provides:

- A hosted directory where you upload and download files for free

- A safe space to add/remove files without worrying about safety
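Because the sandbox is passed in as an argument, the setup logic can also be exercised locally without creating a real sandbox. The sketch below assumes a hypothetical in-memory stand-in (createFakeSandbox) that only mimics the filesystem.makeDir call used in this article. It is not part of the E2B SDK:

```javascript
// Hypothetical in-memory stand-in for a sandbox. It only implements the
// filesystem.makeDir method and records which directories were requested.
function createFakeSandbox() {
  const dirs = new Set()
  return {
    dirs,
    filesystem: {
      async makeDir(dir) {
        dirs.add(dir)
      },
    },
  }
}

// Same idea as fileSystemSetup, but it returns the failures instead of
// only logging them, so the caller can decide what to do next.
async function setUpDirectories(sandbox, directories) {
  const failed = []
  for (const dir of directories) {
    try {
      await sandbox.filesystem.makeDir(dir)
    } catch (error) {
      failed.push({ dir, message: error.message })
    }
  }
  return failed
}
```

Returning the list of failures is a design choice for this sketch. The article's version logs the error and carries on, which is fine for a demo.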

// ...continues from above
async function saveToFile(name, data, sandbox) {
  data = data.trim()

  const formattedName = name.replace(/\s+/g, "-").toLowerCase()
  const timestamp = new Date().getTime()
  const fileName = `/${timestamp}-${formattedName}.md`
  const filePath = `${OUTPUT_FOLDER}${fileName}`

  try {
    await sandbox.filesystem.write(filePath, data)
    console.info(`Data saved to ${filePath} successfully.`)
    return { filePath, fileName }
  } catch (err) {
    console.error("Error saving data to file:", err)
  }
}
// ...continues below

Next is a function, saveToFile, that saves the provided data to a file, which:

- Formats the filename by replacing whitespace with hyphens and converting it to lowercase

- Creates a new file path based on the original file name and the current timestamp

- Writes the data to the file system based on this path

We also catch and log any errors during this process.

Again, note that we created this file using E2B’s Filesystem API instead of Node’s fs.
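One thing to keep in mind is that saveToFile only replaces whitespace, so characters like ? from a question end up in the file name. If you want stricter names, a sketch along these lines could work. The toSafeFileName helper and its "untitled" fallback are our own assumptions, not something the article's code uses:

```javascript
// Hypothetical helper: builds a file name that contains only lowercase
// letters, digits, and hyphens, prefixed with a timestamp.
function toSafeFileName(name, timestamp = Date.now()) {
  const slug = name
    .toLowerCase()
    .replace(/[^a-z0-9]+/g, "-") // collapse runs of other characters into one hyphen
    .replace(/^-+|-+$/g, "") // drop leading and trailing hyphens

  // Fall back to a placeholder when nothing usable is left.
  return `${timestamp}-${slug || "untitled"}.md`
}

console.log(toSafeFileName("What is an AI agent?", 1700000000000))
// prints "1700000000000-what-is-an-ai-agent.md"
```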

Connecting to an LLM

So far, we haven’t included any AI in our code and it can’t really do much. Let’s fix this by adding a function that will allow us to query OpenAI’s GPT-3.5 model for answers to the questions in our input file:

// ...continues from above
async function answerQuestionWithGPT(question) {
  const openaiClient = new OpenAI({
    apiKey: process.env.OPENAI_API_KEY,
  })

  try {
    const gptResponse = await openaiClient.chat.completions.create({
      messages: [
        {
          role: "system",
          content: `
            - You are the world's best tutor
            - You are the best at explaining complex concepts in simple terms
            - You will be asked questions about a wide range of topics
            - Your job is to answer the questions in a way that is easy to understand
            - Your answers should be informative, fun, and engaging
          `,
        },
        {
          role: "user",
          content: question,
        },
      ],
      model: "gpt-3.5-turbo",
    })

    // Return the text of the first choice, if there is one
    return gptResponse?.choices?.[0]?.message?.content?.trim()
  } catch (error) {
    console.error("Error answering question:", error.message)
    return ""
  }
}
// ...continues below

This answerQuestionWithGPT function accepts a text question as an argument, initializes the OpenAI API client, and then queries the Chat Completions API with a system prompt and the question argument. At the end, we simply return the trimmed response from GPT-3.5.

In this instance, our usage of AI is very simple. However, you could take this much further by giving the model access to tools like a code interpreter, API integrations, etc. This would allow for a wider variety of tasks to be handled by the agent.

Observing the agent’s output

You can observe the agent's activity through the console logs sprinkled all over the code and by checking the output in the explanations folder within the sandbox. To access the explanations sandbox folder, you can use the Filesystem API to read the generated files:

// ...continues from above
async function viewFiles({ path, sandbox, download = true }) {
  try {
    const files = await sandbox.filesystem.list(path)
    const decodedFiles = []

    if (download && files?.length) {
      for (const file of files) {
        const filePath = `${path}/${file.name}`
        const fileData = await sandbox.filesystem.read(filePath)
        decodedFiles.push({ [filePath]: fileData })
      }
    }

    return {
      files,
      decodedFiles,
    }
  } catch (error) {
    console.error("Error viewing files:", error.message)
  }
}
// ...continues below

This function will let us view the output of the agent later. We list all the files in a directory, and if there are files, we read and save all of them into an array.

It’s a simple way of doing it as we are simply accessing the file system via the E2B Filesystem APIs and outputting the contents in the console.

A more robust way would be to set up a server within the sandbox and access that server through the Sandbox URL. Maybe this is an exercise for you? Hint: The E2B Process API might come in handy.
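As a starting point for that exercise, here is a rough sketch of the server side only, written with Node's built-in http module. It assumes Node is available inside the sandbox and leaves open how you start the script there and which port you expose. The createExplanationServer and resolveInside names are hypothetical:

```javascript
const http = require("node:http")
const fs = require("node:fs/promises")
const path = require("node:path")

// Resolve a requested file name inside rootDir, or return null when the
// request would escape the directory (e.g. "../secret").
function resolveInside(rootDir, requestedName) {
  const root = path.resolve(rootDir)
  const target = path.resolve(root, requestedName)
  return target === root || target.startsWith(root + path.sep) ? target : null
}

// Build (but do not start) a server that returns the contents of files in rootDir.
function createExplanationServer(rootDir) {
  return http.createServer(async (req, res) => {
    try {
      const { pathname } = new URL(req.url, "http://localhost")
      const name = decodeURIComponent(pathname.slice(1))
      const filePath = name ? resolveInside(rootDir, name) : null

      if (!filePath) {
        res.writeHead(400).end("Bad request")
        return
      }

      const body = await fs.readFile(filePath, "utf8")
      res.writeHead(200, { "Content-Type": "text/markdown; charset=utf-8" }).end(body)
    } catch {
      // Malformed URLs and missing files both end up here.
      res.writeHead(404).end("Not found")
    }
  })
}

// Example usage (the port number is an arbitrary choice):
// createExplanationServer("explanations").listen(3000)
```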

// ...continues from above
async function agent(sandbox) {
  try {
    const inputFile = await sandbox.filesystem.read(INPUT_FILE)
    const questions = inputFile
      .split("\n")
      .map((q) => q.trim())
      .filter(Boolean)

    for (const question of questions) {
      const explanation = await answerQuestionWithGPT(question)

      if (explanation) {
        // saveToFile returns undefined when the write fails, so check before using it
        const saved = await saveToFile(question, explanation, sandbox)
        if (saved) {
          console.info(`Saved explanation to ${saved.filePath}`)
        }
      }
    }

    if (questions.length) {
      const { files, decodedFiles } = (await viewFiles({ path: OUTPUT_FOLDER, sandbox })) ?? {}
      console.info(`Files in ${OUTPUT_FOLDER}:`, files)
      console.info(`Decoded files in ${OUTPUT_FOLDER}:`, decodedFiles)

      // Clear the input file so the same questions aren't answered again
      await sandbox.filesystem.write(INPUT_FILE, "")
    }
  } catch (err) {
    console.error("Error in agent function:", err)
  }
}
// ...continues below

Building the agent’s core function

Let’s move on to the core function of the agent. Here, we read the input file from the sandbox’s filesystem and format the contents into an array of trimmed questions. We answer each question with the answerQuestionWithGPT function above and save the response in the sandbox’s filesystem.
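The parsing step is easy to pull out into its own function and test in isolation. Here is a minimal sketch. The parseQuestions name is hypothetical, and skipping duplicate lines and handling Windows line endings are small additions that the agent function above doesn't make:

```javascript
// Hypothetical helper: turns the raw contents of the input file into a list
// of trimmed, non-empty, unique questions, preserving their order.
function parseQuestions(text) {
  const seen = new Set()
  const questions = []

  for (const line of text.split(/\r?\n/)) {
    const question = line.trim()
    if (!question || seen.has(question)) continue
    seen.add(question)
    questions.push(question)
  }

  return questions
}

console.log(parseQuestions("\n  What is an AI agent?  \n\nWhy do we have leap years?\n"))
// prints [ 'What is an AI agent?', 'Why do we have leap years?' ]
```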

At the end, we view the output explanations folder to inspect the agent’s output. Then, we clear the input file to ensure that those questions aren’t answered again.

// ...continues from above
async function main() {
  try {
    if (!process.env.OPENAI_API_KEY) {
      throw new Error("OPENAI_API_KEY is not set in environment variables")
    }

    if (!process.env.E2B_API_KEY) {
      throw new Error("E2B_API_KEY is not set in environment variables")
    }

    const sandbox = await Sandbox.create({ template: "base" })
    console.info(`Sandbox URL: https://${sandbox.getHostname()}`)

    await fileSystemSetup(sandbox)

    const inputFolderWatcher = sandbox.filesystem.watchDir(INPUT_FOLDER)

    inputFolderWatcher.addEventListener((event) => {
      if (event.operation !== "Write") {
        return
      }

      agent(sandbox).catch((err) => {
        console.error("Error running agent:", err)
      })
    })

    await inputFolderWatcher.start()

    setTimeout(async () => {
      await sandbox.filesystem.write(
        INPUT_FILE,
        `
        What is an AI agent?
        How do butterflies get their colors?
        Why do we have leap years?
        `
      )
    }, 1000)
  } catch (error) {
    console.error("Error running bot:", error.message)
  }
}

main().catch((err) => {
  console.error("Unhandled error:", err)
})
// end of file

Finally, we get to the main flow of the agent. I like to think of this as the thinking brain of the agent. Here, we:

- Check to ensure that the OpenAI and E2B API keys are available in the environment

- Create a new E2B sandbox and log the sandbox URL to the console

  - N.B., you can use this URL to access the sandbox’s file system, process, etc.

- Set up the working folders within the sandbox and add an event listener to the input folder. This event listener is triggered every time an fs (filesystem) operation happens within the folder (e.g., when we update the input/topics.txt file)

- Run the agent function within this event listener so that new questions are processed as they’re added, but only on Write events

- Finally, update the input file with some test questions to trigger the bot
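One subtlety with this flow is that several Write events can arrive close together, and clearing the input file at the end of a run is itself a write to the watched folder. That may start overlapping runs of the agent. A possible way to guard against this is to run the agent through a small wrapper that allows only one run at a time and queues at most one follow-up. The createSerialRunner helper below is a hypothetical sketch, not part of the E2B SDK:

```javascript
// Hypothetical helper: wraps an async task so that only one run is active
// at a time. Calls made during a run are coalesced into a single follow-up run.
function createSerialRunner(task) {
  let running = false
  let rerunRequested = false

  return async function run() {
    if (running) {
      rerunRequested = true
      return
    }

    running = true
    try {
      do {
        rerunRequested = false
        await task()
      } while (rerunRequested)
    } finally {
      running = false
    }
  }
}

// Example wiring with the names used in this article:
// const runAgent = createSerialRunner(() => agent(sandbox))
// inputFolderWatcher.addEventListener((event) => {
//   if (event.operation === "Write") runAgent().catch(console.error)
// })
```

The follow-up run is cheap here because the agent finds an empty input file and exits without calling the model.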

Running the AI agent

With the main logic and functions of the AI agent in place, we can run it and see it in action. Run the agent by executing node lib/index.js in your terminal. This will kickstart the main function, setting up the sandbox environment and preparing the agent for processing questions.

Once the agent is running, it will monitor the input/topics.txt file for changes. When new questions are added to this file, the agent will process them, generating answers using the GPT model.

Note: E2B sandboxes can currently run for only 24 hours. There are plans to make this indefinite, but for now, you can resume a sandbox at any time using its ID with await Sandbox.reconnect(sandbox.id).

Debugging

The E2B SDK requires Node.js v18 or later. If you see the following error while trying to run the agent, you’re likely on an older version and should switch to Node.js v18 or later:

Error running bot: Headers is not defined
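To make this failure easier to spot, you could check the Node.js version yourself before creating the sandbox. This is a small sketch using process.versions.node. The isSupportedNode helper is a hypothetical name:

```javascript
// Hypothetical helper: returns true when the given Node.js version string
// (e.g. "18.17.1") has a major version of at least minimumMajor.
function isSupportedNode(version = process.versions.node, minimumMajor = 18) {
  const major = Number.parseInt(version.split(".")[0], 10)
  return Number.isInteger(major) && major >= minimumMajor
}

// Example usage at the top of lib/index.js:
// if (!isSupportedNode()) {
//   console.error(`Node.js v18 or later is required, found v${process.versions.node}`)
//   process.exit(1)
// }
```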

Conclusion

With the right use case, models, and your imagination, AI agents can be used to accomplish almost any task possible in the digital space. What we’ve covered in this tutorial is just a glimpse of what you can do, especially when taking advantage of E2B’s features.

If you would like to explore the topic of building AI agents further, here are some options you could explore:

- Expand the agent's capabilities: Consider integrating more complex AI models or additional APIs to enhance the agent's functionality. For instance, connecting to external data sources for more comprehensive answers or using E2B’s custom sandboxes that allow you to add even more tools like Code Interpreter to your agents

- Implement a user interface: To make the agent easier to interact with, you could build a UI or CLI for it

- Continuous improvement: AI models are being improved every day. Keep an eye out for interesting ones and see what you can build with them

Hopefully, this tutorial was a good intro to the world of AI agents. All the code referenced can be downloaded here.

Are you adding new JS libraries to build new features or improve performance? What if they’re doing the opposite?

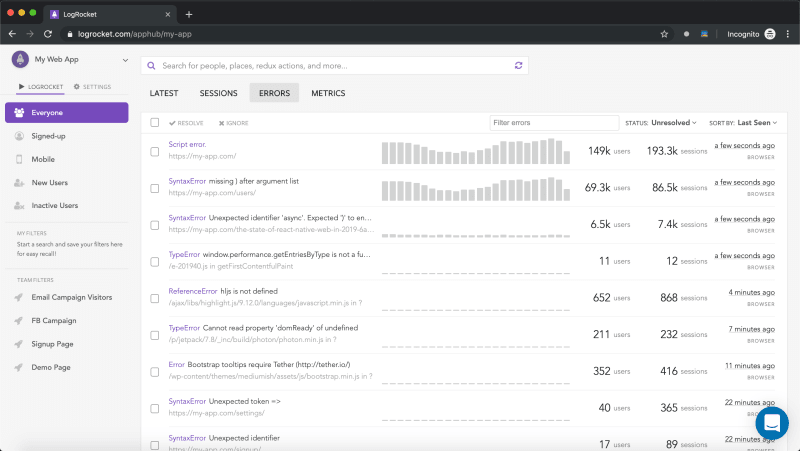

There’s no doubt that frontends are getting more complex. As you add new JavaScript libraries and other dependencies to your app, you’ll need more visibility to ensure your users don’t run into unknown issues.

LogRocket is a frontend application monitoring solution that lets you replay JavaScript errors as if they happened in your own browser so you can react to bugs more effectively.

LogRocket works perfectly with any app, regardless of framework, and has plugins to log additional context from Redux, Vuex, and @ngrx/store. Instead of guessing why problems happen, you can aggregate and report on what state your application was in when an issue occurred. LogRocket also monitors your app’s performance, reporting metrics like client CPU load, client memory usage, and more.

Build confidently — start monitoring for free.

Top comments (0)