In my previous post on using Redis as a Database, I mentioned how I’m using it to store and retrieve tweets for Visualizer, my pet project.

Now I want to show some performance characteristics of Visualizer.

Test Setup

The tests were performed on my M1 Pro MacBook Pro with 32 GB of RAM, connected to Wi-Fi and running on battery power. I’m running both Visualizer microservices in Release mode from JetBrains Rider, Redis Stack from the command line and the Visualizer frontend in VS Code, all on the current version of macOS Ventura.

Data Ingestion

Here’s the code to store a single tweet in Redis

This runs in a dedicated microservice and is executed every time a new tweet is retrieved from Twitter’s sample stream. In a future post I’ll present the architecture of Visualizer.
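To make the write path concrete, here is a minimal Python sketch, assuming each tweet is stored as a RedisJSON document (the JSON module ships with Redis Stack) under a hypothetical `tweet:<id>` key. The function name and key scheme are illustrative assumptions rather than Visualizer’s actual code, and the sketch only builds the command instead of talking to a server.

```python
import json


def build_store_tweet_command(tweet: dict) -> list[str]:
    """Build a RedisJSON JSON.SET command that stores one tweet.

    Assumption (hypothetical): each tweet is saved as a JSON document
    under the key "tweet:<id>", written at the root path "$".
    """
    key = f"tweet:{tweet['id']}"  # hypothetical key naming scheme
    return ["JSON.SET", key, "$", json.dumps(tweet)]


# With redis-py and a running Redis Stack instance, the command could be sent as:
#   redis.Redis().execute_command(*build_store_tweet_command(tweet))
```

Keeping the key and path choices in one helper makes the shape of every write easy to inspect, which helps when reading the command stream in RedisInsight.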

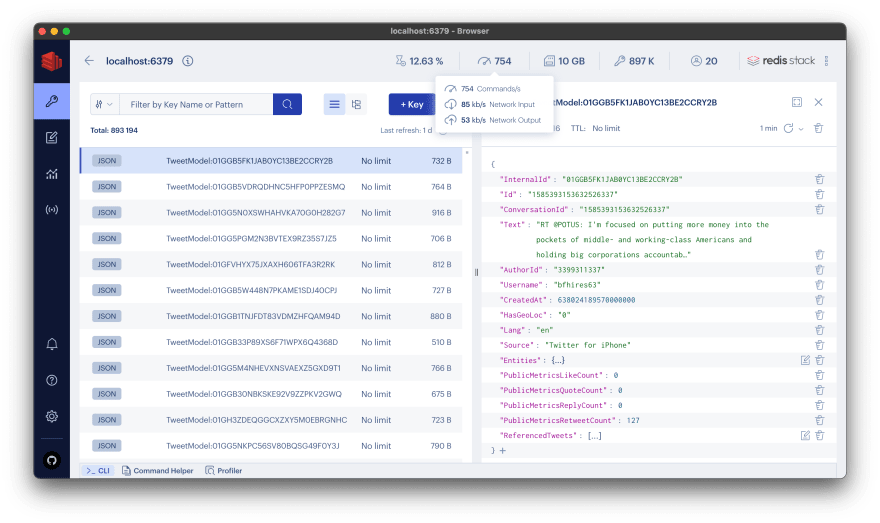

RedisInsight shows that, with my current setup, I can send around 700 commands per second, most of them storing tweets.

Data Retrieval

Retrieving data is handled by another microservice, which only reads from Redis.

Using K6, I wrote a script that retrieves 10 tweets from the GraphQL API:

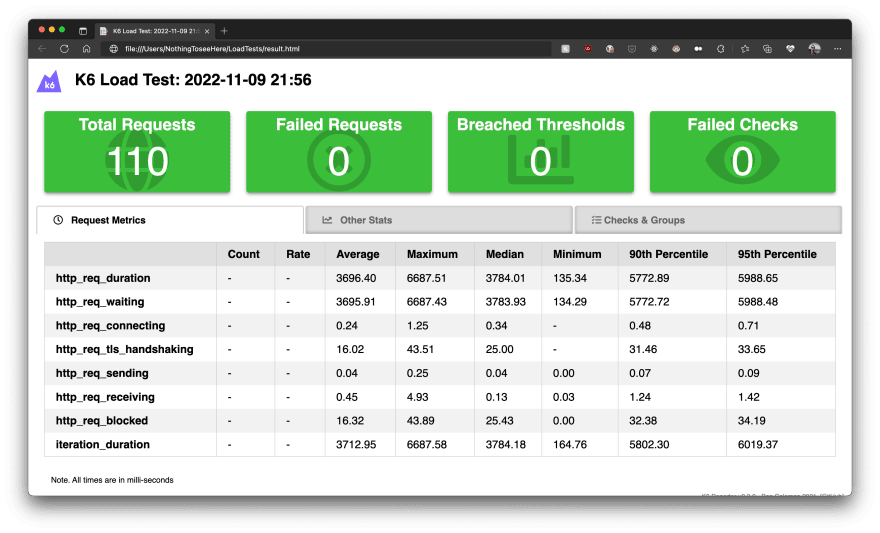

The results are… a bit disappointing.

Over a period of 6 seconds, the 110 requests took ~3.7 seconds each on average. I definitely need to investigate that. I suspect my deserialization is inefficient and that the GraphQL API adds some inherent overhead.

Fortunately, K6 has native support for Redis 😎, so I wrote another K6 script that does essentially what the Visualizer microservice does behind the scenes: it builds and sends a command to Redis that retrieves data from a given index, sorted and paginated. This time, however, there is no deserialization or GraphQL overhead.
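A sorted, paginated read like this maps naturally onto RediSearch’s FT.SEARCH command, whose SORTBY and LIMIT options handle ordering and paging. The minimal Python sketch below only builds the argument list; the helper name, the index name and the sort field are hypothetical, and I’m assuming the sort field is declared SORTABLE in the index schema, as RediSearch recommends for fast sorting.

```python
def build_search_command(index: str, sort_field: str, offset: int = 0,
                         count: int = 10, descending: bool = True) -> list[str]:
    """Build an FT.SEARCH command that returns one sorted page of documents.

    "*" matches every document in the index. SORTBY orders the results by
    sort_field (assumed SORTABLE); LIMIT <offset> <count> selects the page.
    """
    if offset < 0 or count < 0:
        raise ValueError("offset and count must be non-negative")
    return [
        "FT.SEARCH", index, "*",
        "SORTBY", sort_field, "DESC" if descending else "ASC",
        "LIMIT", str(offset), str(count),
    ]


# Hypothetical usage: the third page of 10 tweets, newest first.
page3 = build_search_command("idx:tweets", "created_at", offset=20, count=10)
```

Because FT.SEARCH does the sorting and paging inside Redis, the client only receives the 10 documents it asked for.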

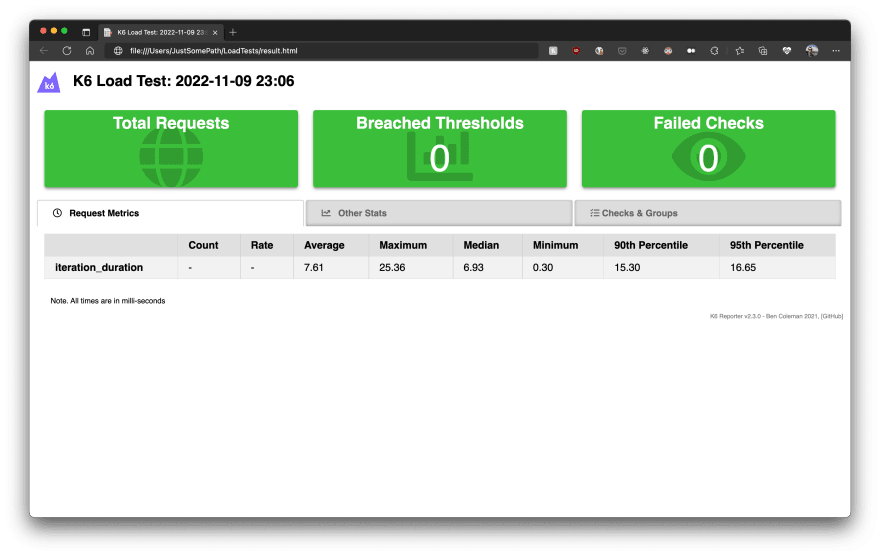

And the results are much better:

FYI, iteration_duration includes setup, request and teardown. This time around, the average request duration was about 7.6 milliseconds.

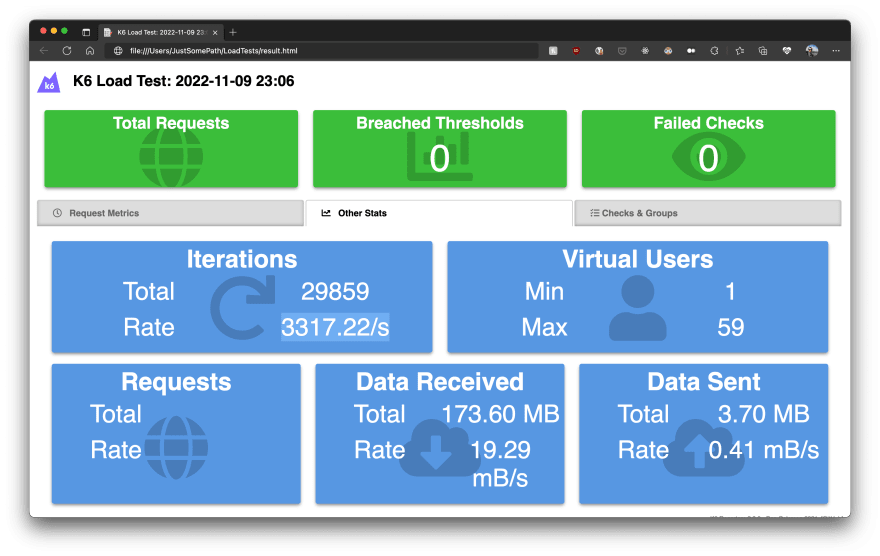

With up to 60 concurrent virtual users (a K6 term), Redis served over 29,000 requests in 9 seconds, which works out to around 3,300 requests per second.

Conclusion

The system performed excellently on my battery-powered MacBook Pro, storing hundreds of tweets per second.

The read performance through my Visualizer microservice is disappointing, and I definitely need to investigate this further. Fortunately, the problem doesn’t seem to be with Redis, which means I have a chance to improve it.

Stay tuned for more updates from my journey in Redisland.

If you liked this post, let me know on Twitter 😉 (@MunteanMarius), give it a ❤️ and follow me to get more content on Redis, Azure, MAUI and other cool stuff.
