In the last 8 months, the frontend team at Tinybird has revamped the way we manage our code base. We made 3 big changes (plus a bunch of little ones) that have shortened our frontend continuous integration (CI) execution time despite a massive increase in code size and test coverage.

In this post, I’ll explain how we used a combination of a monorepo, Turborepo, and pnpm to modernize our frontend stack to ship more code, faster.

What is Tinybird?

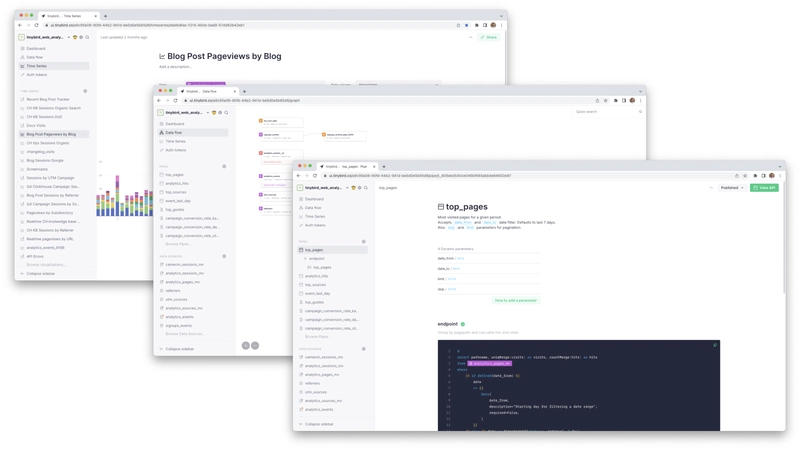

Tinybird is a serverless real-time database for developers and data engineers. With Tinybird, you can ingest data from multiple sources at scale, query it with SQL, and instantly publish your queries as low-latency, parameterized APIs to power your app dev. You can sign up for free if you want to try that out.

Behind all the magic is a devoted engineering team that’s shipping code as fast as we can. We follow continuous delivery practices; in a good week, we’ll push over 100 changes to our production codebase. Fast CI times matter. #speedwins.

Our backend team recently detailed how they cut their CI pipeline execution time in half. This was a big win for us. Tinybird is a data platform, so the backend is the biggest piece of our product and the lion’s share of the codebase. It’s what makes the engine roar.

But while Tinybird might be one of the most powerful analytics tools on the planet, the special sauce in the recipe is undoubtedly the developer experience. Developers choose Tinybird because, as we’ve been told, we “make OLAP feel like Postgres.”

The frontend UI takes center stage in the Tinybird developer experience, and we needed to make similar changes to our frontend CI to keep up with the backenders. Here’s how we did it.

Stage 0: Simple code, no type safety, no e2e tests

June 2022

When we started building the Tinybird UI, things were simple. We had 3 pieces of code:

- A main UI app

- A styles library

- An API wrapper library

…all written in Javascript using React. We had a few basic unit tests, but zero end-to-end (e2e) testing. Don’t judge; there were some solid benefits:

- Very fast build times

- Zero time-wasting boilerplate

- We could be hacky and agile and get away with it

Of course, the project grew. More features, more UI paths. We ended up with a lot of duplicated components and very little standardization.

Plus, the team grew. Fresh eyes on messy code led to a lot of head-scratching and Slack threading. We didn’t have any type safety, and we lacked good test coverage. It was easy to make mistakes and introduce errors.

Dev speed slowed down since our team couldn’t rely on guardrails from type safety or CI. Shipping to production felt… risky.

To make matters worse, our code was split across multiple repositories. This gave us a number of fits:

- No ability to track issues in a central location. Team members had to track their tasks across multiple GitLab projects, which created frustration.

- Minor changes to multiple packages required changes across multiple repos. This meant multiple merge requests that needed their own approvals.

- Duplicated code. Merging to multiple repos was annoying, so we cut some corners to avoid it, including adding the same code to multiple places.

All in all, our code and process may have worked for a seed-stage startup, but it was time for us to grow up a bit and bring the consistency and safety expected from an enterprise-grade data platform.

Stage 1: Type safety, standardization, increased test coverage

September 2022

After finding ourselves in a classic startup code-mire, we decided to fix some things:

- We started using [TypeScript](https://www.typescriptlang.org/), migrating our packages and libraries (but not yet the main app). From this point on, every new package has been fully written in Typescript. At the same time, we started typing old parts of the codebase progressively.

- We created a standardized components library so we could start reusing code and enforcing more consistency.

- We increased unit test coverage. We ended up with about 2,200 unit tests over nearly 160,000 LOC.

- We added some e2e tests. Specifically, we started testing critical paths, sign-up and login flows, and some of the fundamental aspects of Tinybird usage like creating Workspaces, Data Sources, and Pipes. We rely on Cypress to create our e2e tests.

At this point, we ended up with a much larger code base and many more tests. Critically, we weren’t caching any tests in our CI, and only a few in our local dev. Every new production build would run all the tests.

So, while we had added the guardrails to keep us from careening off course, our CI execution times slowed waaayyyy down. In fact, they more than doubled, from 9 minutes to almost 20.

Stage 2: Monorepo, Turborepo, pnpm

February 2023

Stage 1 brought a big quality boost to the project at the expense of our CI execution times. We knew the project was only going to keep growing, and we were pursuing even higher test coverage. That left us staring down the barrel of 20-30+ minutes of CI time. At that rate, we literally wouldn’t have enough minutes in a day to ship all we wanted to ship.

So, we made 3 big changes to our stack:

- We consolidated our code into a monorepo.

- We used Turborepo to handle tasks and cache test results.

- We used pnpm to install our packages.

Monorepo

Before these changes, our libraries and packages were all in individual repositories. So we had many different build processes to maintain, each individually tested, and we couldn’t cache unit test results across different repos.

We switched to a monorepo and brought all the frontend code - the app, the libraries, and the packages - into a single repository. This consolidated our code and issue tracking, removed code duplicates, and simplified the merge request process.

But, a monorepo can come with its own set of problems. Without a smart approach, it won’t scale. We could end up with a load of CI tasks and actually go backward in terms of CI pipeline time.

That’s why we used Turborepo.

Turborepo

Turborepo is an incredible, high-performance build system for JavaScript/TypeScript monorepos. It handles every package task and caches the logs and the outputs from them. With Turborepo, we can intelligently build only the pieces of code that have changed, and aggressively cache unit tests results across the different code bases that aren’t impacted by the changes we’ve recently committed.

We’ve started using Turborepo just to handle all the CI tasks (test, build, and lint). Turborepo’s smart protocol made this really easy, and it made job management much more efficient. There was almost zero configuration, and the default templates were almost perfect out of the box.

At first, we only used cache in local development, but shortly thereafter we implemented cache in the CI.

Right now, we use GitLab CI for the cache. Turborepo generates some artifacts (builds & meta), and our GitLab CI saves these artifacts into Google Cloud Storage. We generate a key associated with the cache files based on the current task and step in the CI, and we use that key in future deployments. In this way, we can incrementally build the app, only rebuilding the parts that change.

This process isn’t perfect out of the box, and it took some work to get the config right. Notably, we currently aren’t using Vercel remote cache (which would work out of the box). A few of our projects do run on Vercel, so in the future, we think we can make some efficiency gains by switching to the Vercel remote cache.

pnpm

As its name suggests, pnpm is a performant package manager. And it works great with our monorepo structure.

When we switched to a monorepo and had multiple apps in one place, some apps had the same dependencies but were pinned to different versions. So npm became really slow, as it was prone to errors while trying to resolve complex dependency trees. We tried implementing npm cache without any benefit; it didn’t improve our total build time at all.

We also tried yarn, but it too was running into dependency resolution conflicts.

pnpm has a much faster and more consistent dependency resolution algorithm than standard npm and yarn, so it works much more quickly and efficiently in re-using dependencies across the many projects in a monorepo. In our implementation, pnpm never hit any resolution errors.

We have a total of five CI pipelines. With npm install, each pipe took 2+ minutes to run even with caching. With pnpm and its caching, we cut those build times down to 10 seconds. That means that, across our five pipelines, we’ve cut our times down from 10+ minutes to just 50 seconds with pnpm.

Along with these 3 major changes, we further migrated our code to Typescript and added over 600 additional unit tests to support multiple major new features and some internal applications that also live in the repo. We now have over 400 tests in our new packages and 3,000 in the main app. Turborepo handles both.

Even with all these new additions, the uncached CI time clocked in at just 15 minutes, a 25% improvement over the prior stage despite a big increase in test coverage and LOC.

But with caching and our trifecta of updates, we shaved off another ~8 minutes for an average total CI time of about ~7 minutes (depending on what’s cached).

All told we’ve eliminated about 5 hours of CI time every week. With the combination of Typescript, a monorepo structure, Turborepo, and pnpm, we ship faster and with more confidence. Faster development times lead to faster feedback from our users which leads to even faster development times for new features as the development flywheel accelerates.

Next Steps

Of course, good engineering never sleeps. In the future, we will focus on adding even more tests covering more features. And we’ll make some additional changes to our codebase, namely:

- Migrating everything to TypeScript

- Splitting our frontend from our backend repo to simplify the CI process so that frontend changes don’t impact backend deployments

- Migrating from vanilla React to Next.js As we make improvements, we’ll write about them.

Summary

We significantly improved our CI execution time and code management of our frontend code base by implementing a combination of a monorepo, Turborepo, and pnpm. These changes allowed us to ship code faster and with more confidence, despite a massive increase in our code size and test coverage.

You can apply the lessons learned from us by evaluating your own codebases and considering the adoption of a monorepo structure, leveraging Turborepo for efficient task handling and caching, and using pnpm for faster package management. By doing so, you can also optimize your CI pipelines and enhance your overall development experience.

For those interested in learning more about building better CI pipelines for your front ends, we recommend you check out our other blog post on this subject: Better CI Pipeline with Data. Also, you can explore these resources:

Monorepo Best Practices:

- Scaling a Monorepo at Google

- Monorepos: Please don't!

- Awesome Monorepo: A curated list of resources related to monorepos

- Benefits and challenges of monorepo development practices

Turborepo Documentation:

pnpm Guides:

More about Tinybird

Interested in learning more about Tinybird, the real-time database for developers? Follow us on Twitter or LinkedIn, join our growing Slack community, or check out our blog.

Top comments (0)