Notes from Week 1

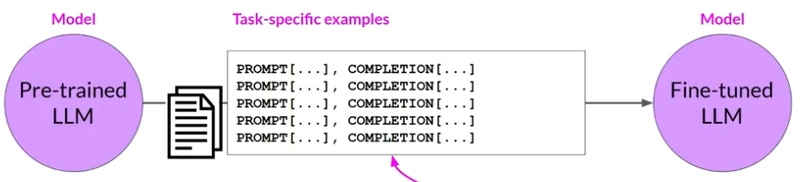

Fine Tuning

Fine tuning is the process of training the model with task-specific examples. This is done via supervised learning by providing samples of prompts and desired completions.

Instruction Fine Tuning

Instruction fine tuning is a specific method for fine tuning the model for a specific task, like text summarization. This fine tuning is done by providing a dataset with pairs of prompt/completion. The prompt should have an instruction and the completion is the desired result.

For example for text summarization the dataset can include:

Summarize the following text:

[example text]

[example completion]

In instruction fine tuning ALL of the model's weights are updated (full fine tuning), so the required compute resources are substantial.

Prepare the dataset

As we said, it is important to format the prompt in the dataset as an "instruction". While there are many public datasets that are not formatted as "instructions" we can convert them to be instructional according to the task we want to achieve.

For example, the Amazon product reviews dataset has the fields product_title, review_headline, review_body and star_rating. If we want to use this dataset for text generation we could format the following prompt:

Generate a {{star_rating}}-star review (1 being lowest and 5 being highest) about this product {{product_title}}.

|||

{{review_body}}

Or for text summarization:

Give a short sentence describing the following product review:

{{review_body}}

|||

{{review_headline}}
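As a rough sketch, the two templates above could be applied in Python like this. The helper names `to_generation_example` and `to_summarization_example` and the sample record are hypothetical; only the field names come from the dataset described above.

```python
# Hypothetical sample record; only the field names come from the dataset.
review = {
    "product_title": "Wireless Mouse",
    "review_headline": "Works well, battery lasts",
    "review_body": "I have used this mouse for a month and the battery is still going.",
    "star_rating": 4,
}


def to_generation_example(record):
    """Build a prompt/completion pair for the review generation task."""
    prompt = (
        f"Generate a {record['star_rating']}-star review "
        "(1 being lowest and 5 being highest) "
        f"about this product {record['product_title']}."
    )
    return {"prompt": prompt, "completion": record["review_body"]}


def to_summarization_example(record):
    """Build a prompt/completion pair for the summarization task."""
    prompt = (
        "Give a short sentence describing the following product review:\n"
        f"{record['review_body']}"
    )
    return {"prompt": prompt, "completion": record["review_headline"]}


generation_example = to_generation_example(review)
summarization_example = to_summarization_example(review)
print(generation_example["prompt"])
print(summarization_example["completion"])
```

The same record produces two different training examples, one per task, which is how a single public dataset can feed several instruction tasks.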

Fine Tuning Process

The fine tuning process is like a regular neural network training process.

We give the model our prompt, calculate the cross-entropy loss of the model output against the desired completion, update the model weights using backpropagation, and repeat over several epochs. Remember that the model output is a probability distribution over tokens, and that is what we use to compute the loss.
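To make the loss computation concrete, here is a minimal sketch of cross-entropy over token probabilities. The toy vocabulary and the probability values are made up for illustration, and a real training loop would use a deep learning framework rather than plain Python.

```python
import math


def cross_entropy(predicted_probs, target_tokens):
    """Average negative log-probability assigned to each target token."""
    total = 0.0
    for step_probs, target in zip(predicted_probs, target_tokens):
        total += -math.log(step_probs[target])
    return total / len(target_tokens)


# One probability distribution per output position (hypothetical numbers).
predicted_probs = [
    {"good": 0.7, "bad": 0.2, "product": 0.1},
    {"good": 0.1, "bad": 0.1, "product": 0.8},
]
# The desired completion, one token per position.
target_tokens = ["good", "product"]

loss = cross_entropy(predicted_probs, target_tokens)
print(round(loss, 4))
```

The loss shrinks toward 0 as the model puts more probability on the desired tokens, which is the signal backpropagation uses to update the weights.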

By the end of this process you will have a fine-tuned Instruct LLM model.

Single Task Fine Tuning

While LLMs can perform many tasks, we can fine tune a model for a single specific task, like summarization. For this, a relatively small dataset of 500-1000 examples is often enough.

Catastrophic Forgetting

Fine tuning on a single task can lead to a phenomenon called "catastrophic forgetting". This is where the model "forgets" how to perform other tasks it was originally able to do. This happens because full fine tuning updates all of the model's weights, so performance on other tasks can degrade as the weights shift toward the single task the model was fine tuned on.

So what can we do?

- First, check whether we actually need the model to generalize to other tasks. Maybe the model fine tuned on the single task is all we need.

- We can fine tune the model on multiple tasks, but this requires a significant amount of data, like 50,000-100,000 samples.

- Use a method called PEFT (Parameter Efficient Fine-Tuning). In this method we do not modify all of the model's weights, only a small set of task-specific adapter layers.

Multi Task Fine Tuning

Multitask fine-tuning is an extension of single task fine-tuning, where the training dataset is comprised of example inputs and outputs for multiple tasks. Here, the dataset contains examples that instruct the model to carry out a variety of tasks, including summarization, review rating, code translation, and entity recognition. You train the model on this mixed dataset so that it can improve the performance of the model on all the tasks simultaneously, thus avoiding the issue of catastrophic forgetting.

FLAN

FLAN, which stands for Fine-tuned LAnguage Net, is a specific set of instructions used to fine-tune different models.

FLAN-T5 is the FLAN instruct version of the T5 foundation model, while FLAN-PaLM is the FLAN instruct version of the PaLM foundation model.

FLAN-T5 is a great general purpose instruct model. In total, it's been fine tuned on 473 datasets across 146 task categories.

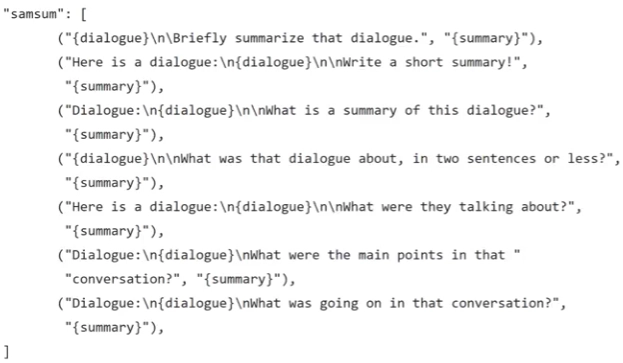

One example of a prompt dataset used for summarization tasks in FLAN-T5 is SAMSum.

Using these dialogues and summaries different instruction prompts have been generated:

Including different ways of saying the same instruction helps the model generalize and perform better.

Model Evaluation

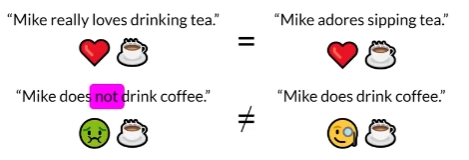

In traditional machine learning, you can assess how well a model is doing by looking at its performance on training and validation data sets where the output is already known. You're able to calculate simple metrics such as accuracy because the models are deterministic. But with large language models, where the output is non-deterministic and language-based, evaluation is much more challenging.

Note that in the second example there is only a one-word difference between the two sentences, so a simple overlap-based metric could mark them as similar.

We will discuss 2 evaluation methods, ROUGE and BLEU. It is important to note that the evaluation scores are not comparable across different tasks. For example, a ROUGE score for a text summarization task cannot be compared to a ROUGE score for a title generation task.

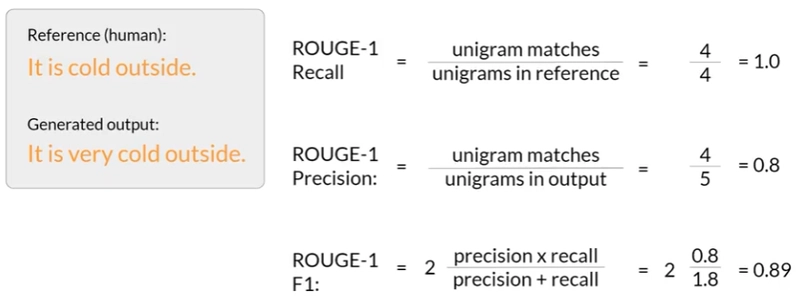

ROUGE

ROUGE, or Recall-Oriented Understudy for Gisting Evaluation, is primarily employed to assess the quality of automatically generated summaries by comparing them to human-generated reference summaries.

It is best used for text summarization (compare the generated summary to one or more reference summaries).

ROUGE-N

With ROUGE-N (i.e. ROUGE-1, ROUGE-2 etc.) we take N-word sequences (n-grams) from the generated output and compare them to the reference (human) output. From the matches we can compute the standard metrics of recall, precision and F1.

For example ROUGE-1

(a single word is called a "unigram")

Note that if the generated output were "It is NOT cold outside" the scores would be exactly the SAME!

Here is an example of ROUGE-2

(a two-word sequence is called a "bigram")

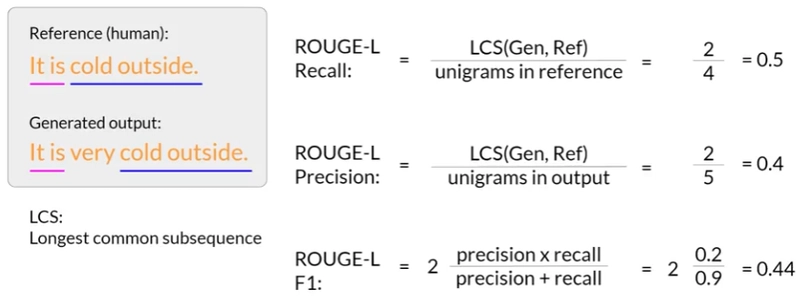
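The ROUGE-N computation can be sketched in a few lines of Python. This is a simplified version with whitespace tokenization and a single reference, not a replacement for a maintained ROUGE library. The reference sentence "It is cold outside" and the generated sentence with "very" are assumptions, chosen to match the negation example mentioned above.

```python
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-token sequences as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def rouge_n(reference, generated, n):
    """Recall, precision and F1 over overlapping n-grams (clipped counts)."""
    ref_counts = Counter(ngrams(reference.lower().split(), n))
    gen_counts = Counter(ngrams(generated.lower().split(), n))
    # Counter intersection keeps the minimum count of each shared n-gram.
    overlap = sum((ref_counts & gen_counts).values())
    recall = overlap / max(sum(ref_counts.values()), 1)
    precision = overlap / max(sum(gen_counts.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision, "f1": f1}


reference = "It is cold outside"  # assumed human reference
rouge1_very = rouge_n(reference, "It is very cold outside", 1)
rouge1_not = rouge_n(reference, "It is NOT cold outside", 1)
rouge2_very = rouge_n(reference, "It is very cold outside", 2)

print(rouge1_very)
print(rouge1_not)
print(rouge2_very)
```

Both generated sentences get identical ROUGE-1 scores because the metric only counts shared words, which is exactly the weakness the negation example points out. The bigram scores are lower since the inserted word breaks two of the reference bigrams.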

ROUGE-L

Instead of just increasing the n-gram length we test in ROUGE-N, we can test for the Longest Common Subsequence (LCS):

As we saw, ROUGE scores for totally wrong generations can be the same as for correct generations. Choosing the right ROUGE metric is highly dependent on the task at hand and the length of the generated output.
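A minimal sketch of ROUGE-L, using the standard longest common subsequence definition (matching words must appear in the same order but do not have to be adjacent). The example sentences are assumptions for illustration, and tokenization is a plain whitespace split.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    # table[i][j] holds the LCS length of a[:i] and b[:j].
    table = [[0] * (len(b) + 1) for _ in range(len(a) + 1)]
    for i, token_a in enumerate(a, start=1):
        for j, token_b in enumerate(b, start=1):
            if token_a == token_b:
                table[i][j] = table[i - 1][j - 1] + 1
            else:
                table[i][j] = max(table[i - 1][j], table[i][j - 1])
    return table[len(a)][len(b)]


def rouge_l(reference, generated):
    """Recall, precision and F1 based on the LCS length."""
    ref = reference.lower().split()
    gen = generated.lower().split()
    lcs = lcs_length(ref, gen)
    recall = lcs / len(ref) if ref else 0.0
    precision = lcs / len(gen) if gen else 0.0
    f1 = 0.0 if lcs == 0 else 2 * precision * recall / (precision + recall)
    return {"recall": recall, "precision": precision, "f1": f1}


scores = rouge_l("It is cold outside", "It is very cold outside")
print(scores)
```

Unlike ROUGE-2, the extra word does not break the match here, because a subsequence may skip over tokens in the generated text.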

BLEU

BLEU, or Bilingual Evaluation Understudy, is an algorithm designed to evaluate the quality of machine-translated text by comparing it to human-generated translations.

It is used for text translation.

BLEU = Avg(precision across range of n-gram sizes)

Here are examples of BLEU scores for different generations compared to a human reference:

As we get closer to the reference the score gets closer to 1.
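Here is a simplified, unsmoothed sketch of a sentence-level BLEU score with a single reference. Note that the standard formulation takes a geometric mean of the clipped n-gram precisions and multiplies it by a brevity penalty, which is what this sketch does. The example sentences are hypothetical, and real evaluations should rely on a maintained implementation.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Return all contiguous n-token sequences as tuples."""
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]


def modified_precision(reference, candidate, n):
    """Clipped n-gram precision of the candidate against the reference."""
    ref_counts = Counter(ngrams(reference, n))
    cand_counts = Counter(ngrams(candidate, n))
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    clipped = sum((cand_counts & ref_counts).values())
    return clipped / total


def bleu(reference, candidate, max_n=4):
    """Geometric mean of 1..max_n precisions times a brevity penalty."""
    ref = reference.lower().split()
    cand = candidate.lower().split()
    precisions = [modified_precision(ref, cand, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0:
        return 0.0  # no smoothing: any empty n-gram match zeroes the score
    geo_mean = math.exp(sum(math.log(p) for p in precisions) / max_n)
    # Penalize candidates that are shorter than the reference.
    penalty = 1.0 if len(cand) >= len(ref) else math.exp(1 - len(ref) / len(cand))
    return penalty * geo_mean


reference = "the cat sat on the mat"  # hypothetical human reference
exact = bleu(reference, "the cat sat on the mat")
close = bleu(reference, "the cat sat on a mat")
far = bleu(reference, "a dog ran")
print(exact, close, far)
```

The three candidates show the trend described above: an exact match scores 1, a near match scores somewhere between 0 and 1, and an unrelated sentence scores 0.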

Benchmarks

Both ROUGE and BLEU are quite simple metrics and are relatively low-cost to calculate. You can use them for simple reference as you iterate over your models, but you shouldn't use them alone to report the final evaluation of a large language model. For an overall evaluation of your model's performance you will need to look at one of the evaluation benchmarks that have been developed by researchers.

Benchmarks like GLUE and SuperGLUE assess general language understanding and complex reasoning, respectively, with leaderboards for model comparison. Newer benchmarks like MMLU and BIG-bench challenge LLMs with tasks requiring extensive world knowledge and problem-solving abilities, while HELM emphasizes transparency and holistic evaluation, including fairness, bias, and toxicity metrics, to provide a nuanced understanding of LLM performance and limitations.

Parameter Efficient Fine-Tuning (PEFT)

Training large language models (LLMs) via full fine-tuning is computationally expensive due to the massive memory requirements for storing model weights, optimizer states, gradients, and activations, often exceeding consumer hardware capabilities. Parameter-efficient fine-tuning (PEFT) methods address this by updating only a small subset of parameters or adding new, trainable components while freezing most of the original LLM, significantly reducing memory usage and enabling training on single GPUs.

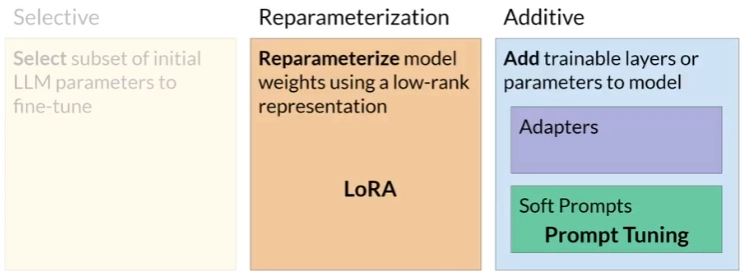

There are 3 main PEFT techniques and we will take a look at 2 of them (the results of "selective" PEFT technique are mixed so we won't look at it).

Low-Rank Adaptation (LoRA)

In a Transformer model we usually have 2 neural networks in the encoder and 2 in the decoder: the first is the self-attention network and the second is the feed-forward network (which produces the result). When training such an LLM we are essentially training the parameters of all these networks.

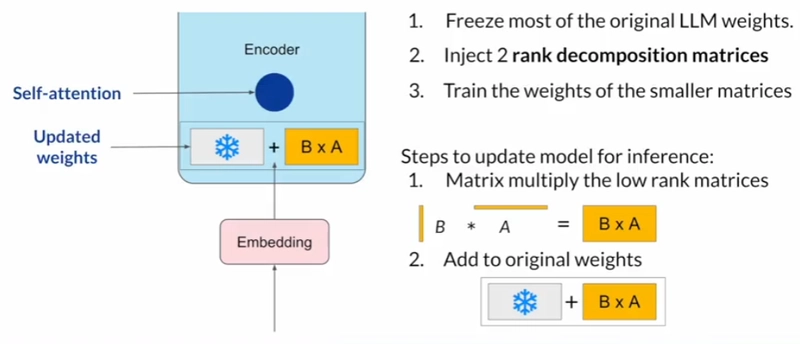

In LoRA fine tuning we keep the original weights of the self-attention layer (it can be the feed-forward layer as well) untouched (the matrix with the snow icon on it), but we add 2 small matrices (the column matrix B and the row matrix A). The product B x A is a matrix with the same dimensions as the original self-attention layer matrix, so we can add them together to get a modified self-attention layer.

During training all we have to learn are the low-rank (small) matrices A and B.

Example

In the original "Attention Is All You Need" paper the transformer weight matrices were 512 x 64 (32,768 parameters).

If we want to fine tune such a matrix using LoRA we can select any 2 matrices whose product is a matrix of dimension 512 x 64. For example, we can use a 512x1 matrix with a 1x64 matrix; this is a LoRA with rank r = 1.

For r = 8 we will use matrices with dimensions: 8x64 and 512x8, so during training we will have to learn only 4,608 parameters, compared to 32,768 parameters of the original layer (86% reduction).

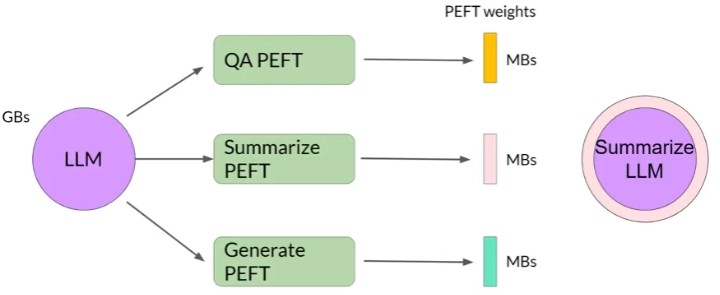
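The parameter arithmetic above can be checked with a short sketch. The helper name `lora_param_counts` is hypothetical; `d` and `k` are the dimensions of the original weight matrix and `r` is the LoRA rank.

```python
def lora_param_counts(d, k, r):
    """Compare a full d x k weight matrix with its rank-r LoRA factors."""
    full = d * k            # original weight matrix
    lora = d * r + r * k    # B is d x r, A is r x k
    reduction = 1 - lora / full
    return full, lora, reduction


full, lora, reduction = lora_param_counts(d=512, k=64, r=8)
print(full, lora, f"{reduction:.0%}")  # 32768 4608 86%

# Rank 1 needs only 512 + 64 trainable parameters.
_, lora_rank_1, _ = lora_param_counts(d=512, k=64, r=1)
print(lora_rank_1)  # 576
```

The trainable parameter count grows linearly with the rank, so even fairly large ranks stay far below the size of the original matrix.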

Multiple LoRAs

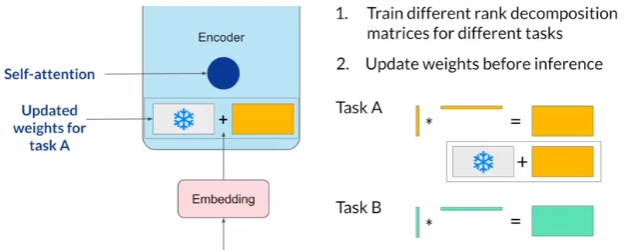

In full fine tuning we can fine tune the model to perform multiple tasks, so we have a single model that can handle various tasks.

In LoRA fine tuning we are usually targeting a specific task.

So how can we use the same model for different specialized tasks? Since LoRA matrices are small we can store them easily, and during inference we can inject the correct task-specific LoRA matrices into the original model.
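A toy sketch of this idea in plain Python: one frozen base matrix and a dictionary of small per-task adapters, combined as W + B x A at inference time. The matrix values, the task names and the `weights_for_task` helper are all made up for illustration; a real setup would use tensors and a PEFT library.

```python
def matmul(x, y):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add(x, y):
    """Element-wise sum of two matrices with the same shape."""
    return [[a + b for a, b in zip(row_x, row_y)] for row_x, row_y in zip(x, y)]


# Frozen base weights (3 x 2), shared by every task.
base_weights = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# One rank-1 adapter per task: B is 3 x 1, A is 1 x 2 (hypothetical values).
adapters = {
    "summarization": {"B": [[1.0], [0.0], [0.0]], "A": [[0.5, 0.5]]},
    "translation": {"B": [[0.0], [1.0], [0.0]], "A": [[-1.0, 2.0]]},
}


def weights_for_task(task):
    """Return W + B x A for the requested task without touching W."""
    adapter = adapters[task]
    return add(base_weights, matmul(adapter["B"], adapter["A"]))


summarization_weights = weights_for_task("summarization")
translation_weights = weights_for_task("translation")
print(summarization_weights)
print(translation_weights)
```

Only the small A and B matrices differ between tasks, so switching tasks means swapping a few numbers rather than loading another full copy of the model.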

Evaluation

In order to evaluate the performance of LoRA, let's compare its ROUGE score to that of a base model and of a fully fine tuned model.

We will use the FLAN-T5 model.

We can see that the fully fine tuned model has a ROUGE score that is 80% higher than the base model, but note that the LoRA fine tuned model has a score that is 75% higher, almost the same as the fully fine tuned model!

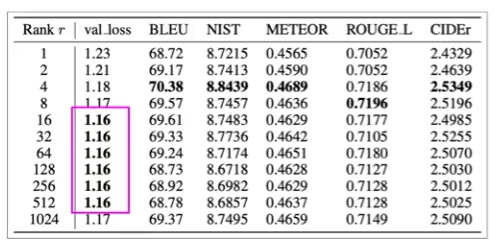

Choosing the LoRA rank

Research by Microsoft tested different LoRA ranks on the same task, measuring the results with several evaluation metrics. Here are the results:

- A LoRA rank of 4 achieved the highest score in most of the evaluations.

- The validation loss did not improve with LoRA ranks beyond 16.

Prompt Tuning

While prompt tuning sounds like prompt engineering they are NOT the same!

In prompt engineering we are making changes to the prompt trying to make the model output the text we want.

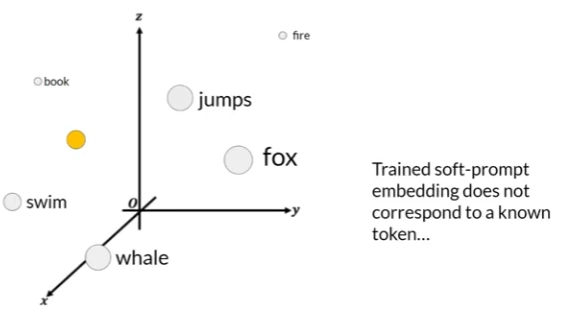

With prompt tuning we add a few "virtual token" vectors to our prompt vectors. The values of these token vectors are learned during training.

Each word in the input prompt is represented by an embedding vector, so basically we are adding a few more embedding vectors to our prompt (these are not "real" word tokens, hence "virtual tokens"), and then we train only the values of these extra embeddings.

The idea is very similar to LoRA: instead of altering the self-attention layer we are changing the input embeddings. In both cases we need to learn only a small set of parameters.
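A toy sketch of what gets added to the input in prompt tuning. The embedding values, the dimension of 4 and the helper name `build_model_input` are all hypothetical, and real embeddings have hundreds or thousands of dimensions.

```python
def build_model_input(soft_prompt, prompt_embeddings):
    """Prepend the trainable virtual-token vectors to the frozen prompt embeddings."""
    return soft_prompt + prompt_embeddings


embedding_dim = 4

# Frozen embeddings of real tokens (made-up values).
frozen_embeddings = {
    "summarize": [0.1, 0.2, 0.3, 0.4],
    "this": [0.5, 0.1, 0.0, 0.2],
    "text": [0.3, 0.3, 0.1, 0.9],
}

# Three virtual tokens; these vectors are the only values updated in training.
soft_prompt = [[0.0] * embedding_dim for _ in range(3)]

prompt_embeddings = [frozen_embeddings[token] for token in ["summarize", "this", "text"]]
model_input = build_model_input(soft_prompt, prompt_embeddings)

trainable_parameters = len(soft_prompt) * embedding_dim
print(len(model_input), trainable_parameters)
```

The model sees a longer sequence of vectors, but the number of trainable values is just the number of virtual tokens times the embedding size.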

Evaluation

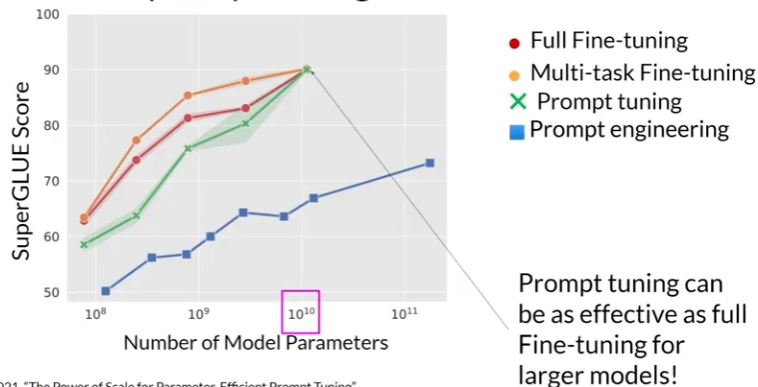

We can see that the larger the model, the closer the performance of prompt tuning gets to that of full fine tuning.
