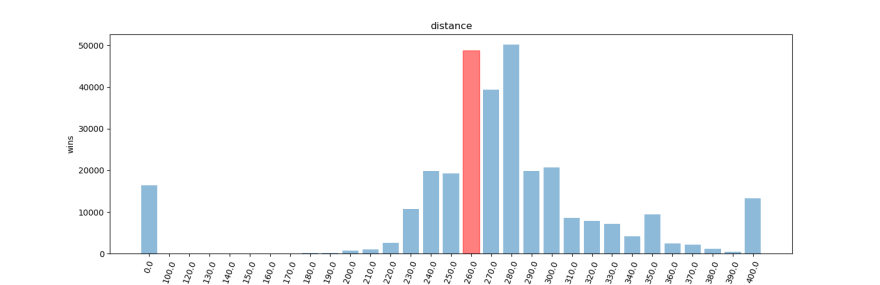

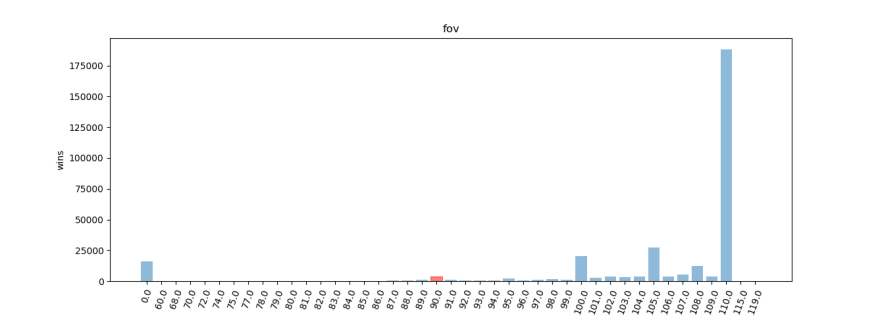

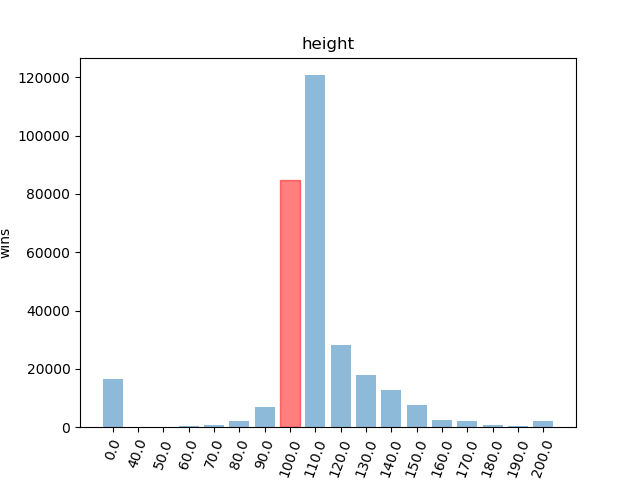

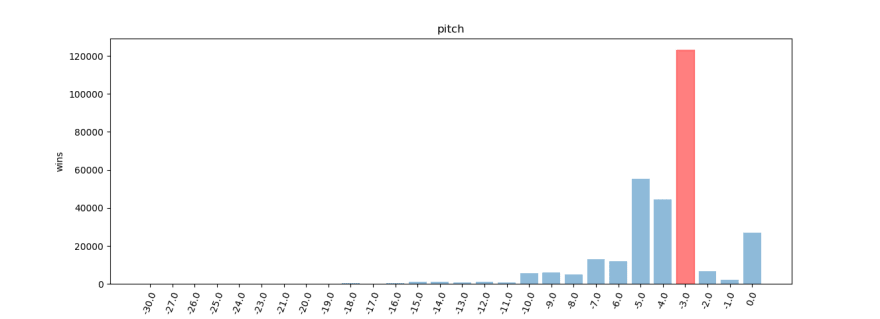

By pulling the camera settings from 150,000 replays and considering only the settings of the team that won, we can pretend to have found the "best" configuration.

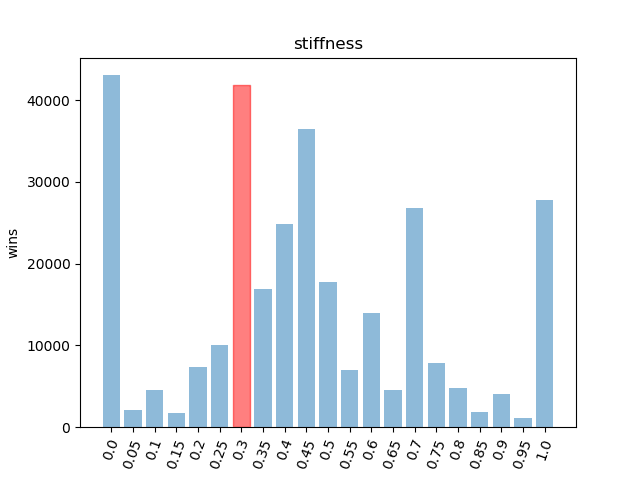

Red bars indicate default camera settings.

0 is invalid data, but I left it in because why not.

The graphs show the number of wins per configuration for each camera option.

About the Data

SunlessKhan on YouTube recently put out a video about https://ballchasing.com/, a site that lets users upload replays from Rocket League. It provides a pretty awesome way to view a replay in your browser, and it also provides a ton of analytics, stats, and info about the match.

Camera settings always seem to spark an interesting debate in the community, so I decided to find out what settings most people are using.

Getting the Data

I'll be honest, I was going to write out what I did, but it actually turned out to not be very interesting. It boiled down to:

- Use css selectors to select the data you want.

- You can use selectors to get links to the pages that contain the data you want, and to get the links to paginate to the next page. This is especially useful for websites that don't have simple pagination urls.

- Use node and cheerio. Node makes it easy to scrape asynchronously.

- Use timers or timeout to be nice to the server.

- Sometimes it's easier to output messy data and clean it up with things like `sed` and `tr`.
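As a rough illustration of the steps above, here's a minimal Python sketch. It is not the tool I actually used (that one is node and cheerio). The standard library has no CSS selector engine, so this stand-in matches links by their `class` attribute instead. The class names `replay` and `next`, the `example.test` URLs, and the `fetch` callable are all made up for the example.

```python
import time
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkCollector(HTMLParser):
    """Collect href values of <a> tags, grouped by class name."""

    def __init__(self):
        super().__init__()
        self.links = {}  # class name -> list of hrefs

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        href = attrs.get("href")
        if not href:
            return
        for cls in (attrs.get("class") or "").split():
            self.links.setdefault(cls, []).append(href)


def crawl(start_url, fetch, max_pages=3, delay=1.0, sleep=time.sleep):
    """Follow "next" links from start_url and return every "replay" link found.

    fetch is any callable that maps a URL to HTML text (hypothetical here).
    """
    found, url = [], start_url
    for page in range(max_pages):
        if page:
            sleep(delay)  # be nice to the server between requests
        parser = LinkCollector()
        parser.feed(fetch(url))
        found += [urljoin(url, h) for h in parser.links.get("replay", [])]
        next_links = parser.links.get("next", [])
        if not next_links:
            break  # no pagination link, so this was the last page
        url = urljoin(url, next_links[0])
    return found


# Tiny in-memory "site" so the sketch runs without touching a real server.
FAKE_SITE = {
    "https://example.test/replays": (
        '<a class="replay" href="/replay/a">A</a>'
        '<a class="next" href="/replays?page=2">Next</a>'
    ),
    "https://example.test/replays?page=2": '<a class="replay" href="/replay/b">B</a>',
}

print(crawl("https://example.test/replays", FAKE_SITE.__getitem__, delay=0))
```

Passing `fetch` and `sleep` in as arguments keeps the crawl logic testable offline; against a real site you'd swap in an HTTP client and a non-zero delay.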

Here's the tool I used. I wrote it pretty poorly about a year ago, there are no comments in the code itself, and it almost always mostly works.

agentd00nut/css_scraper

Simplify web scraping through css selectors.

- Easily scrape links, text, and files from a single page by specifying multiple selectors for each data type.

- Combine the output to easily read the results.

- Dump raw output for easy processing with other tools or to disk.

- Scrape multiple pages by specifying a next link selector and how many pages to scrape.

- Scrape many pages by specifying a next page selector.

- Control what page to start scraping on.

- Specify load timeouts.

- Use sleep intervals to wait before getting the next page.

- Specify prefix text to add to links or file src's.

- Scrape multiple pages by specifying how a url paginates.

- Specify custom delimiters for output.

Italics are soon to be features.

Don't be a jerk

Obviously, use discretion when using anything that scrapes data from web pages. It's your fault if you get your IP banned from a site you like or…

The real power is that you can combine the `-n` next pagination selector with the `-d` depth selector.

The depth selector will apply all your `-t`, `-f`, and `-l` selectors to every link it finds.

The next pagination selector will follow the link it finds to get to the next page.

Use `-p` to paginate only a certain number of times.

You'll likely want to use `-r` to get non-JSON styled output.
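To make the combination concrete, here's a small Python sketch of the traversal order as I described it above. It is not the tool's actual code: the in-memory `site` dictionary, its `links`/`next`/`text` keys, and the `max_pages` cap are hypothetical stand-ins for what the depth, next, text, and `-p` options do. In particular, treating `-p` as "number of listing pages visited" is an assumption.

```python
def scrape(site, start, max_pages):
    """Walk listing pages, pulling text from every page they link to.

    site maps a URL to a dict. Listing pages carry "links" (what a depth
    selector would match) and "next" (what a next selector would match);
    linked pages carry "text" (what a text selector would extract).
    """
    results = []
    url = start
    pages_seen = 0
    while url is not None and pages_seen < max_pages:
        page = site[url]
        for link in page["links"]:              # depth: visit every matched link
            results.append(site[link]["text"])  # apply the text selector there
        url = page["next"]                      # next: move to the following page
        pages_seen += 1                         # cap on how far we paginate
    return results


# Two listing pages, three detail pages.
site = {
    "list-1": {"links": ["replay-a", "replay-b"], "next": "list-2"},
    "list-2": {"links": ["replay-c"], "next": None},
    "replay-a": {"text": "fov=110"},
    "replay-b": {"text": "fov=100"},
    "replay-c": {"text": "fov=90"},
}

print(scrape(site, "list-1", max_pages=2))
```

The point is the nesting: the pagination loop is the outer loop, and the depth step runs once per matched link inside it, so one command can cover every replay on every listing page.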

Making the Graphs

Again, this ended up not being very interesting. I just used Python to increment a counter in a dictionary where the key was the camera setting for the team that won the match, then plotted the counts with matplotlib.
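For the curious, the counting step looks roughly like the sketch below. The replay structure (`winner`, `players`, `team`, `camera`), the `fov` option name, and the sample values are invented for illustration and are not the real scraped format. Counting per player on the winning team is also an assumption about how the wins were tallied.

```python
from collections import Counter


def count_wins(replays, option):
    """Count how often each value of `option` appears on the winning team."""
    wins = Counter()
    for replay in replays:
        for player in replay["players"]:
            if player["team"] == replay["winner"]:
                wins[player["camera"][option]] += 1
    return wins


def plot_wins(wins, option, default=None):
    """Bar chart of wins per value, with the default setting drawn in red."""
    import matplotlib.pyplot as plt  # imported lazily so counting works without it

    values = sorted(wins)
    colors = ["red" if v == default else "tab:blue" for v in values]
    plt.bar([str(v) for v in values], [wins[v] for v in values], color=colors)
    plt.xlabel(option)
    plt.ylabel("wins")
    plt.show()


# Made-up sample data in the assumed shape.
replays = [
    {"winner": "blue", "players": [
        {"team": "blue", "camera": {"fov": 110}},
        {"team": "orange", "camera": {"fov": 90}},
    ]},
    {"winner": "orange", "players": [
        {"team": "blue", "camera": {"fov": 100}},
        {"team": "orange", "camera": {"fov": 110}},
    ]},
]

wins = count_wins(replays, "fov")
print(wins)
# plot_wins(wins, "fov", default=90)  # 90 is a placeholder default; needs matplotlib
```

A `Counter` is just a dictionary that starts every key at zero, so it does the same "increment a counter in a dictionary" job without the key-existence check.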
