Recently I went through the process of setting up Drone CI on my Raspberry Pi. The plan was to use my Raspberry Pi as a build server for this website as well as other projects.

However, the Sharp image library that Gatsby uses to resize images doesn’t natively work on ARM processors. I did try to compile it in the Docker image, but I couldn’t get the correct version to be picked up when running the builds. On top of that, the builds were incredibly slow on my already overworked Raspberry Pi 2B+.

So I started looking for x86-based single board computers (SBCs) that would be able to run the builds. However, most of them aren’t that great and start at around £100, a bit more than a Raspberry Pi (the LattePanda looked quite good if you are interested).

At that price point, I might as well just run Drone CI on AWS on a t2.micro EC2 instance (around £73 a year, or free for the first year).

Then I remembered that we have been using GitHub Actions at work, and they are surprisingly good and cost-effective. Really, I am a bit late to the party with this!

Cost of GitHub Actions

If you have a public repository then you can run GitHub Actions for free. Yes, free!

Most of my code, however, is not public, and for private repositories GitHub give you 2,000 minutes per month free (3,000 on a Pro account). These builds also run with a generous 2 cores and 7 GB of RAM, a lot more than a t2.micro!

It takes around 2 to 3 minutes to build and deploy my blog to S3, so I am not likely to run out of minutes each month with the frequency I post.

This is assuming your code runs on Linux, which mine does. If you need to run on Windows then you only get 1,000 minutes a month. If for some reason you need your build to run on macOS then that drops to 200 minutes.

Essentially, at 2,000 minutes and $0.008 per minute on Linux, GitHub are giving us $16 of runtime for free per month. As Windows and macOS are more expensive to run, you will use up your free credits quicker.

What can you do with GitHub Actions

With GitHub Actions you can pretty much do anything you would normally do on a build server.

- Running builds and tests

- Starting up Docker containers

- Running integration tests

On top of that it comes with tons of community made plugins as well.

Deploying a Gatsby website to S3

So this blog is hosted on Amazon S3 with CloudFront. At the moment, this website costs me around £0.50 a month to run, which I can’t complain about (I have shared my Terraform build in a previous post if you are interested).

However, until now I have been pushing changes to my S3 bucket by hand from my own machine (yuck).

My current build process consists of a few commands that are set up in my package.json file.

- yarn lint, which runs ./node_modules/.bin/eslint --ext .js,.jsx --ignore-pattern public .

- yarn build, which runs gatsby build

- yarn deploy, which runs the following command to upload to S3 and invalidate my CloudFront cache:

    gatsby-plugin-s3 deploy --yes; export AWS_PAGER=""; aws cloudfront create-invalidation --distribution-id E5FDMTLPHUTLTL --paths '/*';

The above is assuming I have also run yarn install at some point in the past and I have credentials set up on my machine to allow me to upload to Amazon S3.

GitHub Workflow File

To be able to use GitHub Actions you need to create a workflow file under .github/workflows in your project.

This is what my workflow.yml file looks like:

    name: Deploy Blog

    on:
      push:
        branches:
          - main

    jobs:
      build:
        runs-on: ubuntu-latest
        timeout-minutes: 10
        steps:
          - uses: actions/checkout@v2
          - uses: actions/setup-node@v2
            with:
              node-version: 12
          - name: Caching Gatsby
            id: gatsby-cache-build
            uses: actions/cache@v2
            with:
              path: |
                public
                .cache
                node_modules
              key: ${{ runner.os }}-gatsby-alexhyett-site-build-${{ github.run_id }}
              restore-keys: |
                ${{ runner.os }}-gatsby-alexhyett-site-build-
          - name: Install dependencies
            run: yarn install
          - name: Run Lint
            run: yarn run lint
          - name: Build
            run: yarn run build
          - name: Set AWS credentials
            uses: aws-actions/configure-aws-credentials@v1
            with:
              aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
              aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
              aws-region: eu-west-1
          - name: Deploy to S3
            run: yarn run deploy

I am going to go through each of these steps so you know what they do.

This is the scripts section in my package.json file. I reference these in my workflow to keep things consistent.

    "scripts": {
      "lint": "./node_modules/.bin/eslint --ext .js,.jsx --ignore-pattern public .",
      "develop": "gatsby develop",
      "build": "gatsby build",
      "clean": "gatsby clean",
      "serve": "gatsby serve",
      "deploy": "gatsby-plugin-s3 deploy --yes; export AWS_PAGER=\"\"; aws cloudfront create-invalidation --distribution-id E5FDMTLPHUTLTL --paths '/*';"
    }

Define Build Environment and Timeout

    build:
      runs-on: ubuntu-latest
      timeout-minutes: 10

Here we specify which operating system our workflow is going to run on. I am running on Linux, so I pick the Ubuntu runner. You also have the option of Windows or macOS:

- windows-latest

- macos-latest

You can find a list of runners in the GitHub Docs.

I have also specified a timeout of 10 minutes. It is good practice to set a timeout so that a malfunctioning workflow doesn’t eat up all your free minutes.

Define when to run this workflow

    on:
      push:
        branches:
          - main

As it is only me contributing to this blog, I just have the workflow set to run when something is committed to the main branch. So if I merge a PR or commit straight to main it will kick off a build and deploy my blog.

Checkout Code

    - uses: actions/checkout@v2

You will definitely need this step, as it checks out your code into the runner.

Setup Node

    - uses: actions/setup-node@v2
      with:
        node-version: 12

As Gatsby relies on Node we need to set it up. I am using version 12 so I set that up here in the script.

Cache Folders

    - name: Caching Gatsby
      id: gatsby-cache-build
      uses: actions/cache@v2
      with:
        path: |
          public
          .cache
          node_modules
        key: ${{ runner.os }}-gatsby-alexhyett-site-build-${{ github.run_id }}
        restore-keys: |
          ${{ runner.os }}-gatsby-alexhyett-site-build-

As you may know most React applications end up with a huge number of packages in the node_modules folder. If you are starting from a fresh project, a considerable amount of time is taken up downloading these packages. This will impact your build times and therefore your free minutes on GitHub.

For Gatsby we also want to cache the .cache folder and public folder so we don’t have to rebuild these each time.

GitHub Actions caches work by specifying a key which is used to find the cache. In this case, my key will look like this: Linux-gatsby-alexhyett-site-build-675994106. As I have specified restore-keys, it will also match any keys starting with Linux-gatsby-alexhyett-site-build- when there is no exact match.

If I chose to build on Windows instead then it would create a new cache key. There are various methods you can use to create a cache key, which you can find out more about in the actions/cache repository.

Caches are kept while they are in use but if not used in 7 days they are removed.
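As one example of those other methods, here is a minimal sketch that caches node_modules on its own, keyed on a hash of the lockfile. It is not part of my workflow: it assumes a yarn.lock file is committed at the root of the repository, and the step name and key prefix are made up for illustration. hashFiles is one of the built-in workflow expression functions.

```yaml
# Sketch only: cache node_modules separately, keyed on the lockfile contents.
# Assumes yarn.lock is committed at the repository root.
- name: Cache node_modules
  uses: actions/cache@v2
  with:
    path: node_modules
    # hashFiles() only changes when yarn.lock changes, so the same
    # cache is reused until dependencies are added or upgraded.
    key: ${{ runner.os }}-node-modules-${{ hashFiles('yarn.lock') }}
    restore-keys: |
      ${{ runner.os }}-node-modules-
```

The trade-off is that a key which matches exactly is restored but not saved again, which suits node_modules. For the public and .cache folders, which change on every build, a key ending in the run ID (as in my workflow) means a fresh copy is saved each time.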

Install Yarn Packages

    - name: Install dependencies
      run: yarn install

This installs all of the npm packages required by my website. As the node_modules folder is cached, this will be quicker after the first run.
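If you want the build to fail when the lockfile is out of date rather than quietly updating it on the runner, a stricter variant of this step might look like the sketch below. It assumes Yarn 1.x (classic), where the flag is called --frozen-lockfile; newer Yarn versions use a different flag.

```yaml
# Sketch only: assumes Yarn 1.x (classic).
- name: Install dependencies
  # --frozen-lockfile makes the install fail if yarn.lock would need
  # to be changed, instead of rewriting it during the build.
  run: yarn install --frozen-lockfile
```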

Run Lint

    - name: Run Lint
      run: yarn run lint

This checks for any lint errors in my code before continuing. Lint is set up as a script in my package.json file and is equivalent to ./node_modules/.bin/eslint --ext .js,.jsx --ignore-pattern public .

Run Build

    - name: Build
      run: yarn run build

Another script in my package.json equivalent to gatsby build.

Set AWS Credentials

    - name: Set AWS credentials
      uses: aws-actions/configure-aws-credentials@v1
      with:
        aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
        aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
        aws-region: eu-west-1

To be able to push to AWS and invalidate the CloudFront cache we need to provide access keys and specify the region. Access keys are added using GitHub secrets in your project. I will cover how to set these up in a bit.

Upload to S3 and Invalidate Cache

    - name: Deploy to S3
      run: yarn run deploy

Finally, we need to push the built files in the public directory up to S3 and invalidate the CloudFront cache. I do this with another script set up in my package.json file, which is the equivalent of running this:

    gatsby-plugin-s3 deploy --yes; export AWS_PAGER=""; aws cloudfront create-invalidation --distribution-id E5FDMTLPHUTLTL --paths '/*';

In case you are wondering, the export AWS_PAGER=""; command is there so that the AWS CLI doesn’t open its output in a pager and wait for a key press after the invalidation has been created. Version 2 of the AWS CLI sends output to a pager by default, which was a breaking change from version 1.
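The same deploy could also be written directly in the workflow instead of going through package.json. The following is only a sketch of that alternative, not what my workflow does: it sets AWS_PAGER through the step's env block and calls the gatsby-plugin-s3 binary through yarn, which assumes the plugin is installed as a project dependency.

```yaml
# Sketch only: the deploy commands inlined into the workflow step.
- name: Deploy to S3
  env:
    # Disable the AWS CLI v2 pager for this step only.
    AWS_PAGER: ""
  # The lines run in order. The default bash shell on Linux runners is
  # started with -e, so a failed upload stops the step before the
  # invalidation is requested.
  run: |
    yarn gatsby-plugin-s3 deploy --yes
    aws cloudfront create-invalidation --distribution-id E5FDMTLPHUTLTL --paths '/*'
```

One difference from the package.json version is that the commands there are joined with semicolons, so the invalidation runs even if the upload fails.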

Setting GitHub Secrets

The workflow script specifies a couple of secrets, ${{ secrets.AWS_ACCESS_KEY_ID }} and ${{ secrets.AWS_SECRET_ACCESS_KEY }}.

Ideally, you should create a user with just enough permissions to be able to perform what we need and no more.

To do this we need to create a new policy by logging on to AWS Console and going to IAM > Policies.

Then we need to create a policy with the following JSON, replacing yourdomain.com with the name of your S3 bucket for your website.

    {
      "Version": "2012-10-17",
      "Statement": [
        {
          "Sid": "AccessToGetBucketLocation",
          "Effect": "Allow",
          "Action": ["s3:GetBucketLocation"],
          "Resource": ["arn:aws:s3:::*"]
        },
        {
          "Sid": "AccessToWebsiteBuckets",
          "Effect": "Allow",
          "Action": [
            "s3:PutBucketWebsite",
            "s3:PutObject",
            "s3:PutObjectAcl",
            "s3:GetObject",
            "s3:ListBucket",
            "s3:DeleteObject"
          ],
          "Resource": [
            "arn:aws:s3:::yourdomain.com",
            "arn:aws:s3:::yourdomain.com/*"
          ]
        },
        {
          "Sid": "AccessToCloudfront",
          "Effect": "Allow",
          "Action": ["cloudfront:GetInvalidation", "cloudfront:CreateInvalidation"],
          "Resource": "*"
        }
      ]
    }

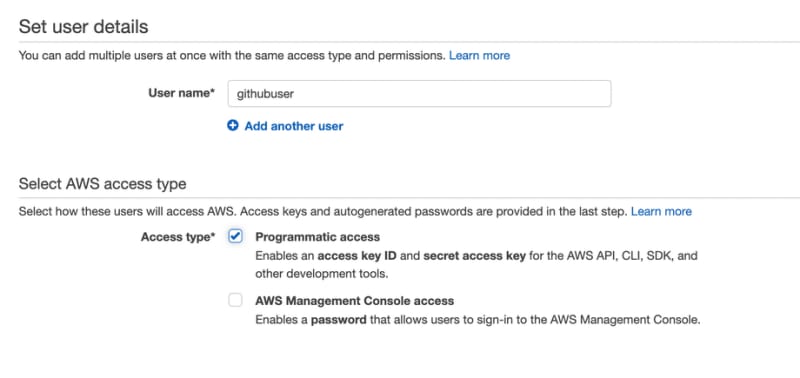

Once the policy is created you need to create a new user under IAM > Users. Give it a name such as githubuser and select programmatic access.

Next select “Attach existing policies directly” and pick the policy we created in the last step.

On the last page you will be given your Access Key Id and Secret. You will only be shown this secret once so make sure you copy it down.

Lastly, we need to add these secrets to GitHub. On your repository go to Settings > Secrets. From there, click “New repository secret” and add AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY.

Now the next time you commit changes to your main branch it will kick off the build.

There you have it. Much simpler than setting up your own CI tool either locally or in the cloud.

Conditional Compilation

This is a fairly simple example, although you can do a lot more with GitHub Actions if you use Docker or some of the action plugins.

If I wasn’t the only one contributing to this blog, I would also set up some of these steps to run on pull requests.

To do that I would set the on statement to run on both events:

    on: [push, pull_request]

And then add an if statement to the AWS and Deploy steps so they only run for the main branch:

    if: ${{ github.ref == 'refs/heads/main' }}
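Put together, the idea looks roughly like the sketch below. I haven't run this version on my blog, so treat it as an outline: the setup, cache, install, lint and build steps are unchanged and left out for brevity, and the branch is written as the full ref, refs/heads/main.

```yaml
# Sketch only: build on every push and pull request, deploy only from main.
on: [push, pull_request]

jobs:
  build:
    runs-on: ubuntu-latest
    timeout-minutes: 10
    steps:
      - uses: actions/checkout@v2
      # ... setup-node, cache, install, lint and build steps as before ...
      - name: Set AWS credentials
        # Skipped unless the workflow is running for the main branch.
        if: ${{ github.ref == 'refs/heads/main' }}
        uses: aws-actions/configure-aws-credentials@v1
        with:
          aws-access-key-id: ${{ secrets.AWS_ACCESS_KEY_ID }}
          aws-secret-access-key: ${{ secrets.AWS_SECRET_ACCESS_KEY }}
          aws-region: eu-west-1
      - name: Deploy to S3
        if: ${{ github.ref == 'refs/heads/main' }}
        run: yarn run deploy
```

On a pull request run, github.ref points at the pull request's merge ref rather than at main, so both deploy steps are skipped while lint and build still run.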

Final Thoughts

If you don’t have your own build server, GitHub Actions can be a great alternative. For public projects it is free, and for private personal projects the 2,000 free minutes are likely to be more than enough.
