Learn how Docker is transforming the way we code

Ever since Docker went live in early 2013, it’s had a love-hate relationship with programmers and sysadmins. While some ‘experienced’ developers I’ve talked to strongly dislike containerization in general (more on that later), there’s a reason why many major companies, including eBay, Twitter, Spotify and Lyft, have reportedly adopted Docker in their production environments.

So what exactly does Docker do?

Ever worked with VMware, VirtualBox, Parallels or any other virtualization software? Well, Docker’s pretty much the same (albeit without a fancy GUI): it creates what looks like a virtual machine, with an operating system of your choice, bundled with only your web application and its dependencies.

But aren’t virtual machines slow?

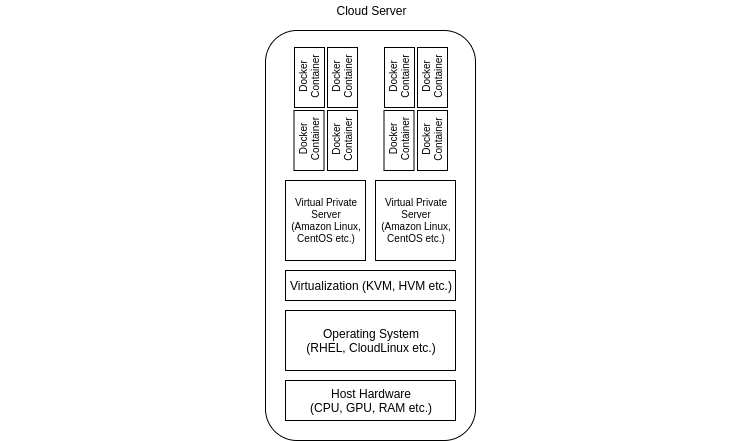

Virtualization is what drives the cloud computing revolution, and I like to call Docker the last step of virtualization: the layer that actually executes the business logic you’ve developed.

But you’re right: typical virtual machines are slow, and what Docker does can’t be entirely categorized as virtualization. Instead, Docker builds on Linux kernel features such as namespaces (which give each container its own view of processes, network interfaces, mount points and so on) and cgroups (which limit the resources it can use), driving them through runc, a container runtime maintained by the Open Container Initiative. Because a container’s processes run directly on the host’s kernel, with no additional virtualization layer in between, a container can share much of the host system’s resources and deliver nearly identical performance to your host.

A fully virtualized system gets its own set of resources and shares few of them (if any), which results in more isolation but makes it heavier (it requires more resources). With Docker, you get less isolation, but containers are much more lightweight (they require fewer resources).

If you need to run a system that absolutely requires full isolation with guaranteed resources (e.g. a gaming server), then a virtual machine based on a hypervisor such as KVM is probably the way to go. But if you just want to isolate separate processes from each other and run a bunch of them on a reasonably sized host without breaking the bank, then Docker is for you.

If you want to learn more about the performance of containerized systems, here’s a great research paper from IBM that makes a sound comparison of the two: An Updated Performance Comparison of Virtual Machines and Linux Containers (Felter et al., 2014).

Can’t I simply upload my application straight onto a bunch of cloud servers?

Well, you can, if you don’t care about things like infrastructure, environment consistency, scalability or availability.

Imagine for a moment the following scenario: you manage a dozen Java services and deploy them on separate servers running Ubuntu with Java 8 for your Dev, QA, Staging and Production environments. Even if you haven’t made your applications highly available, that’s a minimum of 48 servers to manage (12 services x 4 environments).

Now imagine your team spearheads an organization-wide policy change requiring you to upgrade your runtimes to Java 11. That’s 48 servers you need to log in to and manually update. Even with tools like Chef or Puppet, that’s a lot of work.

Here’s a simpler solution

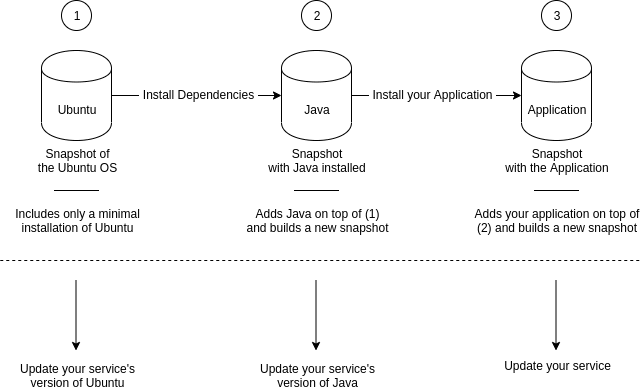

Docker lets you create a snapshot of the operating system you need (an image) and install only the required dependencies on it. One thing I love about this is that you decide exactly how much ‘bloatware’ goes in, if any. You could use a minimal Linux installation (I recommend Alpine Linux, although for the purpose of this article I’ll continue with Ubuntu) and install only Java 8 on it.
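As a rough sketch of such a base image (not the author’s actual Dockerfile), here’s an Ubuntu 18.04 image that adds nothing but a Java 8 runtime. It assumes the OpenJDK package from Ubuntu’s own repositories (`openjdk-8-jre-headless`) rather than the Oracle JDK referenced later in this article, since Oracle’s JDK can’t be installed with a plain `apt-get`.

```dockerfile
# Start from the official Ubuntu 18.04 image
FROM ubuntu:18.04

# Install only a headless Java 8 runtime (no GUI libraries, no recommended
# extras), suppressing interactive prompts, then delete the apt package
# lists to keep the image small.
# Moving to Java 11 would mean swapping in openjdk-11-jre-headless.
RUN apt-get update \
    && DEBIAN_FRONTEND=noninteractive apt-get install -y --no-install-recommends openjdk-8-jre-headless \
    && rm -rf /var/lib/apt/lists/*
```

Building and pushing this image under a versioned tag is what lets each service pin the Java version it runs on.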

When the time comes to update, simply edit your Java image’s Dockerfile to use Java 11, then build it and push it to a container registry (like Docker Hub or Amazon ECR). After that, all you need to do is change the base-image tag in your application containers’ Dockerfiles to reference the new snapshot and redeploy them.

Here's a Gist of a sample Dockerfile for an image built on top of the minimal Ubuntu 18.04 operating system.

I would build and push this image to the Docker Hub account damian as oracle-jdk-ubuntu-18.04 with the tag 1.8.0_191, and then use it as the base image for the images my services run in:

```dockerfile
# Build this image on top of the Java base image ("snapshot")
FROM damian/oracle-jdk-ubuntu-18.04:1.8.0_191

# Copy the application JAR into the image
COPY build/hello-world.jar hello-world.jar

# Run this command when the container starts (exec form, so the JVM
# runs as PID 1 and receives stop signals directly)
CMD ["java", "-jar", "hello-world.jar"]
```

Now if I need to update my services to Java 11, all I have to do is publish a new version of my Java snapshot with a compatible JRE installed, update the tag in the FROM instruction of each service’s Dockerfile so it uses the new base image, and rebuild. Voilà: with your next deployment, all your services will be up to date with the latest updates from Ubuntu and Java.
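One optional refinement (a sketch, not something the article’s setup is known to use) is to move the base-image tag into a build argument. An `ARG` declared before `FROM` can be referenced in the `FROM` line, so a version bump becomes a build-time flag instead of an edit to every service’s Dockerfile. The `11.0.1` tag mentioned below is hypothetical.

```dockerfile
# Base-image tag as a build argument; the default keeps today's Java 8 image.
# An ARG declared before FROM is only in scope for the FROM line itself.
ARG JAVA_TAG=1.8.0_191
FROM damian/oracle-jdk-ubuntu-18.04:${JAVA_TAG}

# Copy the application JAR into the image
COPY build/hello-world.jar hello-world.jar

# Start the service when the container starts
CMD ["java", "-jar", "hello-world.jar"]
```

Building with `docker build --build-arg JAVA_TAG=11.0.1 .` would then produce the same service on the (hypothetical) Java 11 base image, while a plain `docker build .` keeps using Java 8.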

But how would this help me during development?

Good question.

I recently started using Docker in unit tests. Imagine you’ve got thousands of test cases (and if you do, believe me, I feel your pain) that connect to a database, where each test class needs a fresh copy of the database and its individual test cases perform CRUD operations on the data. Normally you would reset the database after each test using something like Flyway by Redgate, but this means your tests have to run sequentially and take a lot of time (I’ve seen unit test suites take as long as 20 minutes to complete because of this).

With Docker, you could instead package your database as an image, run a separate database instance per test class inside its own container (I recommend Testcontainers for this), and then run your entire test suite in parallel. Since each test class is linked to its own database, all of them can run on the same host at the same time and finish in a flash (assuming your CPU can handle it).
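To illustrate packaging the database as an image, here’s a sketch that assumes PostgreSQL and a hypothetical `schema.sql` holding your tables and seed data. The official `postgres` image runs any `.sql` files it finds in `/docker-entrypoint-initdb.d/` when it initializes an empty data directory, so every container started from this image (for example, one per test class via Testcontainers) begins with a fresh, identical copy of the database.

```dockerfile
# Official PostgreSQL image (the version tag is an assumption)
FROM postgres:16-alpine

# Hypothetical schema and seed data; the entrypoint runs *.sql files in this
# directory once, when the container starts with an empty data directory
COPY schema.sql /docker-entrypoint-initdb.d/

# Throwaway password for tests only; the image's entrypoint expects one
ENV POSTGRES_PASSWORD=test
```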

Another place I find myself using Docker is when coding in Golang, whose configuration and dependency management I find messy. Instead of installing Go directly on my development machine, I follow a method similar to Konstantin Darutkin’s: I maintain a Dockerfile with my Go installation and dependencies, configured to live-reload my project whenever I change a source file.

Since my project and its Dockerfile are both version-controlled, if I ever need to change my development machine or reformat it, all I need to do is reinstall Docker to continue from where I left off.
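Konstantin Darutkin’s exact setup isn’t reproduced here; the following is a minimal sketch of the same idea. It assumes a Go module (with `go.mod` and `go.sum`) at the project root, and it swaps in CompileDaemon (`github.com/githubnemo/CompileDaemon`), a third-party file watcher, as the live-reload tool. The Go version tag is also an assumption.

```dockerfile
# The Go toolchain lives in the image, not on the development machine
# (the version tag is an assumption)
FROM golang:1.22

# Live-reload tool swapped in for illustration: CompileDaemon watches the
# source tree, rebuilds on change and restarts the resulting binary
RUN go install github.com/githubnemo/CompileDaemon@latest

WORKDIR /src

# Download dependencies in their own layer so they are cached between builds
COPY go.mod go.sum ./
RUN go mod download

# Copy the source; during development, bind-mount the project over /src
# so edits on the host trigger a rebuild inside the container
COPY . .

ENTRYPOINT ["CompileDaemon", "-build=go build -o /tmp/app .", "-command=/tmp/app"]
```

Running the image with the working copy mounted, along the lines of `docker run --rm -v "$PWD":/src <image>`, keeps the edit-rebuild loop entirely inside the container.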

To sum up…

If you are a startup undecided on what’s going to power your new tech stack, or an established service provider thinking of containerizing your Prod and NonProd environments but wary of sailing into ‘untested’ waters (smirk), consider the following.

Consistency

You might have the best developers in the entire industry, but with all the different operating systems out in the wild, everyone prefers their own setup. If you’ve got your local environment properly configured with Docker, all a new developer needs to do is install Docker, spawn a container with your application and get started.

Debugging

You can easily isolate and eliminate environment issues across your team without needing to know how each developer’s machine is set up. A good example: when we once had to fix some time-synchronization issues on our servers by migrating from ntpd to Chrony, all we did was update our base image, and our developers were none the wiser.

Automation

Most CI/CD tools, including Jenkins, CircleCI and Travis CI, now integrate closely with Docker, which makes propagating your changes from environment to environment a breeze.

Cloud Support

Containers need to be monitored and controlled, or else you will have no idea what’s running on your servers. Datadog, a cloud-monitoring company, had this to say about Docker:

> Containers’ short lifetimes and increased density have significant implications for infrastructure monitoring. They represent an order-of-magnitude increase in the number of things that need to be individually monitored.

The solution to this monstrous endeavour lies in orchestration tools: self-managed ones such as Docker Swarm and Kubernetes, and vendor-managed services such as AWS’s Elastic Container Service and Google Kubernetes Engine, which monitor containers and manage their clustering and scheduling.
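At the level of a single image, one small step in this direction is Docker’s `HEALTHCHECK` instruction, which lets Docker report a container as healthy or unhealthy; Docker Swarm uses that status to replace unhealthy service tasks, while Kubernetes ignores it in favor of its own probes. Here’s a sketch for the earlier service, assuming a hypothetical `/health` endpoint on port 8080 and installing `curl` to query it:

```dockerfile
FROM damian/oracle-jdk-ubuntu-18.04:1.8.0_191

# curl is installed only so the health check below can query the service
RUN apt-get update \
    && apt-get install -y --no-install-recommends curl \
    && rm -rf /var/lib/apt/lists/*

COPY build/hello-world.jar hello-world.jar

# Hypothetical endpoint: assumes the service answers on port 8080 at /health.
# Three consecutive failures (non-zero exit) mark the container unhealthy.
HEALTHCHECK --interval=30s --timeout=3s --retries=3 \
  CMD curl -fsS http://localhost:8080/health || exit 1

CMD ["java", "-jar", "hello-world.jar"]
```

`docker ps` then shows each container’s health status next to its uptime, which gives both you and the orchestrator something concrete to act on.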

With Docker’s widespread use and its tight integration with cloud service providers like AWS and Google Cloud, it’s quickly becoming a no-brainer to dockerize your new or existing applications.
