Preface

Databend has attracted the attention of many community members since it was open sourced in 2021. Because Databend is developed in Rust, we designed Rust courses and established several Rust interest groups to attract more developers, especially those with no Rust development experience.

Databend also introduced "Good First Issue" labels to encourage newcomers to the community to make their first contributions. So far, there are more than 100 contributors, which is quite something.

However, after several iterations over the past year, the Databend code has become increasingly complex. With 260,000 lines of Rust code and 46 crates in the master branch at present, even developers familiar with Rust can feel lost after cloning the repository, and the barrier for newcomers keeps rising. Many community groups have repeatedly asked for a series of articles on reading the Databend source code to make it more accessible.

In response, we are launching a series of articles on reading the source code of Databend. We hope these articles strengthen communication between developers and the community, and serve as a source of inspiration.

The Story of Databend

A question that many developers have asked me is: why do you use Rust to build a database from scratch? In fact, this question can be divided into two sub-questions:

- Why choose Rust?

Answer: Most of our early members were contributors to well-known databases such as ClickHouse, TiDB and TokuDB. In terms of technology stack, we were more familiar with C++ and Go. Tiger Brother (u/bohutang) also used Go to implement a small database prototype, vectorsql, during the pandemic. Some developers said that the vectorsql architecture is very elegant and worth learning from.

Every language has advantages and disadvantages, and the choice should depend on the specific scenario. At present, most DBMSs are written in C++ or Java, while the newer NewSQL systems tend to use Go. In our experience, C and C++ stand for high performance, because it is easy for developers to write C/C++ code that runs efficiently. However, development efficiency in C++ is painfully low, and with its weak tool chain it is difficult to write memory-safe and concurrency-safe code on the first attempt. Go, by contrast, better fits the standard of elegance and simplicity, with a mature tool chain and high development efficiency. Yet progress on generics was too slow, the memory used by a database cannot be controlled flexibly, and runtime performance cannot match C/C++, especially when SIMD instructions require interaction with assembly code. What we needed was a language that offers both development efficiency (memory safety, concurrency safety, and a mature tool chain) and runtime efficiency. At the time, Rust seemed to be our only choice, and we have never regretted it. Rust meets our needs perfectly, and it is also cool!

- Why build a database system from scratch?

In general, there are only two routes:

Secondary development and optimization based on a well-known open-source database

Most people would choose this route: with a solid database as the base, there is no need to repeat existing work, so the team can focus on optimization, improvement and refactoring. Versions can be released and commercialized earlier. The disadvantage is that the fork effectively becomes an independent system whose changes are hard to contribute back to the community, as with the various forks of PostgreSQL (PG).

Building a new database system from scratch

This route is rather hard to follow, because a database system covers a very large field, and each sub-area is worth ten years of study or more. On the other hand, since there is no existing foundation, the designers can design and adjust more flexibly without being constrained by historical baggage. From the very beginning, Databend was designed as a cloud native data warehouse, which is very different from a traditional database system. Transforming an existing code base might cost as much as starting from scratch. Therefore, we chose the second route and built a new cloud data warehouse from scratch.

Architecture

The architecture determines the superstructure, so let's start with the architecture of Databend.

[Figure: Databend architecture (image from r/DatafuseLabs, Reading Source Code of Databend (1): Introduction)]

Although we started from scratch with Rust, we have integrated some excellent open-source components and ecosystems to avoid duplicated work. For example, Databend is compatible with the ANSI SQL standard, supports mainstream protocols such as MySQL and ClickHouse, embraces the widely connected Arrow ecosystem, and bases its storage format on Parquet, a format common in big data. We actively contribute to upstream communities and open-source libraries such as arrow2 and Tokio, and we have also open sourced some common components as independent projects on GitHub (openraft, opendal, opencache, opensrv, etc.).

Databend is a cloud native elastic database. We not only separated compute from storage, but also carefully designed each layer for maximum elasticity. Databend can be divided into three layers: the meta-service layer, the query layer and the storage layer. Each of the three layers can be scaled flexibly, which means that users can choose the cluster size that best suits their business and scale the cluster as the business grows.

Next, we will introduce the main code modules of Databend from the perspective of these three layers.

Modules

MetaService Layer

The MetaService layer mainly stores and reads persistent metadata, such as Catalogs and Users.

| Package | Usage |
|---|---|
| meta | MetaService is deployed as an independent process, and multiple instances can be deployed to form a cluster. It uses Raft for distributed consensus underneath, and query nodes communicate with MetaService as gRPC clients. |
| meta/types | Definitions of the structures stored in the MetaService layer. Because these structures are eventually persisted, serialization also has to be considered. Protobuf is currently used for serialization and deserialization; the conversion rules between the Rust structures and Protobuf are defined in the common/proto-conv subdirectory. |
| meta/sled-store | The MetaService layer currently uses sled to save persistent data. The sled-related interfaces are encapsulated in this subdirectory. |
| meta/raft-store | The openraft user layer needs to implement a storage interface to save Raft data. This subdirectory is the MetaService implementation of that openraft storage layer, built on sled storage. It also implements the state machine that the openraft user layer has to customize. |
| meta/api | The user-layer API exposed to query, implemented on top of kvapi. |
| common/meta/grpc | A client module built on gRPC; the MetaService client uses it to communicate with MetaService. |
| raft | https://github.com/datafuselabs/openraft, a fully asynchronous Raft library derived from the async-raft project. |
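
To make the role of the state machine mentioned for meta/raft-store more concrete, here is a minimal sketch of a key-value state machine that applies committed log entries in order. It does not use the real openraft traits or the MetaService types: `Command`, `KvStateMachine` and all method names are hypothetical, and persistence (sled in Databend's case) is left out entirely.

```rust
use std::collections::BTreeMap;

/// A hypothetical committed log entry payload (not openraft's entry type).
#[derive(Debug, Clone)]
pub enum Command {
    Upsert { key: String, value: String },
    Delete { key: String },
}

/// A hypothetical in-memory state machine holding metadata as key-value pairs.
#[derive(Debug, Default)]
pub struct KvStateMachine {
    /// Index of the last log entry that was applied.
    last_applied: u64,
    data: BTreeMap<String, String>,
}

impl KvStateMachine {
    /// Applies one committed entry and returns true if it changed the state machine.
    /// Entries at or below `last_applied` are skipped, so replaying a log is harmless.
    pub fn apply(&mut self, index: u64, cmd: &Command) -> bool {
        if index <= self.last_applied {
            return false;
        }
        match cmd {
            Command::Upsert { key, value } => {
                self.data.insert(key.clone(), value.clone());
            }
            Command::Delete { key } => {
                self.data.remove(key);
            }
        }
        self.last_applied = index;
        true
    }

    /// Reads a value from the current state.
    pub fn get(&self, key: &str) -> Option<&str> {
        self.data.get(key).map(|v| v.as_str())
    }

    /// Returns the index of the last applied log entry.
    pub fn last_applied(&self) -> u64 {
        self.last_applied
    }
}
```

In this sketch the consensus layer would call `apply` once per committed entry on every node, which is what keeps the replicas' metadata identical; a real implementation would also persist the state and support snapshots.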

Query Layer

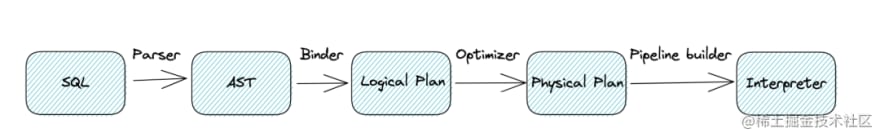

Query nodes are mainly used for computation. Multiple query nodes can form an MPP cluster, and in theory performance scales horizontally with the number of query nodes. A SQL statement goes through the following transformations in query:

A SQL string is first parsed into an AST (abstract syntax tree) by the parser. The Binder then binds it with other information, such as the catalog, and turns it into a logical plan. Next, a series of optimizer passes converts the logical plan into a physical plan. Finally, the physical plan is traversed to build the corresponding execution logic. The modules involved in the query layer are as follows:

| Package | Usage |
|---|---|
| query | The query service. Its entry point is in bin/databend-query.rs, and it contains a number of submodules. Some important ones: (1) api - HTTP/RPC interfaces exposed externally, (2) catalogs - catalog management; the default catalog (stored in MetaService) and the hive catalog (stored in the hive meta store) are supported at present, (3) clusters - query cluster management, (4) config - query configuration, (5) databases - database engines supported by query, (6) evaluator - expression evaluation tools, (7) interpreters - SQL executors, which perform physical execution after the plan has been built from the SQL, (8) pipelines - the scheduling framework for physical operators, (9) servers - exposed services, including ClickHouse/MySQL/HTTP, etc., (10) sessions - session management, (11) sql - the new planner design, new binder logic and new optimizer design, (12) storages - table engines, the most common of which is the fuse engine, (13) table_functions - table functions, such as numbers. |
| common/ast | The new SQL parser, implemented based on nom_rule. |
| common/datavalues | Definitions of the various column types, representing the layout of data in memory. This part will be gradually migrated to common/expressions. |
| common/datablocks | A Datablock represents a set of columns (a Vec of columns) and encapsulates some common methods. This part will be gradually migrated to common/expressions. |
| common/functions | Declarations of scalar functions and aggregate functions. |
| common/hashtable | An implementation of a linear probing hash table, mainly used in group by aggregation and join scenarios. |
| common/formats | Serialization and deserialization of external data in various formats, such as CSV/TSV/JSON. |
| opensrv | https://github.com/datafuselabs/opensrv |
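
The path a statement takes through the query layer (parser, binder, optimizer, execution) can be illustrated with a toy pipeline. This is only a sketch under strong assumptions: it handles a single statement shape, `SELECT <column> FROM <table>`, and every type and function name in it (`Ast`, `LogicalPlan`, `PhysicalPlan`, `Catalog`, `run_query`, and so on) is hypothetical rather than taken from the Databend code base.

```rust
/// Parser output: a tiny AST for `SELECT <column> FROM <table>`.
#[derive(Debug, PartialEq)]
pub struct Ast {
    pub column: String,
    pub table: String,
}

/// Binder output: names resolved against the catalog.
#[derive(Debug, PartialEq)]
pub struct LogicalPlan {
    pub table: String,
    pub column_index: usize,
}

/// Optimizer output: a description of how to execute the query.
#[derive(Debug, PartialEq)]
pub enum PhysicalPlan {
    ScanColumn { table: String, column_index: usize },
}

/// A toy catalog with one table, its column names and its rows.
pub struct Catalog {
    pub table: String,
    pub columns: Vec<String>,
    pub rows: Vec<Vec<i64>>,
}

/// Stage 1: SQL string to AST.
pub fn parse(sql: &str) -> Result<Ast, String> {
    let tokens: Vec<&str> = sql.split_whitespace().collect();
    match tokens.as_slice() {
        [select, column, from, table]
            if select.eq_ignore_ascii_case("SELECT") && from.eq_ignore_ascii_case("FROM") =>
        {
            Ok(Ast { column: (*column).to_string(), table: (*table).to_string() })
        }
        _ => Err(format!("unsupported statement: {}", sql)),
    }
}

/// Stage 2: AST plus catalog information to logical plan.
pub fn bind(ast: &Ast, catalog: &Catalog) -> Result<LogicalPlan, String> {
    if ast.table != catalog.table {
        return Err(format!("unknown table: {}", ast.table));
    }
    let column_index = catalog
        .columns
        .iter()
        .position(|c| *c == ast.column)
        .ok_or_else(|| format!("unknown column: {}", ast.column))?;
    Ok(LogicalPlan { table: ast.table.clone(), column_index })
}

/// Stage 3: logical plan to physical plan (no real optimization in this sketch).
pub fn optimize(plan: LogicalPlan) -> PhysicalPlan {
    PhysicalPlan::ScanColumn { table: plan.table, column_index: plan.column_index }
}

/// Stage 4: traverse the physical plan and run it.
pub fn execute(plan: &PhysicalPlan, catalog: &Catalog) -> Vec<i64> {
    match plan {
        PhysicalPlan::ScanColumn { column_index, .. } => {
            catalog.rows.iter().map(|row| row[*column_index]).collect()
        }
    }
}

/// Chains the four stages together.
pub fn run_query(sql: &str, catalog: &Catalog) -> Result<Vec<i64>, String> {
    let ast = parse(sql)?;
    let logical = bind(&ast, catalog)?;
    let physical = optimize(logical);
    Ok(execute(&physical, catalog))
}
```

Keeping each stage as a separate function with its own output type mirrors the separation described above: errors about syntax surface in `parse`, errors about unknown names surface in `bind`, and only a fully resolved plan reaches execution.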
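
The hash table listed under common/hashtable is described only as being based on linear probing and used for group by and join. As an illustration of that general technique, and not of Databend's actual implementation, here is a small open-addressing table that counts rows per group key. The type `LinearProbingCounter`, the multiplicative hash and the 50% load-factor limit are all assumptions made for this sketch.

```rust
/// A hypothetical open-addressing hash table with linear probing.
/// Each slot holds an optional (group key, row count) pair.
pub struct LinearProbingCounter {
    slots: Vec<Option<(u64, u64)>>,
    len: usize,
}

impl LinearProbingCounter {
    /// Creates a table; the capacity is rounded up to a power of two so that
    /// `index & (capacity - 1)` can replace a modulo operation.
    pub fn with_capacity(capacity: usize) -> Self {
        let cap = capacity.max(2).next_power_of_two();
        Self { slots: vec![None; cap], len: 0 }
    }

    /// Multiplicative hashing; the constant is an arbitrary choice for this sketch.
    fn hash(key: u64) -> usize {
        (key.wrapping_mul(0x9E37_79B9_7F4A_7C15) >> 32) as usize
    }

    /// Returns the slot that holds `key`, or the first empty slot on its probe path.
    /// Terminates because the load factor is kept at or below 50%.
    fn probe(&self, key: u64) -> usize {
        let mask = self.slots.len() - 1;
        let mut i = Self::hash(key) & mask;
        loop {
            match self.slots[i] {
                // Occupied by another key: step to the next slot (linear probing).
                Some((k, _)) if k != key => i = (i + 1) & mask,
                _ => return i,
            }
        }
    }

    /// Doubles the capacity and re-inserts every entry.
    fn grow(&mut self) {
        let new_cap = self.slots.len() * 2;
        let old = std::mem::replace(&mut self.slots, vec![None; new_cap]);
        for (key, count) in old.into_iter().flatten() {
            let i = self.probe(key);
            self.slots[i] = Some((key, count));
        }
    }

    /// Adds one row to the group identified by `key`.
    pub fn increment(&mut self, key: u64) {
        if (self.len + 1) * 2 > self.slots.len() {
            self.grow();
        }
        let i = self.probe(key);
        if let Some((_, count)) = &mut self.slots[i] {
            *count += 1;
        } else {
            self.slots[i] = Some((key, 1));
            self.len += 1;
        }
    }

    /// Returns the row count for `key`, or 0 if the key was never seen.
    pub fn get(&self, key: u64) -> u64 {
        match self.slots[self.probe(key)] {
            Some((_, count)) => count,
            None => 0,
        }
    }
}
```

Calling `increment` once per input row and then reading the counts back corresponds to a `SELECT key, count(*) ... GROUP BY key`. Linear probing keeps colliding entries in neighbouring slots, which tends to be cache friendly for this kind of workload.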

Storage Layer

The storage layer mainly manages the Snapshots, Segments and index information of tables, and handles interaction with the underlying IO. One highlight of the storage layer is an incremental view, similar to that of Iceberg, built on snapshot isolation. This enables time travel: a table can be read in any of its historical states.
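
To show why snapshots make time travel cheap, here is a minimal sketch in which every write produces a new immutable snapshot that reuses the segments of the previous one. It is a simplification under stated assumptions, not the fuse engine's format: the types `Segment`, `Snapshot` and `Table`, the sequential snapshot ids, and the in-memory rows are all hypothetical.

```rust
use std::sync::Arc;

/// A hypothetical segment: an immutable group of rows.
#[derive(Debug)]
pub struct Segment {
    pub rows: Vec<i64>,
}

/// A snapshot is the immutable list of segments visible at one point in time.
#[derive(Debug, Clone)]
pub struct Snapshot {
    pub id: u64,
    pub segments: Vec<Arc<Segment>>,
}

/// A table keeps every snapshot ever committed.
#[derive(Debug, Default)]
pub struct Table {
    snapshots: Vec<Snapshot>,
}

impl Table {
    /// Appends rows by writing one new segment and committing a new snapshot.
    /// Earlier segments are shared through `Arc`, never copied or modified.
    pub fn append(&mut self, rows: Vec<i64>) -> u64 {
        let mut segments = self
            .snapshots
            .last()
            .map(|s| s.segments.clone())
            .unwrap_or_default();
        segments.push(Arc::new(Segment { rows }));
        let id = self.snapshots.len() as u64 + 1;
        self.snapshots.push(Snapshot { id, segments });
        id
    }

    /// Time travel: reads the table exactly as it was at `snapshot_id`.
    pub fn scan_at(&self, snapshot_id: u64) -> Option<Vec<i64>> {
        let snapshot = self.snapshots.iter().find(|s| s.id == snapshot_id)?;
        Some(
            snapshot
                .segments
                .iter()
                .flat_map(|seg| seg.rows.iter().copied())
                .collect(),
        )
    }

    /// Reads the most recent state, or nothing if no snapshot exists yet.
    pub fn scan_latest(&self) -> Vec<i64> {
        match self.snapshots.last() {
            Some(s) => self.scan_at(s.id).unwrap_or_default(),
            None => Vec::new(),
        }
    }
}
```

Because a snapshot never changes after it is committed, a reader that holds an older snapshot id keeps seeing a consistent table while new snapshots are added, which is the essence of snapshot isolation in this sketch.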

Future work

This source code reading series has just started. The following tutorials will explain the source code of each module step by step, in the order introduced above. Most of the tutorials will be articles; for some important and interesting module designs, we may use live video to encourage discussion. This is only a preliminary plan, and we welcome your suggestions on timing or content along the way. In any case, we hope this activity attracts more like-minded people to take part in the development of Databend, and to learn, communicate and grow together.

About Databend

Databend is an open-source, modern data warehouse that is elastic and low cost. It can do real-time data analysis on object storage. We look forward to your attention and hope to explore cloud native data warehouse solutions with you, and to create a new generation of open-source data cloud together.

Databend documentation: https://databend.rs/

Twitter: https://twitter.com/Datafuse_Labs

Slack: https://datafusecloud.slack.com/

WeChat: Databend
