This article was originally posted on Everything DevOps.

Persisting data in Kubernetes is complex because, although Pods have readable and writable disk space, that disk space is tied to the Pod’s lifecycle.

When building applications on Kubernetes, developers often want storage for their Pods that doesn’t depend on a Pod’s lifecycle, is available to all nodes, and can survive cluster crashes.

To meet those use cases, Kubernetes provides developers with PersistentVolumes, which are volume plugins like Volumes but have a lifecycle independent of any individual Pod that uses them.

In this article, with the help of a demo example from the Kubernetes docs, you will learn what PersistentVolumes are and how to configure a Pod to use a PersistentVolume for storage.

Prerequisite

To follow this article, you need a single-Node Kubernetes cluster with the kubectl command-line tool configured to communicate with the cluster.

If you don’t have a single-Node cluster, you can create one with Minikube, which this article uses.

What are Persistent Volumes?

PersistentVolumes (PVs) are units of storage in a Kubernetes cluster that an administrator has provisioned. Just as a Node is a cluster resource, a PersistentVolume is a resource in the cluster.

A PersistentVolume provides storage in a Kubernetes cluster through an API object that captures the details of the actual storage implementation, be that NFS, iSCSI, or a cloud-provider-specific storage system.

How to use PersistentVolume for storage

To use a PersistentVolume, you must request it through a PersistentVolumeClaim (PVC). A PersistentVolumeClaim is a request for storage, and it is what you use to mount a PersistentVolume into a Pod.

One can summarize the entire process in the following three steps:

- The cluster administrator creates a PersistentVolume backed by physical storage and does not associate the volume with any Pod.

- The developer (cluster user) creates a PersistentVolumeClaim that is automatically bound to a suitable PersistentVolume.

- The developer then creates a Pod that uses the created PersistentVolumeClaim for storage.

In this article, you will take the roles of both cluster administrator and cluster user to walk through these steps.

Configuring a Pod to use a PersistentVolume for storage

In this article, you will create a hostPath PersistentVolume. Kubernetes supports hostPath volumes for development and testing on a single-Node cluster. A hostPath PersistentVolume uses a file or directory on the Node to emulate network-attached storage.

In a production cluster, using hostPath is not recommended because it presents many security risks. Instead, the cluster administrator would provision a network resource like an NFS share, a Google Compute Engine persistent disk, or an Amazon Elastic Block Store volume.

Creating a file for the hostPath

To create a file for the hostPath, open a shell to the single Node in your cluster. As this article uses Minikube, the terminal command will be:

$ minikube ssh

In the shell, create a directory for the file:

# This article assumes that your Node uses 'sudo' to run commands as the superuser

$ sudo mkdir /mnt/tmp

In the /mnt/tmp directory, create an index.html file:

$ sudo sh -c "echo 'Hello from a Kubernetes storage' > /mnt/tmp/index.html"

Test that the index.html file exists:

$ cat /mnt/tmp/index.html

The output of the above command should be:

Hello from a Kubernetes storage

Now, you can close the shell to your single Node. Next, you will create a PersistentVolume for the hostPath.

Creating a hostPath PersistentVolume

As with any Kubernetes resource, you create a PersistentVolume from a YAML configuration file.

Below is the YAML configuration file for a hostPath PersistentVolume:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: learn-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  hostPath:
    path: "/mnt/tmp"

The configuration file specifies that the volume is at /mnt/tmp on the cluster's Node. It also defines a capacity of 10 gibibytes and an access mode of ReadWriteOnce, which means the volume can be mounted as read-write by a single Node. Finally, it sets the PersistentVolume's storageClassName to manual, which Kubernetes uses to bind PersistentVolumeClaim requests to this PersistentVolume.

Create the hostPath PersistentVolume with the above configuration using the command below:

$ kubectl apply -f https://raw.githubusercontent.com/Kikiodazie/persisting-data-in-kubernetes-with-persistent-volumes/master/hostPathPersistentVolume.yaml

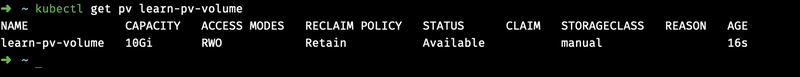

Verify the creation of the PersistentVolume with the command below:

# learn-pv-volume is the PV name defined in the configuration file above

$ kubectl get pv learn-pv-volume

The above command will show the PersistentVolume STATUS as Available, which means the PersistentVolume is yet to be bound to a PersistentVolumeClaim.

Next, you will create a PersistentVolumeClaim to request the PersistentVolume you’ve created.

Creating a PersistentVolumeClaim

As with the PersistentVolume, you create a PersistentVolumeClaim from a YAML file. Below is a PersistentVolumeClaim configuration file that requests a volume of at least three gibibytes that can provide read-write access for at least one Node.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: learn-pv-claim
spec:
  storageClassName: manual
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 3Gi

To create a PersistentVolumeClaim with the above configurations, run the command below:

$ kubectl apply -f https://raw.githubusercontent.com/Kikiodazie/persisting-data-in-kubernetes-with-persistent-volumes/master/persistentVolumeClaim.yaml

After running the above command, the Kubernetes control plane will look for a PersistentVolume that satisfies the claim's requirements. If the control plane finds a suitable PersistentVolume with the same StorageClass, it binds the claim to that PersistentVolume.

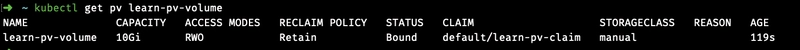
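The matching step described above can be pictured with a small Python model. This is only a simplified sketch, not the control plane's actual code: the names `Volume`, `Claim`, `parse_quantity`, and `find_volume` are hypothetical, only binary suffixes such as `Gi` are handled, and picking the smallest suitable volume is an assumption of this sketch.

```python
from dataclasses import dataclass
from typing import List, Optional

# Binary suffixes used by Kubernetes quantities (only a subset is handled here).
_BINARY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40}


def parse_quantity(quantity: str) -> int:
    """Convert a quantity such as '10Gi' into bytes (binary suffixes only)."""
    for suffix, factor in _BINARY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return int(quantity[: -len(suffix)]) * factor
    return int(quantity)  # no suffix: treat the value as a plain byte count


@dataclass
class Volume:  # hypothetical stand-in for a PersistentVolume
    name: str
    storage_class: str
    capacity: str
    access_modes: frozenset
    phase: str = "Available"


@dataclass
class Claim:  # hypothetical stand-in for a PersistentVolumeClaim
    name: str
    storage_class: str
    request: str
    access_modes: frozenset


def find_volume(claim: Claim, volumes: List[Volume]) -> Optional[Volume]:
    """Return a volume that satisfies the claim, or None if there is none."""
    candidates = [
        v
        for v in volumes
        if v.phase == "Available"
        and v.storage_class == claim.storage_class
        and claim.access_modes <= v.access_modes  # every requested mode is offered
        and parse_quantity(v.capacity) >= parse_quantity(claim.request)
    ]
    # Assumption of this sketch: prefer the smallest volume that is big enough.
    return min(candidates, key=lambda v: parse_quantity(v.capacity), default=None)


pv = Volume("learn-pv-volume", "manual", "10Gi", frozenset({"ReadWriteOnce"}))
pvc = Claim("learn-pv-claim", "manual", "3Gi", frozenset({"ReadWriteOnce"}))

match = find_volume(pvc, [pv])
print(match.name if match else "no suitable volume")  # prints "learn-pv-volume"
```

In this model, the 3Gi claim is satisfied by the 10Gi volume because the volume's capacity is at least the requested amount and the storage class and access mode both match.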

To confirm that the PersistentVolumeClaim binds to the PersistentVolume, get the PersistentVolume’s STATUS with the command below:

$ kubectl get pv learn-pv-volume

The above command will show a STATUS of Bound, as shown in the screenshot below.

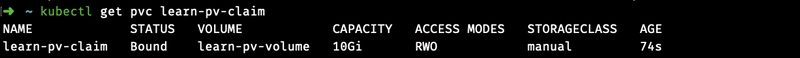

You can also see that the PersistentVolumeClaim is bound to the PersistentVolume by looking at the PersistentVolumeClaim:

$ kubectl get pvc learn-pv-claim
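If you prefer to check the binding from a script rather than by reading the table output, the sketch below shows one possible approach in Python. It assumes you have the JSON form of the PersistentVolume, for example from `kubectl get pv learn-pv-volume -o json`. The function name `binding_summary` is hypothetical, and the sample JSON is hand-written and heavily trimmed, with the `default` namespace assumed.

```python
import json


def binding_summary(pv_json: str) -> str:
    """Summarize the phase of a PersistentVolume and the claim it is bound to."""
    pv = json.loads(pv_json)
    name = pv["metadata"]["name"]
    phase = pv.get("status", {}).get("phase", "Unknown")
    claim_ref = pv.get("spec", {}).get("claimRef")
    if phase == "Bound" and claim_ref:
        return f"{name} is Bound to claim {claim_ref['namespace']}/{claim_ref['name']}"
    return f"{name} is {phase}"


# Hand-written, trimmed sample of a bound PersistentVolume.
# Assumption: the claim lives in the "default" namespace.
SAMPLE_PV_JSON = """
{
  "metadata": {"name": "learn-pv-volume"},
  "spec": {"claimRef": {"namespace": "default", "name": "learn-pv-claim"}},
  "status": {"phase": "Bound"}
}
"""

print(binding_summary(SAMPLE_PV_JSON))
# prints "learn-pv-volume is Bound to claim default/learn-pv-claim"
```

Before the claim exists, the same function would report the volume as Available, since the PersistentVolume has no claim reference yet.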

Next, you will create a Pod to use the PersistentVolumeClaim as a volume.

Creating a Pod

Below is the YAML configuration file for the Pod you will create:

apiVersion: v1
kind: Pod
metadata:
  name: learn-pv-pod
spec:
  volumes:
    - name: learn-pv-storage
      persistentVolumeClaim:
        claimName: learn-pv-claim
  containers:
    - name: learn-pv-container
      image: nginx
      ports:
        - containerPort: 80
          name: "http-server"
      volumeMounts:
        - mountPath: "/usr/share/nginx/html"
          name: learn-pv-storage

Notice that the above configuration file specifies the PersistentVolumeClaim but not the PersistentVolume. This is because, from the Pod’s perspective, the claim is a volume.
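To make that chain of references concrete, here is a small Python sketch that follows a container's mount path to the directory on the Node. It is an illustration only: the function name `host_path_for_mount` is hypothetical, the dictionaries are trimmed copies of the manifests in this article, and the `volumeName` field is filled in by hand to stand for the binding that Kubernetes records on the claim.

```python
def host_path_for_mount(pod, claims, volumes, mount_path):
    """Follow mountPath -> Pod volume -> claim -> PersistentVolume -> hostPath."""
    for container in pod["spec"]["containers"]:
        for mount in container.get("volumeMounts", []):
            if mount["mountPath"] != mount_path:
                continue
            # The mount names a Pod-level volume (raises StopIteration if absent).
            pod_volume = next(
                v for v in pod["spec"]["volumes"] if v["name"] == mount["name"]
            )
            # The Pod-level volume names a claim, never a PersistentVolume.
            claim_name = pod_volume["persistentVolumeClaim"]["claimName"]
            # The bound claim records which PersistentVolume backs it.
            pv_name = claims[claim_name]["spec"]["volumeName"]
            return volumes[pv_name]["spec"]["hostPath"]["path"]
    return None


# Trimmed copies of the objects used in this article.
pod = {
    "spec": {
        "volumes": [
            {
                "name": "learn-pv-storage",
                "persistentVolumeClaim": {"claimName": "learn-pv-claim"},
            }
        ],
        "containers": [
            {
                "name": "learn-pv-container",
                "volumeMounts": [
                    {"mountPath": "/usr/share/nginx/html", "name": "learn-pv-storage"}
                ],
            }
        ],
    }
}
# Assumption: the claim is already bound, so volumeName is set by hand here.
claims = {"learn-pv-claim": {"spec": {"volumeName": "learn-pv-volume"}}}
volumes = {"learn-pv-volume": {"spec": {"hostPath": {"path": "/mnt/tmp"}}}}

print(host_path_for_mount(pod, claims, volumes, "/usr/share/nginx/html"))
# prints "/mnt/tmp"
```

The Pod never mentions learn-pv-volume or /mnt/tmp directly, so the administrator could swap the backing storage without the Pod configuration changing.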

To create the Pod from the Pod configuration file above, run the command below:

$ kubectl apply -f https://raw.githubusercontent.com/Kikiodazie/persisting-data-in-kubernetes-with-persistent-volumes/master/persistentVolumePod.yaml

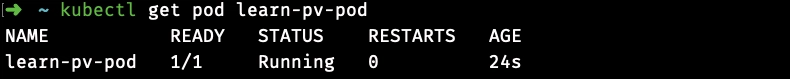

To verify that the container (Nginx) in the Pod is running, run the command below:

$ kubectl get pod learn-pv-pod

Next, you will confirm that the Pod has been configured to use the hostPath PersistentVolume as storage from the PersistentVolumeClaim.

Testing the configuration

To test the configuration, verify that the Nginx server is serving the index.html file from the hostPath volume.

To do so, enter the shell of the Nginx container running in your Pod with the command below:

$ kubectl exec -it learn-pv-pod -- /bin/bash

In the shell, run the commands below to install curl, which you will use to request the index.html file from the Nginx server.

$ apt update

$ apt install curl

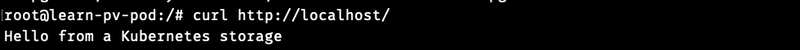

After installing curl, use it to verify the configuration works with the command below:

$ curl http://localhost/

The above command will output the text you wrote to the index.html file on the hostPath volume:

Hello from a Kubernetes storage

Now, even if the Pod dies or restarts, upon recreation, it will have access to the same storage.

To test it out, delete the Pod with:

$ kubectl delete pod learn-pv-pod

And create it again with:

$ kubectl apply -f https://raw.githubusercontent.com/Kikiodazie/persisting-data-in-kubernetes-with-persistent-volumes/master/persistentVolumePod.yaml

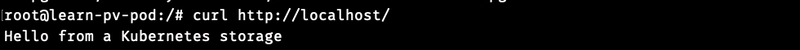

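Then open a shell in the recreated Pod and rerun the curl command. The new container starts from a fresh image, so install curl again first if it is not available: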

$ kubectl exec -it learn-pv-pod -- /bin/bash

$ curl http://localhost/

You will still get the same output, Hello from a Kubernetes storage, which shows that the data outlived the Pod.

Cleaning up

Clean up the entire setup by deleting the Pod, PersistentVolumeClaim, and the PersistentVolume with the commands below:

$ kubectl delete pod learn-pv-pod

$ kubectl delete pvc learn-pv-claim

$ kubectl delete pv learn-pv-volume

Also, delete the index.html file and the directory by running the following commands in the Node shell:

$ sudo rm /mnt/tmp/index.html

$ sudo rmdir /mnt/tmp

Conclusion

This article introduced you to PersistentVolumes in Kubernetes. It also showed you how to configure a Pod to use a PersistentVolume for storage.

There is more to learn about persisting data in Kubernetes with volumes. The Kubernetes documentation on PersistentVolumes is a good next step.
