We shall install Grafana using Helm. To install Helm:

$ curl -fsSL -o get_helm.sh https://raw.githubusercontent.com/helm/helm/main/scripts/get-helm-3

$ chmod 700 get_helm.sh

$ ./get_helm.sh
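Which setup steps come next depends on the Helm major version you have. Here is a minimal sketch for checking it, assuming bash and `helm` on your PATH. `helm_major_version` is a hypothetical helper name, not part of Helm:

```bash
# Hypothetical helper: print the major version of the helm client on PATH.
helm_major_version() {
  local out re='v([0-9]+)\.'
  # Helm 3 prints e.g. "v3.8.0+g..."; Helm 2 prints "Client: v2..." and may exit
  # non-zero if Tiller is unreachable, so keep whatever stdout we got.
  out=$(helm version --short 2>/dev/null) || true
  if [[ $out =~ $re ]]; then
    printf '%s\n' "${BASH_REMATCH[1]}"
  else
    return 1
  fi
}
```

For example, `[ "$(helm_major_version)" = 3 ] && echo "Helm 3: no Tiller needed"`.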

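Initialize it. This step and the Tiller steps that follow apply only to Helm 2. Helm 3, which the script above installs, has no helm init command and no Tiller, so on Helm 3 skip ahead to the helm list test.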

helm init

Create a service account for Tiller:

kubectl --namespace kube-system create serviceaccount tiller

Bind the new service account to the cluster-admin role. This will give tiller admin access to the entire cluster.

kubectl create clusterrolebinding tiller-cluster-rule --clusterrole=cluster-admin --serviceaccount=kube-system:tiller

Patch the tiller-deploy deployment to add serviceAccount: tiller under spec.template.spec:

kubectl --namespace kube-system patch deploy tiller-deploy -p '{"spec":{"template":{"spec":{"serviceAccount":"tiller"}}}}'
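On Helm 2, you can confirm that the patch took effect before running helm commands. This is a minimal sketch, assuming bash and kubectl access to kube-system. `check_tiller_sa` is a hypothetical helper name:

```bash
# Helm 2 only. Hypothetical helper: succeed if tiller-deploy runs as the "tiller" service account.
check_tiller_sa() {
  local sa
  sa=$(kubectl --namespace kube-system get deploy tiller-deploy \
    -o jsonpath='{.spec.template.spec.serviceAccount}') || return 1
  [ "$sa" = "tiller" ]
}
```

For example, `check_tiller_sa && kubectl --namespace kube-system rollout status deploy/tiller-deploy` waits for the patched Tiller pod to roll out.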

Test that Helm is working:

helm list

You can now proceed to install Grafana.

Adding the Official Grafana Helm Repo

helm repo add grafana https://grafana.github.io/helm-charts

helm repo update
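After the repo update, it helps to check that the chart used below is visible. This is a minimal sketch, assuming Helm 3 (`helm search repo`) and bash. `chart_available` is a hypothetical helper name:

```bash
# Hypothetical helper: succeed if `helm search repo` lists exactly the given chart name.
chart_available() {
  local chart=${1:-grafana/loki-stack}
  # Skip the header row, then look for an exact match in the NAME column.
  helm search repo "$chart" |
    awk -v c="$chart" 'NR > 1 && $1 == c { found = 1 } END { exit !found }'
}
```

For example, `chart_available grafana/loki-stack || echo "run helm repo update again"`.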

LOGS

We use Loki, a Grafana project, for logs.

Install the loki-stack Helm chart with the following components enabled (Loki, Promtail, Grafana, Prometheus):

helm install loki grafana/loki-stack --set grafana.enabled=true,prometheus.enabled=true \
  --set prometheus.server.retention=2d,loki.config.table_manager.retention_deletes_enabled=true,loki.config.table_manager.retention_period=48h \
  --set grafana.persistence.enabled=true,grafana.persistence.size=1Gi \
  --set loki.persistence.enabled=true,loki.persistence.size=1Gi \
  --set prometheus.alertmanager.persistentVolume.enabled=true,prometheus.alertmanager.persistentVolume.size=1Gi \
  --set prometheus.server.persistentVolume.enabled=true,prometheus.server.persistentVolume.size=1Gi
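A long --set list is easy to mistype. The same settings can instead live in a values file passed with helm install -f. This is a minimal sketch, assuming bash. The helper `write_loki_values` and the file name `loki-values.yaml` are hypothetical, and the keys only mirror the --set flags of the install command:

```bash
# Hypothetical helper: write the loki-stack settings as a Helm values file.
write_loki_values() {
  local out=${1:-loki-values.yaml}
  cat > "$out" <<'EOF'
grafana:
  enabled: true
  persistence:
    enabled: true
    size: 1Gi
prometheus:
  enabled: true
  server:
    retention: 2d
    persistentVolume:
      enabled: true
      size: 1Gi
  alertmanager:
    persistentVolume:
      enabled: true
      size: 1Gi
loki:
  persistence:
    enabled: true
    size: 1Gi
  config:
    table_manager:
      retention_deletes_enabled: true
      retention_period: 48h
EOF
}
```

Usage: `write_loki_values loki-values.yaml && helm install loki grafana/loki-stack -f loki-values.yaml`.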

We have enabled persistence for Loki, so a persistent volume (PV) of the specified size is created to store the logs. PVs are also created for Prometheus metrics, Grafana data (e.g. dashboards) and Alertmanager data. Prometheus metrics and Loki logs are retained for 2 days (prometheus.server.retention=2d and retention_period=48h). Run the command below to get the default username and password.

kubectl get secret loki-grafana -o go-template='{{range $k,$v := .data}}{{printf "%s: " $k}}{{if not $v}}{{$v}}{{else}}{{$v | base64decode}}{{end}}{{"\n"}}{{end}}'
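If you only need the password, you can read a single key from the secret. This is a minimal sketch, assuming bash, the release name loki (so the secret is loki-grafana), and the Grafana chart's usual admin-password key. `grafana_admin_password` is a hypothetical helper name:

```bash
# Hypothetical helper: print only the Grafana admin password.
# $1: namespace the chart was installed into (default: "default").
grafana_admin_password() {
  local ns=${1:-default}
  kubectl get secret --namespace "$ns" loki-grafana \
    -o jsonpath='{.data.admin-password}' | base64 --decode
  echo   # the decoded value has no trailing newline
}
```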

A service called loki-grafana is created. Expose it using the NodePort or LoadBalancer service type if you need to access it over the internet.
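One way to change the service type is to patch the existing service in place. This is a minimal sketch, assuming bash and kubectl pointed at the namespace the chart was installed in. `expose_service` is a hypothetical helper name:

```bash
# Hypothetical helper: switch an existing service to NodePort or LoadBalancer.
# $1: service name, $2: NodePort (default) or LoadBalancer
expose_service() {
  local svc=$1 type=${2:-NodePort}
  case $type in
    NodePort|LoadBalancer) ;;
    *) echo "unsupported service type: $type" >&2; return 1 ;;
  esac
  kubectl patch service "$svc" -p "{\"spec\":{\"type\":\"$type\"}}"
}
```

After `expose_service loki-grafana LoadBalancer`, `kubectl get service loki-grafana` shows the assigned node port or external address. For access from your own machine only, `kubectl port-forward svc/loki-grafana 3000:80` avoids exposing Grafana at all. This assumes the chart's default service port of 80.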

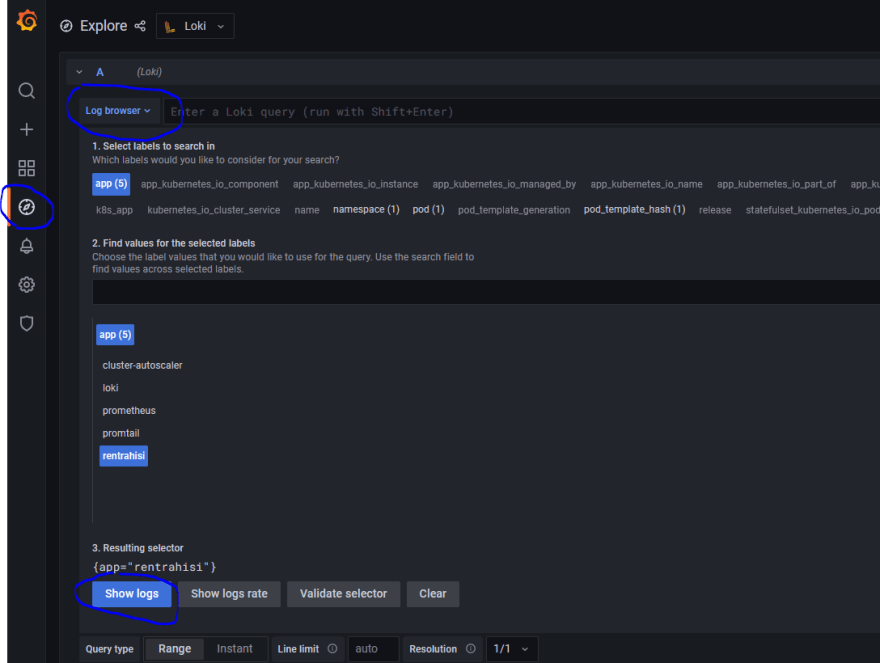

Log in with the admin username and password retrieved above. Open the Explore menu item on the left, make sure Loki is selected in the data source dropdown at the top left, then click Log browser. You will be able to see your cluster logs.
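LogQL queries can also be run outside Grafana through Loki's HTTP API, which is handy for scripting. This is a minimal sketch, assuming bash, curl, and Loki reachable at $LOKI_URL (default http://localhost:3100, e.g. via `kubectl port-forward svc/loki 3100:3100`). `loki_query_range` is a hypothetical helper name:

```bash
# Hypothetical helper: run a LogQL query via Loki's query_range endpoint.
# Without start/end parameters Loki defaults to roughly the last hour.
# $1: LogQL query, $2: max number of lines (default 100)
loki_query_range() {
  local base=${LOKI_URL:-http://localhost:3100}
  curl -fsS -G "$base/loki/api/v1/query_range" \
    --data-urlencode "query=$1" \
    --data-urlencode "limit=${2:-100}"
}
```

For example, `loki_query_range '{app="rentrahisi"}' 20` returns the matching log lines as JSON.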

Loki uses the LogQL query language. Here is a sample query:

{app="rentrahisi"} != "ELB-HealthChecker/2.0" | pattern `<src_ip> <_> - - <time> "<method> <uri> <http_version>" <response_code> <response_size> <referrer> <user_agent>` | response_code = "200"

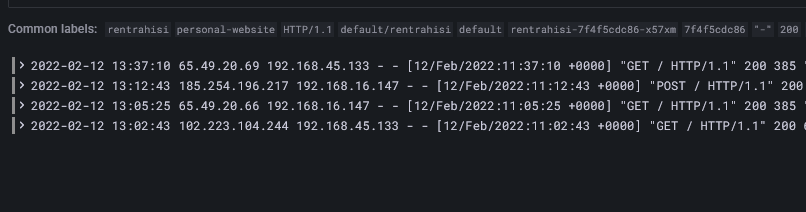

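This queries logs from pods labelled app=rentrahisi (a deployment called rentrahisi) and drops lines containing the string "ELB-HealthChecker/2.0". The pattern stage then parses Apache access logs like the one below into fields such as src_ip, method, uri and response_code.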

2022-02-07 12:36:54

120.1.3.4 192.168.26.38 - - [07/Feb/2022:10:36:54 +0000] "GET /favicon.ico HTTP/1.1" 404 460 "http://ingress.kayandal.awsps.myinstance.com/" "Mozilla/5.0 (Windows NT 10.0; Win64; x64; rv:91.0) Gecko/20100101 Firefox/91.0

Finally, response_code = "200" keeps only requests that returned HTTP 200. The sample line above returned 404, so it would be filtered out.
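Before putting a query into Grafana, it can help to check locally which lines it should keep. The following is a rough local approximation of that LogQL query using a bash regex, not Loki's own pattern parser. It assumes the field layout of the sample line (two addresses, then "- -"). The helpers `parse_access_line` and `filter_200` are hypothetical:

```bash
# Local approximation of the LogQL query (not Loki itself):
#   != "ELB-HealthChecker/2.0"  -> skip health-check lines
#   | pattern `...`             -> split an Apache-style line into fields
#   | response_code = "200"     -> keep only HTTP 200 responses

# Print selected fields of one access-log line; return 1 if it does not match.
parse_access_line() {
  local line=$1
  local re='^([^ ]+) ([^ ]+) - - \[([^]]+)] "([A-Z]+) ([^ ]+) ([^"]+)" ([0-9]{3}) ([0-9]+|-)'
  if [[ $line =~ $re ]]; then
    printf 'src_ip=%s method=%s uri=%s response_code=%s\n' \
      "${BASH_REMATCH[1]}" "${BASH_REMATCH[4]}" "${BASH_REMATCH[5]}" "${BASH_REMATCH[7]}"
  else
    return 1
  fi
}

# Read lines on stdin; print parsed fields for non-health-check 200 responses.
filter_200() {
  local line fields
  while IFS= read -r line || [[ -n $line ]]; do
    if [[ $line == *"ELB-HealthChecker/2.0"* ]]; then
      continue
    fi
    if fields=$(parse_access_line "$line") && [[ $fields == *" response_code=200" ]]; then
      printf '%s\n' "$fields"
    fi
  done
}
```

Piping a saved log file through `filter_200` gives a quick preview of what the Grafana query should return.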

METRICS

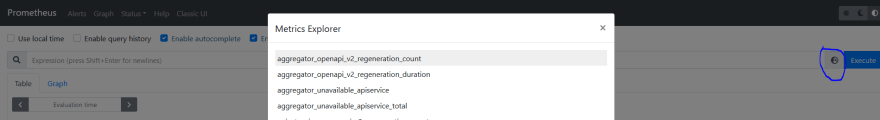

We will use Prometheus for metrics. Expose the service called loki-prometheus-server using the LoadBalancer or NodePort service type. You can then search for metrics in the Prometheus web UI.
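The same PromQL queries can also be sent to Prometheus's HTTP API, for example from a script. This is a minimal sketch, assuming bash, curl, and Prometheus reachable at $PROM_URL (default http://localhost:9090, e.g. via `kubectl port-forward svc/loki-prometheus-server 9090:80`, assuming the chart's default service port of 80). `prom_query` is a hypothetical helper name:

```bash
# Hypothetical helper: run an instant PromQL query against /api/v1/query.
# $1: PromQL expression; the response is Prometheus's JSON result.
prom_query() {
  local base=${PROM_URL:-http://localhost:9090}
  curl -fsS -G "$base/api/v1/query" --data-urlencode "query=$1"
}
```

For example, `prom_query 'up'` lists every scrape target with 1 (up) or 0 (down).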

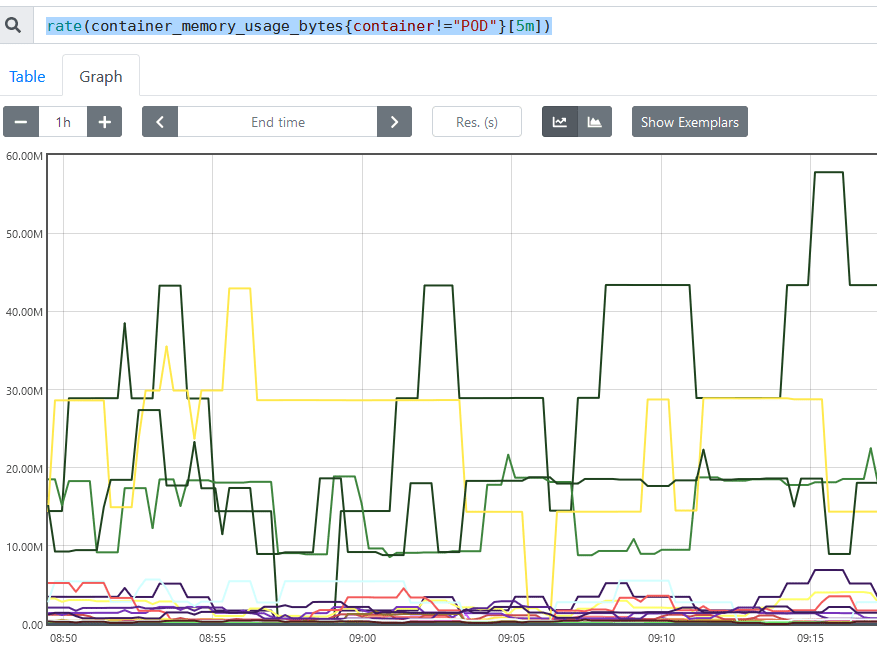

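We can use the query below to get container memory usage. container_memory_usage_bytes is a gauge, so it is queried directly. rate() is meant for counters and would only show how fast memory usage is changing.

container_memory_usage_bytes{container!="POD"}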

The same method can be used to graph other metrics. We can see a list of recommended metrics to monitor here.

We can complement Prometheus with Grafana, which can use Prometheus as a data source to build information-rich dashboards in a user-friendly visual format.

In the left menu, click Create, then Add new panel.

Select Prometheus as the data source in the dropdown, then enter the PromQL query below (taken from here) in the metrics browser. It shows each pod's CPU usage as a percentage of its CPU limit, since container_spec_cpu_quota divided by container_spec_cpu_period is the limit in cores.

sum(rate(container_cpu_usage_seconds_total{image!="", container!="POD"}[5m])) by (pod) /
sum(container_spec_cpu_quota{image!="", container!="POD"} /
container_spec_cpu_period{image!="", container!="POD"}) by (pod) * 100
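To make the ratio concrete, here is the same arithmetic for a single pod with illustrative numbers, not real metrics. `cpu_percent` is a hypothetical helper that assumes awk is available:

```bash
# Hypothetical helper reproducing the dashboard formula for one pod:
#   usage_cores / (quota_us / period_us) * 100
# $1: CPU seconds per second, i.e. the value of rate(container_cpu_usage_seconds_total[5m])
# $2, $3: container_spec_cpu_quota and container_spec_cpu_period (microseconds)
cpu_percent() {
  awk -v usage="$1" -v quota="$2" -v period="$3" 'BEGIN {
    if (quota <= 0 || period <= 0) { print "no CPU limit set" > "/dev/stderr"; exit 1 }
    limit = quota / period          # CPU limit in cores
    printf "%.1f\n", usage / limit * 100
  }'
}
```

For example, `cpu_percent 0.25 50000 100000` prints 50.0: the pod uses 0.25 cores out of a 0.5-core limit. Pods without a CPU limit have no meaningful denominator, which is why the helper refuses a non-positive quota.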

Save the dashboard. This process can be repeated for other metrics.

We can also set up alerts for when metrics breach thresholds, e.g.:

changes(kube_pod_container_status_restarts_total[5m])

With an alert condition such as "is above 0", this fires when a container in a pod has restarted within the last 5 minutes.
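If you prefer to alert from Prometheus (the chart also installs Alertmanager) rather than from Grafana, the same expression can go into a Prometheus alerting rule. This is a minimal sketch, assuming bash. The helper, group name, alert name and severity label are hypothetical. How the file is loaded into the chart's Prometheus depends on your values and is not shown:

```bash
# Hypothetical helper: write a Prometheus alerting rule file for container restarts.
write_restart_alert_rule() {
  local out=${1:-pod-restart-alert.yaml}
  # Quoted 'EOF' keeps {{ $labels... }} literal for Prometheus templating.
  cat > "$out" <<'EOF'
groups:
  - name: pod-restarts
    rules:
      - alert: PodContainerRestarted
        expr: changes(kube_pod_container_status_restarts_total[5m]) > 0
        labels:
          severity: warning
        annotations:
          summary: "Container {{ $labels.container }} in pod {{ $labels.pod }} restarted in the last 5 minutes"
EOF
}
```

If promtool is installed, `write_restart_alert_rule && promtool check rules pod-restart-alert.yaml` validates the file.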

I have a Grafana dashboard with common metrics here.
