Hey Dev.to community! 👋

Have you ever wondered which local LLM is better at answering your specific questions? Or if that fancy new model is actually worth the disk space? Well, I've been tinkering with Ollama lately, and I wanted an easy way to put different models head-to-head.

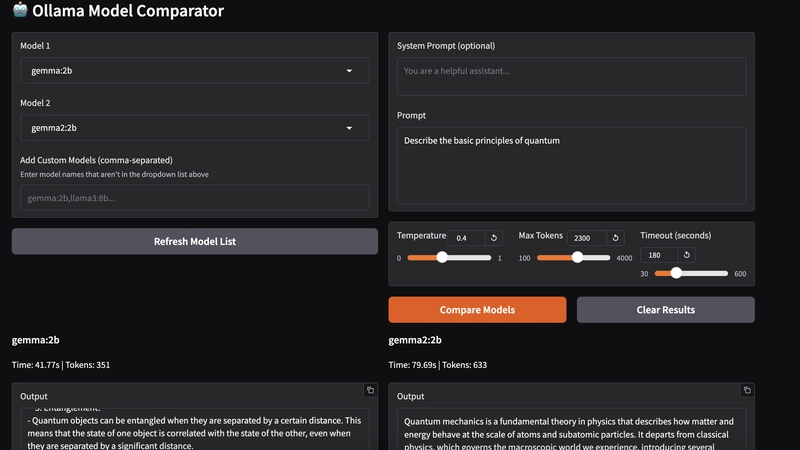

So I built the Ollama Model Comparator - a super simple but surprisingly useful tool that lets you compare responses from different Ollama models side by side.

What does it do?

It's pretty straightforward:

- Pick any two Ollama models from a dropdown (or add custom ones)

- Enter your prompt

- Hit "Compare" and watch the magic happen

- Check out not just the responses, but also the generation time and token counts
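The timing and token numbers can come straight from Ollama's `/api/generate` endpoint. With `"stream": false`, it returns a single JSON object. In that object, `response` holds the text, `eval_count` is the number of generated tokens, `prompt_eval_count` is the number of prompt tokens, and `total_duration`/`eval_duration` are timings in nanoseconds. Here's a minimal sketch of a two-model comparison built on that, assuming Ollama listens on its default `http://localhost:11434`. It uses only the standard library (`urllib`) instead of Requests so it runs anywhere. The helper names (`generate`, `summarize`, `compare`) are hypothetical, not the app's actual code.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434"  # assumption: Ollama's default address


def generate(model: str, prompt: str, base_url: str = OLLAMA_URL) -> dict:
    """Send one non-streaming request to /api/generate and return the parsed JSON."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": False}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read().decode("utf-8"))


def summarize(result: dict) -> dict:
    """Turn Ollama's raw fields into display-friendly numbers."""
    total_s = result.get("total_duration", 0) / 1e9  # nanoseconds -> seconds
    eval_s = result.get("eval_duration", 0) / 1e9
    out_tokens = result.get("eval_count", 0)
    return {
        "text": result.get("response", ""),
        "seconds": round(total_s, 2),
        "prompt_tokens": result.get("prompt_eval_count", 0),
        "output_tokens": out_tokens,
        # Extra metric (hypothetical): generation speed, guarded against division by zero.
        "tokens_per_second": round(out_tokens / eval_s, 1) if eval_s else 0.0,
    }


def compare(model_a: str, model_b: str, prompt: str) -> list[tuple[str, dict]]:
    """Run the same prompt against two models, one after the other."""
    return [(m, summarize(generate(m, prompt))) for m in (model_a, model_b)]
```

For example, `compare("llama3.2", "mistral", "Explain recursion in one sentence.")` returns one summary per model. Both models must already be pulled, and those names are only examples. Running the requests one after the other stops the two models from competing for the same hardware, which would skew the timings.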

Tech stuff (for the curious)

The app is built with:

- Python and Gradio for the UI (super easy to use!)

- Requests library to talk to Ollama's API

- A sprinkle of passion for local LLMs
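Filling the model dropdown is a natural job for a plain HTTP call. Ollama's `GET /api/tags` endpoint lists locally installed models under a `models` key, and each entry has a `name` such as `llama3.2:latest`. The sketch below is stdlib-only and uses hypothetical helper names, so it isn't necessarily how the app does it. It fetches that list and merges in any custom names the user typed, dropping blanks and duplicates while keeping the order.

```python
import json
import urllib.request


def list_local_models(base_url: str = "http://localhost:11434") -> list[str]:
    """Ask Ollama which models are installed (GET /api/tags)."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        data = json.loads(resp.read().decode("utf-8"))
    return model_names(data)


def model_names(tags_response: dict) -> list[str]:
    """Extract model names from an /api/tags payload."""
    return [m["name"] for m in tags_response.get("models", [])]


def dropdown_choices(installed: list[str], custom: list[str]) -> list[str]:
    """Merge installed and custom model names, skipping blanks and duplicates."""
    seen: set[str] = set()
    choices = []
    for name in installed + [c.strip() for c in custom]:
        if name and name not in seen:
            seen.add(name)
            choices.append(name)
    return choices
```

The resulting list could then be passed to something like `gr.Dropdown(choices=...)` in Gradio.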

Getting started in 30 seconds

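- Make sure you have Ollama running, with the models you want to compare already pulled (`ollama pull <model-name>`)

- Clone the repo: `git clone https://github.com/your-username/ollama-model-comparator.git`, then `cd ollama-model-comparator`

- Install deps: `pip install gradio requests`

- Run it: `python app.py`

- Open your browser to http://127.0.0.1:7860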
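Step one (having Ollama running) is the one that's easiest to forget, so a tiny pre-flight check can save some head-scratching. This sketch sends a request to Ollama's `GET /api/tags` endpoint on the default `http://localhost:11434` and reports whether anything answered. The function names are hypothetical and not part of the repo.

```python
import urllib.request


def ollama_is_up(base_url: str = "http://localhost:11434", timeout: float = 2.0) -> bool:
    """Return True if Ollama's /api/tags endpoint answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/api/tags", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError, HTTPError, refused connections and timeouts are all OSError subclasses.
        return False


def startup_message(base_url: str = "http://localhost:11434") -> str:
    """Build a friendly hint to print before launching the UI."""
    if ollama_is_up(base_url):
        return "Ollama is up. Go ahead and run `python app.py`."
    return f"Can't reach Ollama at {base_url}. Start it with `ollama serve` and try again."
```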

That's it! No API keys, no complicated setup.

What's next & how you can help

This is just v1, and I'd love to make it better with your help! Some ideas I'm thinking about:

- Adding response streaming (because waiting is boring)

- Supporting more than two models at once (royal rumble style)

- Adding more detailed comparison metrics

- Making the UI prettier (I'm a backend person, can you tell? 😅)
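For the streaming idea, Ollama already does the heavy lifting. With `"stream": true` (the default), `/api/generate` sends newline-delimited JSON objects. Each object carries a `response` fragment, and the last one has `"done": true`. On the UI side, Gradio can stream output when the event handler is a generator that yields updated values. Here's a rough sketch of the Ollama side, assuming the default port and using hypothetical helper names. It yields the growing answer text, which is the shape a streaming Gradio handler would want.

```python
import json
import urllib.request
from typing import Iterable, Iterator


def accumulate_stream(lines: Iterable[bytes]) -> Iterator[str]:
    """Yield the growing answer text from Ollama's newline-delimited JSON stream."""
    text = ""
    for raw in lines:
        raw = raw.strip()
        if not raw:
            continue  # ignore blank lines, if any
        chunk = json.loads(raw)
        text += chunk.get("response", "")
        yield text
        if chunk.get("done"):
            break  # the final object also carries stats such as eval_count


def stream_generate(model: str, prompt: str, base_url: str = "http://localhost:11434") -> Iterator[str]:
    """Stream a response from /api/generate, yielding the text so far."""
    payload = json.dumps({"model": model, "prompt": prompt, "stream": True}).encode("utf-8")
    req = urllib.request.Request(
        f"{base_url}/api/generate",
        data=payload,
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req, timeout=300) as resp:
        # Iterating the HTTP response yields it line by line.
        yield from accumulate_stream(resp)
```

Keeping the parsing in `accumulate_stream` makes it easy to test without a running server.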

If you're into local LLMs and want to contribute, I'd love your help! The code is simple enough that even if you're new to Python, you can probably jump in.

Try it out!

The project is available on GitHub under the MIT license.

Would love to hear what you think! Which models are you comparing? Any surprising results? Drop a comment below!

Happy comparing! 🚀
