Recently I deployed some Azure DevOps (ADO) agents on Azure Kubernetes Service (AKS), using the new workload identity add-on to authenticate to Azure. We mainly use these agents to deploy other Azure resources with Terraform / Azure CLI / Azure PowerShell within our private network. Builds are done on Microsoft-hosted agents.

Pros:

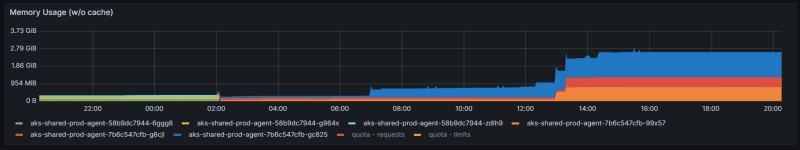

- Resource utilisation is better than with VMs. Agents used for deployment are not very CPU- or memory-intensive, as they mostly do network I/O to talk to various APIs and deploy things.

- You can use KEDA to auto-scale agents based on the number of pending ADO jobs. This lets you scale down during quiet periods to reclaim resources.

- You can deploy agents within your private network. Most of our deployment targets are either behind private link or IP-whitelisted, so you cannot use Microsoft-hosted agents to deploy to them. You must use self-hosted agents on, for example, a network-joined AKS cluster.

- When running deployment pipelines on ADO agents, you have to authenticate to Azure to deploy or manage resources. You can use service principals, but they are not the most secure option because they rely on a Client ID and Secret that must be stored and rotated. Managed identities give you keyless authentication to Azure resources, so there are no principal credentials to rotate. Terraform and all Azure tools support managed identity authentication.

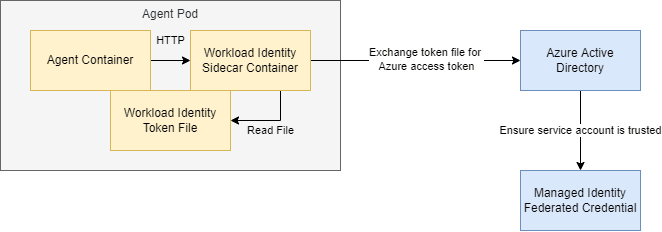

- The workload identity add-on for Kubernetes is the new way of assigning managed identities to pods. It works across multiple Azure subscriptions (and even cross-tenant), and the agent pod doesn't even have to live on Azure Kubernetes Service (it could be EKS or GKE). It is less clunky than aad-pod-identity.

Cons:

- Anyone who can execute jobs on that agent pool will be able to obtain a managed identity access token. If you share agent pools between multiple teams, you shouldn't use this method, because each team would be able to access the other teams' resources.

- For multiple teams you should ideally have 1 managed identity linked to 1 agent pool, and 1 agent pool usable by only 1 team (ADO project).
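
One way to keep that 1:1:1 mapping manageable is to wrap the Terraform from this guide in a module and instantiate it once per team. The sketch below assumes a hypothetical local module at `./modules/ado-agent` that accepts the same input variables used later in this post. The team names and scopes are placeholders, and the `provider` blocks would have to move to the root module, because a module called with `for_each` cannot contain its own provider configuration.

```hcl
# Hypothetical root module: one agent pool + managed identity per team.
variable "ado_token" {
  type      = string
  sensitive = true
}

variable "aks_oidc_issuer_url" {
  type = string
}

# Placeholder teams; the key doubles as the agent pool name.
variable "teams" {
  type = map(object({
    managed_identity_scope = string
  }))
  default = {
    "team-a" = {
      managed_identity_scope = "/subscriptions/<subscription_id>/resourceGroups/<team_a_resource_group>"
    }
    "team-b" = {
      managed_identity_scope = "/subscriptions/<subscription_id>/resourceGroups/<team_b_resource_group>"
    }
  }
}

# "./modules/ado-agent" is an assumed path, not something this post ships.
module "ado_agent" {
  source   = "./modules/ado-agent"
  for_each = var.teams

  pool_name              = each.key
  ado_token              = var.ado_token
  managed_identity_scope = each.value.managed_identity_scope
  aks_oidc_issuer_url    = var.aks_oidc_issuer_url
}
```

Each team then gets its own namespace, service account and managed identity, so a job running on one pool can only obtain a token for that pool's identity.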

This is more of a "fill in the blanks" guide than a step-by-step tutorial. I am going to only briefly mention some setup steps because they are better documented elsewhere.

Set up a basic ADO agent on AKS first:

- Create a basic ADO agent image. This just involves a base Debian image, some dependencies and a pre-made script from Microsoft. My agents are all Linux-based; you can find the guide for Linux here.

- Add your dependencies on top of the base ADO agent image. In my case, I just need Terraform and PowerShell, but you can also install Azure CLI, kubectl, etc.

FROM <Base ADO agent image>

WORKDIR /tools

# To make it easier for build and release pipelines to run apt-get,

# configure apt to not require confirmation (assume the -y argument by default)

ENV DEBIAN_FRONTEND=noninteractive

RUN echo "APT::Get::Assume-Yes \"true\";" > /etc/apt/apt.conf.d/90assumeyes

# Updating paths to tools

ENV PATH="/tools/azure-powershell-core:/tools/terraform:/tools:${PATH}"

# Install basics

# Update the package index and install in a single layer, so a stale cached index is never reused

RUN apt-get update && apt-get install curl zip unzip

# Install Terraform

ADD https://releases.hashicorp.com/terraform/1.4.6/terraform_1.4.6_linux_amd64.zip /tmp/terraform.zip

RUN unzip /tmp/terraform.zip -d terraform

RUN chmod +x terraform/terraform

# Install PowerShell Core

ADD https://github.com/PowerShell/PowerShell/releases/download/v7.1.5/powershell_7.1.5-1.ubuntu.20.04_amd64.deb powershell.deb

RUN dpkg -i powershell.deb ; exit 0

RUN apt-get install -f

WORKDIR /azp

- Push your ADO agent image to a registry of your choice, in my case ACR:

az acr login -n <acr_name>

docker push <acr_name>.azurecr.io/<ado_agent_image>

- You need an AKS cluster with the workload identity add-on enabled. You can create one using Azure CLI as described here. In my case, clusters are deployed using Terraform, so I just set the two properties below to true. If using Terraform, you will also need to output the OIDC issuer URL for use in subsequent steps.

resource "azurerm_kubernetes_cluster" "cluster" {

(...)

oidc_issuer_enabled = true

workload_identity_enabled = true

(...)

}

output "aks_oidc_issuer_url" {

value = azurerm_kubernetes_cluster.cluster.oidc_issuer_url

}
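
If the agents live in a separate Terraform configuration from the cluster, the issuer URL can also be looked up rather than passed around by hand. A minimal sketch, assuming the `azurerm` provider is configured in that configuration; the cluster name and resource group are placeholders:

```hcl
# Look up an existing AKS cluster (placeholder names).
data "azurerm_kubernetes_cluster" "cluster" {
  name                = "<aks_cluster_name>"
  resource_group_name = "<aks_resource_group>"
}

locals {
  # Could be used wherever var.aks_oidc_issuer_url appears later in this post.
  aks_oidc_issuer_url = data.azurerm_kubernetes_cluster.cluster.oidc_issuer_url
}
```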

- Create an ADO agent pool and a personal access token (PAT) that the agents will use to join the pool.
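
The agent pool itself can also be managed in Terraform. This is a sketch only, assuming the `microsoft/azuredevops` provider is added to `required_providers` and authenticated (for example through the `AZDO_PERSONAL_ACCESS_TOKEN` environment variable). The project name is a placeholder. The PAT that agents use to register is created separately, for example in the ADO portal.

```hcl
# Assumes this entry has been added to the existing required_providers block:
#   azuredevops = { source = "microsoft/azuredevops" }
provider "azuredevops" {
  org_service_url = "https://dev.azure.com/<your_org_name>"
}

# Organisation-level agent pool, named after the same pool_name variable.
resource "azuredevops_agent_pool" "pool" {
  name           = var.pool_name
  auto_provision = false
  auto_update    = true
}

# Placeholder project; this is the one team that should use the pool.
data "azuredevops_project" "project" {
  name = "<ado_project_name>"
}

# Exposes the pool to that single project as an agent queue.
resource "azuredevops_agent_queue" "queue" {
  project_id    = data.azuredevops_project.project.id
  agent_pool_id = azuredevops_agent_pool.pool.id
}
```

Keeping `auto_provision` off means the pool is not automatically added to every project, which fits the one-pool-per-team advice above.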

- Deploy your ADO agent image as a Kubernetes deployment. You can use YAML, though I prefer to create everything in Terraform.

### variables.tf

variable "pool_name" {}

variable "ado_token" {}

variable "replicas" {

default = 1

}

variable "requests" {

default = {

cpu = "100m"

memory = "512Mi"

}

}

variable "limits" {

default = {

memory = "2Gi"

}

}

variable "disk_space_limit" {

default = "4Gi"

}

locals {

work_dir = "/mnt/work"

ado_agent = "ado-agent-${var.pool_name}"

}

### providers.tf

terraform {

<backend_configuration>

required_providers {

helm = {

source = "hashicorp/helm"

version = "<3.0.0"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = "<3.0.0"

}

}

}

### kubernetes.tf

resource "kubernetes_namespace_v1" "namespace" {

metadata {

name = "ado-agents-${var.pool_name}"

}

}

resource "kubernetes_secret_v1" "env_vars" {

metadata {

name = local.ado_agent

namespace = kubernetes_namespace_v1.namespace.metadata[0].name

}

data = {

# These variables are used by the script from Step 1.

# Have a look at the script Microsoft provides in their guide and what other config options they have available.

# Each of these secret Key-Value entries is another environment variable mounted to the pod.

AZP_URL = "https://dev.azure.com/<your_org_name>"

# You can source this from input variable, Azure Key Vault, etc.

AZP_TOKEN = var.ado_token

AZP_POOL = var.pool_name

AZP_WORK = local.work_dir

}

}

resource "kubernetes_deployment_v1" "ado_agent" {

metadata {

name = local.ado_agent

namespace = kubernetes_namespace_v1.namespace.metadata[0].name

}

spec {

replicas = var.replicas

selector {

match_labels = {

app = local.ado_agent

}

}

template {

metadata {

labels = {

app = local.ado_agent

}

}

spec {

container {

name = local.ado_agent

image = "<acr_name>.azurecr.io/<ado_agent_image>"

# We link all variables from a secret ref instead of listing them here directly,

# so that your PAT doesn't show up in kubectl describe pod.

env_from {

secret_ref {

name = kubernetes_secret_v1.env_vars.metadata[0].name

}

}

volume_mount {

name = "temp-data"

mount_path = local.work_dir

}

resources {

limits = var.limits

requests = var.requests

}

}

volume {

name = "temp-data"

# Use the node's ephemeral disk space for agent storage.

# Deployment agents do not use much disk space, and the data can be wiped after the pod dies.

empty_dir {

size_limit = var.disk_space_limit

}

}

}

}

}

}
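
The comment next to `AZP_TOKEN` mentions sourcing the PAT from Azure Key Vault. A minimal sketch of that option, assuming the `azurerm` provider is configured (it is not part of the provider list above) and using placeholder vault and secret names:

```hcl
# Existing Key Vault holding the PAT (placeholder names).
data "azurerm_key_vault" "vault" {
  name                = "<key_vault_name>"
  resource_group_name = "<key_vault_resource_group>"
}

data "azurerm_key_vault_secret" "ado_token" {
  name         = "<pat_secret_name>"
  key_vault_id = data.azurerm_key_vault.vault.id
}

locals {
  # Would replace var.ado_token in kubernetes_secret_v1.env_vars.
  ado_token = data.azurerm_key_vault_secret.ado_token.value
}
```

The secret value still ends up in the Terraform state, so the state backend needs to be protected either way.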

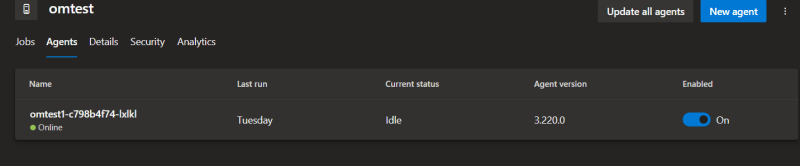

- Run terraform plan and apply while your kubectl context points at your AKS cluster. This should create a new agent and link it to the pool. Microsoft's startup script downloads the latest version of the agent each time a pod starts, registers it against the pool and removes it on pod termination.

PS> kubectl get deployment

NAME READY UP-TO-DATE AVAILABLE AGE

omtest1 1/1 1 1 11d
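
Note that the `kubernetes` provider has to be told where to find that kubectl context. A minimal sketch, assuming a kubeconfig at the default path and a placeholder context name (setting the `KUBE_CONFIG_PATH` environment variable is an alternative):

```hcl
# Point the provider at the same kubeconfig and context kubectl uses.
provider "kubernetes" {
  config_path    = "~/.kube/config"
  config_context = "<aks_context_name>"
}
```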

- At this point, this is just a normal ADO agent with no workload identity linked to it. You would still need a Client ID / Secret or an Azure DevOps Service Connection to use in your pipelines.
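
For the KEDA auto-scaling mentioned in the pros, a `ScaledObject` can target this deployment. The following is a sketch rather than a tested setup: it assumes KEDA is already installed in the cluster, uses KEDA's `azure-pipelines` trigger, and reuses the `AZP_URL` and `AZP_TOKEN` environment variables from the secret above. The replica bounds are arbitrary.

```hcl
# Requires the KEDA CRDs to exist in the cluster before terraform plan.
resource "kubernetes_manifest" "ado_agent_scaler" {
  manifest = {
    apiVersion = "keda.sh/v1alpha1"
    kind       = "ScaledObject"
    metadata = {
      name      = local.ado_agent
      namespace = kubernetes_namespace_v1.namespace.metadata[0].name
    }
    spec = {
      # Scale the agent deployment defined above.
      scaleTargetRef = {
        name = kubernetes_deployment_v1.ado_agent.metadata[0].name
      }
      minReplicaCount = 1 # arbitrary bounds for illustration
      maxReplicaCount = 5
      triggers = [
        {
          type = "azure-pipelines"
          metadata = {
            poolName = var.pool_name
            # Names of env vars on the agent container, not the values themselves.
            organizationURLFromEnv     = "AZP_URL"
            personalAccessTokenFromEnv = "AZP_TOKEN"
            targetPipelinesQueueLength = "1"
          }
        }
      ]
    }
  }
}
```

With this in place, KEDA rather than `var.replicas` controls the replica count, so Terraform may try to reset it on each apply unless `replicas` is removed from the deployment or ignored. Scaling a deployment down can also stop an agent in the middle of a job; KEDA's documentation describes a `ScaledJob` variant for that case.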

Adding workload identity to ADO agent:

Workload identity works by linking a Kubernetes service account to a managed identity via federated credentials. This establishes trust between a specific Kubernetes cluster + namespace + service account and a managed identity. An identity no longer has to be assigned to AKS nodes like in aad-pod-identity.
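
To make the trust relationship concrete: the federated credential matches on the issuer (the cluster's OIDC issuer URL), the audience (`api://AzureADTokenExchange`) and the subject, which Kubernetes sets to `system:serviceaccount:<namespace>:<service account name>`. A small illustration with example values only, using the naming scheme from this post for a pool called `omtest1`:

```hcl
# Example values only; these locals are illustrative and not used elsewhere.
locals {
  example_namespace       = "ado-agents-omtest1"
  example_service_account = "ado-agent-omtest1"

  # Namespace and service account are separated by a colon.
  example_subject = "system:serviceaccount:${local.example_namespace}:${local.example_service_account}"
}

# Evaluates to "system:serviceaccount:ado-agents-omtest1:ado-agent-omtest1"
output "example_federated_subject" {
  value = local.example_subject
}
```

If any of the three values differs from what is in the token the pod presents, Azure rejects the exchange.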

Create a user-assigned managed identity and give it a role assignment over the Azure resources or resource group it should manage. Here is the Terraform from above, modified to add this.

### variables.tf

variable "pool_name" {}

variable "ado_token" {}

variable "replicas" {

default = 1

}

variable "requests" {

default = {

cpu = "100m"

memory = "512Mi"

}

}

variable "limits" {

default = {

memory = "2Gi"

}

}

variable "disk_space_limit" {

default = "4Gi"

}

variable "location" {

default = "westeurope"

}

variable "managed_identity_scope" {}

# Take this from your AKS deployment

variable "aks_oidc_issuer_url" {}

locals {

work_dir = "/mnt/work"

ado_agent = "ado-agent-${var.pool_name}"

}

### providers.tf

terraform {

<backend_configuration>

required_providers {

helm = {

source = "hashicorp/helm"

version = "<3.0.0"

}

kubernetes = {

source = "hashicorp/kubernetes"

version = "<3.0.0"

}

azurerm = {

source = "hashicorp/azurerm"

version = "<4.0.0"

}

}

}

provider "azurerm" {

features {}

}

### azure.tf

resource "azurerm_resource_group" "group" {

name = "rg-identity-${var.pool_name}"

location = var.location

}

resource "azurerm_user_assigned_identity" "identity" {

name = "mi-${var.pool_name}"

resource_group_name = azurerm_resource_group.group.name

location = var.location

}

# This resource will establish trust between Kubernetes cluster, namespace and service account.

# Only pods assigned that service account will be able to obtain an access token from Azure for this corresponding managed identity.

resource "azurerm_federated_identity_credential" "identity" {

resource_group_name = azurerm_user_assigned_identity.identity.resource_group_name

parent_id = azurerm_user_assigned_identity.identity.id

name = "${kubernetes_namespace_v1.namespace.metadata[0].name}-${kubernetes_service_account_v1.service_account.metadata[0].name}"

subject = "system:serviceaccount:${kubernetes_namespace_v1.namespace.metadata[0].name}:${kubernetes_service_account_v1.service_account.metadata[0].name}"

audience = ["api://AzureADTokenExchange"]

issuer = var.aks_oidc_issuer_url

}

# Give the managed identity some role over a resource group it will manage.

# This does not have to be done in Terraform if you don't have permissions to do it.

resource "azurerm_role_assignment" "deployment_group" {

scope = var.managed_identity_scope

role_definition_name = "Contributor"

principal_id = azurerm_user_assigned_identity.identity.principal_id

}

### kubernetes.tf

resource "kubernetes_namespace_v1" "namespace" {

metadata {

name = "ado-agents-${var.pool_name}"

}

}

resource "kubernetes_secret_v1" "env_vars" {

metadata {

name = local.ado_agent

namespace = kubernetes_namespace_v1.namespace.metadata[0].name

}

data = {

AZP_URL = "https://dev.azure.com/<org_name>"

AZP_TOKEN = var.ado_token

AZP_POOL = var.pool_name

AZP_WORK = local.work_dir

}

}

# Pods would normally use namespace default service account.

# Instead, we create a specific service account for these pods and link it to Azure.

# You do not have to automount the service account token.

# Workload identity still mounts a separate signed token file, distinct from the normal Kubernetes service account token file.

# This token can be exchanged with Azure for an Azure access token.

resource "kubernetes_service_account_v1" "service_account" {

metadata {

namespace = kubernetes_namespace_v1.namespace.metadata[0].name

name = local.ado_agent

# These annotations are optional but they will set the "default" managed identity for this service account.

# This is helpful if you plan to have 1:N SA->MI federated credentials.

annotations = {

"azure.workload.identity/client-id" = azurerm_user_assigned_identity.identity.client_id

"azure.workload.identity/tenant-id" = azurerm_user_assigned_identity.identity.tenant_id

}

}

automount_service_account_token = false

}

resource "kubernetes_deployment_v1" "ado_agent" {

metadata {

name = local.ado_agent

namespace = kubernetes_namespace_v1.namespace.metadata[0].name

}

spec {

replicas = var.replicas

selector {

match_labels = {

app = local.ado_agent

}

}

template {

metadata {

# This label must be specified so workload identity add-on is able to process the pod.

labels = {

app = local.ado_agent

"azure.workload.identity/use" = "true"

}

# Workload identity uses the token-exchange method by default, but at the time of writing this was not supported by the Azure Terraform provider.

# If we want to use managed identity authentication in our agent, we must inject a sidecar.

# This extra container will spoof a managed identity endpoint and provide us with an access token.

annotations = {

"azure.workload.identity/inject-proxy-sidecar" = "true"

}

}

spec {

# Link the service account to deployment, so all pods created by the deployment have the SA token.

service_account_name = kubernetes_service_account_v1.service_account.metadata[0].name

automount_service_account_token = false

container {

name = local.ado_agent

image = "<acr_name>.azurecr.io/<ado_agent_image>"

env_from {

secret_ref {

name = kubernetes_secret_v1.env_vars.metadata[0].name

}

}

volume_mount {

name = "temp-data"

mount_path = local.work_dir

}

resources {

limits = var.limits

requests = var.requests

}

}

volume {

name = "temp-data"

empty_dir {

size_limit = var.disk_space_limit

}

}

}

}

}

}

- Once deployed, your agent pod will have an extra sidecar container and init container:

PS> kubectl get pod

NAME READY STATUS RESTARTS AGE

ado-agent-<pool_name>-7b8d79dd65-hwb22 2/2 Running 0 3d22h

PS> kubectl describe pod

Name: ado-agent-<pool_name>-7b8d79dd65-hwb22

Namespace: ado-agents-<pool_name>

(...)

Labels: app=ado-agent-<pool_name>

azure.workload.identity/use=true

Annotations: azure.workload.identity/inject-proxy-sidecar: true

(...)

Init Containers:

azwi-proxy-init:

Image: mcr.microsoft.com/oss/azure/workload-identity/proxy-init:v1.0.0

(...)

Environment:

PROXY_PORT: 8000

AZURE_CLIENT_ID: <managed_identity_client_id>

AZURE_TENANT_ID: <managed_identity_tenant_id>

AZURE_FEDERATED_TOKEN_FILE: /var/run/secrets/azure/tokens/azure-identity-token

AZURE_AUTHORITY_HOST: https://login.microsoftonline.com/

Mounts:

/var/run/secrets/azure/tokens from azure-identity-token (ro)

Containers:

ado-agent-<pool_name>:

Image: <acr_name>.azurecr.io/<ado_agent_image>

(...)

Environment Variables from:

ado-agent-<pool_name> Secret Optional: false

Environment:

AZURE_CLIENT_ID: <managed_identity_client_id>

AZURE_TENANT_ID: <managed_identity_tenant_id>

AZURE_FEDERATED_TOKEN_FILE: /var/run/secrets/azure/tokens/azure-identity-token

AZURE_AUTHORITY_HOST: https://login.microsoftonline.com/

Mounts:

/mnt/work from temp-data (rw)

/var/run/secrets/azure/tokens from azure-identity-token (ro)

azwi-proxy:

Image: mcr.microsoft.com/oss/azure/workload-identity/proxy:v1.0.0

(...)

Port: 8000/TCP

Host Port: 0/TCP

Args:

--proxy-port=8000

--log-level=info

Environment:

AZURE_CLIENT_ID: <managed_identity_client_id>

AZURE_TENANT_ID: <managed_identity_tenant_id>

AZURE_FEDERATED_TOKEN_FILE: /var/run/secrets/azure/tokens/azure-identity-token

AZURE_AUTHORITY_HOST: https://login.microsoftonline.com/

Mounts:

/var/run/secrets/azure/tokens from azure-identity-token (ro)

(...)

Obtaining an access token using workload identity:

- Guides here and here describe how access tokens are obtained via workload identity. Since we use a sidecar to mimic an IMDS endpoint, getting an access token is described here. Let's try it using kubectl exec.

PS> kubectl exec -it ado-agent-<pool_name>-7b8d79dd65-hwb22 -- pwsh

Defaulted container "ado-agent-<pool_name>" out of: ado-agent-<pool_name>, azwi-proxy, azwi-proxy-init (init)

PowerShell 7.2.6

Copyright (c) Microsoft Corporation.

https://aka.ms/powershell

Type 'help' to get help.

PS /azp> Invoke-WebRequest -Uri 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https%3A%2F%2Fmanagement.azure.com%2F' -Headers @{Metadata="true"}

StatusCode : 200

StatusDescription : OK

Content : {"access_token":"eyJ0eXA...

RawContent : HTTP/1.1 200 OK

Server: azure-workload-identity/proxy/v1.0.0 (linux/amd64) 9893baf/2023-03-27-20:58

Date: Tue, 23 May 2023 09:17:48 GMT

Content-Type: application/json

Content-Length: 1707

{"access_to…

Headers : {[Server, System.String[]], [Date, System.String[]], [Content-Type, System.String[]], [Content-Length, System.String[]]}

Images : {}

InputFields : {}

Links : {}

RawContentLength : 1707

RelationLink : {}

PS /azp> (Invoke-RestMethod -Uri 'http://169.254.169.254/metadata/identity/oauth2/token?api-version=2018-02-01&resource=https%3A%2F%2Fmanagement.azure.com%2F' -Headers @{Metadata="true"}).access_token

eyJ0eXA...

We are now ready to run a Terraform pipeline against this new agent pool.

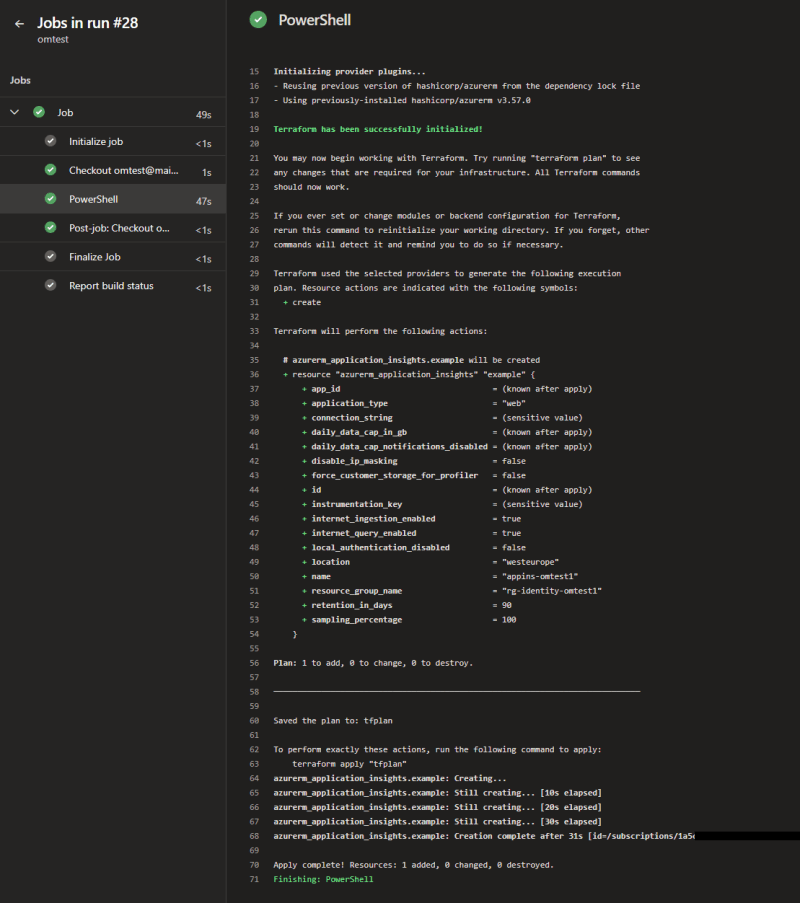

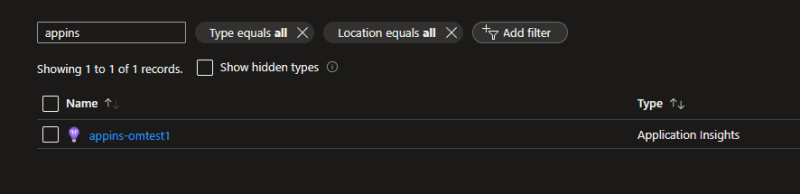

Running a pipeline:

- Create a Terraform repo in Azure DevOps to create some example resources.

### main.tf

terraform {

<backend_configuration>

required_providers {

azurerm = {

source = "hashicorp/azurerm"

version = "<4.0.0"

}

}

}

provider "azurerm" {

# We can just use this flag to authenticate

use_msi = true

subscription_id = "<subscription_id>"

features {}

}

resource "azurerm_application_insights" "example" {

name = "appins-omtest1"

location = "westeurope"

resource_group_name = "rg-identity-omtest1"

application_type = "web"

}

- Create a YAML pipeline to run Terraform:

name: $(Rev:rr)

trigger:

- main

pool: omtest1

steps:

- pwsh: |

    terraform init

    terraform plan -out tfplan

    terraform apply -auto-approve tfplan

  workingDirectory: $(Build.SourcesDirectory)/terraform-deploy-with-managed-identity

- Result:

In summary:

We have our ADO agent running in AKS and use the combination of workload identity and managed identity to achieve keyless authentication to Azure when running Terraform pipelines.
