As the internet grows and services become increasingly “serverless,” one of the big issues facing companies and startups is cloud security misconfiguration. Servers can now be spun up, torn down, and scaled with a few clicks, and as a result security can be overlooked.

Here are some common bucket uses:

- Publicly accessible data - Google LandSat

- Phone or Web App storage - Instagram, Homeroom, Cluster

- Websites - RottenTomatoes, IMDB

Many tools exist for bucket hunting; the ones below are my favorite methods for finding open buckets.

Amazon S3 Buckets

S3 launched on March 14, 2006, and is currently the largest datastore on the internet. The current URL format for all S3 buckets is:

https://<BucketName>.s3.amazonaws.com

It’s simple enough to write your own script to brute force the existence of a bucket with a GET request, but rather than reinvent the wheel let’s use vetted and weathered tools.
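For reference, here is a minimal sketch of what such a homemade check could look like. The function names and the status-code mapping are my own assumptions for illustration (200 for an open listing, 403 for a bucket that exists but denies access, 404 for no such bucket), and the bucket name is assumed to be a valid hostname label.

```python
import urllib.error
import urllib.request


def s3_url(bucket_name: str) -> str:
    """Build the URL format shown above for a given bucket name."""
    return f"https://{bucket_name}.s3.amazonaws.com"


def classify_status(status: int) -> str:
    """Map an HTTP status code to a rough verdict (an assumed convention)."""
    if status == 200:
        return "open"      # bucket exists and its listing is readable
    if status == 403:
        return "private"   # bucket exists but access is denied
    if status == 404:
        return "missing"   # no bucket with this name
    return "unknown"       # redirects, throttling, anything else


def check_bucket(bucket_name: str, timeout: float = 5.0) -> str:
    """Send a single GET request and classify the response."""
    try:
        with urllib.request.urlopen(s3_url(bucket_name), timeout=timeout) as resp:
            return classify_status(resp.status)
    except urllib.error.HTTPError as err:
        # urllib raises on 4xx/5xx responses; the code is still the signal we want.
        return classify_status(err.code)
    except OSError:
        # DNS failures, timeouts, and connection errors.
        return "unknown"
```

Calling `check_bucket` in a loop over a wordlist gives you a bare-bones brute forcer, which is exactly the wheel the tools below have already built and hardened.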

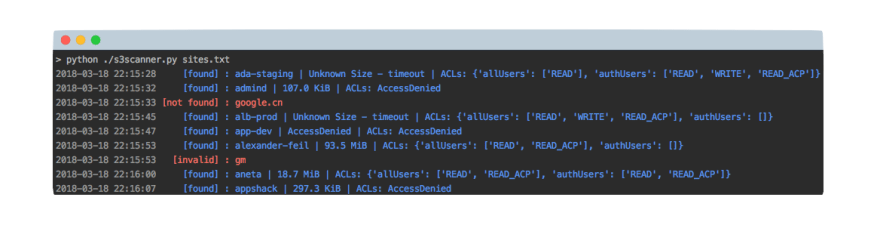

S3Scanner

A simple and effective brute forcer that permutes a user-supplied wordlist, checks whether each bucket exists, and dumps the contents of any open bucket.

GitHub - sa7mon/S3Scanner: Scan for open AWS S3 buckets and dump the contents

Bucket-Stream

Find interesting Amazon S3 Buckets by watching certificate transparency logs. This tool listens to various certificate transparency logs and attempts to find buckets from permutations of the certificate’s domain name.
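To make the permutation idea concrete, here is a small sketch of how candidate bucket names might be derived from a domain. This is not Bucket-Stream’s actual logic; the function name, the keyword list, and the naive "drop the last label" handling of the TLD are all assumptions for illustration.

```python
def candidate_buckets(domain: str, keywords: list[str]) -> list[str]:
    """Derive candidate bucket names from a domain seen in a certificate."""
    # Split into labels and ignore a wildcard prefix such as "*.example.com".
    labels = [label for label in domain.lower().split(".") if label and label != "*"]
    # Naively treat the last label as the TLD and drop it.
    bases = labels[:-1] or labels

    candidates = []
    for base in bases:
        candidates.append(base)
        for word in keywords:
            candidates.append(f"{base}-{word}")
            candidates.append(f"{word}-{base}")

    # Remove duplicates while keeping the original order.
    return list(dict.fromkeys(candidates))


print(candidate_buckets("*.example.com", ["backup"]))
# ['example', 'example-backup', 'backup-example']
```

Each candidate can then be checked against the S3 URL format above.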

Google Cloud Buckets

Google’s cloud service offerings and environment grow daily. They offer free credits for new users and incentives for small businesses. The URL structure doesn’t follow the same pattern as S3, so there are no certificates to listen for and more computationally expensive methods are needed.

http://storage.cloud.google.com/<BucketName>

GCPBucketBrute

A script to enumerate Google Storage buckets, determine what access you have to them, and determine if they can be privilege escalated.

Microsoft Azure Buckets

Not wanting to be left out of the race, Microsoft launched its Azure cloud in early 2010. It offers as many services as its competitors, if not more, but its bucket URLs are harder to find and enumerate. Fortunately, there are tools and documentation that can help us. The URL format is as follows:

https://<ACCOUNT>.blob.core.windows.net/<CONTAINER>/<BLOB>

Brute forcing multiple parts of a string together is computationally complex, so we'll break it up into smaller parts.

For brute forcing we are going to use gobuster to help us enumerate DNS and directory entries.

GitHub - OJ/gobuster: Directory/File, DNS and VHost busting tool written in Go

We’ll work from the smallest set of enumeration targets to the largest:

Account < Container < Blob
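To see why splitting the work helps, here is a rough count of guesses for both approaches. The numbers are hypothetical: I am assuming a 1,000-entry wordlist for each part and, by default, exactly one valid account and one valid container found along the way.

```python
def joint_guesses(accounts: int, containers: int, blobs: int) -> int:
    """Guesses needed when every account/container/blob combination is tried."""
    return accounts * containers * blobs


def staged_guesses(accounts: int, containers: int, blobs: int,
                   account_hits: int = 1, container_hits: int = 1) -> int:
    """Guesses needed when each part is brute forced in turn.

    account_hits is the number of valid accounts found in stage one;
    container_hits is the total number of valid containers found in stage two.
    """
    return accounts + account_hits * containers + container_hits * blobs


print(joint_guesses(1000, 1000, 1000))   # 1000000000
print(staged_guesses(1000, 1000, 1000))  # 3000
```

The staged count grows with the number of hits at each stage, but it stays far below the combined search for any realistic wordlist.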

Brute force Account

Use the gobuster DNS module:

gobuster -m dns -u "blob.core.windows.net" -w <WORDLIST>

Brute force Container

Use the gobuster DIR module:

gobuster -m dir -u "<ACCOUNT>.blob.core.windows.net" -e -s 200,204 -fw

Finding BLOBs

If listing is enabled you can use the same endpoint with some added query parameters.

https://<ACCOUNT>.blob.core.windows.net/<CONTAINER>?restype=container&comp=list

Otherwise you can attempt to brute force filenames using gobuster. (Not recommended)
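When listing is enabled, the list request returns an XML document with one entry per blob. Here is a sketch of building that URL and pulling the blob names out of the response. The function names are mine, and `SAMPLE` is a hand-written, heavily trimmed stand-in for a real response, so treat the exact shape as an assumption and check it against what the service actually returns.

```python
import xml.etree.ElementTree as ET


def list_url(account: str, container: str) -> str:
    """Build the container listing URL shown above."""
    return (f"https://{account}.blob.core.windows.net/"
            f"{container}?restype=container&comp=list")


def blob_names(xml_body) -> list[str]:
    """Extract blob names from a listing response (str or raw bytes)."""
    root = ET.fromstring(xml_body)
    return [name.text for name in root.findall("./Blobs/Blob/Name") if name.text]


# Hand-written sample for illustration only, not captured from a real account.
SAMPLE = """<EnumerationResults ContainerName="photos">
  <Blobs>
    <Blob><Name>cat.png</Name></Blob>
    <Blob><Name>logs/app.log</Name></Blob>
  </Blobs>
  <NextMarker />
</EnumerationResults>"""

print(blob_names(SAMPLE))
# ['cat.png', 'logs/app.log']
```

Passing the raw response bytes to `blob_names` rather than a decoded string lets the XML parser deal with any encoding declaration or byte order mark itself.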

Digital Ocean Spaces

A relative newcomer to the space, Digital Ocean has an “S3-compatible” system they call Spaces, and with this compatibility come the same issues and enumeration methods as S3.

https://<BUCKETNAME>.<DATACENTER>.digitaloceanspaces.com

Digital Ocean offers five global data centers, each of which changes the URL:

sfo2 - https://<BUCKETNAME>.sfo2.digitaloceanspaces.com

nyc3 - https://<BUCKETNAME>.nyc3.digitaloceanspaces.com

ams3 - https://<BUCKETNAME>.ams3.digitaloceanspaces.com

sgp1 - https://<BUCKETNAME>.sgp1.digitaloceanspaces.com

fra1 - https://<BUCKETNAME>.fra1.digitaloceanspaces.com

Bucket names can contain lowercase letters, digits, and hyphens ([a-z], [0-9], [-]) and must be 3 to 63 characters long.
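Putting the data center list and the naming rule together, a candidate name can be validated and expanded into one URL per data center. This is a sketch with names of my own choosing, and it encodes only the rule stated above; any extra naming restrictions the service enforces are not covered.

```python
import re

# The five data centers listed above.
DATACENTERS = ["sfo2", "nyc3", "ams3", "sgp1", "fra1"]

# Lowercase letters, digits, and hyphens, 3 to 63 characters long.
NAME_RE = re.compile(r"[a-z0-9-]{3,63}")


def is_valid_name(name: str) -> bool:
    """Check a candidate against the naming rule stated above."""
    return NAME_RE.fullmatch(name) is not None


def space_urls(bucket_name: str) -> list[str]:
    """Return one candidate URL per data center, or nothing for invalid names."""
    if not is_valid_name(bucket_name):
        return []
    return [f"https://{bucket_name}.{dc}.digitaloceanspaces.com"
            for dc in DATACENTERS]


for url in space_urls("my-space"):
    print(url)
```

Filtering the wordlist this way before sending any requests avoids wasting guesses on names that could never exist.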

Spray & Pray

Don’t care about targeting a specific platform? The tools below will provide you a plethora of data covering (maybe?) every cloud storage provider. Don’t expect them to work the same way or as well as some of the tools above, but they are great when targeting specific websites.

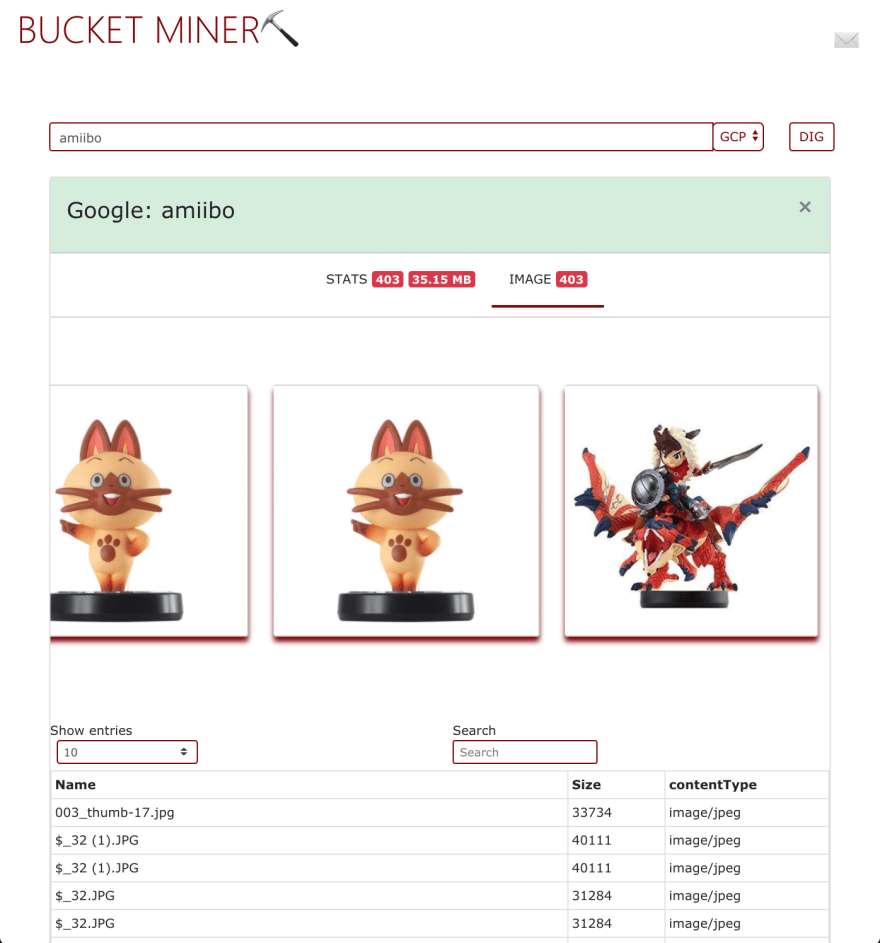

Visualization

All of the tools listed above are run from the command line, and the power users of these services interact with them the same way. Yet the overwhelming majority of the stored files need a GUI to be viewed for content and context analysis.

Bucket Miner brings you that capability: enter a bucket name and, if it is publicly accessible, its contents will be presented to you for easy and intuitive viewing.

These tools should get you started hunting for open buckets. Did I miss a tool you like or some new methods I didn’t talk about? Comment below and let’s chat!

Enjoyed the post? Let me know! 💛🦄🔖
