In this post, I will look at how Amazon DynamoDB fits in a multi-region design.

This post is part of the series The Multi-Region road. You can check out the other parts:

- Part 1 - A reflection on what to consider before starting a multi-region architecture.

- Part 2 - CloudFront failover configuration.

- Part 3 - Amazon API Gateway HTTP API failover and latency configuration.

Amazon DynamoDB

Amazon DynamoDB is a fully managed, serverless NoSQL database designed to provide automatic storage scaling and low latency. It is a good fit when speed and reliability are requirements.

For this article, I am interested only in two features, Global Tables and DAX, each covered in its own section below.

But it is worth mentioning a few things:

Before going into each section, it is essential to highlight that DAX does not work across regions out of the box, because a DAX cluster replicates only within a single region. Therefore, to achieve multi-region, I must deploy a DAX cluster in each region and build my own replication and failover mechanism.

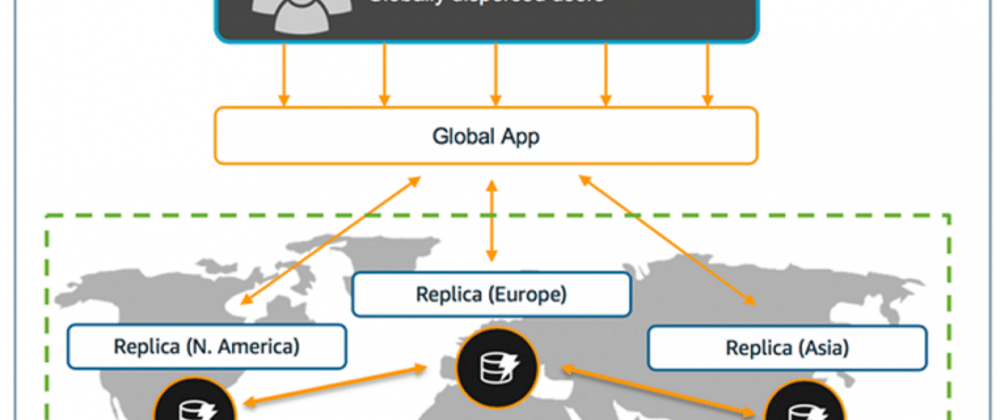

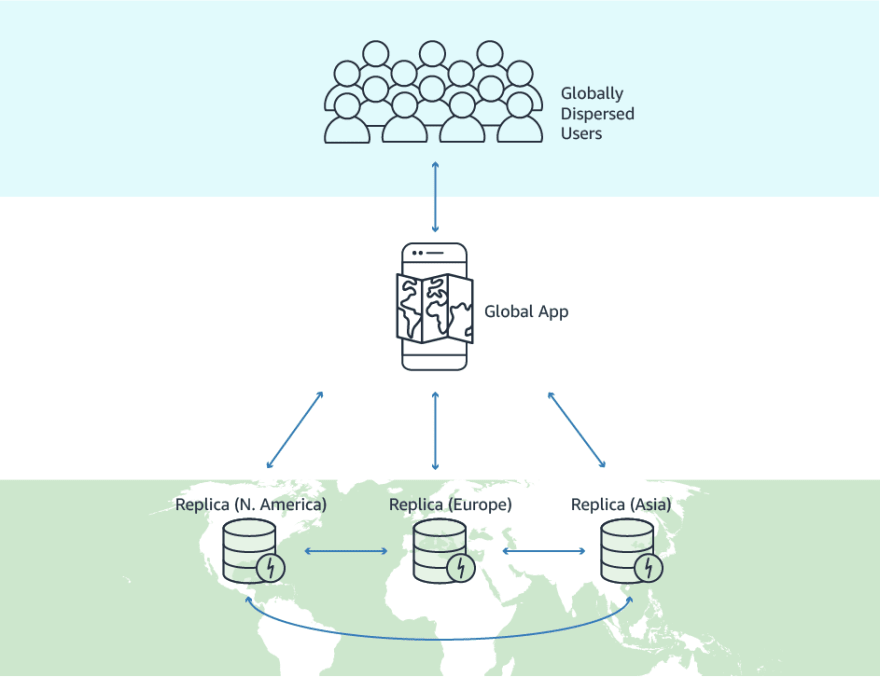

Global Tables

Global tables are a perfect match for a multi-region architecture because they replicate data to the other regions for me in seconds. If a region fails, the data stays available in the remaining replicas, and requests can be routed to the region with the next-best latency.
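To make the "next-best latency region" idea concrete, here is a minimal sketch of the selection logic. In practice this decision belongs to the routing layer in front of the table (for example the failover and latency configuration from the earlier parts), not to application code. The region names match the template in this post, while the latency numbers and the function names `rankRegions` and `pickRegion` are made up for illustration.

```javascript
// Hypothetical latency measurements (ms) from one client to each replica region.
// The region names match the template in this post; the numbers are invented.
const latencies = { 'eu-central-1': 18, 'eu-west-1': 31, 'eu-west-2': 27 };

// Return the healthy regions ordered from lowest to highest latency.
function rankRegions(latencyByRegion, unhealthyRegions = []) {
  const unhealthy = new Set(unhealthyRegions);
  return Object.entries(latencyByRegion)
    .filter(([region]) => !unhealthy.has(region))
    .sort(([, a], [, b]) => a - b)
    .map(([region]) => region);
}

// Pick the best healthy region, or fail loudly when none is left.
function pickRegion(latencyByRegion, unhealthyRegions = []) {
  const [best] = rankRegions(latencyByRegion, unhealthyRegions);
  if (best === undefined) throw new Error('No healthy region available');
  return best;
}

console.log(pickRegion(latencies));                   // eu-central-1
console.log(pickRegion(latencies, ['eu-central-1'])); // eu-west-2
```

Because every replica holds the same data, falling back to the second entry of the ranking is all that is needed from the data layer's point of view.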

If this is not enough from a business point of view, global tables also help with region expansion: all I need to do is add the new region and redeploy the stack, and DynamoDB will do all the heavy lifting.

Moreover, people sometimes overlook DynamoDB as a caching system. As I mentioned, I cannot simply use DAX or a similar in-memory cache in a multi-region setup because:

- the cluster must be deployed in each region

- maintaining the clusters and replicating data between them is costly

- the cluster lives in a VPC, and the VPC configuration could limit the scalability of the application

So the overall TCO will be higher than using global tables as a worldwide cache system. On top of that, thanks to the on-demand capacity mode of DynamoDB, I don't need to worry about an unpredictable volume of requests.
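As a rough sketch of what "DynamoDB as a cache" could look like at the item level, the helpers below build a cache entry with an expiry timestamp and treat expired entries as misses. The attribute `pk` is the key from the template in this post; `value`, `expiresAt`, and the helper names are assumptions of mine. `expiresAt` would have to be configured as the table's TTL attribute, which expects epoch seconds.

```javascript
// Assumed item shape: `pk` is the table key used in this post, while `value`
// and `expiresAt` are hypothetical attribute names chosen for this sketch.
function buildCacheItem(key, value, ttlSeconds, nowMs = Date.now()) {
  return {
    pk: key,
    value: JSON.stringify(value),
    // DynamoDB TTL expects the expiry time as epoch seconds.
    expiresAt: Math.floor(nowMs / 1000) + ttlSeconds,
  };
}

// TTL deletion runs in the background and is not immediate, so a reader
// should still treat an expired item as a cache miss.
function readCacheItem(item, nowMs = Date.now()) {
  if (!item) return undefined;
  const nowSeconds = Math.floor(nowMs / 1000);
  if (item.expiresAt <= nowSeconds) return undefined;
  return JSON.parse(item.value);
}

const now = Date.UTC(2024, 0, 1); // fixed clock for a reproducible example
const item = buildCacheItem('user#42', { name: 'Ada' }, 60, now);
console.log(readCacheItem(item, now + 30000)); // { name: 'Ada' }
console.log(readCacheItem(item, now + 61000)); // undefined
```

The item returned by `buildCacheItem` is what would be written to the table, and `readCacheItem` would wrap whatever the read returns.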

Last but not least is the performance consideration: read and write locally, that is, in your own region, and let DynamoDB propagate the writes to the replica tables in the other regions. If you are worried about concurrent writes and possible conflicts across regions (conflicts are resolved with last-writer-wins, and DynamoDB does not support strongly consistent reads across Regions), one option is to send the writes through a FIFO queue in a single region so that they are applied in a well-defined order.
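The FIFO queue idea can be sketched as follows, assuming the queue is an Amazon SQS FIFO queue. The function only builds the parameters for a `SendMessage` call. The queue URL is a placeholder, and `buildOrderedWrite` and `requestId` are hypothetical names. A consumer in the chosen region would then apply the messages to the table in the order it receives them.

```javascript
// Placeholder queue URL; FIFO queue names end with ".fifo".
const QUEUE_URL =
  'https://sqs.eu-central-1.amazonaws.com/123456789012/table-writes.fifo';

// Build the parameters for an SQS SendMessage call against a FIFO queue.
// `item.pk` is the table key from this post; `requestId` is a caller-supplied
// unique id for this write (an assumption of this sketch).
function buildOrderedWrite(queueUrl, item, requestId) {
  if (!item || typeof item.pk !== 'string') {
    throw new Error('item.pk is required to group the write');
  }
  return {
    QueueUrl: queueUrl,
    MessageBody: JSON.stringify(item),
    // Messages in the same group are delivered in order, so grouping by key
    // serialises the writes to one item without blocking unrelated items.
    MessageGroupId: item.pk,
    // A retry that reuses the same requestId is deduplicated by the queue.
    MessageDeduplicationId: requestId,
  };
}

const params = buildOrderedWrite(QUEUE_URL, { pk: 'user#42', name: 'Ada' }, 'req-001');
console.log(params.MessageGroupId); // user#42
```

Grouping by `pk` rather than using a single group for the whole table keeps the ordering guarantee per item while still allowing writes to different items to proceed in parallel.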

You cannot convert a resource of type AWS::DynamoDB::Table into a resource of type AWS::DynamoDB::GlobalTable by changing its type in your template. Doing so might result in the deletion of your DynamoDB table.

    AWSTemplateFormatVersion: 2010-09-09
    Transform: 'AWS::Serverless-2016-10-31'
    Description: DynamoDb.

    Resources:
    ##########################################################################
    # DynamoDB #
    ##########################################################################
      MyTable:
        Type: AWS::DynamoDB::GlobalTable
        Properties:
          KeySchema:
            - AttributeName: pk
              KeyType: HASH
          AttributeDefinitions:
            - AttributeName: pk
              AttributeType: S
          StreamSpecification:
            StreamViewType: NEW_AND_OLD_IMAGES
          Replicas:
            - Region: eu-central-1
            - Region: eu-west-1
            - Region: eu-west-2
          BillingMode: PAY_PER_REQUEST

    Outputs:
      MyTableName:
        Description: "My Table Name"
        Value: !Ref MyTable
        Export:
          Name: MyTableName

DAX

DynamoDB is designed for scale and performance, but sometimes, depending on the use case, you need an extra push to get a better response time, and this is where DAX comes into play.

Using DAX is pretty much invisible: it is possible to plug it into existing application code with a few changes:

    const AmazonDaxClient = require('amazon-dax-client');
    const dynamodb = new AmazonDaxClient({endpoints: [process.env.DAX_ENDPOINT]});

For a Lambda deployment, there are some extra steps to consider:

- Create a security group for the Lambda

- Add an ingress rule to the DAX security group that allows traffic from this security group

- Attach the Lambda to all the private subnets used by DAX

- Assign the DAXRole (from the template) to the Lambda

As I already mentioned, a DAX cluster replicates only within the same region. Therefore, to achieve multi-region with DAX, I must deploy a DAX cluster in each region and build my own replication and failover mechanism. Other factors to consider when using DAX are:

- It must run inside a VPC, and so must your Lambda, with all the limitations that this configuration brings.

- Like every other cluster, DAX could become a single point of failure in a serverless application. See Scaling a DAX Cluster
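With one DAX cluster per region, each copy of the application has to find the cluster that lives next to it. A minimal sketch, assuming the endpoints are passed in as a JSON map through a hypothetical `DAX_ENDPOINTS` variable (the host names below are placeholders), could use the `AWS_REGION` variable that Lambda sets for every function:

```javascript
// Hypothetical configuration: one DAX endpoint per region, serialised as JSON.
// The host names are placeholders, not real cluster endpoints.
const DAX_ENDPOINTS = JSON.stringify({
  'eu-central-1': 'dax-eu-central-1.example.internal:8111',
  'eu-west-1': 'dax-eu-west-1.example.internal:8111',
  'eu-west-2': 'dax-eu-west-2.example.internal:8111',
});

// Return the endpoint of the DAX cluster deployed in the function's own region.
// `env` is expected to look like process.env inside a Lambda function.
function resolveDaxEndpoint(env) {
  const endpoints = JSON.parse(env.DAX_ENDPOINTS || '{}');
  const endpoint = endpoints[env.AWS_REGION];
  if (!endpoint) {
    throw new Error(`No DAX cluster configured for region ${env.AWS_REGION}`);
  }
  return endpoint;
}

console.log(resolveDaxEndpoint({ AWS_REGION: 'eu-west-2', DAX_ENDPOINTS }));
// dax-eu-west-2.example.internal:8111
```

The returned value would then be passed to the `endpoints` option of the DAX client. A simpler alternative is to set a single `DAX_ENDPOINT` per regional stack, which keeps the lookup out of the code entirely.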

Here is a simple deployment template for DAX.

    AWSTemplateFormatVersion: 2010-09-09
    Description: DAX

    ##########################################################################
    # Parameters #
    ##########################################################################
    Parameters:
      VpcId:
        Description: VPC where DAX will be created
        Type: String
      PrivateSubNet1:
        Description: private subnet of the vpc
        Type: String
      PrivateSubNet2:
        Description: private subnet of the vpc
        Type: String
      PrivateSubNet3:
        Description: private subnet of the vpc
        Type: String

    Resources:
    ##########################################################################
    # DAX Subnet Group #
    ##########################################################################
      DAXSubnetGroup:
        Type: AWS::DAX::SubnetGroup
        Properties:
          Description: Subnet group for DAX
          SubnetIds:
            - !Ref PrivateSubNet1
            - !Ref PrivateSubNet2
            - !Ref PrivateSubNet3

    ##########################################################################
    # DAX Security Group #
    ##########################################################################
      DAXSecurityGroup:
        Type: AWS::EC2::SecurityGroup
        Properties:
          GroupDescription: Security Group for DAX
          VpcId: !Ref VpcId

      DAXSecurityGroupIngress:
        Type: AWS::EC2::SecurityGroupIngress
        DependsOn: DAXSecurityGroup
        Properties:
          GroupId: !GetAtt DAXSecurityGroup.GroupId
          IpProtocol: tcp
          FromPort: 8111
          ToPort: 8111
          SourceSecurityGroupId: !GetAtt DAXSecurityGroup.GroupId

    ##########################################################################
    # DAX IAM Role #
    ##########################################################################
      DAXRole:
        Type: AWS::IAM::Role
        Properties:
          AssumeRolePolicyDocument:
            Statement:
              - Action:
                  - sts:AssumeRole
                Effect: Allow
                Principal:
                  Service:
                    - dax.amazonaws.com
                    - lambda.amazonaws.com
            Version: '2012-10-17'
          RoleName: DAX-Role
          Policies:
            - PolicyName: DAXAccess
              PolicyDocument:
                Version: '2012-10-17'
                Statement:
                  - Effect: Allow
                    Resource: '*'
                    Action:
                      - dax:PutItem
                      - dax:GetItem
                      - dynamodb:DescribeTable
                      - dynamodb:GetItem
                      - dynamodb:PutItem
          ManagedPolicyArns:
            - arn:aws:iam::aws:policy/service-role/AWSLambdaVPCAccessExecutionRole

    ##########################################################################
    # DAX #
    ##########################################################################
      DaxCluster:
        Type: AWS::DAX::Cluster
        Properties:
          IAMRoleARN: !GetAtt DAXRole.Arn
          NodeType: dax.r5.large
          ReplicationFactor: 3
          SecurityGroupIds:
            - !GetAtt DAXSecurityGroup.GroupId
          SubnetGroupName: !Ref DAXSubnetGroup

    Outputs:
      DAXClusterDiscoveryEndpoint:
        Description: "DAX Cluster Discovery Endpoint"
        Value: !GetAtt DaxCluster.ClusterDiscoveryEndpoint
        Export:
          Name: DAXClusterDiscoveryEndpoint
      DAXClusterArn:
        Description: "DAX Cluster Arn"
        Value: !GetAtt DaxCluster.Arn
        Export:
          Name: DAXClusterArn
      DAXSecurityGroup:
        Description: "DAX Security Group"
        Value: !GetAtt DAXSecurityGroup.GroupId
        Export:
          Name: DAXSecurityGroup
      DAXRoleArn:
        Description: "DAX Role Arn"
        Value: !GetAtt DAXRole.Arn
        Export:
          Name: DAXRoleArn

Conclusion

Global tables are easy to use and a great fit for a multi-region architecture. Still, as usual, every application has different needs and use cases, so before using DynamoDB as a cache, keep in mind that its typical latency is around 10 ms when the application is warm. If that is acceptable, it is a valid and more straightforward option. However, if the requirement is 1 ms latency, it is better to consider an in-memory solution like DAX, accepting the extra complexity and maintenance that come with it. Until AWS offers a serverless cache service with multi-region replication capability, all of the above must be considered.
