In this article, I’ll explore the Azure Container Storage service and explain how it relates to the other Kubernetes storage features. Before talking about it, however, let’s first review the Kubernetes storage services.

Containers, as we know, changed the shape of software development. However, their ephemeral nature means any data saved during a container’s lifecycle is lost upon its termination. To ensure data persistence and sharing across containers, Kubernetes introduced the concept of volumes.

What are Volumes?

A volume in Kubernetes is a directory that can be accessed by containers within a pod to store data. The location and underlying storage of this directory depend on the volume type. For example, the emptyDir volume type is a temporary directory on the host node. This directory is accessible to all containers within the pod that mount the volume. However, it is not accessible to containers in other pods, even when those pods run on the same node.

In the example provided, I’ve deployed the following resources in my AKS cluster:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: weather-app
spec:
  replicas: 3
  selector:
    matchLabels:
      app: weather-app
  template:
    metadata:
      labels:
        app: weather-app
    spec:
      volumes:
        # One emptyDir volume per pod, shared by the containers below
        - name: weather-app
          emptyDir: {}
      containers:
        - name: temperature-api
          image: registry.k8s.io/ubuntu-slim:0.1
          volumeMounts:
            - name: weather-app
              mountPath: /temperature
          command: ["/bin/sh"]
          args:
            - "-c"
            - "while true; do timeout 0.5s yes >/dev/null; sleep 0.5s; done"
        - name: humidity-api
          image: registry.k8s.io/ubuntu-slim:0.1
          volumeMounts:
            - name: weather-app
              mountPath: /humidity
          command: ["/bin/sh"]
          args:
            - "-c"
            - "while true; do timeout 0.5s yes >/dev/null; sleep 0.5s; done"
        - name: web-application
          image: registry.k8s.io/ubuntu-slim:0.1
          volumeMounts:
            # The same volume is mounted at two paths in this container
            - name: weather-app
              mountPath: /temperature
            - name: weather-app
              mountPath: /humidity
          command: ["/bin/sh"]
          args:
            - "-c"
            - "while true; do timeout 0.5s yes >/dev/null; sleep 0.5s; done"

If I open a shell in the temperature-api container of any of the pods and list its contents, I can see the temperature directory. This directory matches the mount path specified for the volume in that container.

kubectl get pod

NAME READY STATUS RESTARTS AGE

weather-app-78855b8f7d-bcbql 3/3 Running 0 19h

weather-app-78855b8f7d-nc645 3/3 Running 0 19h

weather-app-78855b8f7d-xmfsm 3/3 Running 0 19h

kubectl get pods weather-app-78855b8f7d-nc645 -o=jsonpath='{.metadata.uid}'

fe46222e-3cfa-4e41-9a07-9ff940435548

kubectl exec -it weather-app-78855b8f7d-nc645 -c temperature-api -- sh

# ls

bin dev home lib64 mnt proc run srv temperature usr

boot etc lib media opt root sbin sys tmp var

# touch temperature/sample.json

# ls temperature

sample.json

If I log into the node and explore the content within the /var/lib/kubelet/pods/<POD-ID> directory I will be able to view not only the weather-app volume but also its content. In this case, that includes the sample.json file I’ve previously created.

$ ls /var/lib/kubelet/pods

0ac8291c-24cd-4b4c-9de5-4c6f37c188c8 792c4a3a-6238-4d56-a477-515f026f1338

0c748dad-91b9-4dfb-90f4-8b3c4847b6f7 8f0cecd1-ffd0-4556-a902-d25cab7e3fd5

1111b71b-0051-4c28-a15c-92580b8b7b72 90414e12-8073-4df9-b5cd-4c723d40a496

2384bdf2-65db-4c15-97b3-640276ea8bc3 a4d74959-0021-436e-b7c6-34f8d249a9ca

2acba766-6202-4288-9588-acb972ca77cb c70bb0b8-06fc-4148-9ea8-e9ff370b85b8

43195978-5512-4c8a-86cd-bb72c8e5d162 ceb29f4c-a18a-45ce-92ed-0ccde4d06586

43b23be2-5fb1-4dcd-9e75-160151b65300 e4bb764c-9d92-4306-9006-66a924197147

4ca23b04-5220-4832-be02-aa77e34fbd28 ea6fb720-b4e7-42f3-ba56-e687992d2c38

4e7cb21b-c0a1-48d1-a03b-ff4d4a71b181 fe46222e-3cfa-4e41-9a07-9ff940435548

$ tree /var/lib/kubelet/pods/fe46222e-3cfa-4e41-9a07-9ff940435548

/var/lib/kubelet/pods/fe46222e-3cfa-4e41-9a07-9ff940435548

├── containers

│ ├── humidity-api

│ │ └── 6e6db8d8

│ ├── temperature-api

│ │ └── 994c912c

│ └── web-application

│ └── c9d6fc18

├── etc-hosts

├── plugins

│ └── kubernetes.io~empty-dir

│ ├── weather-app

│ │ └── ready

│ └── wrapped_kube-api-access-hpbxl

│ └── ready

└── volumes

├── kubernetes.io~empty-dir

│ └── weather-app

│ └── sample.json

└── kubernetes.io~projected

└── kube-api-access-hpbxl

├── ca.crt -> ..data/ca.crt

├── namespace -> ..data/namespace

└── token -> ..data/token

As you can see in this example, volumes make sharing data between the containers of a pod much easier. However, it’s crucial to understand that each replica of the weather-app pod has its own unique emptyDir volume. With three replicas of the weather-app pod deployed, there are three separate emptyDir volumes.
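One way to check that each replica really has its own volume is to list the mounted directory in every pod. The following is a minimal sketch, assuming `kubectl` points at the cluster and the weather-app Deployment above is running; the function name `list_temperature_dirs` is my own and not part of any tool.

```shell
# List the contents of /temperature in every weather-app pod.
# Relies on the app=weather-app label and the temperature-api container
# from the Deployment manifest above.
list_temperature_dirs() {
  for pod in $(kubectl get pods -l app=weather-app -o name); do
    echo "== ${pod}"
    kubectl exec "${pod}" -c temperature-api -- ls /temperature
  done
}
```

After creating sample.json in one pod, calling `list_temperature_dirs` should show the file only under that pod, while the other replicas list an empty directory.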

Volume categories

Volumes fall under three primary categories:

- Ephemeral Volumes: They are tied to a pod’s lifecycle, and all data is lost once the pod terminates. Examples include `emptyDir`, `downwardAPI`, `configMap`, `secret`, and `ephemeral`.

- Persistent Volumes (PVs): They persist beyond a pod’s lifecycle, and the data is maintained even if the pod restarts. Examples span cloud storage services such as `gcePersistentDisk`, `awsElasticBlockStore`, and `azureDisk`, network storage systems such as `nfs` and `cephfs`, and software-defined storage solutions.

- Projected Volumes: They map multiple existing volume sources into the same directory. Examples include `downwardAPI`, `configMap`, `secret`, and `serviceAccountToken`. We’ve already seen an example in the previous code snippet.

$ tree /var/lib/kubelet/pods/fe46222e-3cfa-4e41-9a07-9ff940435548

/var/lib/kubelet/pods/fe46222e-3cfa-4e41-9a07-9ff940435548

├── containers

├── etc-hosts

├── plugins

└── volumes

└── kubernetes.io~projected

└── kube-api-access-hpbxl

├── ca.crt -> ..data/ca.crt

├── namespace -> ..data/namespace

└── token -> ..data/token
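The `kube-api-access-hpbxl` volume above is generated by Kubernetes, but a projected volume can also be declared by hand. As a rough sketch, the script below writes a Pod manifest that combines three sources under one mount path. The pod name `projected-demo` and the ConfigMap `weather-config` are hypothetical; the ConfigMap is assumed to exist already.

```shell
# Write a hypothetical Pod manifest that projects three sources into one directory.
cat <<'EOF' > projected-demo.yaml
apiVersion: v1
kind: Pod
metadata:
  name: projected-demo              # hypothetical name
spec:
  containers:
    - name: app
      image: registry.k8s.io/ubuntu-slim:0.1
      command: ["/bin/sh", "-c", "sleep 3600"]
      volumeMounts:
        - name: combined
          mountPath: /etc/combined
          readOnly: true
  volumes:
    - name: combined
      projected:
        sources:
          - serviceAccountToken:
              path: token
              expirationSeconds: 3600
          - configMap:
              name: weather-config  # assumed to exist already
          - downwardAPI:
              items:
                - path: pod-name
                  fieldRef:
                    fieldPath: metadata.name
EOF
# To try it on a cluster: kubectl apply -f projected-demo.yaml
```

Once the pod is running, `/etc/combined` should contain the token, the ConfigMap keys, and a `pod-name` file side by side.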

Persistent Volumes, Persistent Volume Claims and Storage Classes

Persistent Volumes have different performance characteristics and properties, which are determined by the underlying service. For example, Azure Files offers built-in scalability and Azure AD integration, while NFS provides cross-platform versatility without requiring specific drivers and supports the RWX (ReadWriteMany) access mode, permitting multiple nodes to mount the volume as read-write.

An important consideration about Persistent Volumes (PVs) is that they tell Kubernetes that storage is ready for use by defining the underlying storage service and its configuration, but they do not allocate it to any workload. In the following example, I’ve defined the configuration needed to create a Persistent Volume that points to an Azure Files share.

apiVersion: v1
kind: PersistentVolume
metadata:
  name: azurefile-pv
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  azureFile:
    secretName: azure-storage-account-gtrekter-secret
    shareName: fstraininguks01
    readOnly: true

Once Kubernetes knows about the existence of your Persistent Volume, you can start assigning it to your pods by using a Persistent Volume Claim (PVC). The claim abstracts the storage from the underlying infrastructure: instead of specifying secrets and configuration in the deployment manifest, you simply state your requirements. For example, this PVC requests 5Gi of storage with the ReadWriteOnce access mode, which the previously created PV can satisfy.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azurefile-pvc
spec:
  resources:
    requests:
      storage: 5Gi
  accessModes:
    - ReadWriteOnce
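Note that the claim never names the PV; Kubernetes matches the two on capacity, access mode, and storage class. On a cluster with a default StorageClass, a claim that omits `storageClassName` is typically given that default class and provisioned dynamically instead of binding to a pre-created PV. The sketch below shows one way to pin the claim to `azurefile-pv` explicitly; the file name is my own choice, and the approach assumes the PV was created without a storage class, as in the manifest above.

```shell
# Write a variant of the claim that binds explicitly to the azurefile-pv volume.
cat <<'EOF' > azurefile-pvc-static.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azurefile-pvc
spec:
  storageClassName: ""        # empty string: skip any default StorageClass
  volumeName: azurefile-pv    # bind to the PV defined earlier
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
EOF
# To try it on a cluster: kubectl apply -f azurefile-pvc-static.yaml
```

With `volumeName` set, the claim should either bind to that specific PV or stay Pending, which makes a mismatch easier to spot.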

PV and PVC are based on the precondition that the Kubernetes administrator has manually provisioned the storage defined in the PV and that the resource is reachable. With the introduction of Storage Classes, we can make the entire provisioning dynamic, and in line with the PVC requirements. In this example, whenever a PersistentVolumeClaim that requests this StorageClass is created, Kubernetes will use the parameters specified in the StorageClass to dynamically provision a new Azure Files share.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: azurefile-pvc
spec:
  storageClassName: azurefile
  resources:
    requests:
      storage: 5Gi
  accessModes:
    - ReadWriteOnce
---
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: azurefile
provisioner: kubernetes.io/azure-file
parameters:
  storageAccount: myazurestorageaccountname
  location: eastus
  skuName: Standard_LRS
  secretNamespace: default

Container Storage Interface (CSI)

Prior to version 1.13, Kubernetes used “in-tree” plugins to interface with storage services like Azure Files and AWS Elastic Block Store. These plugins were embedded directly within the Kubernetes binaries. This approach posed several challenges. For example, reliability and security concerns arose due to the inclusion of third-party storage code in the core Kubernetes binaries. It also added to the maintenance burden of Kubernetes maintainers, who had to test and oversee the code for various storage services. Additionally, vendors seeking to introduce support for their storage systems in Kubernetes, or fix a bug in an existing volume plugin, were compelled to conform to the Kubernetes release process, potentially delaying the release of bug fixes or updates.

To address these challenges, Kubernetes introduced the Container Storage Interface (CSI). This standard for exposing arbitrary block and file storage systems allowed vendors to develop, deploy, and update plugins for integrating new or enhancing existing storage systems in Kubernetes without altering the core Kubernetes codebase.

The CSI requires the Kubernetes administrator to install the drivers in the cluster, and many vendors already document the procedure to do so. For example, to enable the CSI storage drivers on an AKS cluster, you can use the following command:

az aks update -n myAKSCluster -g myResourceGroup --enable-disk-driver --enable-file-driver --enable-blob-driver --enable-snapshot-controller

This command activates the Azure Disks CSI driver (--enable-disk-driver), Azure Files CSI driver (--enable-file-driver), Azure Blob Storage CSI driver (--enable-blob-driver), and the Snapshot controller (--enable-snapshot-controller).
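With the Azure Files CSI driver enabled, a StorageClass can point at the CSI provisioner instead of the in-tree `kubernetes.io/azure-file` one used earlier. The following is only a sketch: the class name `azurefile-csi-demo` is hypothetical, and the reclaim policy and binding mode are illustrative choices rather than required values.

```shell
# Write a hypothetical StorageClass that uses the Azure Files CSI driver.
cat <<'EOF' > azurefile-csi-sc.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: azurefile-csi-demo        # hypothetical name
provisioner: file.csi.azure.com   # CSI driver instead of the in-tree plugin
parameters:
  skuName: Standard_LRS
reclaimPolicy: Delete
volumeBindingMode: Immediate
allowVolumeExpansion: true
EOF
# To try it on a cluster: kubectl apply -f azurefile-csi-sc.yaml
```

A PVC would then reference it through `storageClassName: azurefile-csi-demo`, exactly as the earlier claim references `azurefile`.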

Azure Container Storage

Now that we have a foundational understanding of Kubernetes storage mechanisms, let’s move to the recently introduced Azure Container Storage.

Using specific CSI drivers to facilitate cloud storage for containers meant adjusting storage services, initially designed for IaaS workloads, for containerized environments. This adaptation often resulted in operational challenges, increasing risks concerning application availability due to potential bugs or incompatibilities in CSI drivers. Additionally, it could affect scalability and introduce performance delays.

Inspired by OpenEBS, an open-source Kubernetes storage solution, Azure Container Storage introduces truly container-native storage. By providing a managed volume orchestration system using microservice-based storage controllers within a Kubernetes framework, Azure Container Storage separates the storage management layer from both pods and the underlying storage, removing the need for many CSI drivers. By default, a newly provisioned AKS cluster has the following CSI drivers enabled:

$ kubectl get csidrivers.storage.k8s.io

NAME ATTACHREQUIRED PODINFOONMOUNT STORAGECAPACITY TOKENREQUESTS REQUIRESREPUBLISH MODES AGE

disk.csi.azure.com true false false <unset> false Persistent 11m

file.csi.azure.com false true false <unset> false Persistent,Ephemeral 11m

With Azure Container Storage, we need just one CSI driver for all storage types:

$ kubectl get csidrivers.storage.k8s.io

NAME ATTACHREQUIRED PODINFOONMOUNT STORAGECAPACITY TOKENREQUESTS REQUIRESREPUBLISH MODES AGE

containerstorage.csi.azure.com true false false <unset> false Persistent 44h

...

Components

Azure Container Storage components include:

- A Storage Pool: This is an aggregation of storage resources presented as a storage entity for an AKS cluster.

- A Data Services Layer: This layer undertakes responsibilities like replication, encryption, and other supplementary functions that the foundational storage provider might not offer.

- A Protocol Layer: This layer exposes the provisioned volumes to application pods using the NVMe-oF protocol. NVMe over Fabrics (NVMe-oF) is a protocol that extends the NVMe storage interface over network fabrics, which offers a faster attach/detach of PVs.

Installation

The installation of Azure Container Storage on an AKS cluster is pretty straightforward. First, we need to add or upgrade to the latest version of both the aks-preview and k8s-extension CLI extensions.

$ az extension add --upgrade --name aks-preview

$ az extension add --upgrade --name k8s-extension

Register the `Microsoft.ContainerService` and `Microsoft.KubernetesConfiguration` resource providers.

$ az provider register --namespace Microsoft.ContainerService --wait

$ az provider register --namespace Microsoft.KubernetesConfiguration --wait

Previously we had to grant permissions to the Azure Kubernetes Service so that the service was able to provision storage for the cluster, update the existing node pool label to associate it with the correct IO engine for Azure Container Storage, and create a new extension of type `microsoft.azurecontainerstorage` to the Kubernetes cluster.

$ az aks nodepool update --resource-group rg-training-dev-krc --cluster-name aks-training-dev-krc --name agentpool --labels acstor.azure.com/io-engine=acstor

$ export AKS_MI_OBJECT_ID=$(az aks show --name aks-training-dev-krc --resource-group rg-training-dev-krc --query "identityProfile.kubeletidentity.objectId" -o tsv)

$ export AKS_NODE_RG=$(az aks show --name aks-training-dev-krc --resource-group rg-training-dev-krc --query "nodeResourceGroup" -o tsv)

$ az role assignment create --assignee $AKS_MI_OBJECT_ID --role "Contributor" --resource-group "$AKS_NODE_RG"

$ az k8s-extension create --cluster-type managedClusters --cluster-name aks-training-dev-krc --resource-group rg-training-dev-krc --name acstor --extension-type microsoft.azurecontainerstorage --scope cluster --release-train stable --release-namespace acstor

However, with the latest version of the `aks-preview` and `k8s-extension` extensions, we just need to execute the following command. It will take care of the AAD role propagation and everything else.

az aks update --name aks-training-dev-krc --resource-group rg-training-dev-krc --enable-azure-container-storage azureDisk

In this scenario, I’ve activated AzureDisk for container storage. However, there are other options available:

- **Azure Elastic SAN (elasticSan)**: This option allows for storage to be allocated as needed for each volume or snapshot created. It supports simultaneous access from multiple clusters to a single SAN, but each persistent volume can only be connected to by one consumer at any given time.

- **Azure Disks (azureDisk)**: Here, storage allocation is based on the specified size of the container storage pool and the maximum size of each volume.

- **Ephemeral Disk (ephemeralDisk)**: With this choice, AKS identifies the ephemeral storage available on its nodes and utilizes these drives for deploying volumes.

Before getting into an example of Azure Container Storage usage, let’s take a moment to double-check what the extension added to our cluster, starting from the available resource types.

$ kubectl api-resources

NAME SHORTNAMES APIVERSION NAMESPACED KIND

...

challenges acme.cert-manager.io/v1 true Challenge

orders acme.cert-manager.io/v1 true Order

...

certificaterequests cr,crs cert-manager.io/v1 true CertificateRequest

certificates cert,certs cert-manager.io/v1 true Certificate

clusterissuers cert-manager.io/v1 false ClusterIssuer

issuers cert-manager.io/v1 true Issuer

...

configsyncstatuses clusterconfig.azure.com/v1beta1 true ConfigSyncStatus

extensionconfigs ec clusterconfig.azure.com/v1beta1 true ExtensionConfig

capacityprovisionerconfigs containerstorage.azure.com/v1alpha1 true CapacityProvisionerConfig

diskpools dp containerstorage.azure.com/v1alpha1 true DiskPool

storagepools sp containerstorage.azure.com/v1alpha1 true StoragePool

...

etcdclusters etcd etcd.database.coreos.com/v1beta2 true EtcdCluster

...

jaegers jaegertracing.io/v1 true Jaeger

...

alertmanagerconfigs amcfg monitoring.coreos.com/v1alpha1 true AlertmanagerConfig

alertmanagers am monitoring.coreos.com/v1 true Alertmanager

podmonitors pmon monitoring.coreos.com/v1 true PodMonitor

probes prb monitoring.coreos.com/v1 true Probe

prometheuses prom monitoring.coreos.com/v1 true Prometheus

prometheusrules promrule monitoring.coreos.com/v1 true PrometheusRule

servicemonitors smon monitoring.coreos.com/v1 true ServiceMonitor

thanosrulers ruler monitoring.coreos.com/v1 true ThanosRuler

...

blockdeviceclaims bdc openebs.io/v1alpha1 true BlockDeviceClaim

blockdevices bd openebs.io/v1alpha1 true BlockDevice

diskpools dsp openebs.io/v1alpha1 true DiskPool

...

As you can see, we have a bunch of new resource types. Among them are resources specific to both Azure Container Storage and OpenEBS. Next, let’s inspect the Storage Classes.

$ kubectl get storageclass

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

acstor-azuredisk containerstorage.csi.azure.com Delete WaitForFirstConsumer true 28m

acstor-azuredisk-internal disk.csi.azure.com Retain WaitForFirstConsumer true 32m

acstor-azuredisk-internal-azuredisk disk.csi.azure.com Retain WaitForFirstConsumer true 28m

...

$ kubectl describe sc acstor-azuredisk

Name: acstor-azuredisk

IsDefaultClass: No

Annotations:

Provisioner: containerstorage.csi.azure.com

Parameters: acstor.azure.com/storagepool=azuredisk,ioTimeout=60,proto=nvmf,repl=1

AllowVolumeExpansion: True

MountOptions:

ReclaimPolicy: Delete

VolumeBindingMode: WaitForFirstConsumer

Events:

The newly introduced `acstor-azuredisk` Storage Class is specifically designed for the Azure Container Storage operations.

# Example

In this example, we’ll explore how Azure Disks are integrated into a Kubernetes cluster through Azure Container Storage, with a focus on the relationships among the various resources involved. The first step is to create a new Storage Pool.

apiVersion: containerstorage.azure.com/v1alpha1
kind: StoragePool
metadata:
  name: training-azuredisk
  namespace: acstor
spec:
  poolType:
    azureDisk: {}
  resources:
    requests: {"storage": 10Gi}

Executing this configuration results in the creation of a new storage pool resource:

$ kubectl get sp -A

NAMESPACE NAME CAPACITY AVAILABLE USED RESERVED READY AGE

...

acstor training-azuredisk 10737418240 10562019328 175398912 108290048 True 38s

$ kubectl describe sp -n acstor training-azuredisk

Name: training-azuredisk

API Version: containerstorage.azure.com/v1beta1

Kind: StoragePool

...

Spec:

Pool Type:

Azure Disk:

Sku Name: Premium_LRS

Resources:

Requests:

Storage: 10Gi

Zones:

Status:

Available: 10562015232

Available Replicas: 0

Capacity: 10737418240

Conditions:

Last Transition Time: 2024-03-05T03:04:14Z

Message: Storage pool is ready.

Reason: Ready

Status: True

Type: Ready

Last Transition Time: 2024-03-05T03:03:57Z

Message: Storage pool is ready.

Reason: Ready

Status: False

Type: Degraded

Last Transition Time: 2024-03-05T03:03:57Z

Message: StoragePool is scheduled.

Reason: Scheduled

Status: True

Type: PoolScheduled

Max Allocation: 10562015232

Reserved: 108294144

Used: 175403008

Events:
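The capacity figures in this output are raw byte counts, which are easier to compare with the requested size after converting them. Here is a small sketch that does the arithmetic with the values copied from the `describe` output above; the helper name `bytes_to_mib` is my own.

```shell
# Convert a byte count, as printed in the Capacity/Available/Used fields, to whole MiB.
bytes_to_mib() {
  echo $(( $1 / 1024 / 1024 ))
}

# Values copied from the describe output above
capacity=10737418240
available=10562015232
used=175403008

echo "capacity:  $(bytes_to_mib "$capacity") MiB"
echo "available: $(bytes_to_mib "$available") MiB"
echo "used:      $(bytes_to_mib "$used") MiB"
echo "used + available = $(( used + available )) bytes"
```

The capacity works out to 10240 MiB, that is, 10 GiB, and the used and available values add up exactly to the capacity.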

Behind the scenes, Azure Container Storage creates two new Storage Classes: `acstor-training-azuredisk` and `acstor-azuredisk-internal-training-azuredisk`.

$ kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

...

acstor-azuredisk-internal-training-azuredisk disk.csi.azure.com Retain WaitForFirstConsumer true 112s

acstor-training-azuredisk containerstorage.csi.azure.com Delete WaitForFirstConsumer true 112s

...

$ kubectl describe sc acstor-azuredisk-internal-training-azuredisk

Name: acstor-azuredisk-internal-training-azuredisk

IsDefaultClass: No

Annotations:

Provisioner: disk.csi.azure.com

Parameters: skuName=Premium_LRS

AllowVolumeExpansion: True

MountOptions:

ReclaimPolicy: Retain

VolumeBindingMode: WaitForFirstConsumer

Events:

$ kubectl describe sc acstor-training-azuredisk

Name: acstor-training-azuredisk

IsDefaultClass: No

Annotations:

Provisioner: containerstorage.csi.azure.com

Parameters: acstor.azure.com/storagepool=training-azuredisk,ioTimeout=60,proto=nvmf,repl=1

AllowVolumeExpansion: True

MountOptions:

ReclaimPolicy: Delete

VolumeBindingMode: WaitForFirstConsumer

Events:

A Disk Pool serves as a reservoir of Azure Disks, meeting the storage needs within the cluster, managed by the `capacity-provisioner` responsible for provisioning and reclaiming storage.

$ kubectl get dp -A

NAMESPACE NAME CAPACITY AVAILABLE USED RESERVED READY AGE

...

acstor training-azuredisk-diskpool-cbarq 10737418240 10562015232 175403008 108294144 True 4m5s

$ kubectl describe dp training-azuredisk-diskpool-cbarq -n acstor

Name: training-azuredisk-diskpool-cbarq

Namespace: acstor

...

API Version: containerstorage.azure.com/v1beta1

Kind: DiskPool

Spec:

Disk Pool Source:

Azure Disk:

Storage Class Name: acstor-azuredisk-internal-training-azuredisk

Resources:

Requests:

Storage: 10Gi

Status:

Available: 10562015232

Available Replicas: 0

Capacity: 10737418240

Conditions:

Last Transition Time: 2024-03-05T03:04:14Z

Message: StoragePool is ready.

Reason: PoolReady

Status: True

Type: Ready

Last Transition Time: 2024-03-05T03:04:14Z

Message: All resources are Online.

Reason: PoolReady

Status: False

Type: Degraded

Last Transition Time: 2024-03-05T03:03:57Z

Message: StoragePool is scheduled.

Reason: PoolScheduled

Status: True

Type: PoolScheduled

Max Allocation: 10562015232

Reserved: 108294144

Used: 175403008

Events:

As mentioned earlier, Azure Container Storage leverages OpenEBS. Kubernetes, in turn, generates an OpenEBS DiskPool resource, which oversees the creation and deletion of volumes on the underlying storage infrastructure.

$ kubectl get dsp -A

NAMESPACE NAME NODE STATUS CAPACITY USED AVAILABLE RESERVED

acstor csi-2ht4k aks-agentpool-10525397-vmss000002 Online 10737418240 175403008 10562015232 108294144

...

$ kubectl describe dsp -n acstor csi-2ht4k

Name: csi-2ht4k

Namespace: acstor

...

Annotations: acstor.azure.com/expansion-requested: 10737418240

API Version: openebs.io/v1alpha1

Kind: DiskPool

...

Spec:

Disks:

/dev/sdc

Node: aks-agentpool-10525397-vmss000002

Status:

Available: 10562015232

Capacity: 10737418240

Node: aks-agentpool-10525397-vmss000002

Reserved: 108294144

State: Online

Used: 175403008

Events:

Type Reason Age From Message

Normal Created 6m49s dsp-operator Created or imported pool

Normal Online 6m49s dsp-operator Pool online and ready to roll!

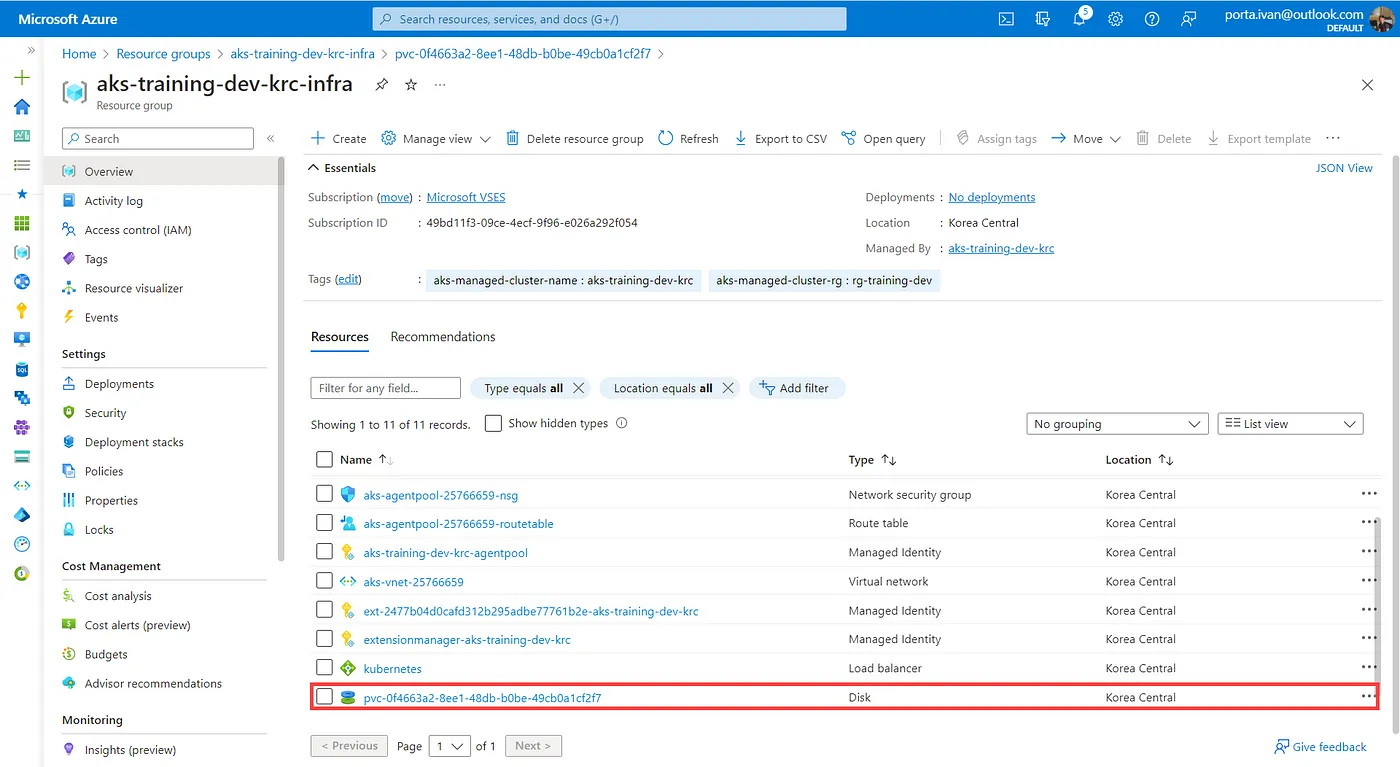

Ultimately, Azure configures the Persistent Volumes (PVs) for us, based on Azure Disks. These disks communicate with Azure through the CSI driver `disk.csi.azure.com`.

$ kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7 10Gi RWO Retain Bound acstor/training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf acstor-azuredisk-internal-training-azuredisk 7m48s

...

$ kubectl describe pv pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7

Name: pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7

Labels:

Annotations: pv.kubernetes.io/provisioned-by: disk.csi.azure.com

volume.kubernetes.io/provisioner-deletion-secret-name:

volume.kubernetes.io/provisioner-deletion-secret-namespace:

Finalizers: [kubernetes.io/pv-protection external-attacher/disk-csi-azure-com]

StorageClass: acstor-azuredisk-internal-training-azuredisk

Status: Bound

Claim: acstor/training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf

Reclaim Policy: Retain

Access Modes: RWO

VolumeMode: Block

Capacity: 10Gi

Node Affinity:

Required Terms:

Term 0: topology.disk.csi.azure.com/zone in []

Message:

Source:

Type: CSI (a Container Storage Interface (CSI) volume source)

Driver: disk.csi.azure.com

FSType:

VolumeHandle: /subscriptions/49bd11f3-09ce-4ecf-9f96-e026a292f054/resourceGroups/aks-training-dev-krc-infra/providers/Microsoft.Compute/disks/pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7

ReadOnly: false

VolumeAttributes: csi.storage.k8s.io/pv/name=pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7

csi.storage.k8s.io/pvc/name=training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf

csi.storage.k8s.io/pvc/namespace=acstor

requestedsizegib=10

skuName=Premium_LRS

storage.kubernetes.io/csiProvisionerIdentity=1709604784805-9137-disk.csi.azure.com

Events:

To utilize the storage provided by PVs, a Persistent Volume Claim (PVC) is essential. This PVC, associated with the `acstor-azuredisk-internal-training-azuredisk` storage class, is linked to the PV (indicated by the Bound status).

$ kubectl get pvc -A

NAMESPACE NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

acstor training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf Bound pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7 10Gi RWO acstor-azuredisk-internal-training-azuredisk 9m4s

$ kubectl describe pvc training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf -n acstor

Name: training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf

Namespace: acstor

StorageClass: acstor-azuredisk-internal-training-azuredisk

Status: Bound

Volume: pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7

Labels: acstor.azure.com/diskpool=training-azuredisk-diskpool-cbarq

acstor.azure.com/managedby=capacity-provisioner

acstor.azure.com/storagepool=training-azuredisk

app.kubernetes.io/component=storage-pool

app.kubernetes.io/managed-by=capacity-provisioner

app.kubernetes.io/part-of=acstor

Annotations: pv.kubernetes.io/bind-completed: yes

pv.kubernetes.io/bound-by-controller: yes

volume.beta.kubernetes.io/storage-provisioner: disk.csi.azure.com

volume.kubernetes.io/selected-node: aks-agentpool-10525397-vmss000002

volume.kubernetes.io/storage-provisioner: disk.csi.azure.com

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 10Gi

Access Modes: RWO

VolumeMode: Block

Used By: diskpool-worker-lbxwt

Events:

Type Reason Age From Message

Normal WaitForFirstConsumer 9m26s persistentvolume-controller waiting for first consumer to be created before binding

Normal ExternalProvisioning 9m26s persistentvolume-controller waiting for a volume to be created, either by external provisioner "disk.csi.azure.com" or manually created by system administrator

Normal Provisioning 9m26s disk.csi.azure.com_csi-azuredisk-controller-69b978cbc6-wvmft_bdc14308-5f21-4fe5-933b-058b2633d611 External provisioner is provisioning volume for claim "acstor/training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf"

Normal ProvisioningSucceeded 9m24s disk.csi.azure.com_csi-azuredisk-controller-69b978cbc6-wvmft_bdc14308-5f21-4fe5-933b-058b2633d611 Successfully provisioned volume pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7

Additionally, an Azure resource of the specified type is created. The events above also confirm the name of the Azure resource being provisioned.

In the details, we observe that the disk PVC is utilized by the pod `diskpool-worker-lbxwt`. This pod accesses the Azure Disk via the PVC, directing all IO operations to the disk. Given its `system-node-critical` priority class, this pod is crucial.

$ kubectl get pods -n acstor

NAME READY STATUS RESTARTS AGE

...

diskpool-worker-lbxwt 1/1 Running 0 14m

$ kubectl describe pod diskpool-worker-lbxwt -n acstor

Name: diskpool-worker-lbxwt

Namespace: acstor

Priority: 2000001000

Priority Class Name: system-node-critical

Service Account: capacity-provisioner-pod-sa

Node: aks-agentpool-10525397-vmss000002/10.224.0.6

Start Time: Tue, 05 Mar 2024 12:04:00 +0900

Labels: acstor.azure.com/diskpool=training-azuredisk-diskpool-cbarq

acstor.azure.com/managedby=capacity-provisioner

acstor.azure.com/storagepool=training-azuredisk

app.kubernetes.io/component=storage-pool

app.kubernetes.io/instance=azurecontainerstorage-diskpool-worker

app.kubernetes.io/managed-by=capacity-provisioner

app.kubernetes.io/name=diskpool-worker

app.kubernetes.io/part-of=acstor

Annotations: cni.projectcalico.org/containerID: a46436e3b06a0aaaac5a3c2a3f9f4a350d9562de1d874b99a083c32f3d26e536

cni.projectcalico.org/podIP: 10.244.2.8/32

cni.projectcalico.org/podIPs: 10.244.2.8/32

Status: Running

IP: 10.244.2.8

IPs:

IP: 10.244.2.8

Controlled By: DiskPool/training-azuredisk-diskpool-cbarq

Containers:

capacity-provisioner-pod:

Container ID: containerd://f6f570b9dcccec1d4058fedfa75d0341ee24226bf028152cc05f118d731427a4

Image: mcr.microsoft.com/acstor/capacity-provisioner:v1.0.3-preview

Image ID: mcr.microsoft.com/acstor/capacity-provisioner@sha256:07c8585b8ecc37a27ad89d6efa8d400d109d823228b3090ca671a8d588942b5e

Port:

Host Port:

Args:

--mode=worker

-zap-log-level=debug

State: Running

Started: Tue, 05 Mar 2024 12:04:14 +0900

Ready: True

Restart Count: 0

Environment:

MY_NODE_NAME: (v1:spec.nodeName)

MY_POD_NAME: diskpool-worker-lbxwt (v1:metadata.name)

RELEASE_NAMESPACE: acstor (v1:metadata.namespace)

Mounts:

/var/run/secrets/kubernetes.io/serviceaccount from serviceaccount (ro)

Devices:

/dev/azure-cp from training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf

Conditions:

Type Status

Initialized True

Ready True

ContainersReady True

PodScheduled True

Volumes:

training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf:

Type: PersistentVolumeClaim (a reference to a PersistentVolumeClaim in the same namespace)

ClaimName: training-azuredisk-diskpool-cbarq-diskpool-pvc-utmernhf

ReadOnly: false

serviceaccount:

Type: Secret (a volume populated by a Secret)

SecretName: capacity-provisioner-pod-sa

Optional: false

QoS Class: BestEffort

Node-Selectors: acstor.azure.com/io-engine=acstor

kubernetes.io/arch=amd64

Tolerations: node.kubernetes.io/not-ready:NoExecute op=Exists for 10s

node.kubernetes.io/unreachable:NoExecute op=Exists for 10s

Topology Spread Constraints: topology.kubernetes.io/zone:DoNotSchedule when max skew 1 is exceeded for selector acstor.azure.com/storagepool=training-azuredisk

Events:

Type Reason Age From Message

Normal Scheduled 15m default-scheduler Successfully assigned acstor/diskpool-worker-lbxwt to aks-agentpool-10525397-vmss000002

Normal SuccessfulAttachVolume 15m attachdetach-controller AttachVolume.Attach succeeded for volume "pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7"

Normal SuccessfulMountVolume 15m kubelet MapVolume.MapPodDevice succeeded for volume "pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7" globalMapPath "/var/lib/kubelet/plugins/kubernetes.io/csi/volumeDevices/pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7/dev"

Normal SuccessfulMountVolume 15m kubelet MapVolume.MapPodDevice succeeded for volume "pvc-0f4663a2-8ee1-48db-b0be-49cb0a1cf2f7" volumeMapPath "/var/lib/kubelet/pods/9063e076-1594-4bc9-ba80-238b576cdc8f/volumeDevices/kubernetes.io~csi"

Normal Pulling 15m kubelet Pulling image "mcr.microsoft.com/acstor/capacity-provisioner:v1.0.3-preview"

Normal Pulled 15m kubelet Successfully pulled image "mcr.microsoft.com/acstor/capacity-provisioner:v1.0.3-preview" in 1.863449186s (1.863461493s including waiting)

Normal Created 15m kubelet Created container capacity-provisioner-pod

Normal Started 15m kubelet Started container capacity-provisioner-pod

With our pool prepared, we can proceed to allocate storage using a Persistent Volume Claim (PVC) derived from the newly established storage class.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: training-azuredisk-pvc
spec:
  accessModes:
    - ReadWriteOnce
  # Storage class created for the training-azuredisk storage pool
  storageClassName: acstor-training-azuredisk
  resources:
    requests:
      storage: 1Gi

This creates a new PVC, which remains in the Pending state until a pod consumes it, because the storage class uses the WaitForFirstConsumer volume binding mode.

$ kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

training-azuredisk-pvc Pending training-azuredisk 19s

$ kubectl describe pvc training-azuredisk-pvc

Name: training-azuredisk-pvc

Namespace: default

StorageClass: acstor-training-azuredisk

Status: Pending

Volume:

Labels:

Annotations:

Finalizers: [kubernetes.io/pvc-protection]

Capacity:

Access Modes:

VolumeMode: Filesystem

Used By:

Events:

Type Reason Age From Message

Normal WaitForFirstConsumer 0s persistentvolume-controller waiting for first consumer to be created before binding

We can then deploy our pod, which requests storage through the `training-azuredisk-pvc` PVC; the storage is supplied by the PV bound to that claim. Notably, the storage class name includes the `acstor-` prefix, which matches the namespace the extension was released into. Consequently, our pod can store data on the provisioned Azure storage resource.

apiVersion: v1
kind: Pod
metadata:
  name: training-pod
spec:
  nodeSelector:
    acstor.azure.com/io-engine: acstor
  volumes:
    - name: managedpv
      persistentVolumeClaim:
        claimName: training-azuredisk-pvc
  containers:
    - name: ubuntu-container
      image: ubuntu:latest
      command: ["/bin/bash", "-c", "--"]
      args: ["tail -f /dev/null"]
      volumeMounts:
        - mountPath: "/volume"
          name: managedpv
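Because the storage class uses the WaitForFirstConsumer binding mode, the claim should move from Pending to Bound only after this pod is scheduled. The sketch below polls the claim until that happens. The function name `wait_for_bound` and its default of 30 attempts with a 2-second delay are my own choices, and it assumes `kubectl` is pointed at the cluster.

```shell
# Poll a PVC until its phase is Bound, or give up after a number of attempts.
# Usage: wait_for_bound <pvc-name> [attempts] [delay-seconds]
wait_for_bound() {
  pvc="$1"
  attempts="${2:-30}"
  delay="${3:-2}"
  i=0
  while [ "$i" -lt "$attempts" ]; do
    # .status.phase is Pending until a volume is bound to the claim
    phase="$(kubectl get pvc "$pvc" -o jsonpath='{.status.phase}')"
    if [ "$phase" = "Bound" ]; then
      echo "$pvc is Bound"
      return 0
    fi
    i=$((i + 1))
    sleep "$delay"
  done
  echo "$pvc is still ${phase:-unknown} after $attempts attempts" >&2
  return 1
}
```

After applying the pod manifest, running `wait_for_bound training-azuredisk-pvc` gives a quick confirmation before opening a shell in the pod.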

Finally, we can access the pod’s shell and create a new file, which will be stored on the corresponding Azure Disk resource.

$ kubectl exec -it training-pod -- sh

ls

bin boot dev etc home lib lib32 lib64 libx32 media mnt opt proc root run sbin srv sys tmp usr var volume

touch volume/sample.json

ls volume

lost+found sample.json

# Resources

- **Official Documentation:** [https://learn.microsoft.com/en-us/azure/storage/container-storage/container-storage-introduction](https://learn.microsoft.com/en-us/azure/storage/container-storage/container-storage-introduction)
