Best Ways to Run Large Language Models (LLMs) on Mac in 2025

Best Ways to Run LLM on Mac: Introduction

As AI technology advances, running large language models (LLMs) locally on personal devices, including Mac computers, has become more feasible. In 2025, Apple’s latest MacBook Pro lineup featuring M4 Pro and M4 Max chips, improved memory bandwidth, and extended battery life provides a solid foundation for running LLMs. Additionally, new software tools and optimizations have made deploying AI models on macOS easier than ever before.

This article explores the best ways to run LLMs on a Mac in 2025, including software options, hardware considerations, and alternative solutions for users seeking high-performance AI computing.

Recommended Software for Running LLMs on Mac

With advancements in model efficiency and optimized software, several tools enable users to run LLMs locally on their Macs:

1. Exo by ExoLabs (Distributed AI Computing)

Exo is an open-source tool that lets users run advanced open-weight LLMs, such as DeepSeek R1 and Qwen 2.5, distributed across multiple Apple devices.

Installation and Usage Guide:

- Install Exo:

  - Open the Terminal on your Mac (Finder > Applications > Utilities > Terminal).

  - Install Exo with the following command. If the install script is unavailable, the project's GitHub README describes installing from source:

curl -fsSL https://install.exo.sh | sh

- Once installed, verify it by running:

exo --version

- Run DeepSeek R1 on Exo:

  - Use the following command to run DeepSeek R1 on a Mac with M-series chips. Model names and flags can differ between Exo releases, so check exo --help if the command is rejected:

exo run deepseek-r1 --devices M4-Pro,M4-Max --quantization 4-bit

  - If you have multiple Apple devices, Exo can distribute the workload across them automatically.

(Exo Labs)

Note: Exo has also made it possible to run the full 671B-parameter DeepSeek R1 model on your own hardware. Using distributed inference, users have demonstrated DeepSeek R1 running across multiple consumer-grade devices such as Mac minis and MacBook Pros. One user built a cluster of 7 M4 Pro Mac minis and 1 M4 Max MacBook Pro, totaling 496GB of unified memory, and ran the model with Exo’s distributed inference and 4-bit quantization. The setup shows that Exo can bring cutting-edge models to home hardware, in line with the project's stated goal of democratizing access to AI.

See the official tweet
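The arithmetic behind that cluster can be sketched in a few lines. This is a rough estimate that counts the weights only; the per-device memory split is an assumption chosen to match the reported 496GB total, and the helper name `weight_size_gb` is illustrative.

```python
# Back-of-the-envelope check: do 4-bit weights for a 671B-parameter model
# fit in the pooled unified memory of the cluster described above?

def weight_size_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8

# ASSUMPTION: the source reports only the 496 GB total. The split below
# (7 x 64 GB Mac minis + 1 x 48 GB MacBook Pro) is one hypothetical
# configuration that adds up to that figure.
cluster_gb = [64] * 7 + [48]

pooled_gb = sum(cluster_gb)
weights_gb = weight_size_gb(671, 4)

print(f"pooled memory : {pooled_gb} GB")
print(f"4-bit weights : {weights_gb:.1f} GB")
print(f"headroom      : {pooled_gb - weights_gb:.1f} GB")
```

Real 4-bit formats store scaling factors alongside the weights, and inference also needs memory for the KV cache and the operating system, so the practical headroom is smaller than this estimate suggests.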

2. Ollama (Simplified LLM Execution)

Ollama is one of the easiest ways to download and run open-source LLMs on macOS with a simple command-line interface.

Installation and Usage Guide:

- Install Ollama:

  - Open the Terminal and run the install script below. If the script reports that it supports Linux only, download the macOS app from ollama.com or install it with Homebrew (brew install ollama) instead:

curl -fsSL https://ollama.com/install.sh | sh

- Confirm installation:

ollama --version

- Run a Model Using Ollama:

  - To run DeepSeek R1, enter the command:

ollama run deepseek-r1

  - For Mistral or other models, replace deepseek-r1 with the model name:

ollama run mistral

  - The first run may take some time as the model is downloaded.

(Ollama)
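Beyond the interactive prompt, Ollama also exposes a local HTTP API, which is handy for scripting. The sketch below assumes the Ollama server is running on its default address (localhost, port 11434) and that the model has already been pulled; the function names `build_request` and `ask` are illustrative, not part of Ollama.

```python
import json
import urllib.request

# Default address of a locally running Ollama server (assumed unchanged).
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(model: str, prompt: str) -> urllib.request.Request:
    """Build a non-streaming generate request for the local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(model: str, prompt: str, timeout: float = 300.0) -> str:
    """Send the prompt and return the generated text.

    Requires a running Ollama server; raises URLError if it is unreachable.
    """
    with urllib.request.urlopen(build_request(model, prompt), timeout=timeout) as resp:
        return json.loads(resp.read())["response"]
```

With the server running, `print(ask("mistral", "Why is the sky blue?"))` should print the model's answer. Setting `"stream": False` keeps the example simple by returning the whole reply in a single JSON object.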

3. LM Studio (Graphical Interface for LLMs)

LM Studio is a user-friendly desktop application that allows Mac users to interact with and run LLMs locally without requiring terminal commands.

Installation and Usage Guide:

- Download and Install LM Studio:

  - Visit LM Studio’s official website and download the macOS version.

  - Install by dragging the app into the Applications folder.

- Run a Model in LM Studio:

  - Open LM Studio from Launchpad.

  - Go to the Model Hub and download a preferred model (e.g., DeepSeek R1 or LLaMA 3).

  - Click on Run Model and start chatting with it.

4. GPT4All (Privacy-Focused Local LLM)

GPT4All is a locally run AI framework that prioritizes privacy while enabling chat-based AI functionality.

Installation and Usage Guide:

- Download GPT4All:

  - Visit GPT4All’s official site and download the macOS version.

  - Install and open the application.

- Running GPT4All:

  - Select a model from the available options.

  - Click Load Model and start using the chatbot.

5. Llama.cpp (Optimized LLM Inference Engine)

Llama.cpp is a lightweight inference engine, originally written to run Meta’s LLaMA models, that runs GGUF-format models efficiently on Apple Silicon Macs.

Installation and Usage Guide:

- Install Homebrew (if not already installed):

  - Open Terminal and run:

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

- Install Llama.cpp:

brew install llama.cpp

- Run a LLaMA Model:

llama-cli -m models/7B.gguf -p "Hello, how can I help you?"

  - Replace models/7B.gguf with the path to the desired model.

Hardware Considerations for Running LLMs on Mac

1. Choosing the Right Mac Model

For best performance, consider the following Mac models based on RAM requirements and model size:

| Mac Model | Recommended RAM | Supported Model Size (Quantized) |
|---|---|---|
| MacBook Air M3 | 16GB | Up to 7B Models (4-bit) |
| MacBook Pro M4 | 32GB | Up to 13B Models (4-bit) |
| Mac Studio M4 Max | 64GB | Up to 70B Models (4-bit) |
| Mac Pro M4 Ultra | 128GB+ | 100B+ Models (4-bit) |
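To sanity-check a row in the table, a rough estimate of the weight footprint is enough. The sketch below counts weights only and assumes that about 75% of unified memory is available to the model; that fraction is an illustrative assumption rather than a documented limit, and the helper names are hypothetical.

```python
def weight_size_gb(params_billion: float, bits_per_weight: float = 4) -> float:
    """Approximate size of the weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billion * bits_per_weight / 8


def fits(params_billion: float, ram_gb: float,
         usable_fraction: float = 0.75, bits_per_weight: float = 4) -> bool:
    """True if the weights fit in the share of RAM assumed usable by the model.

    ASSUMPTION: usable_fraction=0.75 is a placeholder; the KV cache, context
    length, and other running apps all reduce the real budget further.
    """
    return weight_size_gb(params_billion, bits_per_weight) <= ram_gb * usable_fraction


# (RAM in GB, model size in billions of parameters), taken from the table rows.
rows = [(16, 7), (32, 13), (64, 70), (128, 100)]

for ram_gb, params_b in rows:
    need = weight_size_gb(params_b)
    budget = ram_gb * 0.75
    print(f"{params_b:>4}B on {ram_gb:>3} GB: weights {need:5.1f} GB, "
          f"budget {budget:5.1f} GB, share used {need / budget:.0%}")
```

Under these assumptions every row fits, but the 70B model on 64GB uses the largest share of its budget, so it is the configuration most likely to run short once context and other applications are taken into account.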

Alternative AI Computing Solutions

If Mac performance is insufficient for running larger LLMs, consider alternative hardware solutions:

1. NVIDIA Project DIGITS AI Supercomputer

- Dedicated desktop AI computer that, according to NVIDIA, can run models of up to 200B parameters.

- 128GB of unified memory for model inference.

- Cost: Starts at $3,000. (NVIDIA)

2. Cloud-Based LLM Hosting (For Large-Scale Models)

If running models locally is not feasible, cloud-hosted inference APIs can be used:

- OpenAI API (GPT-4 Turbo)

- DeepSeek Cloud API

- Alibaba Qwen API

Best Ways to Run LLM on Mac: Conclusion

In 2025, Mac users have multiple robust options for running LLMs locally, thanks to advancements in Apple Silicon and dedicated AI software. Exo, Ollama, and LM Studio stand out as the most efficient solutions, while GPT4All and Llama.cpp cater to privacy-focused and lightweight needs.

For users needing scalability and raw power, cloud-based APIs and NVIDIA's AI hardware solutions remain viable alternatives.

By selecting the right tool and choosing Mac hardware that matches the model sizes you plan to run, running LLMs efficiently on macOS is more accessible than ever.

Top comments (5)

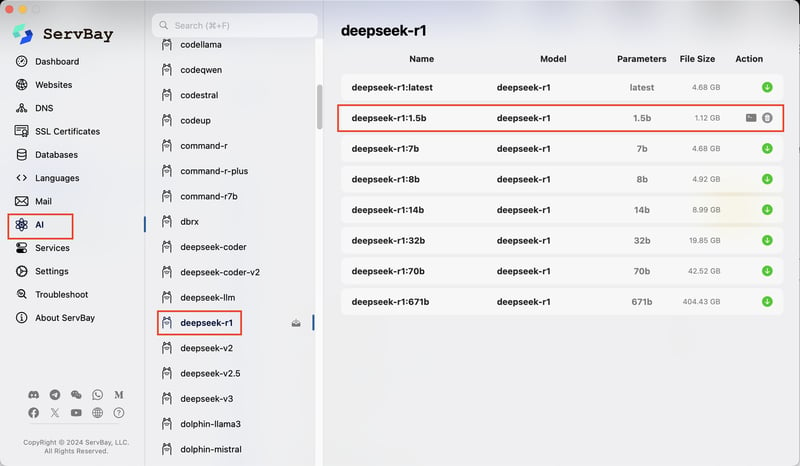

Hey, @mehmetakar! Your post has been really helpful to me! However, I noticed that you used the command line for your operations, but that's actually not necessary! Let me recommend a better tool for you: Servbay. It simplifies the process and supports one-click deployment of LLMs, making it a better choice for Mac users seeking efficient AI computing. Hope this helps you!

Nice! Looking forward to seeing more of these tools.

Hi @mehmetakar, extremely interesting and useful information here!

I was wondering if you think the new Mac mini with the M4 chip and 16GB of RAM could also do the trick for any of the models you shared? Or would you recommend going for the 24GB or 32GB options?

Thanks so much!

Thanks! It depends on the model sizes you plan to use, as I mentioned in the table. If you haven't bought one yet, it is better to choose a higher-RAM option for performance with heavier models. On the other hand, as open-source LLM implementation and development evolve, doing more with less capacity will become possible, so it is completely up to you.

Also, you can ask other enthusiasts on Reddit, or in the discussion forums for Exo, Ollama, and the other tools, where you will find more use cases.

There is no Mac Pro M4 Ultra yet (as suggested in your table above)... Running the 70B model with 64GB is a gamble. At least in LM Studio, it crashes...