*the author called me a kid in one of his passive-aggressive comments.

In this post you will see why security should be taken seriously and why rolling your own security mechanisms instead of relying on industry standards is outright bad.

This is a response to https://dev.to/matpk/cryptographically-protecting-your-spa-fga, which claims that signing server responses with RSA is secure enough to prevent terrible API design from being exploited.

In the article, the author provides a sample application, which I will use as my exploitation target. This is what it looks like:

The name "John Doe" comes from an API response to /users and, as you can see, the response contains a signature that, in theory, would prevent a man-in-the-middle attacker from modifying it.

Well, let's try... Let's analyze the application source code to see what we can find.

We see the hardcoded public key:

We see the effect calling the /users API and passing the response through the validation function:

And the validation function itself:
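For readers who want something to run, here is a minimal sketch of what a signing and validation pair like this could look like. It is an assumption, not the sample application's code: it uses Node's built-in `crypto` module in place of the browser crypto APIs, generates a throwaway key pair instead of a hardcoded public key, and the names `signResponse` and `verifyResponse` are hypothetical.

```javascript
// Sketch only: Node's built-in crypto stands in for the browser crypto APIs.
const crypto = require('crypto');

// Hypothetical throwaway key pair. In the scheme described above, the server
// keeps the private key and the client ships with the public key.
const { publicKey, privateKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
});

// Server side (assumed): serialize the body and sign it with the private key.
function signResponse(body) {
  const data = JSON.stringify(body);
  const signature = crypto
    .sign('sha256', Buffer.from(data), privateKey)
    .toString('base64');
  return { data, signature };
}

// Client side (assumed): check the body against the signature with the public key.
function verifyResponse({ data, signature }) {
  return crypto.verify(
    'sha256',
    Buffer.from(data),
    publicKey,
    Buffer.from(signature, 'base64')
  );
}

const response = signResponse({ name: 'John Doe' });
// A response whose body was changed in transit, with the old signature kept.
const tampered = { ...response, data: JSON.stringify({ name: 'Jane Doe' }) };

console.log(verifyResponse(response)); // true
console.log(verifyResponse(tampered)); // false
```

As long as the client actually runs this check, a modified body fails verification. The rest of the post is about what happens when the client is the attacker.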

Man-in-the-middle attack

I have set up Charles Proxy, an easy-to-use network debugging and tampering tool that will allow us to modify the network response.

https://www.charlesproxy.com/documentation/getting-started/

Charles allows you to intercept and modify network requests and responses. Unfortunately, macOS seems to be configured to not let us proxy requests to localhost, so Charles provides localhost.charlesproxy.com instead, which maps directly to 127.0.0.1. Running the application at localhost.charlesproxy.com:3000 produces an error, as the browser crypto APIs, for obvious reasons, are only available in secure contexts: HTTPS or the special localhost host.

But we can fix that, and it turns out we don't need access to crypto at all for this to work.

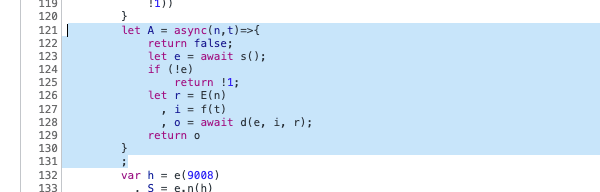

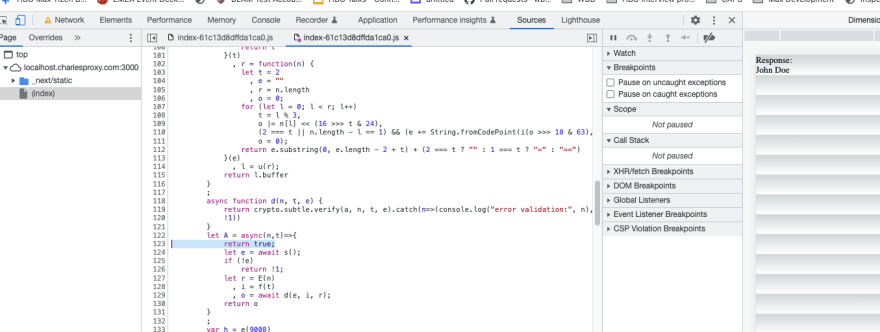

Meet our new friend, Chrome DevTools overrides. With it we can change the code the browser was served, and the page will run with our changes. Beautiful. Our first attack on the front end is to modify the validation function so that it always returns true, regardless of the signature:

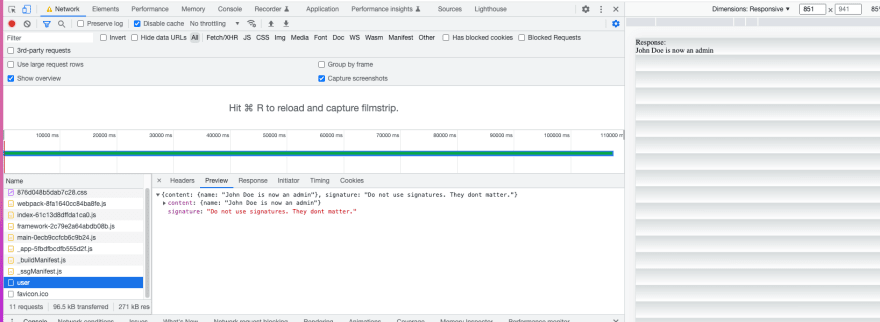
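The effect of that override can be reproduced outside the browser. The following is a minimal sketch under stated assumptions: the `client` object, its `verify` and `render` methods, and the throwaway key pair are all hypothetical stand-ins for the shipped bundle, and Node's `crypto` module replaces the browser crypto APIs.

```javascript
// Sketch only: a hypothetical client whose signature check can be replaced,
// just like a script edited through DevTools overrides.
const crypto = require('crypto');

const { publicKey, privateKey } = crypto.generateKeyPairSync('rsa', {
  modulusLength: 2048,
});

const client = {
  // Assumed shape of the validation function.
  verify(data, signature) {
    return crypto.verify(
      'sha256',
      Buffer.from(data),
      publicKey,
      Buffer.from(signature, 'base64')
    );
  },
  // Refuses to render anything that fails verification.
  render(response) {
    if (!this.verify(response.data, response.signature)) {
      throw new Error('Invalid signature');
    }
    return JSON.parse(response.data).name;
  },
};

// The server signs the genuine body.
const genuine = JSON.stringify({ name: 'John Doe' });
const signature = crypto
  .sign('sha256', Buffer.from(genuine), privateKey)
  .toString('base64');

// The proxy swaps the body but cannot produce a matching signature.
const tampered = { data: JSON.stringify({ name: 'Not John Doe' }), signature };

let rejected = false;
try {
  client.render(tampered);
} catch {
  rejected = true; // the untouched client refuses the modified response
}

// The "override": whoever controls the browser replaces the check.
client.verify = () => true;
const rendered = client.render(tampered);

console.log(rejected); // true
console.log(rendered); // Not John Doe
```

The signature math is never broken here. The check is simply removed, which is always possible when the verifier runs on a machine the attacker controls.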

Note that the application still runs and presents the network response correctly. Now we can modify the server response to whatever we want. In Charles you can do that by using the Breakpoints tool: https://www.charlesproxy.com/documentation/proxying/breakpoints/

You can set a breakpoint on /users by enabling the Breakpoints tool. Once you hit that request, Charles will pause it and show this screen, which allows you to modify the response:

And we're done:

The client is completely oblivious to signatures, as we just return true from the function that verifies them. The client is also completely oblivious to network modifications.

Conclusion

Don't assume your server is secure just because you thought hardening your front-end was a good idea. Don't reinvent the wheel when it comes to security: industry standards are standards because they work, and a homegrown "secure" scheme running on a client the attacker controls will simply not hold.
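To make the first point concrete, here is a minimal sketch of the kind of check that belongs on the server instead. Everything in it is hypothetical: the `sessions` store, the tokens, the roles, and the `deleteUserHandler` function are illustrations, not part of the sample application or of any framework.

```javascript
// Sketch only: the server decides what a caller may do on every request,
// no matter what the front end chose to show or hide.

// Hypothetical in-memory session store: token -> user record.
const sessions = new Map([
  ['token-admin', { name: 'Alice', role: 'admin' }],
  ['token-user', { name: 'John Doe', role: 'user' }],
]);

// Hypothetical handler for a privileged action.
function deleteUserHandler(token, targetName) {
  const user = sessions.get(token);
  if (!user) {
    return { status: 401, body: 'Not authenticated' };
  }
  if (user.role !== 'admin') {
    // A tampered UI can expose the button, but the request still fails here.
    return { status: 403, body: 'Forbidden' };
  }
  return { status: 200, body: `Deleted ${targetName}` };
}

console.log(deleteUserHandler('token-user', 'Alice').status); // 403
console.log(deleteUserHandler('token-admin', 'John Doe').status); // 200
console.log(deleteUserHandler('no-such-token', 'Alice').status); // 401
```

With a check like this in place, it does not matter whether the response to /users was modified in the browser: the modified client gains a button, not a permission.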

Top comments (14)

How you trivially misrepresented what the article claims, ignored the disclaimers made and the conclusion reached, and then compiled the half a dozen hours of guided tinkering and troubleshooting that went through the process of writing this article to pretend it was trivial with the sole intent of ridiculing someone for the crime of asking for feedback.

All of this to finally miss the point. The existence of this article proves the measure works for what it was designed to do (protecting the UI controls against fiddling employees).

Someone able to do this (especially without guidance from the developer) could just as easily find the endpoints in the source code and work from there, no playing with the UI required.

I thought you said the pentester used a tool to alter the responses?

Are your fiddling employees capable enough to install and use the hacking tool that the pentester used, but not capable enough to understand the code?

You miss the point. You can, for example, mess around in the devtools to change the body of incoming responses. You don't need the tool.

I hope you learned something.

I learned that some people are not worth 6 hours of conversation. Thanks for the lesson.

When you ran out of power attacking my ideas the only thing you can do now is attack the person. It seems you learned nothing, which is a shame.

"when you ran out of power attacking my ideas" is a weird way of spelling "trying to disprove your point I ironically proved it".

My goodness. I signed up exclusively to tell you what a sore loser you were. Hopefully not a year later.

Nice clear example, how long did this take to complete?

I had the knowledge to do this in 10 minutes, the time it took to write this article. Took me 6 hours to explain everything to the author and handle his passive-aggressive comments.

While I'm a security enthusiast, my main work area is around front-end architecture. I would expect a well prepared hacker to just laugh at this.

Some 6 hours, with me helping all the way. In the original article you can "follow" the process.

If it makes you feel better, let's say you helped me. Even so, it seems like 6 hours is a pretty low entry barrier to something your pentesters deemed secure enough.

There is no other way of interpreting it. But if it makes you feel better, let's say 6 hours driving with a GPS gets you to your destination just like 6 hours driving taking random turns.

They deemed it secure enough based on the profile of the potential attackers. And as it turns out, this is secure enough because it is industry standard; it is in all regards a simplified version of JWT.

This is still the wrong use-case for JWS though. There's literally no benefit of using signed messages from your server. It's cool and all, you learned a bunch implementing it, but that's it.