I’ve seen a lot of frustration around complex agent frameworks like LangChain. I challenged myself to see how small an agent framework could be if we removed every non-essential piece. The result is Pocket Flow: a 100-line LLM agent framework that keeps only what truly matters. Check it out here: GitHub Link, Documentation

Why Strip It Down?

Complex Vendor or Application Wrappers Cause Headaches

- Hard to Maintain: Vendor APIs evolve (e.g., OpenAI introduced a new client after 0.27), and wrappers built on the old interface end up with bugs or dependency issues.

- Hard to Extend: Application-specific wrappers often don’t adapt well to your unique use cases.

We Don’t Need Everything Baked In

- Easy to DIY (with LLMs): It’s often easier to build your own up-to-date wrapper. An LLM can even help write it when given the relevant documentation.

- Easy to Customize: Many advanced features (multi-agent orchestration, etc.) are nice to have but aren’t always essential in the core framework. Instead, the core should focus on fundamental primitives, and we can layer on tailored features as needed.
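To give a feel for how small a do-it-yourself wrapper can be, here is a minimal sketch. It is not part of Pocket Flow: the function name `call_llm`, the optional `backend` parameter, and the model name are assumptions made for this example. The default path assumes the v1-style `openai` Python client and an `OPENAI_API_KEY` environment variable.

```python
from typing import Callable, Optional


def call_llm(prompt: str, backend: Optional[Callable[[str], str]] = None) -> str:
    """Send one prompt and return the reply text.

    `backend` is a hypothetical hook for this sketch: any function that maps
    a prompt string to a reply string. It lets you swap vendors or stub the
    model out in tests.
    """
    if backend is not None:
        return backend(prompt)

    # Default path (assumption): the OpenAI v1-style client. The import is
    # lazy so the wrapper still loads when the package is not installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model="gpt-4o-mini",  # assumed model name; use whichever you have access to
        messages=[{"role": "user", "content": prompt}],
    )
    return response.choices[0].message.content or ""


# Usage with a stub backend, so no network call is made:
reply = call_llm("hello", backend=lambda p: p.upper())
print(reply)  # prints "HELLO"
```

When the vendor changes its client again, this one function is the only place that needs updating.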

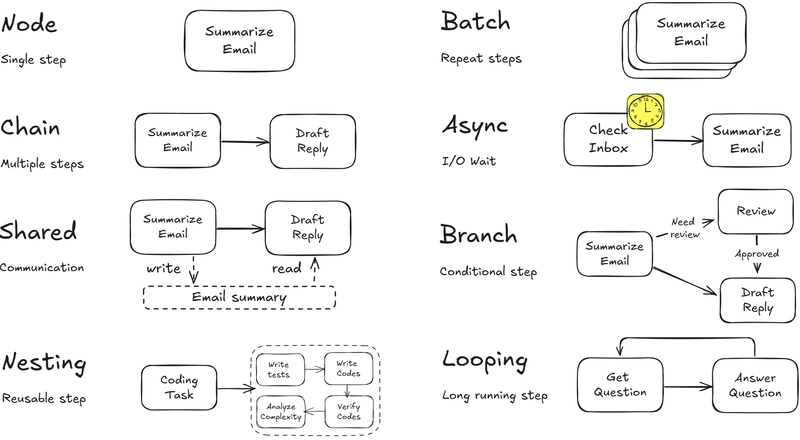

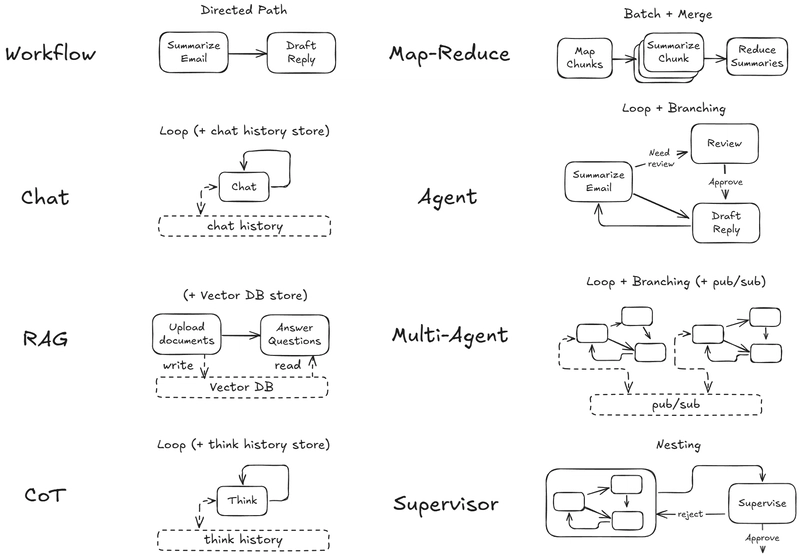

These 100 lines capture what I see as the core abstraction of most LLM frameworks: a nested directed graph that breaks down tasks into multiple LLM steps, with branching and recursion to enable agent-like decision-making.
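The idea of a nested directed graph with branching and looping can be sketched in a few lines. This is an illustration of the concept only, not the actual Pocket Flow source: the names `Node`, `Flow`, `on`, and `run`, the shared dictionary, and the action labels are all hypothetical, and the "LLM decision" is replaced by a simple length check so the example runs offline.

```python
class Node:
    """One step in the graph. run() returns an action label that picks the next edge."""

    def __init__(self):
        self.successors = {}  # action label -> next node

    def on(self, action, node):
        """Add an edge for `action`; returns the target so calls can be chained."""
        self.successors[action] = node
        return node

    def run(self, shared):
        raise NotImplementedError


class Flow(Node):
    """A graph of nodes that is itself a node, so flows can be nested."""

    def __init__(self, start):
        super().__init__()
        self.start = start

    def run(self, shared):
        node, action = self.start, "default"
        while node is not None:
            action = node.run(shared)
            node = node.successors.get(action)  # no matching edge ends the flow
        return action  # the last action lets an outer flow branch on the result


class Decide(Node):
    def run(self, shared):
        # Stand-in for an LLM call that chooses the next step.
        return "search" if len(shared["notes"]) < 2 else "answer"


class Search(Node):
    def run(self, shared):
        shared["notes"].append(f"note {len(shared['notes']) + 1}")
        return "decide"  # loop back to the decision step


class Answer(Node):
    def run(self, shared):
        shared["answer"] = " + ".join(shared["notes"])
        return "done"


class Report(Node):
    def run(self, shared):
        shared["report"] = "Report: " + shared["answer"]
        return "done"


# Inner agent loop: decide -> search -> decide ... -> answer
decide, search, answer = Decide(), Search(), Answer()
decide.on("search", search).on("decide", decide)
decide.on("answer", answer)
agent = Flow(decide)

# Outer workflow: the whole agent is one node, followed by a report step.
agent.on("done", Report())
pipeline = Flow(agent)

shared = {"notes": []}
pipeline.run(shared)
print(shared["report"])  # prints "Report: note 1 + note 2"
```

Branching comes from the action label each node returns, looping from an edge that points back to an earlier node, and nesting from `Flow` being a `Node` itself.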

From there, you can layer on complex design patterns when you need them:

- Single-Agent

- Multi-Agent Collaboration

- Retrieval-Augmented Generation (RAG)

- Workflow

- Or any other feature you can dream up!

Because the codebase is tiny, it’s easy to see where each piece fits and how to modify it without wading through layers of abstraction.

I’m adding more examples and would love feedback. If there’s a feature you’d like to see or a specific use case you think is missing, please let me know!

Top comments (1)

This is awesome, thanks for sharing it! It's incredible that you managed to build a LangChain alternative in just 100 lines of code. Really impressive work - wishing you continued success at Microsoft Research AI!