Training artificial intelligence (AI) models has long been a resource-intensive and expensive process. As the demand for more powerful AI models grows, so too do the costs associated with training them. From enormous datasets to the computational power required for deep learning algorithms, the price tag for AI training can easily run into millions of dollars. For smaller businesses or emerging startups, these costs often present a significant barrier to entry.

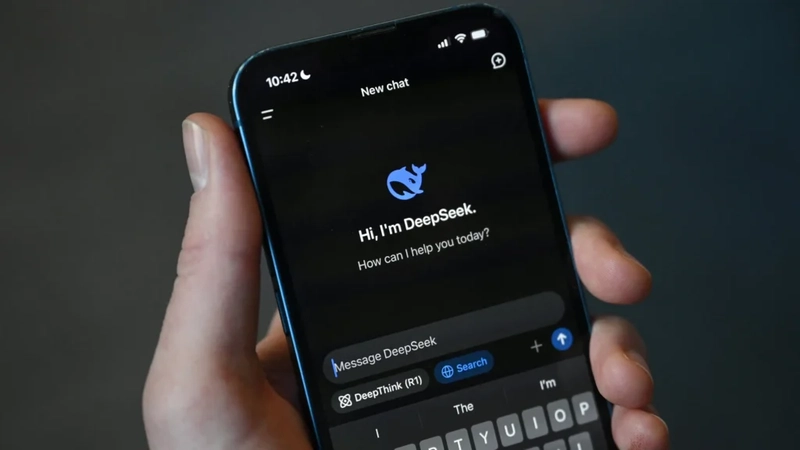

However, DeepSeek, an AI company that has drawn wide attention for its innovations, has found a way to cut the cost of AI training roughly 30-fold. By combining cutting-edge technologies with creative problem-solving, DeepSeek has drastically lowered the financial and operational barriers to developing AI. In this article, we explore how DeepSeek achieved this feat and examine the techniques and technologies behind the breakthrough.

What Makes AI Training So Expensive?

Before diving into how DeepSeek achieved its success, it’s important to understand the underlying reasons behind the high cost of AI model training. There are several key factors that contribute to these expenses.

1. Massive Computational Power Requirements

Training AI, especially deep learning models, requires vast amounts of computational power. Deep learning models contain millions, if not billions, of parameters that must be adjusted over many training iterations. The larger and more complex the model, the more processing power it requires. This leads many companies to invest heavily in data centers equipped with powerful graphics processing units (GPUs) or specialized hardware such as Tensor Processing Units (TPUs).
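To make the scale concrete, a widely used rule of thumb from scaling-law research (not from this article) estimates the training compute of a dense transformer as roughly 6 x N x D floating-point operations, where N is the parameter count and D is the number of training tokens. This sketch turns that estimate into GPU-hours; the model size, token count, per-GPU throughput, and utilization in the example are hypothetical inputs, and real training runs vary widely.

```python
def training_flops(params: float, tokens: float) -> float:
    """Approximate total training FLOPs for a dense transformer: 6 * N * D (rule of thumb)."""
    return 6.0 * params * tokens


def gpu_hours(params: float, tokens: float,
              peak_flops_per_gpu: float, utilization: float) -> float:
    """GPU-hours needed at a given per-GPU peak throughput (FLOP/s) and utilization in (0, 1]."""
    if not 0.0 < utilization <= 1.0:
        raise ValueError("utilization must be in (0, 1]")
    effective_flops = peak_flops_per_gpu * utilization  # FLOP/s actually achieved per GPU
    seconds = training_flops(params, tokens) / effective_flops
    return seconds / 3600.0


if __name__ == "__main__":
    # Hypothetical run: 7e9 parameters, 1e12 tokens, 1e15 FLOP/s peak per GPU, 40% utilization.
    print(f"{gpu_hours(7e9, 1e12, 1e15, 0.4):,.0f} GPU-hours")
```

Because the estimate is linear in both N and D, anything that reduces the compute needed per training token, or raises achieved utilization, lowers the GPU-hour bill proportionally.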

2. Data Acquisition and Storage Costs

AI models rely heavily on large datasets for training. Collecting, curating, and storing this data comes with its own set of costs. Companies often have to purchase datasets, which can be expensive, or spend significant resources on data collection and preprocessing. Once acquired, this data needs to be stored and managed on powerful servers or cloud infrastructures, adding further to the overall cost.

3. Energy Consumption

Running the hardware required to train AI models demands a large amount of energy, and the longer training runs, the more electricity it consumes. In many cases, energy is one of the largest contributors to the overall cost of AI training.
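Energy cost follows from simple arithmetic: energy in kWh equals the number of GPUs times the power per GPU in watts times the hours run, divided by 1,000, optionally scaled by the data center's power usage effectiveness (PUE) to account for cooling and other overhead. The helper here is a sketch under those assumptions; the GPU count, wattage, duration, electricity price, and PUE in the example are hypothetical.

```python
def training_energy_cost(num_gpus: int, watts_per_gpu: float, hours: float,
                         price_per_kwh: float, pue: float = 1.0) -> tuple[float, float]:
    """Return (energy in kWh, electricity cost) for a training run.

    pue is the data center's power usage effectiveness (>= 1.0); 1.0 means no overhead.
    """
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    kwh = num_gpus * watts_per_gpu * hours / 1000.0 * pue
    return kwh, kwh * price_per_kwh


if __name__ == "__main__":
    # Hypothetical: 1,000 GPUs at 700 W for 30 days, $0.10 per kWh, PUE of 1.2.
    kwh, cost = training_energy_cost(1000, 700.0, 30 * 24, 0.10, pue=1.2)
    print(f"{kwh:,.0f} kWh, ${cost:,.0f}")
```

Since energy scales linearly with both GPU count and run time, halving either one halves the electricity bill under this model.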

4. Time and Personnel Costs

AI model training isn’t just about hardware and data. It requires skilled professionals who understand the nuances of machine learning algorithms, model optimization, and data management. The longer the training process takes, the more time these experts need to invest, which translates to higher labor costs.

How Did DeepSeek Train AI 30 Times Cheaper?

DeepSeek’s approach to slashing the cost of AI training is multifaceted. By rethinking the traditional approaches to AI model development and training, the company has leveraged several key innovations that have allowed it to drastically reduce its expenses.

1. Decentralized Edge Computing

One of the most significant breakthroughs DeepSeek made was shifting from centralized cloud-based training to a decentralized edge computing model. Traditionally, AI models are trained on large, centralized servers or in data centers. These facilities require massive amounts of computing power and consume a great deal of energy.

DeepSeek turned this model on its head by utilizing edge devices—smaller, distributed computing nodes located closer to where the data is generated. These edge devices process data locally, reducing the need for centralized servers to handle all of the computational load. By distributing the computing work across thousands of smaller, low-cost edge devices, DeepSeek was able to cut down significantly on infrastructure costs.

Edge computing also offers a quicker feedback loop for training, as data does not need to be transmitted to a central server for processing. The decentralized nature of the training system helps to accelerate model training while reducing both computational and time costs.

How It Works:

DeepSeek’s edge computing network consists of thousands of connected devices that handle specific tasks in the training process. Instead of sending all raw data to a centralized server, these devices process data locally and send results back to the central hub. This allows for real-time updates and faster training cycles.
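The article does not publish DeepSeek's protocol. One standard way to implement the pattern it describes, where devices train on local data and send only results to a central hub, is federated averaging (FedAvg): each device runs a few gradient steps starting from the current global model, and the hub averages the returned parameters, weighted by each device's sample count. This sketch assumes a toy one-feature linear model and hypothetical device data; it illustrates the general pattern, not DeepSeek's actual system.

```python
import random


def local_update(w, b, data, lr=0.01, epochs=5):
    """A few epochs of full-batch gradient descent on one device's data (model: y = w*x + b)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in data:
            err = (w * x + b) - y      # prediction error
            grad_w += 2.0 * err * x    # d(squared error)/dw
            grad_b += 2.0 * err        # d(squared error)/db
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b


def federated_round(w, b, devices):
    """One FedAvg round: every device trains locally, then the hub averages by sample count."""
    total = sum(len(data) for data in devices)
    new_w = new_b = 0.0
    for data in devices:
        local_w, local_b = local_update(w, b, data)  # raw data never leaves the device
        share = len(data) / total
        new_w += share * local_w
        new_b += share * local_b
    return new_w, new_b


if __name__ == "__main__":
    rng = random.Random(0)

    def make_device(n):
        # Hypothetical private data on one device: y = 3x + 1 plus a little noise.
        xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
        return [(x, 3.0 * x + 1.0 + rng.gauss(0.0, 0.1)) for x in xs]

    devices = [make_device(50) for _ in range(4)]
    w, b = 0.0, 0.0
    for _ in range(200):
        w, b = federated_round(w, b, devices)
    print(f"w = {w:.2f}, b = {b:.2f}")  # should end up close to w = 3, b = 1
```

Only the two model parameters travel to the hub each round; the raw (x, y) samples stay on their devices.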

2. Transfer Learning: Training on Pre-Trained Models

Another key technique DeepSeek employed to cut costs is transfer learning. This method involves leveraging models that have already been pre-trained on large, general datasets and then fine-tuning them for specific tasks. Instead of training an AI model from scratch, which requires massive datasets and computational resources, transfer learning allows DeepSeek to take a pre-existing model and adapt it for new applications with significantly less data and computation.

By applying transfer learning, DeepSeek avoided the costly and time-consuming process of training a model from the ground up. This significantly reduced both the amount of data required and the computational power necessary to reach a high level of model performance.

How It Works:

For example, instead of starting with a completely new model, DeepSeek uses a model pre-trained on a broad dataset (e.g., a large corpus of images or text). The company then fine-tunes that model on a smaller, task-specific dataset, which lets it adapt to the new task with far less time and data than training a model from scratch would require.
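A common, lightweight form of transfer learning keeps the pre-trained network frozen as a feature extractor and trains only a small new output layer (a "head") on the task-specific data. In this sketch, a tiny hypothetical backbone with fixed weights stands in for a real pre-trained model, and the task data is invented for illustration; full fine-tuning would also update the backbone's weights.

```python
import math

# Hypothetical stand-in for a pre-trained backbone. Its weights are fixed ("frozen");
# in practice this would be a large network trained on a broad dataset.
FROZEN_WEIGHTS = [(1.0, 1.0), (1.0, -1.0), (0.5, 0.5)]


def backbone(x):
    """Frozen feature extractor (one ReLU layer); never updated during fine-tuning."""
    return [max(0.0, w0 * x[0] + w1 * x[1]) for w0, w1 in FROZEN_WEIGHTS]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def fine_tune_head(samples, lr=0.5, epochs=200):
    """Train only a new logistic-regression head on top of the frozen features."""
    head = [0.0] * len(FROZEN_WEIGHTS)
    bias = 0.0
    # The backbone is frozen, so features are computed once and reused every epoch.
    features = [(backbone(x), y) for x, y in samples]
    for _ in range(epochs):
        for f, y in features:
            p = sigmoid(sum(h * v for h, v in zip(head, f)) + bias)
            g = p - y  # gradient of the log loss with respect to the logit
            head = [h - lr * g * v for h, v in zip(head, f)]
            bias -= lr * g
    return head, bias


def predict(head, bias, x):
    return sigmoid(sum(h * v for h, v in zip(head, backbone(x))) + bias) >= 0.5


if __name__ == "__main__":
    # Small hypothetical task: label 1 when x0 + x1 > 0.
    samples = [((1.0, 1.0), 1), ((2.0, 0.5), 1), ((0.5, 1.5), 1),
               ((-1.0, -1.0), 0), ((0.5, -1.5), 0), ((-2.0, 0.5), 0)]
    head, bias = fine_tune_head(samples)
    print([predict(head, bias, x) for x, _ in samples])
```

Because the backbone never changes, only a handful of head parameters are trained, which is where the savings in data and computation come from.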

3. Optimized Hardware Design

DeepSeek also achieved cost reductions through custom-built, optimized hardware. Traditional AI training typically relies on off-the-shelf accelerators such as GPUs or TPUs, which are expensive and energy-hungry. Rather than depending solely on this hardware, DeepSeek developed custom hardware tailored to its AI models, improving performance and reducing operational costs.

These custom AI chips are designed to perform the specific computations required for DeepSeek’s models more efficiently, reducing the need for excessive computational resources and energy consumption.

How It Works:

DeepSeek's custom chips optimize parallel processing, which allows them to execute many computations at once. This efficiency reduces the number of processing cycles needed to complete a task, cutting down both time and energy costs.
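The effect of wider parallel hardware on cycle count can be illustrated with an idealized model: multiplying an (m x k) matrix by a (k x n) matrix takes m * n * k multiply-accumulate (MAC) operations, so a unit that completes P MACs per cycle needs about ceil(m * n * k / P) cycles. This sketch ignores memory bandwidth and stalls, and the 16 x 16 MAC array in the example is hypothetical rather than a description of DeepSeek's chips.

```python
def matmul_cycles(m: int, n: int, k: int, macs_per_cycle: int) -> int:
    """Idealized cycles for an (m x k) @ (k x n) matrix multiply.

    Assumes every cycle completes `macs_per_cycle` multiply-accumulates with no
    memory stalls, a deliberate simplification of real hardware.
    """
    if macs_per_cycle < 1:
        raise ValueError("macs_per_cycle must be at least 1")
    total_macs = m * n * k
    return -(-total_macs // macs_per_cycle)  # integer ceiling division


if __name__ == "__main__":
    scalar = matmul_cycles(1024, 1024, 1024, 1)        # one MAC per cycle
    array = matmul_cycles(1024, 1024, 1024, 16 * 16)   # hypothetical 16x16 MAC array
    print(scalar, array, scalar // array)
```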

4. Data Efficiency Through Augmentation and Synthetic Data

AI models thrive on large, high-quality datasets, but collecting such data is often expensive and time-consuming. To address this, DeepSeek employed data augmentation and synthetic data generation techniques to make the most of limited data.

Data augmentation involves modifying existing data (e.g., rotating images, changing colors, adding noise) to generate new training examples, reducing the need for an enormous dataset. Synthetic data generation involves creating entirely new datasets using AI models, allowing DeepSeek to generate vast amounts of data at a fraction of the cost of acquiring real-world data.
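The augmentations named above are straightforward to sketch. Assuming a grayscale image stored as a list of rows with pixel values in [0, 1] (a simplification; real pipelines use image libraries and tensors), each transform turns one labeled image into an additional training example that keeps the original label, for tasks where the transform does not change what the image depicts.

```python
import random

Image = list[list[float]]  # grayscale image: rows of pixel values in [0, 1]


def flip_horizontal(img: Image) -> Image:
    """Mirror the image left to right."""
    return [list(reversed(row)) for row in img]


def rotate_90(img: Image) -> Image:
    """Rotate the image 90 degrees clockwise."""
    return [list(row) for row in zip(*img[::-1])]


def adjust_brightness(img: Image, factor: float) -> Image:
    """Scale pixel intensities (a simple color change), clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p * factor)) for p in row] for row in img]


def add_noise(img: Image, sigma: float, rng: random.Random) -> Image:
    """Add Gaussian noise to every pixel, clipped to [0, 1]."""
    return [[min(1.0, max(0.0, p + rng.gauss(0.0, sigma))) for p in row] for row in img]


def augment(img: Image, rng: random.Random) -> list[Image]:
    """Turn one original image into several extra training examples."""
    return [
        flip_horizontal(img),
        rotate_90(img),
        adjust_brightness(img, rng.uniform(0.8, 1.2)),
        add_noise(img, 0.05, rng),
    ]


if __name__ == "__main__":
    original = [[0.0, 0.5], [1.0, 0.25]]
    for example in augment(original, random.Random(0)):
        print(example)
```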

How It Works:

For instance, DeepSeek used synthetic data generation to create realistic training data without relying solely on real-world data. This approach enabled the company to significantly expand its datasets without incurring the cost of collecting large volumes of real-world data.
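Production synthetic-data pipelines use large generative models, but the core idea, fitting a model to real data and then sampling new records from it, fits in a few lines. As a deliberately simple stand-in for a generative model, this sketch fits an independent Gaussian to each numeric column of a small hypothetical dataset and samples new rows from those fits.

```python
import random
import statistics


def fit_gaussian(rows: list[list[float]]) -> list[tuple[float, float]]:
    """Estimate each column's mean and sample standard deviation from real rows."""
    columns = list(zip(*rows))
    return [(statistics.mean(col), statistics.stdev(col)) for col in columns]


def sample_synthetic(params: list[tuple[float, float]], n: int,
                     seed: int = 0) -> list[list[float]]:
    """Draw n synthetic rows, sampling each column independently from its fitted Gaussian."""
    rng = random.Random(seed)
    return [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(n)]


if __name__ == "__main__":
    # Hypothetical "real" data: 4 rows with 2 numeric features each.
    real = [[1.0, 10.0], [2.0, 12.0], [3.0, 11.0], [4.0, 13.0]]
    synthetic = sample_synthetic(fit_gaussian(real), n=1000)
    print(len(synthetic), "synthetic rows from", len(real), "real rows")
```

Independent per-column Gaussians ignore correlations between columns and any non-Gaussian shape in the data; capturing that structure is what more capable generative models are used for.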

5. Parallelization of Model Training

Lastly, DeepSeek employed a technique known as model parallelization, which divides a large model into smaller segments that can be trained simultaneously across multiple devices or systems. This strategy significantly reduced the time required to train large, complex models, which in turn lowered operational costs.

How It Works:

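Instead of training a large model sequentially on one device, DeepSeek splits the model into parts, such as groups of layers or slices of individual weight matrices, and places each part on a different device. The devices work on their parts at the same time and exchange intermediate results (activations and gradients) at every training step, so together they train a single model rather than separate pieces that are merged afterward. This parallelization allows for faster training and greater efficiency.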
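One concrete form of model parallelism, often called tensor parallelism, shards a single layer's weight matrix across devices: each device computes its slice of the layer's output, and the slices are gathered back together. This sketch assumes a single linear layer and simulates devices with threads, so it shows the data flow rather than a real speedup; it covers only the forward pass, and the backward pass can be sharded in the same way.

```python
from concurrent.futures import ThreadPoolExecutor

Matrix = list[list[float]]


def matvec(weights: Matrix, x: list[float]) -> list[float]:
    """Compute y = W x, where W has one row per output feature."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def split_rows(weights: Matrix, parts: int) -> list[Matrix]:
    """Shard the layer's output features (rows of W) into at most `parts` contiguous blocks."""
    if parts < 1:
        raise ValueError("parts must be at least 1")
    size = -(-len(weights) // parts)  # ceiling division
    return [weights[i:i + size] for i in range(0, len(weights), size)]


def parallel_matvec(weights: Matrix, x: list[float], devices: int) -> list[float]:
    """Each simulated device computes its slice of the output; slices are then concatenated."""
    if not weights:
        return []
    shards = split_rows(weights, devices)
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partial_outputs = list(pool.map(lambda shard: matvec(shard, x), shards))
    # Gather step: concatenate the per-device output slices in their original order.
    return [y for part in partial_outputs for y in part]


if __name__ == "__main__":
    W = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0], [10.0, 11.0, 12.0]]
    x = [1.0, 0.5, -1.0]
    print(parallel_matvec(W, x, devices=2) == matvec(W, x))
```

Splitting by output rows keeps each device's computation independent within the layer, so the only communication needed is the final gather.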

What Are the Broader Implications of DeepSeek's Innovation?

DeepSeek’s innovative approach to cutting AI training costs has the potential to transform the entire AI industry. With AI training becoming more affordable, smaller companies and startups now have the opportunity to develop their own AI solutions without the need for massive budgets.

1. Lowering Barriers to Entry

One of the most significant impacts of DeepSeek’s cost-reduction strategies is the potential for democratizing AI. By lowering the cost of training, DeepSeek has made it possible for smaller players in various industries to leverage AI, fostering innovation across the board.

2. Accelerating AI Research and Development

Lower costs also mean that more resources can be allocated to AI research and experimentation. With more affordable training, companies and research institutions can quickly iterate and explore new AI techniques, leading to faster advancements in AI technology.

For Developers: API Access

CometAPI offers prices far lower than the official rates to help you integrate the DeepSeek API (model names: deepseek-chat and deepseek-reasoner), and you will receive $1 in your account after registering and logging in. You are welcome to register and try CometAPI.

CometAPI acts as a centralized hub for APIs of several leading AI models, eliminating the need to engage with multiple API providers separately.

Please refer to DeepSeek R1 API for integration details.

Conclusion

DeepSeek's remarkable achievement in cutting AI training costs roughly 30-fold is a prime example of how innovation can disrupt established industries. By combining edge computing, transfer learning, custom hardware, data-efficiency techniques, and parallelization, DeepSeek has paved the way for more accessible, efficient, and cost-effective AI development. As the AI landscape continues to evolve, the techniques pioneered by DeepSeek may well become the new standard, allowing AI to reach new heights of performance, accessibility, and scalability.
