QA engineers spend considerable time and effort developing automation frameworks, and even more adding tests and maintaining them.

Given the flaky nature of end-to-end automation tests, most teams set up alerting mechanisms for test failures.

Communication platforms like Slack or Microsoft Teams are becoming the new graveyard for these alerts, as most engineers end up ignoring them over time. The primary reasons for ignoring these alerts are:

- Excess Alerts

- Lack of Visibility

- Poor Accountability

The following sections share sample config files for the Test Results Reporter CLI tool that follow best practices for sending your test results to Slack, Microsoft Teams, or any other communication platform.

test-results-reporter/testbeats

This npm package has been renamed from test-results-reporter to testbeats. test-results-reporter will soon be phased out, and users are encouraged to transition to testbeats.

Publish test results to Microsoft Teams, Google Chat, Slack and many more.

Get Started

TestBeats is a tool designed to streamline the process of publishing test results from various automation testing frameworks to communication platforms like Slack, Teams and more for easy access and collaboration. It unifies your test reporting to build quality insights and make faster decisions.

Read more about the project at https://testbeats.com

Sample Reports

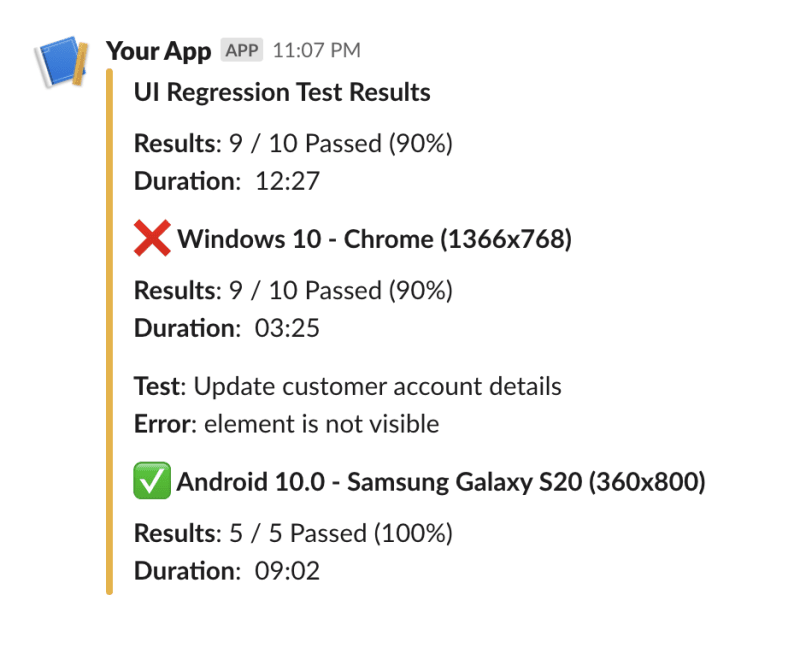

Alerts in Slack

Results in Portal

Excess Alerts

In the initial phases, seeing these alerts in your Slack channels (or anywhere else) feels novel and exciting. After a few days, if there are more alerts than we can handle, we get used to ignoring them, and the real problems are lost in the noise.

One way to solve this is to send failure alerts to a separate channel, keeping your primary channel clean and free of noise.

Primary Channel

Failure Channel

Config

    [
      {
        "name": "slack",
        "inputs": {
          "url": "<primary-channel-webhook-url>",
          "publish": "test-summary-slim"
        }
      },
      {
        "name": "slack",
        "condition": "fail",
        "inputs": {
          "url": "<failure-channel-webhook-url>",
          "publish": "failure-details"
        }
      }
    ]
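To make the routing idea concrete, here is a minimal Python sketch of how the two targets above could be selected for a given run. It is an illustration only, not the tool's actual implementation: the `select_targets` function is hypothetical, and it assumes that a target without a `condition` always receives the message.

```python
from typing import Any


def select_targets(targets: list[dict[str, Any]], run_passed: bool) -> list[dict[str, Any]]:
    """Return the targets that should receive a message for this run.

    Assumption for this sketch: a target with no "condition" key is
    always selected, and "fail" selects the target only on a failed run.
    """
    selected = []
    for target in targets:
        condition = target.get("condition")
        if condition is None:
            selected.append(target)
        elif condition == "fail" and not run_passed:
            selected.append(target)
    return selected


# Same shape as the config above, with placeholder webhook URLs.
targets = [
    {
        "name": "slack",
        "inputs": {"url": "<primary-channel-webhook-url>", "publish": "test-summary-slim"},
    },
    {
        "name": "slack",
        "condition": "fail",
        "inputs": {"url": "<failure-channel-webhook-url>", "publish": "failure-details"},
    },
]

for passed in (True, False):
    reports = [t["inputs"]["publish"] for t in select_targets(targets, run_passed=passed)]
    print("passed" if passed else "failed", "->", reports)
```

With this split, the primary channel gets a slim summary for every run, while the detailed failure report only appears in the failure channel when something breaks.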

Lack of Visibility

Test failure analysis is where value is realised and quality is improved. It is critical to the velocity of your releases. Failures happen for a wide variety of reasons, and keeping track of those reasons is tedious.

ReportPortal is an AI-powered test results portal. It uses the collected history of executions and failure patterns to achieve hands-free analysis of your latest results. With auto-analysis, it helps distinguish real failures from noise (known failures or flaky tests).

It provides the following failure categories:

- Product Bug (PB)

- Automation Bug (AB)

- System Issue (SI)

- No Defect (ND)

- To Investigate (TI)

Report Portal Analysis

Config

    {
      "name": "report-portal-analysis",
      "inputs": {
        "url": "<report-portal-base-url>",
        "api_key": "<api-key>",
        "project": "<project-id>",
        "launch_id": "<launch-id>"
      }
    }

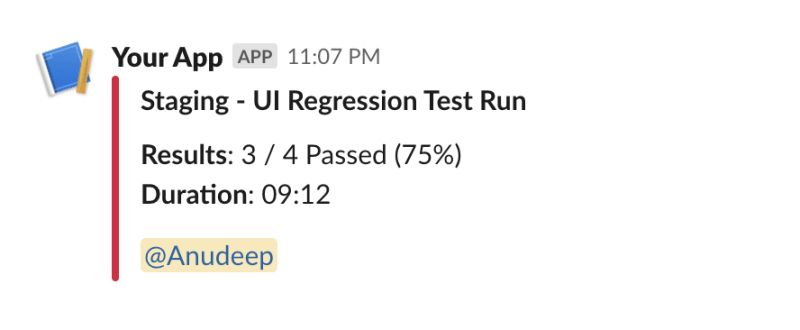
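As a rough illustration of what a per-category breakdown gives you, the following Python sketch tallies failures by the five categories listed above. The `summarize` function and the sample test names are hypothetical; it does not call ReportPortal or reflect how the extension builds its report.

```python
from collections import Counter

# The five failure categories named in this section.
CATEGORIES = [
    "Product Bug",
    "Automation Bug",
    "System Issue",
    "No Defect",
    "To Investigate",
]


def summarize(failures: list[tuple[str, str]]) -> dict[str, int]:
    """Count failures per category, keeping every category in the output."""
    counts = Counter(category for _test_name, category in failures)
    return {category: counts.get(category, 0) for category in CATEGORIES}


# Hypothetical (test name, category) pairs, for illustration only.
failures = [
    ("checkout_applies_coupon", "Product Bug"),
    ("login_with_sso", "Automation Bug"),
    ("search_returns_results", "To Investigate"),
    ("profile_upload_avatar", "To Investigate"),
]

for category, count in summarize(failures).items():
    print(f"{category}: {count}")
```

A summary like this makes it obvious how many failures still sit in "To Investigate" and need a human to look at them.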

Poor Accountability

Investigating and fixing the test failures is the most important part of maintaining automation test suites. It makes the automation healthy and trustworthy.

One common way to build accountability is to ask the test owners (those who wrote or adopted the tests) to look at the failures. This approach restricts knowledge to a few people and creates a dependency on them.

Another way is to make the entire team responsible for all test failures. This goes wrong when the whole team investigates the same failure at the same time, or when no one looks at it because everyone assumes someone else is. It is worth creating a roster that names the person who looks at the failures.

Automatically tagging the person who is on call to investigate test failures helps keep the automation suites healthy.

Mentions

Config

    {
      "name": "mentions",
      "inputs": {
        "schedule": {
          "layers": [
            {
              "rotation": {
                "every": "week",
                "users": [
                  {
                    "name": "Jon",
                    "slack_uid": "ULA15K66N"
                  },
                  {
                    "name": "Anudeep",
                    "slack_uid": "ULA15K66M"
                  }
                ]
              }
            }
          ]
        }
      }
    }
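The idea behind a weekly rotation can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the tool's own logic: the `on_call_user` and `slack_mention` helpers and the rotation start date are hypothetical, and the source does not say how the tool decides when a week begins. The `<@USER_ID>` form is Slack's syntax for mentioning a user.

```python
from datetime import date


def on_call_user(users: list[dict[str, str]], rotation_start: date, today: date) -> dict[str, str]:
    """Pick the on-call user, moving to the next user every 7 days."""
    if not users:
        raise ValueError("rotation needs at least one user")
    weeks_elapsed = (today - rotation_start).days // 7
    return users[weeks_elapsed % len(users)]


def slack_mention(user: dict[str, str]) -> str:
    """Format a Slack user mention from the user's ID."""
    return f"<@{user['slack_uid']}>"


# Users taken from the config above.
users = [
    {"name": "Jon", "slack_uid": "ULA15K66N"},
    {"name": "Anudeep", "slack_uid": "ULA15K66M"},
]

# Hypothetical start of the rotation (a Monday), assumed for this sketch.
rotation_start = date(2024, 1, 1)

for day in (date(2024, 1, 3), date(2024, 1, 8), date(2024, 1, 15)):
    user = on_call_user(users, rotation_start, day)
    print(day.isoformat(), user["name"], slack_mention(user))
```

Because the index wraps around with the modulo, adding or removing a user from the list is enough to change the rotation length.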
