by Jenna Thorne

Also known as split testing, A/B testing is a randomized experiment in which two or more versions of a variable are shown to different site visitors at the same time to determine which version performs best against your business metrics. Put simply, you show an A version of your content to one half of your audience and a B version to the other half.

This testing process is invaluable because different audiences behave in different ways: something that works for one company may not work for another. It also removes the guesswork from website optimization. The A in A/B testing refers to the original variable, or the ‘control’, and the B refers to the new version, or the ‘variation’.

How does A/B Testing Work?

To run this test, you create two versions of your content that differ in a single variable. You then show these versions to two similarly sized audiences and analyze which one performed better over a set period. It might sound complex, but A/B testing is actually quite simple.

First, decide what you want to test and why. You could, for example, measure how many people click on a button, which is a good indicator of how the button's size affects users. Next, divide your users into two sets.

Every set should be random. You can then create two otherwise identical pages with different button sizes. From there, check the analytics to see which page receives more clicks. The decision to click depends on various factors, such as button size, text color, and the device used, which is why only one variable should change between versions. A/B testing lets marketers observe how one version of their marketing content performs compared to another.
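One common way to split users into two random but stable sets is to hash a user identifier. The sketch below is only an illustration: the function name `assign_variant`, the experiment name `button-size`, and the `user-N` identifiers are assumptions made for this example, not part of any particular testing tool.

```python
import hashlib

def assign_variant(user_id: str, experiment: str = "button-size",
                   variants=("A", "B")) -> str:
    """Deterministically map a user to a variant with roughly equal odds."""
    # Hashing experiment + user id means the same user always sees the same
    # version, and different experiments split the audience independently.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]

# Simulate 10,000 hypothetical visitors and count how many land in each set.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}")] += 1
print(counts)  # the two counts should be close to an even split
```

Because the assignment depends only on the identifier, a returning visitor keeps seeing the same page, which keeps the click data for each version clean.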

Why is A/B Testing Important?

Accurate A/B testing can make a big difference in your return on investment. Through controlled tests and empirical data gathering, you can determine which marketing strategies work best for your brand and product. Running a promotion without testing it first is rarely a good idea.

Testing, when performed consistently, can substantially improve results. It’s easier to make decisions and create more effective marketing strategies in the future if you know what works and what doesn’t. The following are some of the benefits you get when running A/B tests regularly on your website as well as on your marketing materials:

- A/B testing helps understand the target audience. You gain an insight into who your audience is and what they want when you determine the kind of emails, headlines, and other features they respond to.

- Stay on top of evolving trends. Predicting what kind of images, content, or other features people will respond to is difficult, and regular testing helps you stay ahead of evolving consumer behavior.

- Higher rates of conversion. A/B testing is one of the most effective ways to boost conversion rates. Again, knowing what works and what doesn’t gives you actionable data that can help simplify the conversion process.

- Minimize bounce rates. When website visitors see content they like, they will stay on it longer. Testing to determine the kind of marketing materials and content users like will help build a better site—one that they want to stay on.

Lotte Hotel, the largest hotel group in South Korea, boosted its booking rates by 49 percent with the help of A/B testing. Moreover, it has expanded to more than 30 hotel locations across the globe. The company used tools like Google Analytics 360 to measure user data and built hypothesized versions of website elements with Google Optimize to arrive at a winning page layout combination.

Different types of A/B tests

There are different benefits to every type of A/B testing. Furthermore, depending on the changes you want to make, different tests could work better than others.

Split testing

Split testing is an experiment in which an entirely new version of an existing web page is tested against the current one to see which performs better.

Image source: Google Help Doc

Split Testing Advantages

- Recommended for running tests with no changes to the UI, such as optimizing the load time, switching to a different database, and so on.

- Split testing is ideal for trying out new designs while using the current design for comparison.

- Ideal for changing the workflow of a web page. Workflows impact conversions significantly, so split testing lets you try new paths before rolling out changes and find out whether anything was missed.

- Highly recommended for dynamic content.

Multivariate testing

Multivariate testing, or MVT for short, is an experiment in which variations of several page variables are tested simultaneously to find out which combination of variables performs best. Multivariate testing is more complicated than A/B testing and is best suited to advanced product, marketing, and development professionals.

When done properly, this type of testing eliminates the need to run several sequential A/B tests on a page with similar goals. You can save time, effort, and money by running concurrent tests with more variations, and you can reach a conclusion sooner.
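To see what testing combinations of variables means in practice, the sketch below enumerates every combination of a few page elements. The element names and their variations are made up for illustration.

```python
from itertools import product

# Hypothetical page elements and their variations (illustrative only).
elements = {
    "headline": ["Save time today", "Work smarter"],
    "hero_image": ["photo", "illustration"],
    "cta_text": ["Subscribe", "Subscribe now", "Get started"],
}

def build_combinations(elements: dict) -> list:
    """Return every combination of element variations as a list of dicts."""
    names = list(elements)
    return [dict(zip(names, values)) for values in product(*elements.values())]

combinations = build_combinations(elements)
print(len(combinations))  # 2 * 2 * 3 = 12 combinations
```

The number of combinations grows multiplicatively with every element you add, and each combination receives only a share of your traffic, which is one reason multivariate tests tend to need more visitors than a simple A/B test.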

Image source: Google Help Doc

The Benefits of Multivariate Testing

- Determine and analyze each page element’s contribution to the measured gains.

- Helps to avoid performing several sequential A/B tests with the same goal.

- Saves time since you can track the performance of different tested page elements simultaneously.

- Map all interactions between all independent element variations, such as banner images, page headlines, etc.

Multipage testing

Multipage testing is an experiment in which you test changes to certain elements across several pages. It can be done in a couple of ways. The first is called Funnel Multipage testing. Here you create new versions of every page in the sales funnel; together these form the challenger funnel, which you then test against the control.

The second is Conventional or Classic Multipage testing. In this method, you test how adding or removing recurring elements, such as testimonials, security badges, and others, impacts conversions across an entire funnel.

Image source: Google Help Doc

Multipage Testing Advantages

- Helps the target audience see a consistent set of pages, whether they are shown the control or one of its variations.

- Allows you to build consistent experiences for the target audience.

- Enables you to implement the same change on various pages so that site visitors are not distracted by bouncing between variations and designs when navigating your website.

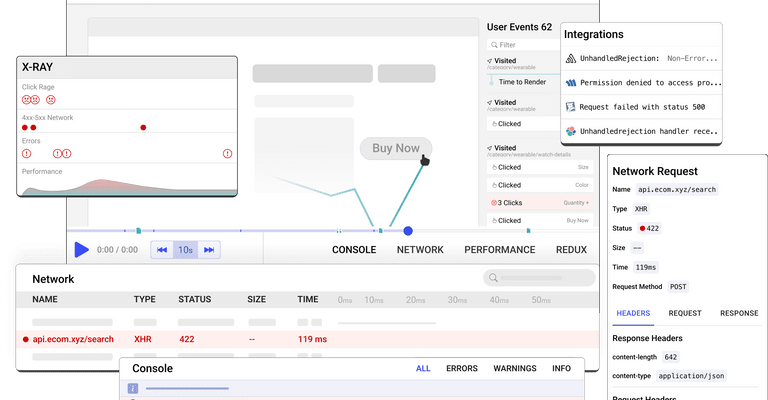

Session Replay for Developers

Uncover frustrations, understand bugs and fix slowdowns like never before with OpenReplay — an open-source session replay suite for developers. It can be self-hosted in minutes, giving you complete control over your customer data

Happy debugging! Try using OpenReplay today.

Elements that you can A/B Test

There are several variables that you could test to optimize the performance of the landing page. Below are some of the crucial elements you can test.

Headings

The heading, or headline, is what catches users’ attention, and subheadings compel them to keep reading, click on a paid app, or scroll down to read the below-the-fold copy. Thus, it’s important to test your headline by creating variations and determining which works better.

Aside from the copy, consider changing the font size, type, style, and alignment of the heading and subheadings.

Image source: LeadPages

Images

You can test background images, content images, or the above-the-fold image. You can also test replacing images with illustrations, videos, or GIFs.

Forms

Forms are how users get in touch with you, so the signup process should be smooth and give users no reason to drop off. Here is what you can test:

- Remove unnecessary signup fields.

- Add inline forms on various pages, like blog pages or case studies.

- Use social proof, like testimonials, on the signup form page.

- Try out different form copy and CTA buttons.

Page design and layout

How you present various elements on the page matters. Even compelling landing page copy won’t convert if it is badly formatted. Thus, you should test the placement and arrangement of elements on your webpage and ensure there’s a hierarchical order that users can follow easily.

CTAs

Test which calls to action push users toward a specific action, such as ‘subscribe’, ‘click here’, ‘check here’, and so on. The action words can also be combined with ‘now’ to create a sense of urgency.

A/B testing process

Let's walk through the A/B testing process step by step.

Identify the Pain Points

Simply put, pain points are elements in the sales funnel that put visitors off or give them an inferior shopping experience. Free tools like Microsoft Clarity help you measure how users interact with your pages through user session recordings. Premium tools like Hotjar offer a complete package of user interaction data, including heatmaps, session recordings, and behavior analytics. This data shows where users spend the most time, how they scroll, and so on, which helps identify the problem areas on your website. Here's an example from the OpenReplay session replay tool itself showing how users interact with buttons.

Generate hypothesis

Once you have determined your goal, start generating A/B testing ideas and hypotheses for why you think each would perform better than the present version. When you have a list of ideas, prioritize them by expected impact and difficulty of implementation.
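Prioritizing by impact and difficulty can be as simple as a score. The sketch below ranks ideas by the ratio of expected impact to implementation difficulty; the 1 to 5 scales, the ratio itself, and the sample ideas are assumptions chosen for illustration, not an established framework.

```python
from dataclasses import dataclass

@dataclass
class Idea:
    name: str
    impact: int      # expected impact, 1 (low) to 5 (high)
    difficulty: int  # implementation difficulty, 1 (easy) to 5 (hard)

def prioritize(ideas):
    """Sort ideas so high-impact, low-difficulty ones come first."""
    return sorted(ideas, key=lambda idea: idea.impact / idea.difficulty, reverse=True)

# Hypothetical test ideas with made-up scores.
ideas = [
    Idea("Larger CTA button", impact=3, difficulty=1),
    Idea("Redesign checkout flow", impact=5, difficulty=5),
    Idea("Shorter signup form", impact=4, difficulty=2),
]

for idea in prioritize(ideas):
    print(f"{idea.name}: {idea.impact / idea.difficulty:.1f}")
```

With these scores, the quick button change ranks first and the costly checkout redesign last, even though the redesign has the highest raw impact.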

Image source: CXL

Create variations

You need to know the following terms to understand this step.

Control – the page’s original version

Test Version – the version where you make changes

Variant – any element you change for testing, like using various images, changing the call-to-action button, and so on.

In this step, you put the hypothesis into action and decide which A/B testing approach to use: split, multipage, or multivariate testing. You can then build the test version of the page. Google Optimize used to be a popular free tool for this, but Google has decided to shut it down. Alternatives include Optimizely, VWO, and others. You can configure these tools to implement your test versions; for instance, if you want to boost the click-through rate, you may want to change the size of the CTA button or its copy.

If you want more form submissions, reducing the number of signup fields or adding testimonials beside the form would be a good idea.

Start your test

When the sample size and variations are all set up, you can start testing, using tools like Omniconvert or Crazy Egg. Let the test run for an adequate time before you start interpreting results. Typically, a test should run until you have statistically significant data before you make changes.

The duration depends on the sample size, variations, and test goals.
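A rough way to estimate how long a test needs to run is to compute the required sample size first. The sketch below uses the standard normal-approximation formula for comparing two conversion rates; the 5% significance level, 80% power, the example rates, and the daily traffic figure are all assumptions you would replace with your own numbers.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect the given change."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

def estimated_days(per_variant, daily_visitors, variants=2):
    """Days needed if daily traffic is split evenly across all variants."""
    return math.ceil(per_variant * variants / daily_visitors)

# Hypothetical scenario: 10% baseline conversion, hoping to reach 12%.
needed = sample_size_per_variant(0.10, 0.12)
print(needed, "visitors per variant")
print(estimated_days(needed, daily_visitors=1_000), "days at 1,000 visitors/day")
```

Smaller expected improvements require much larger samples, so a test chasing a subtle change will need to run longer than one chasing a dramatic change.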

Here's a better representation from Adroll about how you can start your A/B test.

Analyze and review your test Results

Analysis of the results is paramount when running A/B tests. Since A/B testing calls for continuous gathering and analysis of data, it is in this last step that your whole journey comes together. Once the test ends, analyze the results by considering metrics such as confidence level, percentage increase, and direct and indirect impact on other metrics.

After considering these numbers, if the test succeeds, it’s time to deploy the winning variation. If the test is inconclusive, draw insights from it and apply them in subsequent tests.
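The percentage increase and confidence level can be computed by hand with a two-proportion z-test. This is a minimal sketch, assuming each visitor either converts or does not and that samples are reasonably large; the conversion counts are invented for the example, and most testing tools report these figures for you.

```python
import math
from statistics import NormalDist

def compare_variants(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test comparing the conversion rates of control A and variation B."""
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    # Pooled rate under the assumption that both versions perform the same.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return {
        "relative_lift": (rate_b - rate_a) / rate_a,  # percentage increase over A
        "z": z,
        "p_value": p_value,
    }

# Hypothetical results: 400 of 4,000 visitors converted on A, 480 of 4,000 on B.
result = compare_variants(400, 4_000, 480, 4_000)
print(f"lift: {result['relative_lift']:.1%}, p-value: {result['p_value']:.4f}")
if result["p_value"] < 0.05:
    print("Difference is statistically significant at the 95% confidence level")
else:
    print("Inconclusive: keep testing or revisit the hypothesis")
```

A p-value below 0.05 corresponds to the commonly used 95% confidence level; above it, the test is best treated as inconclusive rather than as a loss for the variation.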

Conclusion

Now that you have a clear idea about the steps involved in A/B testing, you can start immediately. Find your pain points, create your hypothesized versions, and implement them to measure the difference between them.
