This article is a translation of コンテナで始めるモニタリング基盤構築

This is the day-17 article for Makuake Advent Calendar 2021.

I joined the company three years ago, and this is my third time taking part in its Advent calendar.

For the past year I have belonged to the Re-Architecture team, which develops and operates Makuake's service infrastructure, and I have been struggling in various ways.

I expect to keep struggling with one thing or another next year as well.

The Re-Architecture team is hiring:

[Go/Microservices] Hiring engineers for the Re-Architecture team, which is renewing the foundation of "Makuake"!

I don't think there is any problem with applying from outside Japan. Feel free to message me.

Now, my first article this year (there may be one more on the 24th) is "Building a monitoring infrastructure starting with containers".

Separately from my main job, I had been trying various things to build a comfortable container-based monitoring platform for an application I made as a hobby, so I thought I would share what I learned along the way (not that it is much).

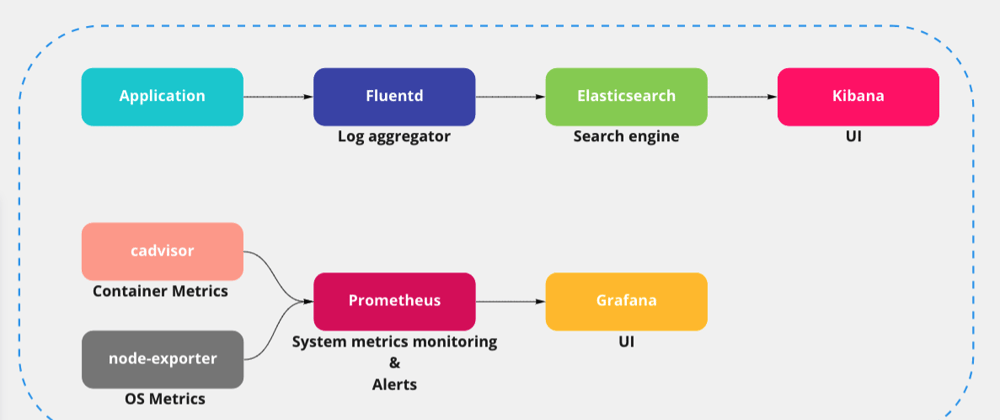

Monitoring infrastructure configuration

The applications that make up the monitoring platform to be built this time are as follows.

- Elasticsearch
  - Search engine. Stores the application logs. The application that emits the logs is a simple one written in Go.
- Fluentd
  - Log collector. Receives the application logs and forwards them to Elasticsearch.
  - I could have used the ELK stack (Logstash instead of Fluentd) rather than EFK, but I chose Fluentd because I am familiar with it.
- Kibana
  - Data search/visualization/analysis UI. Visualizes the application logs.
- Grafana
  - Like Kibana, a UI for data. It is used here to visualize system metrics.
  - It can also visualize application logs, but Kibana handles those in this setup.
- Prometheus
  - System metrics monitoring tool. Collects system metrics in conjunction with node-exporter and cadvisor. The collected data is visualized in Grafana.
- node-exporter
  - Collects OS metrics.
- cadvisor
  - Collects container metrics.

I kept the configuration rough, aiming it at people who just want to build the stack and play with it for now.

Build these applications with docker-compose.

Monitoring infrastructure construction

The full implementation is in bmf-san/docker-based-monitoring-stack-boilerplate.

After cloning, just create .env and run docker-compose up, and you can try it right away.

Note that cadvisor does not start on an M1 Mac, so container metrics cannot be collected there. It has been confirmed to work on an Intel Mac and on Ubuntu.

The directory structure is as follows.

.
├── app
│   ├── Dockerfile
│   ├── go.mod
│   └── main.go
├── cadvisor
│   └── Dockerfile
├── docker-compose.yml
├── elasticsearch
│   └── Dockerfile
├── .env.example
├── fluentd
│   ├── Dockerfile
│   └── config
│       └── fluent.conf
├── grafana
│   ├── Dockerfile
│   └── provisioning
│       ├── dashboards
│       │   ├── containor_monitoring.json
│       │   ├── dashboard.yml
│       │   └── node-exporter-full_rev21.json
│       └── datasources
│           └── datasource.yml
├── kibana
│   ├── Dockerfile
│   └── config
│       └── kibana.yml
├── node-exporter
│   └── Dockerfile
└── prometheus
    ├── Dockerfile
    └── template
        └── prometheus.yml.template

I would like to explain one by one.

app

First, create a throwaway application container that does nothing but emit logs.

├── app
│   ├── Dockerfile
│   ├── go.mod
│   └── main.go

The application looks like this. It's a server that just spits out a log saying "OK" and responds with "Hello World".

package main

import (
	"fmt"
	"log"
	"net/http"
)

func main() {
	mux := http.NewServeMux()
	// Log "OK" for every request and reply with "Hello World".
	mux.Handle("/", http.HandlerFunc(func(w http.ResponseWriter, r *http.Request) {
		w.WriteHeader(http.StatusOK)
		log.Println("OK")
		fmt.Fprint(w, "Hello World")
	}))
	// Exit with an error message if the server cannot start or stops.
	log.Fatal(http.ListenAndServe(":8080", mux))
}

The Dockerfile is as simple as building the source and running the binaries, with no special notes.

docker-compose.yml has the following form.

version: '3.9'
services:
  app:
    container_name: "${APP_CONTAINER_NAME}"
    environment:
      - APP_IMAGE_NAME=${APP_IMAGE_NAME}
      - APP_IMAGE_TAG=${APP_IMAGE_TAG}
      - ALPINE_IMAGE_NAME=${ALPINE_IMAGE_NAME}
    build:
      context: "./app"
      dockerfile: "Dockerfile"
      args:
        PLATFORM: "${PLATFORM}"
        APP_IMAGE_NAME: "${APP_IMAGE_NAME}"
        APP_IMAGE_TAG: "${APP_IMAGE_TAG}"
        ALPINE_IMAGE_NAME: "${ALPINE_IMAGE_NAME}"
    ports:
      - ${APP_HOST_PORT}:${APP_CONTAINER_PORT}
    command: ./app
    # Send the container's stdout/stderr to fluentd.
    logging:
      driver: "fluentd"
      options:
        fluentd-address: ${FLUENTD_ADDRESS}
        fluentd-async-connect: "true"
        tag: "${APP_LOGGING_TAG}"

Specify fluentd as the logging driver and transfer the logs to fluentd.

fluentd-async-connect makes Docker connect to fluentd in the background and buffer logs until the connection is established. With "true", the container can start and keep buffering its logs even when fluentd is not reachable yet.

fluentd

This section describes the fluentd container that is the log transfer destination of the application.

├── fluentd
│   ├── Dockerfile
│   └── config
│       └── fluent.conf

The Dockerfile is as follows.

ARG FLUENTD_IMAGE_NAME=${FLUENTD_IMAGE_NAME}
ARG FLUENTD_IMAGE_TAG=${FLUENTD_IMAGE_TAG}
ARG PLATFORM=${PLATFORM}

FROM --platform=${PLATFORM} ${FLUENTD_IMAGE_NAME}:${FLUENTD_IMAGE_TAG}

USER root
RUN gem install fluent-plugin-elasticsearch
USER fluent

The only gem used in fluentd is fluent-plugin-elasticsearch for working with elasticsearch.

USER is switched to root so that the gem can be installed, then switched back to fluent, because the fluentd image runs as the fluent user.

Configure fluent.conf as follows.

<source>
  @type forward
  port "#{ENV['FLUENTD_CONTAINER_PORT']}"
  bind 0.0.0.0
</source>

<match "#{ENV['APP_LOGGING_TAG']}">
  @type copy
  <store>
    @type elasticsearch
    host elasticsearch
    port "#{ENV['ELASTICSEARCH_CONTAINER_PORT']}"
    user "#{ENV['ELASTICSEARCH_ELASTIC_USERNAME']}"
    password "#{ENV['ELASTICSEARCH_ELASTIC_PASSWORD']}"
    logstash_format true
    logstash_prefix "#{ENV['FLUENTD_LOGSTASH_PREFIX_APP']}"
    logstash_dateformat %Y%m%d
    include_tag_key true
    type_name "#{ENV['FLUENTD_TYPE_NAME_APP']}"
    tag_key @log_name
    flush_interval 1s
  </store>
</match>

In the fluentd conf, environment variables can be embedded inside double-quoted strings in the form "#{ENV['NAME']}", which is convenient because no extra tool such as envsubst is needed.

I will omit docker-compose.yml because there are no special notes.

elasticsearch

Elasticsearch is set to start on a single node.

There is nothing else to mention, so I will omit the details.

├── elasticsearch
│   └── Dockerfile

kibana

Next, let's talk about kibana, which visualizes application logs.

Since there are no special notes about Dockerfile, I will omit the description and explain from kibana's conf.

server.name: kibana
server.host: "0"
elasticsearch.hosts: [ "http://${ELASTICSEARCH_CONTAINER_NAME}:${ELASTICSEARCH_CONTAINER_PORT}" ]
xpack.monitoring.ui.container.elasticsearch.enabled: true
elasticsearch.username: ${ELASTICSEARCH_ELASTIC_USERNAME}
elasticsearch.password: ${ELASTICSEARCH_ELASTIC_PASSWORD}

xpack.monitoring.ui.container.elasticsearch.enabled is an option that should be enabled if elasticsearch is running in the container.

There are no special notes about kibana's docker-compose.yml, so I will omit it.

node-exporter & cadvisor

For node-exporter and cadvisor, the only things to watch are the directories to mount and the startup options, so I will omit the explanation.

prometheus

Next is prometheus.

I wanted to write a prometheus configuration file using envsubst, so I made the Dockerfile as follows.

ARG ALPINE_IMAGE_NAME=${ALPINE_IMAGE_NAME}
ARG ALPINE_IMAGE_TAG=${ALPINE_IMAGE_TAG}
ARG PROMETHEUS_IMAGE_NAME=${PROMETHEUS_IMAGE_NAME}
ARG PROMETHEUS_IMAGE_TAG=${PROMETHEUS_IMAGE_TAG}
ARG PLATFORM=${PLATFORM}

# This stage only provides the prometheus binary.
FROM --platform=${PLATFORM} ${PROMETHEUS_IMAGE_NAME}:${PROMETHEUS_IMAGE_TAG} AS build-stage

FROM --platform=${PLATFORM} ${ALPINE_IMAGE_NAME}:${ALPINE_IMAGE_TAG}
# gettext provides envsubst.
RUN apk add gettext
COPY --from=build-stage /bin/prometheus /bin/prometheus
RUN mkdir -p /prometheus /etc/prometheus \
    && chown -R nobody:nogroup /etc/prometheus /prometheus
COPY ./template/prometheus.yml.template /template/prometheus.yml.template
USER nobody
VOLUME [ "/prometheus" ]
WORKDIR /prometheus

docker-compose.yml looks like this:

  prometheus:
    container_name: "${PROMETHEUS_CONTAINER_NAME}"
    environment:
      - PROMETHEUS_IMAGE_NAME=${PROMETHEUS_IMAGE_NAME}
      - PROMETHEUS_IMAGE_TAG=${PROMETHEUS_IMAGE_TAG}
      - PROMETHEUS_CONTAINER_NAME=${PROMETHEUS_CONTAINER_NAME}
      - PROMETHEUS_CONTAINER_PORT=${PROMETHEUS_CONTAINER_PORT}
      - CADVISOR_CONTAINER_NAME=${CADVISOR_CONTAINER_NAME}
      - CADVISOR_CONTAINER_PORT=${CADVISOR_CONTAINER_PORT}
      - NODE_EXPORTER_CONTAINER_NAME=${NODE_EXPORTER_CONTAINER_NAME}
      - NODE_EXPORTER_CONTAINER_PORT=${NODE_EXPORTER_CONTAINER_PORT}
    build:
      context: "./prometheus"
      dockerfile: "Dockerfile"
      args:
        PLATFORM: "${PLATFORM}"
        PROMETHEUS_IMAGE_NAME: "${PROMETHEUS_IMAGE_NAME}"
        PROMETHEUS_IMAGE_TAG: "${PROMETHEUS_IMAGE_TAG}"
        ALPINE_IMAGE_NAME: "${ALPINE_IMAGE_NAME}"
        ALPINE_IMAGE_TAG: "${ALPINE_IMAGE_TAG}"
    ports:
      - ${PROMETHEUS_HOST_PORT}:${PROMETHEUS_CONTAINER_PORT}
    command:
      - /bin/sh
      - -c
      - |
        envsubst < /template/prometheus.yml.template > /etc/prometheus/prometheus.yml
        /bin/prometheus \
        --config.file=/etc/prometheus/prometheus.yml \
        --storage.tsdb.path=/prometheus
    restart: always
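What the envsubst line in the command does can be pictured in Go with os.Expand from the standard library, which replaces ${NAME} placeholders in a string. This is only an illustration of the substitution step, not something the repository contains. The render helper and the sample values (cadvisor, 8080) are assumptions made for the example.

```go
package main

import (
	"fmt"
	"os"
)

// tmpl is a shortened stand-in for prometheus.yml.template.
const tmpl = `scrape_configs:
  - job_name: 'cadvisor'
    static_configs:
      - targets:
        - ${CADVISOR_CONTAINER_NAME}:${CADVISOR_CONTAINER_PORT}
`

// render replaces every ${NAME} in t with vars[NAME].
// Names missing from vars become empty strings.
func render(t string, vars map[string]string) string {
	return os.Expand(t, func(name string) string { return vars[name] })
}

func main() {
	// Sample values; in the container they come from the environment.
	vars := map[string]string{
		"CADVISOR_CONTAINER_NAME": "cadvisor",
		"CADVISOR_CONTAINER_PORT": "8080",
	}
	fmt.Print(render(tmpl, vars))
}
```

Because the substitution happens when the container starts rather than at build time, changing a container name or port in .env only requires restarting the container, not rebuilding the image.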

The prometheus configuration file is written as follows.

scrape_configs:
  - job_name: 'prometheus'
    scrape_interval: 5s
    static_configs:
      - targets:
        - ${PROMETHEUS_CONTAINER_NAME}:${PROMETHEUS_CONTAINER_PORT}
  - job_name: 'cadvisor'
    static_configs:
      - targets:
        - ${CADVISOR_CONTAINER_NAME}:${CADVISOR_CONTAINER_PORT}
  - job_name: 'node-exporter'
    static_configs:
      - targets:
        - ${NODE_EXPORTER_CONTAINER_NAME}:${NODE_EXPORTER_CONTAINER_PORT}

It only lists the job names and the targets to scrape. If you want alert notifications through Alertmanager, the Alertmanager settings also go in this configuration file.
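Once the stack is running, one way to confirm that the three jobs are actually being scraped is to ask the Prometheus HTTP API for the built-in up metric, which is 1 for a target whose last scrape succeeded. The following is a rough sketch, assuming Prometheus is published on localhost:9090 as in .env.example. parseUp and queryResponse are hypothetical names introduced for the example.

```go
package main

import (
	"encoding/json"
	"fmt"
	"io"
	"net/http"
)

// queryResponse covers only the fields of an instant-query response
// that this sketch needs.
type queryResponse struct {
	Status string `json:"status"`
	Data   struct {
		Result []struct {
			Metric map[string]string `json:"metric"`
			Value  []interface{}     `json:"value"` // [timestamp, "sample"]
		} `json:"result"`
	} `json:"data"`
}

// parseUp maps each job label to true when its up sample is "1".
func parseUp(body []byte) (map[string]bool, error) {
	var r queryResponse
	if err := json.Unmarshal(body, &r); err != nil {
		return nil, err
	}
	if r.Status != "success" {
		return nil, fmt.Errorf("query failed: status %q", r.Status)
	}
	up := make(map[string]bool)
	for _, s := range r.Data.Result {
		v, ok := "", false
		if len(s.Value) == 2 {
			v, ok = s.Value[1].(string)
		}
		up[s.Metric["job"]] = ok && v == "1"
	}
	return up, nil
}

func main() {
	// Assumed address; adjust to PROMETHEUS_HOST_PORT in your .env.
	resp, err := http.Get("http://localhost:9090/api/v1/query?query=up")
	if err != nil {
		fmt.Println("prometheus not reachable:", err)
		return
	}
	defer resp.Body.Close()
	body, err := io.ReadAll(resp.Body)
	if err != nil {
		fmt.Println("read failed:", err)
		return
	}
	up, err := parseUp(body)
	if err != nil {
		fmt.Println("parse failed:", err)
		return
	}
	for job, ok := range up {
		fmt.Printf("%s up=%v\n", job, ok)
	}
}
```

On an M1 Mac, where cadvisor does not start, the cadvisor job would be expected to report up=false.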

grafana

Finally, grafana.

docker-compose.yml looks like this:

  grafana:
    container_name: "${GRAFANA_CONTAINER_NAME}"
    environment:
      - GF_SECURITY_ADMIN_USER=${GF_SECURITY_ADMIN_USER}
      - GF_SECURITY_ADMIN_PASSWORD=${GF_SECURITY_ADMIN_PASSWORD}
      # No quotes here: in the list form they would become part of the value.
      - GF_USERS_ALLOW_SIGN_UP=${GF_USERS_ALLOW_SIGN_UP}
      - GF_USERS_ALLOW_ORG_CREATE=${GF_USERS_ALLOW_ORG_CREATE}
      - DS_PROMETHEUS=${DS_PROMETHEUS}
    build:
      context: "./grafana"
      dockerfile: "Dockerfile"
      args:
        PLATFORM: "${PLATFORM}"
        GRAFANA_IMAGE_NAME: "${GRAFANA_IMAGE_NAME}"
        GRAFANA_IMAGE_TAG: "${GRAFANA_IMAGE_TAG}"
    volumes:
      - ./grafana/provisioning:/etc/grafana/provisioning
    ports:
      - ${GRAFANA_HOST_PORT}:${GRAFANA_CONTAINER_PORT}
    restart: always

provisioning is the directory where you put the files used for provisioning data sources and dashboards.

Since prometheus is used as the data source, its settings are described in datasources/datasource.yml.

apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    orgId: 1
    url: http://prometheus:9090
    basicAuth: false
    isDefault: true
    editable: true

The dashboards directory holds the dashboard configuration files for container metrics and OS metrics.

You can use the dashboard configuration file published at grafana.com - grafana/dashboards.

Assembling a dashboard from scratch can be a daunting task, so it seems good to find a base and adjust it.

There are quite a lot of things that are open to the public, so it's interesting to touch them.

Start-up

Most of the settings are configured so that they can be adjusted with environment variables.

After copying .env.example in bmf-san/docker-based-monitoring-stack-boilerplate to .env, you can start everything with docker-compose up.

In the setting of .env.example, the port number is assigned as follows.

| Application | URL |
|---|---|
| app | http://localhost:8080/ |
| prometheus | http://localhost:9090/graph |
| node-exporter | http://localhost:9100/ |
| mysqld-exporter | http://localhost:9104/ |
| grafana | http://localhost:3000/ |
| kibana | http://0.0.0.0:5601/ |

Summary

I think it was relatively easy to build (probably thanks to containers).

Each application has a deep architecture, so it would be interesting to dig into how each one works once you have tried it.

I haven't actually put this into operation yet, so I'd like to do so soon.
