Greetings, Indie Hackers!

I've noticed there isn't much discussion here about bot protection and the effects bots can have on a website.

A website with weak or no bot protection is at high risk of suffering serious damage from malicious bot traffic.

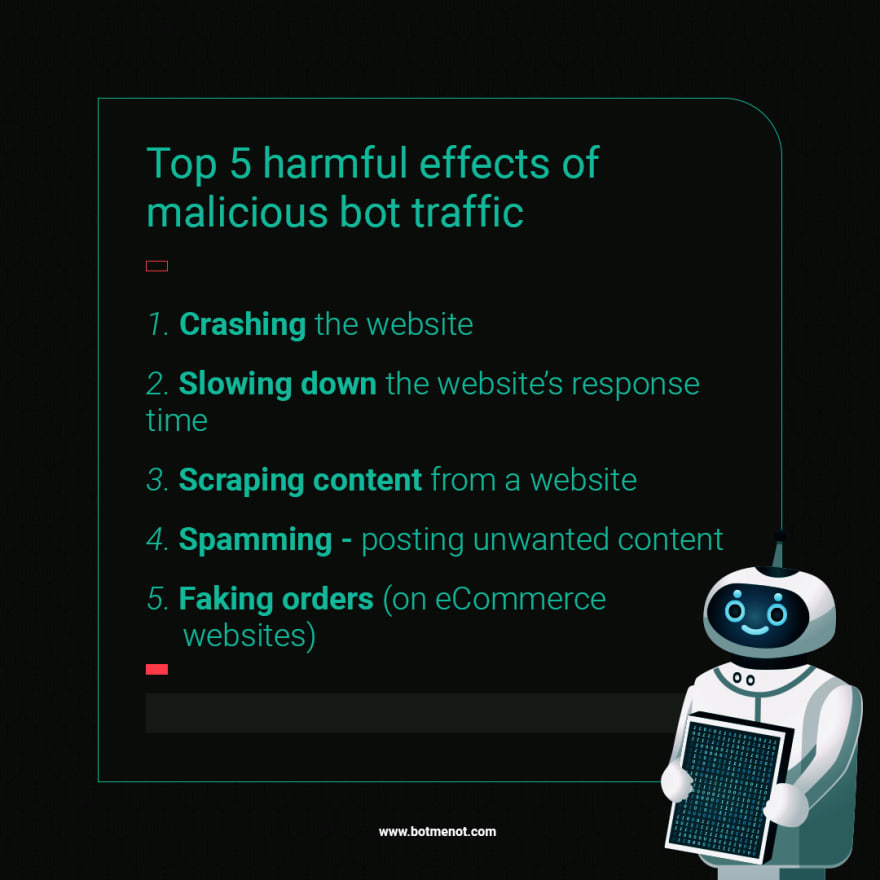

The most harmful effects that bot traffic can have on a website are:

Crashing the website - when a website crashes, its services become unavailable to its intended users. This most often happens when the website is the target of a DDoS (Distributed Denial of Service) attack. These attacks work by flooding a website with far more requests than it can handle.

Slowing down the website's response time - in a way, this is a milder version of a complete crash. However, since users have little patience for slow pages, it is still a real and serious threat.

Scraping content - bots scrape websites for many purposes, such as harvesting personal data, monitoring prices, or copying content for plagiarism. All of these (and more) are real risks for unprotected websites.

Spamming - many bots are built to post promotional or other unwanted content on websites. Some mimic human behavior so well that they are difficult to detect.

Faking orders - this is especially relevant for eCommerce websites. In most cases, the goal is to check a product's stock level. The downside is that the product can become temporarily unavailable to people who genuinely want to buy it.
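One common first line of defense against request floods and rapid-fire fake orders is per-client rate limiting. Below is a minimal in-memory sliding-window limiter in Python, offered only as an illustration. The class name, the idea of keying clients by IP address, and the limits are hypothetical choices; this is not how botmenot.com or any particular product works. On its own it will not stop a DDoS, because a distributed attack spreads requests across many clients. A real deployment would also need to evict idle clients and share state across servers.

```python
from __future__ import annotations

import time
from collections import defaultdict, deque


class SlidingWindowRateLimiter:
    """Hypothetical example: allow at most `max_requests` per client
    within any rolling window of `window_seconds`."""

    def __init__(self, max_requests: int, window_seconds: float) -> None:
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        # client id (e.g. an IP address) -> timestamps of accepted requests.
        # Note: entries are never evicted here; a real service would need to.
        self._hits: dict[str, deque[float]] = defaultdict(deque)

    def allow(self, client_id: str, now: float | None = None) -> bool:
        """Return True if the request is within the limit, else False."""
        if now is None:
            now = time.monotonic()
        hits = self._hits[client_id]
        # Forget requests that have slid out of the current window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # over the limit: reject (e.g. respond with HTTP 429)
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowRateLimiter(max_requests=3, window_seconds=1.0)
    # Five requests from one documentation-range IP at fixed timestamps.
    results = [limiter.allow("203.0.113.7", now=t) for t in (0.0, 0.1, 0.2, 0.3, 1.05)]
    print(results)  # [True, True, True, False, True]
```

The fourth request is rejected because three were already accepted within the last second. By 1.05 s the first one has aged out of the window, so the fifth is allowed. A sliding window avoids the burst that a fixed per-second counter permits at window boundaries, at the cost of storing one timestamp per accepted request.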

Interested in learning more about bot protection, or in checking for yourself how well a website is protected against bots?

Make sure to check out https://botmenot.com/
