In this series, we will first discuss the building blocks of Frontend System Design. In an interview, you may be asked to design an application you have never seen before, so it's important to know the building blocks that let you design any system.

Today we are going to discuss Realtime Updates.

What are Realtime Updates?

Realtime updates mean that we need to update the UI in realtime, near realtime, or periodically. It is usually required in:

Chat Applications

Online Games

Realtime Auction

Collaborative Tools like Figma, Whiteboard

Some Important Things to Discuss First

Pull/Push Strategies

Pull is when the client requests updates from the server; basically, the client is the initiator here.

Push is when the server sends updates to the client; basically, the server is the initiator here.

Comet

Comet is an umbrella term for the technologies and techniques used to hold an HTTP connection open for a long time and push messages from the server to the client. It includes Server-Sent Events (SSE), long polling, and others.

Should we use WebSockets everywhere?

Here are some typical myths that are not entirely true:

Short polling: very ineffective, no need to use it

Long polling: we only need to use it when the browser does not support WebSockets

Server-Sent Events: nobody uses them

WebSockets: very effective since they provide a bi-directional connection

Well, WebSockets are designed for bi-directional communication and should be used for specific use cases. We cannot just reach for them every time we need realtime updates.

Reason: It might not be wise to do so. Designing an application is not just about efficiency, it's also about trade-offs. We can't just go and implement WebSockets without thinking about the cost of development and the resources it will consume.

Examples that provoke some deep thought

LinkedIn uses Server-Sent Events for instant messaging, but why doesn't it use WebSockets?

StackOverflow uses WebSockets to update the UI, basically adding new posts to the feed. We don't need a bi-directional connection here, so why didn't they use Server-Sent Events or polling?

How is it important for interviews?

In an interview where we are asked to design an application, what matters is not so much which solution we choose as why we choose it, keeping in mind the business needs and the resources available. It is important to know all the options and their respective trade-offs, and only then arrive at the best solution.

Short Intro to Scalability

Scalability is simply the ability of a solution to handle more requests or workload by using more resources.

There are two types of scaling:

Horizontal Scaling: where you add more machines and distribute the requests among them

Vertical Scaling: where you increase the resources of a single machine, like CPU, RAM, etc.

The component in the system that distributes the incoming traffic is known as the load balancer. Simply put, it figures out which server to send each request to, based on various metrics like CPU load, etc.

Stateful vs Stateless server architecture

Stateful server architecture is where the user's data, like session data and login data, is stored on the server itself. The problem with this is that once the server goes down, the data is lost. It also causes scalability issues, since routing the request to another server does not bring the user's data along with it.

Stateless architecture is where the servers share a common cache and storage. Even when one of the servers goes down, the data persists, and any server can handle any request, which makes it scalable.

Short Polling

Definition:

Short polling is when the client (basically the browser) periodically asks the server for updates, for example every 4 seconds.
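A minimal TypeScript sketch of such a polling loop is shown here. The names `startShortPolling`, `fetchLatest`, and `onData` are hypothetical, and the request itself is passed in as a function (in a browser it would typically wrap `fetch`), so treat this as an illustration under those assumptions rather than a definitive implementation.

```typescript
// Hypothetical helper: calls `fetchLatest` every `intervalMs` until stopped.
function startShortPolling<T>(
  fetchLatest: () => Promise<T>,
  onData: (data: T) => void,
  intervalMs: number,
): () => void {
  let stopped = false;
  let timer: ReturnType<typeof setTimeout> | undefined;

  const tick = async (): Promise<void> => {
    try {
      const data = await fetchLatest(); // the client is the initiator
      if (stopped) return;
      onData(data);
    } catch (error) {
      console.error("poll failed, will retry on the next tick", error);
    }
    // Schedule the next poll only after this one has finished,
    // so slow responses never overlap.
    if (!stopped) timer = setTimeout(() => void tick(), intervalMs);
  };

  void tick();

  // The returned function stops the loop.
  return () => {
    stopped = true;
    if (timer !== undefined) clearTimeout(timer);
  };
}
```

In a browser this might be used as `startShortPolling(() => fetch("/api/price").then((r) => r.json()), render, 4000)`, where the URL and `render` are made up for the example.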

Advantages:

It does not require additional work on the server side since the client is the initiator here.

It works over HTTP.

It doesn't need a persistent connection.

Disadvantages:

It's inefficient in a lot of cases.

Misses a lot of random updates: if updates happen at irregular intervals, the client does not show them properly, since it only sends requests at fixed intervals and sees only what is current at that moment.

Makes useless requests: if updates are less frequent than the polling interval, many requests come back with nothing new.
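The two disadvantages above can be made concrete with a small simulation. This is only a sketch: `simulateShortPolling` is a hypothetical helper, the timings are invented, and it assumes the server answers each poll with just the latest value, so several updates between two polls collapse into one.

```typescript
interface PollingStats {
  requests: number;
  uselessRequests: number; // polls that found nothing new
  skippedUpdates: number; // intermediate values the client never displayed
}

// Assumption: each poll returns only the latest value.
function simulateShortPolling(
  updateTimesMs: readonly number[],
  intervalMs: number,
  durationMs: number,
): PollingStats {
  const result: PollingStats = { requests: 0, uselessRequests: 0, skippedUpdates: 0 };
  let previousPoll = 0;
  for (let now = intervalMs; now <= durationMs; now += intervalMs) {
    // Count the updates that happened since the previous poll.
    const arrived = updateTimesMs.filter((t) => t > previousPoll && t <= now).length;
    result.requests += 1;
    if (arrived === 0) result.uselessRequests += 1;
    else result.skippedUpdates += arrived - 1;
    previousPoll = now;
  }
  return result;
}

// Three quick updates, then one late update, polled every 2 seconds for 10 seconds.
const simulatedStats = simulateShortPolling([100, 200, 300, 5000], 2000, 10000);
console.log(simulatedStats);
// { requests: 5, uselessRequests: 3, skippedUpdates: 2 }
```

Out of five requests, three returned nothing new, and two of the four updates were never shown on their own.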

Where to use short polling?

No access to backend code: when we cannot change the backend code, we simply have no option other than to periodically send requests to the server.

Data only needs to be updated periodically

Data is updated at a known interval

The simplest, possibly temporary, solution is needed.

Where not to use?

Realtime updates are required

Very infrequent updates take place

How to scale a short polling system?

Suppose we have a stock app where we fetch info from a database that is regularly updated by a separate stock server. We can have our own intermediary servers fetch info from the database and send it back to the client. We might start with a single intermediary server and give it additional resources, but that may still not be enough (vertical scaling was not enough). So we increase the number of servers (horizontal scaling). Let us assume the polling interval is 2 seconds.

Now for the second request, the load balancer will use a different server.
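Here is a rough sketch of how a load balancer could spread successive polls across servers. The article does not say which balancing rule is used, so round-robin is an assumption here, and `createRoundRobin` and the server names are hypothetical.

```typescript
// Hypothetical round-robin balancer: hands out servers in rotation.
function createRoundRobin(servers: readonly string[]): () => string {
  if (servers.length === 0) throw new Error("at least one server is required");
  let nextIndex = 0;
  return () => {
    const server = servers[nextIndex % servers.length] as string;
    nextIndex += 1;
    return server;
  };
}

const pickServer = createRoundRobin(["server-a", "server-b", "server-c"]);
const firstPoll = pickServer(); // "server-a"
const secondPoll = pickServer(); // "server-b", two seconds later
```

This works for short polling because every request is independent: each intermediary server reads from the same database, so it does not matter which one answers.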

Okay, now let's move to our next topic.

What is Long Polling

Definition:

Long polling is a technique where the client sends an HTTP request and the server holds it open until an update is available. The connection persists until then, and when new data is available the response is sent. The client then immediately sends a new request.
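The request/hold/respond cycle can be modelled in memory. In this sketch `hold` stands for "a request arrived, keep it open" and calling `respond` stands for "send the HTTP response". `LongPollChannel` and `subscribeWithLongPolling` are hypothetical names, and a real implementation would also need timeouts and error handling.

```typescript
// In-memory model of the server side of long polling.
class LongPollChannel<T> {
  private waiting: Array<(update: T) => void> = [];

  // A request arrived: keep it open until there is something to send.
  hold(respond: (update: T) => void): void {
    this.waiting.push(respond);
  }

  // New data is available: answer every held request exactly once.
  publish(update: T): void {
    const held = this.waiting;
    this.waiting = [];
    for (const respond of held) respond(update);
  }

  get pendingRequests(): number {
    return this.waiting.length;
  }
}

// Client side: as soon as a response arrives, send the next request.
function subscribeWithLongPolling<T>(
  channel: LongPollChannel<T>,
  onUpdate: (update: T) => void,
): () => void {
  let active = true;
  const request = (): void => {
    channel.hold((update) => {
      if (!active) return;
      onUpdate(update);
      request();
    });
  };
  request();
  return () => {
    active = false;
  };
}
```

Note that an update published while no request is being held is simply not delivered in this model, which is one reason long polling is close to realtime rather than strictly realtime.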

Advantages:

It uses fewer resources than short polling, since requests are only answered when there is something to send.

It is close to real-time updates with minimum delay.

Disadvantages:

Adds complexity: it requires holding connections open, which adds complexity to the backend code.

Horizontal scaling is difficult

Operates over standard HTTP, so every update carries full request and response headers as overhead.

Not exactly realtime; Server-Sent Events and WebSockets are considerably better

Might require sticky sessions, which are detrimental to scalability

When to use Long Polling

Realtime updates are required for old browsers. Long polling is widely supported in older browsers since under the hood it is just XMLHttpRequest. Many WebSocket and SSE libraries use it as a fallback for old browsers. It also lets you send custom request headers, which the browser's EventSource API for SSE does not.

Messages from the server are highly infrequent.

When to avoid using it

An efficient realtime bi-directional connection is required. In that case, we need to switch to WS or SSE for minimum latency.

We don't have enough infrastructure to support too many persistent connections.

HTTP overhead can be a problem, in which case it is better to shift to SSE.

Problems that need further understanding

Unnecessary Delays

When the client sends a request to the server, the first update is sent as the response. But what if a new update occurs at the same moment? It can't be sent in the first response, so it has to wait for another request from the client before it can be sent.

This can be solved using WebSockets or SSE.

Problems with scaling: Suppose we are using a stateful architecture and showing the progress percentage of some task on the client. The client sent its request to a specific server, and that server sends back the progress percentage, which is stored on that server only. If the load balancer routes the next request to a different server, that server does not know the progress.

Solutions for issues in scalability

Using sticky sessions: here we keep a mapping of client to server so that the load balancer knows which server each request needs to be routed to.

Simply using a stateless architecture
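The first option, a client-to-server mapping, could be sketched roughly like this. `createStickyBalancer` is a hypothetical name, and assigning new clients in rotation is an assumption; real load balancers typically key on a cookie or the client IP.

```typescript
// Hypothetical sticky balancer: remembers which server each client was given.
function createStickyBalancer(servers: readonly string[]): (clientId: string) => string {
  if (servers.length === 0) throw new Error("at least one server is required");
  const assignments = new Map<string, string>();
  let nextIndex = 0;
  return (clientId) => {
    const existing = assignments.get(clientId);
    if (existing !== undefined) return existing; // same client, same server
    const server = servers[nextIndex % servers.length] as string;
    nextIndex += 1;
    assignments.set(clientId, server);
    return server;
  };
}

const routeRequest = createStickyBalancer(["server-1", "server-2"]);
const aliceFirst = routeRequest("alice"); // "server-1"
const bobFirst = routeRequest("bob"); // "server-2"
const aliceAgain = routeRequest("alice"); // "server-1" again
```

The mapping itself is state that lives in the load balancer, which is part of why sticky sessions limit how freely traffic can be redistributed.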

Sticky session vs shared storage

Sticky sessions are mainly needed when we need a stateful architecture, and they can be useful since:

It's easy to implement

It is good for short-lived or non-critical data such as file uploads

But it can be a problem since:

It leads to uneven distribution of connections between servers and clients

The data is still stored on the server, and if the server goes down, the data is lost.

It is vulnerable to attacks, since an attacker can trick the load balancer into routing requests to only one server

Hence it might be a good idea to switch to shared storage. It is harder to implement, but it is quite useful since:

It has no impact on the load balancer

The data is stored in shared storage and hence does not get lost

Great for critical or long-lived data
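Applied to the progress example from earlier, shared storage might look roughly like the following. `SharedProgressStore` is an in-memory stand-in for a real shared cache or database, and all names and the task ID are hypothetical.

```typescript
// Stand-in for a shared cache or database reachable from every server.
class SharedProgressStore {
  private progressByTask = new Map<string, number>();

  save(taskId: string, percent: number): void {
    this.progressByTask.set(taskId, percent);
  }

  load(taskId: string): number | undefined {
    return this.progressByTask.get(taskId);
  }
}

// A stateless server keeps nothing locally; it only talks to the store.
function createStatelessServer(store: SharedProgressStore) {
  return {
    reportProgress: (taskId: string, percent: number): void => store.save(taskId, percent),
    readProgress: (taskId: string): number | undefined => store.load(taskId),
  };
}

const sharedStore = new SharedProgressStore();
const serverOne = createStatelessServer(sharedStore);
const serverTwo = createStatelessServer(sharedStore);

serverOne.reportProgress("upload-42", 40);
const progressSeenByServerTwo = serverTwo.readProgress("upload-42"); // 40
```

Because either server can answer, the load balancer is free to route each request anywhere, and losing one server does not lose the progress value.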

Server-Sent Events

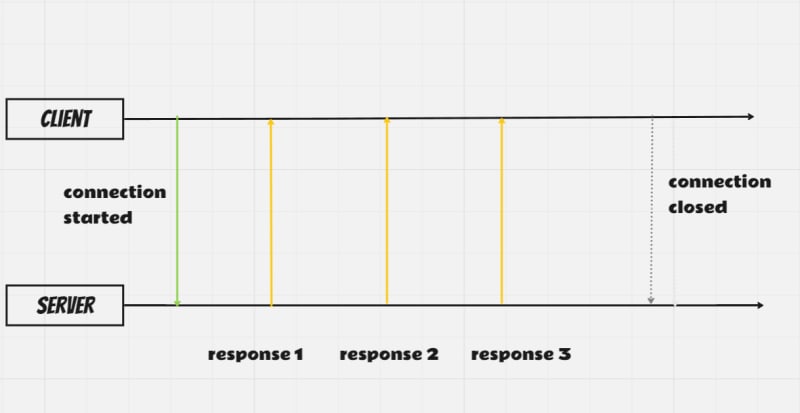

Definition: It is an implementation of Comet where the client establishes a connection with the server and the server sends messages whenever necessary while the connection is active. It is a push technology where the server is the initiator.

Note that short polling and long polling are merely hacks, whereas this is an actual standardized technology.

It is used for realtime notifications like news and social feeds, realtime updates like sporting events, progress updates, and monitoring server statistics like uptime, status, and other running processes.
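On the client, SSE is consumed through the browser's `EventSource` API. The sketch below depends only on a small `EventSourceLike` interface so it can also run outside a browser. `listenForScores`, the `score` event name, and the `/scores` URL are assumptions made for the example.

```typescript
// Minimal shape of the browser's EventSource that this sketch relies on.
interface EventSourceLike {
  addEventListener(type: string, listener: (event: { data: string }) => void): void;
  close(): void;
}

// Hypothetical helper: listens for named "score" events and parses their JSON payload.
function listenForScores(
  source: EventSourceLike,
  onScore: (score: unknown) => void,
): () => void {
  source.addEventListener("score", (event) => {
    onScore(JSON.parse(event.data));
  });
  // Closing the source ends the connection and stops automatic reconnection.
  return () => source.close();
}

// In a browser (the URL is an assumption):
//   const stopListening = listenForScores(new EventSource("/scores"), console.log);
```

There is no polling loop here: the server pushes and the listener fires, which is the main practical difference from the two polling approaches.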

Advantages:

It provides realtime updates.

It is compatible with most proxy servers, load balancers, and so on due to working over plain HTTP.

It has low per-message overhead: each message carries only a few bytes of framing, instead of a full set of HTTP headers as with polling.

It supports various features such as automatic reconnection, event names, and event IDs.

When used over HTTP/2, it benefits from multiplexing, which allows multiple SSE connections to share a single TCP connection.

It allows connectionless push, which can save battery power on mobile devices.

It uses plain HTTP, so it can be polyfilled with vanilla JavaScript.
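The event names and event IDs mentioned in the list above are plain text fields on the wire. Here is a sketch of a server-side formatter for the `text/event-stream` format, where `formatSseEvent` and the sample values are hypothetical:

```typescript
interface SseEvent {
  id?: string; // lets the client resume after a reconnect
  event?: string; // event name; clients see "message" when it is omitted
  data: string;
}

// Serializes one event: "field: value" lines, terminated by a blank line.
function formatSseEvent(sseEvent: SseEvent): string {
  const lines: string[] = [];
  if (sseEvent.id !== undefined) lines.push(`id: ${sseEvent.id}`);
  if (sseEvent.event !== undefined) lines.push(`event: ${sseEvent.event}`);
  // Multi-line payloads are sent as one "data:" line per line of text.
  for (const line of sseEvent.data.split("\n")) lines.push(`data: ${line}`);
  return lines.join("\n") + "\n\n";
}

const wireFormat = formatSseEvent({ id: "7", event: "score", data: "2-1" });
// "id: 7\nevent: score\ndata: 2-1\n\n"
```

When the connection drops, the browser reconnects on its own and sends the last seen ID in the `Last-Event-ID` request header, so the server can continue from there.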

Disadvantages:

It requires a persistent connection.

It requires additional server-side implementation.

It has less browser support compared to polling and WebSockets.

Its traffic is less efficient compared to WebSockets.

For cross-domain connections, appropriate CORS settings are required.

It is limited to 6 concurrent connections per browser per domain on HTTP versions below 2; for more connections, an upgrade to HTTP/2 is required.

When to use SSE

A unidirectional channel is required for realtime updates and browser support is not an issue.

Messages from the server are quite frequent, so shifting from long polling to SSE is a good idea to avoid HTTP overhead.

Battery life is a problem: connectionless push is supported in SSE. As noted, SSE requires a persistent connection, which can be detrimental to the battery life of the device. To avoid that, SSE allows the device to go into sleep mode between messages, which can be an advantage over WS.

When not to use SSE

A bi-directional connection is required.

The server sends messages too frequently.

In either case, it is better to switch to WebSockets.

Something to Notice before moving to the final topic

WebSockets

Definition:

It is a bi-directional channel which allows communication in both directions.

It is mainly used in chat apps, multiplayer games, collab tools like Figma, and so on.

Advantages:

It is bi-directional.

It provides lower latency and lower overhead for sending messages, since after the initial HTTP handshake WS runs on its own protocol with lightweight framing.

It has better browser support compared to SSE.

It supports binary data, so payload size can be reduced compared to text-only approaches, and you can also transfer binary content such as images.

It allows clients to connect to the server from another domain without the trouble of CORS (the server should still validate the Origin header).

Disadvantages:

It is complex to implement and maintain.

It runs on a different protocol (WS, and WSS for secure WebSockets). This can cause problems with some firewalls, proxy servers, and load balancers, which were originally designed to work with HTTP.

It does not support HTTP features such as multiplexing.

Its browser support is worse than polling.

Horizontal scaling is problematic.

When should we use it

A bidirectional channel is required.

Maximal efficiency is required: we can emulate a bidirectional connection using SSE plus HTTP requests (or long polling plus HTTP requests), but in the best case we should use WebSockets for their low latency and low overhead.

When to avoid using it

Bidirectional communication is not required, for example when only near-realtime updates are needed.

The infrastructure is not ready for the WS protocol; in that case, it's better to use SSE + HTTP requests.

We need the features of HTTP. After the initial handshake, WS does not use HTTP and hence cuts off the features it provides.

Risks are not planned out. WS uses a different protocol, but the entire IT architecture might be built around HTTP, and hence it might cause unpredicted problems.

Problems we can face

Scaling: the diagram pretty much describes it.

Need to share updates between servers: the solution to this is publish/subscribe (pub/sub), as explained by the diagram.

We are simply cutting off features HTTP would have given us.
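The pub/sub idea from the list above can be sketched in memory as follows. `PubSub` is a stand-in for an external message broker shared by all servers, and `ChatServer`, the topic name, and the callbacks are hypothetical. The point is only that a message received by one server reaches clients connected to another.

```typescript
type Listener<T> = (message: T) => void;

// Stand-in for an external message broker shared by all servers.
class PubSub<T> {
  private listenersByTopic = new Map<string, Set<Listener<T>>>();

  subscribe(topic: string, listener: Listener<T>): void {
    const listeners = this.listenersByTopic.get(topic) ?? new Set<Listener<T>>();
    listeners.add(listener);
    this.listenersByTopic.set(topic, listeners);
  }

  publish(topic: string, message: T): void {
    this.listenersByTopic.get(topic)?.forEach((listener) => listener(message));
  }
}

// Each WebSocket server forwards broker messages to its own connected clients.
class ChatServer {
  private clients: Array<(text: string) => void> = [];
  private broker: PubSub<string>;

  constructor(broker: PubSub<string>) {
    this.broker = broker;
    broker.subscribe("room:general", (text) => {
      this.clients.forEach((send) => send(text));
    });
  }

  // `send` stands for "write to this client's WebSocket".
  connect(send: (text: string) => void): void {
    this.clients.push(send);
  }

  // A message from a client is published to the broker, not delivered directly.
  receive(text: string): void {
    this.broker.publish("room:general", text);
  }
}
```

With two `ChatServer` instances sharing one `PubSub`, a message received by the first is delivered to clients of both.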

For example:

Chat app: we know a chat app offers multiple features like chats, group chats, status checks, and so on. We might need multiple WS connections for the different kinds of updates, or we can add an additional field to each message to distinguish it from other messages. The first way can be more reliable and easier to implement and maintain but can be very inefficient; the second way, on the other hand, adds overhead to every message.

Infrastructure: most IT infrastructure uses proxy servers or load balancers, which may simply not allow connections over unfamiliar or insecure protocols to reach the server. In that case, we need the encrypted, secure WS protocol (WSS) to pass through.
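For the chat example above, the single-connection option with an extra field could look roughly like this. The message shapes, the `type` field name, and `describeMessage` are assumptions made for illustration.

```typescript
// Hypothetical message shapes for a chat app sharing one connection.
type ChatMessage =
  | { type: "chat"; from: string; text: string }
  | { type: "status"; user: string; online: boolean };

// Routes a raw WebSocket payload based on its `type` field.
function describeMessage(raw: string): string {
  const message = JSON.parse(raw) as ChatMessage;
  switch (message.type) {
    case "chat":
      return `${message.from}: ${message.text}`;
    case "status":
      return `${message.user} is ${message.online ? "online" : "offline"}`;
    default:
      // Payloads from newer or unknown senders end up here at runtime.
      return "unknown message type";
  }
}

const chatLine = describeMessage('{"type":"chat","from":"ann","text":"hi"}');
// "ann: hi"
```

Every message now pays for the `type` field and a dispatch step, which is the per-message cost of sharing one connection.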

Authentication: using cookies for auth is not suitable for all applications, and the browser WebSocket API does not support sending a token in the Authorization header, unlike regular HTTP. So let's see how we can solve this:

III) Send Auth token with the first message

The server checks the token present in the first message and, if it is invalid, closes the connection. It is more secure than the first approach and simpler than the second. However, the problem with this is that it is vulnerable to DDoS attacks, since anyone can open a connection before being authenticated. In the previous approaches, no one could make a connection unless a valid token was present as params.
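A server-side sketch of this first-message check is shown here. `createAuthGate`, `SocketLike`, and the `{ "token": ... }` message shape are hypothetical. Close code 1008 is the standard WebSocket "policy violation" code, but using it for this case is a choice made for the example.

```typescript
// Minimal shape of a server-side socket that this sketch relies on.
interface SocketLike {
  close(code: number, reason: string): void;
}

// Returns a message handler that only lets messages through after a valid first message.
function createAuthGate(
  socket: SocketLike,
  isValidToken: (token: string) => boolean,
  onMessage: (raw: string) => void,
): (raw: string) => void {
  let state: "pending" | "authenticated" | "rejected" = "pending";
  return (raw) => {
    if (state === "rejected") return;
    if (state === "authenticated") {
      onMessage(raw);
      return;
    }
    // First message: expect JSON such as {"token":"..."} (assumed shape).
    let token: unknown;
    try {
      token = (JSON.parse(raw) as { token?: unknown }).token;
    } catch {
      token = undefined;
    }
    if (typeof token === "string" && isValidToken(token)) {
      state = "authenticated";
      return;
    }
    state = "rejected";
    socket.close(1008, "invalid or missing auth token");
  };
}
```

The connection is already open while the gate waits, so a real server would probably also close sockets that do not authenticate within a short deadline.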

IV) Using cookies

Cookies are still possible since there is an HTTP handshake before the WS connection is established, but the WS server needs to be deployed on the same domain as the web app.

V) Using Sec-WebSocket-Protocol HTTP Header

The initial HTTP handshake contains a Sec-WebSocket-Protocol header, which we can use to send the token. The problem is that it is not as secure as the Authorization header: it can be logged as plain text in proxy servers, and proxy servers and load balancers may not know how to handle it, since there is no standard for using it this way.

Wrapping Up

Man! That was a lot. Hope you guys got to know a ton of things from this article. I took the material from the awesome video of Dmitriy Zhiganov so gotta show some credits. We will meet in the next blog till then goodbye!
