There is increasing number of games that advertise themselves to be "RTX On". Indeed, games with ray tracing enabled usually come with stunning graphics. For example, a famous game called MineCraft. The difference between the scene with or without ray tracing is matchless.

Images from Nvidia: https://images.nvidia.com/geforce-com/international/comparisons/minecraft-ray-tracing/minecraft-with-rtx-interactive-comparison-001-on-vs-off.html

It is like two totally different games! In the first image, it is like we are still playing a game in 1980s console's style. However, in the second image, it is the game of next generation.

There is more lighting coming through the narrow gap at the top of the scene. The shadows feel more realistic. Reflection also appears on the ground of this cave. Those visual effects do not show in the image marked as "RTX OFF" at all!

Is ray tracing a new thing?

Fun fact: ray tracing is not a new thing! The concept was already described in 16th century. There were no computers at that era. Therefore, no magic happens in ray tracing but mathematics and physics. It is not like a nuclear weapon that only few world-leading scientists who can understand and employ this kind of technology.

But why ray tracing hadn't been so popular 10 years ago as today? Well, the answer is simple: computation power.

Rendering a scene with ray tracing is temporally and computationally costly, but in order to understand why this is the case, please follow me to the next section.

Ray tracing algorithm

To understand how the algorithm works, we need to know a basic knowledge: how do our eyes see the world?

The answer is simple: our eyes see the world by the lights around us come to our eyes. It is a passive process because there are no mysterious particles that come out from our eyes to the world, collect information from the environment around us, and then go back to tell our eyes what is happening.

OK, here comes the funny part: the ray tracing algorithm is actually the mysterious process described above!

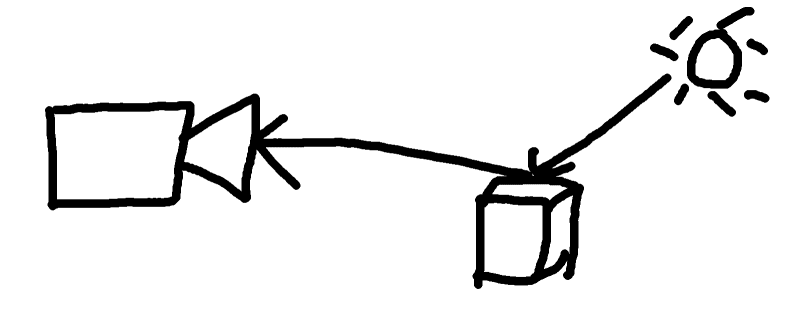

Imagine our monitor is a camera that is taking photos of the world inside our screen. Rather than the camera waiting for the lights come to our screen, the camera shoots the ray to the world proactively. When the ray hits something, it goes back to our camera and tell the camera what is the color of the object the ray just hit.

Here are images shown the difference, the arrows are the directions of lights:

(Sorry for my poor doodles/(ㄒoㄒ)/~~)

Yes! What happens in the ray tracing world is just what happens in the real world reversed!

At the stage, the algorithm seems to be easy and straightforward. For each pixel on our screen, it shoots a ray to the scene, when it hits something, it returns the color that the ray hit back to the pixel, which contributes to the color shown on our screen. Due to huge number of pixels that our screen usually have, the computation is better to be done on GPU than CPU, because GPU has better parallel processing power than CPU.

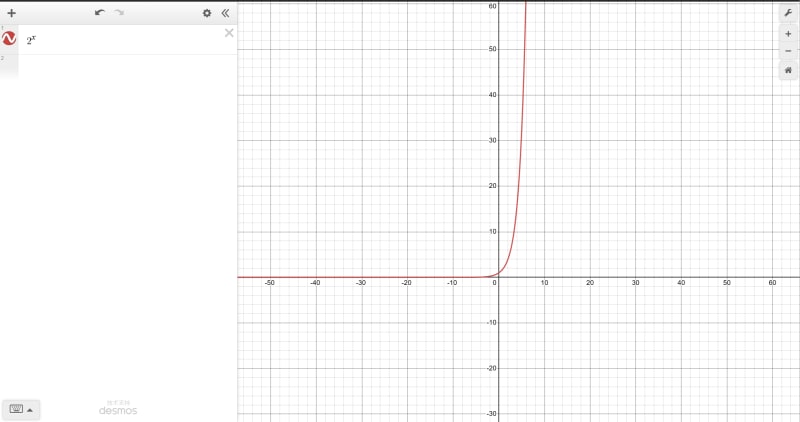

However, shooting one ray for each pixel is not enough. There may be only one ray coming out from the pixel, but when the ray hits something, the ray may be scattered into more rays to shoot to other directions in the scene. The ray will be scattered into more rays when it hits second object, and so forth. This kind of operation is the key to make reflections and refractions possible, because we need color information from other objects to paint on the object we concern, and It is also the reason that makes the algorithm computationally expensive. One ray can spawn multiple rays, and each of those multiple rays can spawn much more. Mathematically, this is called "exponential function", which is one of the fastest growing functions.

An exponential function graph generated by: https://www.desmos.com/calculator

Besides there will be countless rays dancing in our scene, here comes another problem that increases the complexity of ray tracing algorithm even more: how do we check each ray collide with each object in the scene? Assume we know the collision algorithm that checks how a ray intersect with a object, but we still need to know which object is hit by ray and is closest to the origin of the ray. A ray may interesct multiple objects on its way, however, we are normally interested in the closest one. Yes, normally not always, because if we want to render something transparent, we have to know what behind that transparent object. But, whatever it is opaque or transparent, the worst case of intersection detection is when the ray has to go through everything in the scene, which means the total time complexity is equal to the number of objects multiplies with the number of rays generated. Obviously, if we really take the naïve approach to do the job, the time to render our scene will be sky-rocketed, which is totally unacceptable for realtime rendering. Remember, we are video game makers, so every millisecond counts!

Acceleration Structure

If there is no way to accelerate the ray tracing process, it is impossible for us to enjoy the beauty while we are playing video games. Fortunately, there are plenty of methods helping our devices run those time-consuming algorithms faster. One of them is called "bounding volume hierarchy"(BVH), and it is the most fundemental one.

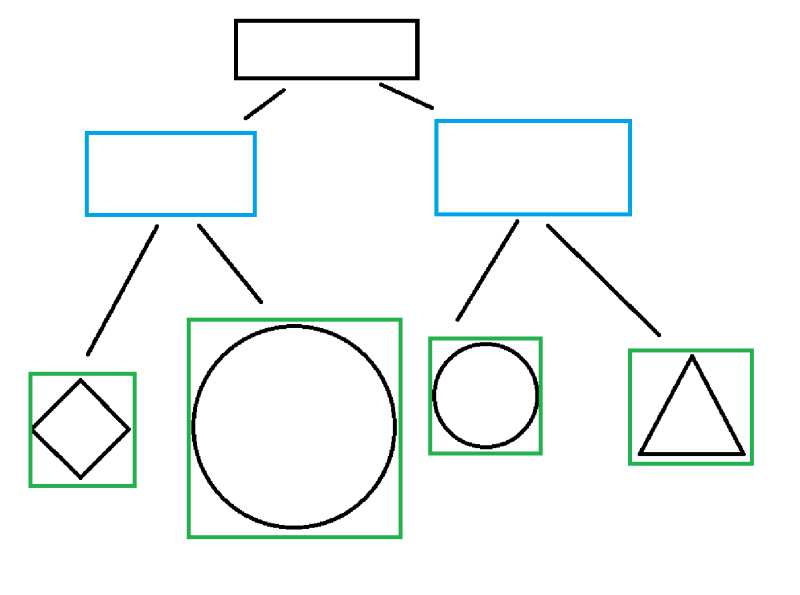

The basic idea of BVH is simple: for each object, whatever the shape it is, try to make a retangular box that is big enough to cover the entire object. Then, try to make a bigger box that covers a group of boxes that are close to each other. This process can be done recursively until everything in the scene is covered by BVHs.

As shown in the image, each object is covered by a green box. And those boxes that are close to each other is covered by a bigger aqua box. Fun fact, we have a name for those boxes: axis-aligned bounding box (AABB). It gets the name because all of its sides are strctly aligned with axes. There is another bounding box called oriented bouding box, which is retagular box as well, but it can be rotated if the object it covers is rotated. You can pick any one of them (or both) to group objects in the scene. For simplicity's sake, we will still use the term "BVH" when we learn the acceleration algorithm.

Those BVHs can be grouped by a tree structure. Each child node is covered by its parent node. The root is the biggest box that covers the whole scene. BVHs become smaller as the level goes deeper, until it reaches the leaf node, which is the box that covers the object directly.

When the program shoots a ray into the scene, rather than checking everything in the world, it firstly figures out if it collides with the root of the tree. If nothing happens, it does not hit any thing, then the algorithm can return the result earlier. If it does hit the root, then the process continues. When the program checks the collisions at the second level of the tree, the ray may hit one or more BVHs, then all objects covered by boxes that are not hit by the ray can be ignored in this process, so we can focus on the objects covered by boxes touched by the ray. It can also be done recursively, because if we have smaller BVHs, we can do this operation again against those smaller BVHs, then we can ignore even more objects which are certain not to be on the path of the ray, until the reaches the leaves. All those operations mentioned above have a name called "Broad phase detection". After broad phase detection, there will be handful objects we have to check precisely, this is called "narrow phase detection". At this stage, we still need to run precise checking algorithms for everything left to know which objects are truly collided or there are nothing collided by the ray at all.

Mathematically, it reduces the complexity of the algorithm from O(n) to O(logN), which is an enormous decrease for our detection work.

Here comes with the fun fact: what RT cores of GPU do is exactlly like as mentioned above. GPU helps us build the BVH trees, and the building process is accelerated by specific hardware of GPU. GPU also helps us check the collisions of ray against the scene, and it is accelerated by hardware as well.

Conclusion

Hopefully, you can learn some basic staff about ray tracing algorithm and how it is accelerated by acceleration structure by building BVH tree. In the next part, I will introduce how Vulkan plays the role of realtime raytracing rendering.

Top comments (0)