With the introduction of Gen AI and ChatGPT, my focus shifted towards industrial applications of Gen AI. As we build up experience with real-life use cases, it's becoming clearer that you get the most value out of it when you build a pipeline around it that processes your data in multiple steps. Here, you have the LLM perform individual, isolated "supervised" tasks rather than letting it do the whole thing end-to-end in one shot.
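To make the pipeline idea concrete, here is a minimal sketch in Python. Everything in it is my own illustration rather than a specific framework: `run_pipeline` is a hypothetical helper, and the `label` step is a stand-in for a real LLM call. The point is only that each step does one isolated task and that every intermediate result is kept, so a person (or another check) can review it.

```python
from typing import Any, Callable, List, Tuple

# A step is a (name, function) pair; the function takes the previous output.
Step = Tuple[str, Callable[[Any], Any]]


def run_pipeline(steps: List[Step], data: Any) -> Tuple[Any, List[Tuple[str, Any]]]:
    """Run steps in order, feeding each one the previous step's output.

    Returns the final output plus a trace of every intermediate result,
    which is what makes each isolated task reviewable on its own.
    """
    trace = []
    for name, step in steps:
        data = step(data)
        trace.append((name, data))
    return data, trace


# Hypothetical steps: "clean" is plain preprocessing, "label" stands in
# for a single, narrowly scoped LLM call (assumption for illustration).
steps = [
    ("clean", str.strip),
    ("label", lambda text: f"[LLM output for: {text}]"),
]

result, trace = run_pipeline(steps, "  raw input  ")
print(result)
for name, output in trace:
    print(name, "->", output)
```

Because the steps are plain callables, swapping the stand-in for a real model call does not change the pipeline itself.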

The simplest example, which I came up with yesterday in one of my use cases, is using an LLM to explain the output of image recognition and classification.

The thing is, image recognition alone simply lists the elements it finds in an image. Without the cultural context, it may not be immediately clear what you are actually looking at.

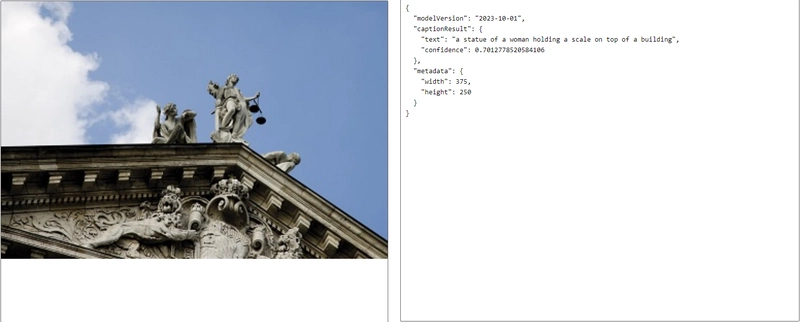

For example, here's the product of image captioning.

The result here is simply "a statue of a woman holding a scale on top of a building." If you ask me, I don't think I would describe it like that.

But let's run these results through another iteration of analysis with Gen AI and ask it to come up with a suggestion based on the image description.

Now, this makes much more sense and sounds more like a human would describe something they see.
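The second step can be sketched roughly like this. It is a sketch under assumptions, not the exact setup I used: the prompt wording is illustrative, `build_prompt` and `explain_caption` are hypothetical helper names, and `llm` is any function that takes a prompt string and returns text, so you can plug in whichever client you prefer.

```python
from typing import Callable

# Illustrative prompt wording (an assumption, not a tuned prompt).
PROMPT_TEMPLATE = (
    "An image captioning model described a picture as:\n"
    '"{caption}"\n'
    "Suggest what the picture most likely shows, including any cultural "
    "or symbolic meaning, in one or two sentences."
)


def build_prompt(caption: str) -> str:
    """Wrap the raw caption in an instruction for the LLM."""
    return PROMPT_TEMPLATE.format(caption=caption.strip())


def explain_caption(caption: str, llm: Callable[[str], str]) -> str:
    """Second pipeline step: ask the LLM to interpret the caption."""
    return llm(build_prompt(caption)).strip()


def fake_llm(prompt: str) -> str:
    # Placeholder so the sketch runs without network access or API keys.
    return " (model answer would appear here) "


caption = "a statue of a woman holding a scale on top of a building"
print(build_prompt(caption))
print(explain_caption(caption, fake_llm))
```

Keeping the captioning model and the LLM behind separate functions means you can inspect the caption before it is interpreted, which is the "supervised" part of the pipeline.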

Still, when leveraging Gen AI for analysis, it's good to remember that it does not understand the reasons behind actions or their consequences. Keep these limitations in mind the next time you outsource analysis to the machine.
