Human Language as a Programming Language

Today we’re going to take a break from programming (sort of) and discuss a unique take on improving ChatGPT’s problem-solving abilities.

I’ve recently been leveraging AI to create children’s coloring books. I have zero artistic creativity, but so far I’ve been able to publish several, and with each iteration I explore new ways to automate the process. I’m at the point now where I can create a 120–200 page coloring book in less than an hour. But I have a problem…

I’m not making any sales.

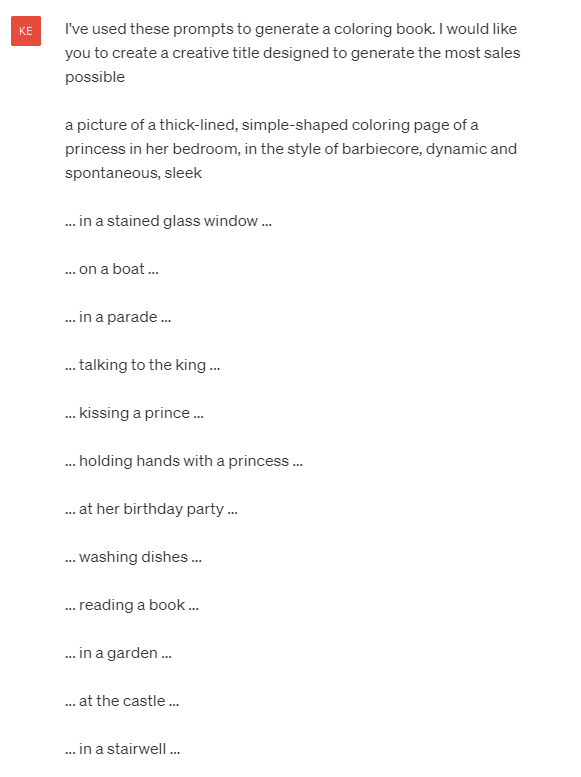

I’m not really surprised, since my focus has been almost entirely on generating “passable” content as quickly as possible. I do pay some attention to quality beyond passing the publisher’s quality checks, but I digress. So far I’ve made $0.13 in royalties, and I’d like to start improving that. So I thought I’d leverage AI more and get ChatGPT to write my titles and marketing descriptions and choose my keywords. “Default” ChatGPT does well enough at this and excels at creativity, but without direction the results won’t be great… and could even get you slapped with a takedown order or a lawsuit.

Prediction Engine

When I first started using ChatGPT, I approached it as if it were a search engine, and it produced far better results than an actual search engine. I remember telling everyone I came across that it was amazing: it was like “having a conversation with Google”. But I soon started running into a common problem with large language models, “hallucinations” (the model gives you a wrong answer while sounding completely confident that it’s truthful and accurate).

Since it’s a prediction model, if you don’t “shape” it, it will more or less blindly predict what comes next, and it may give you more misleading information than it would if you gave it a specific role. It’s not trying to mislead you, and there are safeguards in place to prevent that, but in my experience it needs a lot of help from me in pointing out mistakes and errors. This is not only time-consuming, but also eats away at the (currently limited) number of messages I can exchange with GPT-4 (the latest ChatGPT model).

My First Attempt at a Good Title May Have Resulted in a Lawsuit 👮

My latest coloring book features princesses in various situations. I know ChatGPT does better with more information, so I gave it a general purpose and fed it a bunch of my prompts.

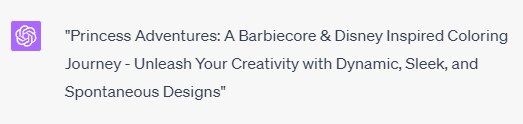

Did ChatGPT just say the “D” word? In my title? That I’m commercializing… yeah pretty sure that’s not ok… and Barbiecore seems a little dicey.

Ok, let’s break that interaction down in reverse:

1. ChatGPT is aware of the legal consequences of using another entity’s trademark
2. ChatGPT is aware that Disney is trademarked
3. ChatGPT is aware I want to generate revenue
4. ChatGPT advised me to use Disney

Strictly speaking, #4 isn’t a hallucination, because I never asked ChatGPT to keep me from getting sued. Still, ChatGPT generally attempts to look out for a user’s well-being and provides disclaimers when it notices that something in its advice may be harmful to the user.

Further, I asked about the use of “Barbiecore”, and ChatGPT admitted this is a gray area: Mattel doesn’t hold a trademark on “Barbiecore” and it is a widely used term, but using it could bring legal consequences anyway.

So what can we do to reduce hallucinations such as this?

Human Language as a Programming Language

A lot of software developers are afraid of losing their jobs to AI right now, but I think we’re in one of the best positions possible, for a number of reasons that I may explore in another post. In this one, I’ll focus on the reason that makes the most logical sense to me: using my words as a tool to shape and manipulate a software application.

Role-Playing with AI

One of the most effective strategies I’ve found for shaping and manipulating AI chat bots, particularly ChatGPT, is role-playing. This involves simulating a conversation between different roles, each with their own unique perspective and objectives. For example, in my coloring book project, I simulated a conversation between a kindergarten teacher, a marketing professional, a business professional, and a legal professional.

The kindergarten teacher’s role was to focus on the well-being of children, suggesting ideas that would be exciting and engaging for them. The marketing professional’s role was to focus on revenue generation, emphasizing the need to appeal to parents who would be making the purchase. The business professional kept the conversation focused on the topic of generating revenue, and the legal professional ensured all suggestions were legally sound.

This role-play approach led to a more comprehensive and balanced discussion. It allowed the AI to consider a wider range of perspectives and to weigh competing concerns against each other before settling on a suggestion. It also made the conversation more engaging and relatable, as I could see my own concerns and objectives reflected in the different roles.

Establishing a Baseline

I don’t know the inner workings of ChatGPT, but when I design chat bots using large language models, I start by directing them with a role and formatting instructions, and I make sure this message is carried forward in every exchange with the bot so it’s always directed. I also carry the user’s first message forward, or the first few messages, depending on token usage. This ensures that the bot behaves the way I intend within my application and still meets the user’s goals.
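The idea of pinning a directing message and the user’s opening message can be sketched roughly as follows. This is a minimal sketch, not how ChatGPT or any particular product works: it assumes a chat API that accepts a list of role/content messages, the names `build_context` and `estimate_tokens` are hypothetical, and the word-count estimate is only a stand-in for a real tokenizer.

```python
from typing import Dict, List

Message = Dict[str, str]


def estimate_tokens(message: Message) -> int:
    """Rough stand-in for a real tokenizer (assumption): one token per word."""
    return len(message["content"].split())


def build_context(
    system_prompt: str,
    history: List[Message],
    budget: int,
    pinned_user_messages: int = 1,
) -> List[Message]:
    """Return the messages to send: system prompt, pinned early user
    messages, then as many of the most recent messages as fit the budget."""
    system = {"role": "system", "content": system_prompt}

    # Pin the first N user messages so the user's original goal is never dropped.
    user_idx = [i for i, m in enumerate(history) if m["role"] == "user"]
    pinned_idx = user_idx[:pinned_user_messages]

    used = estimate_tokens(system) + sum(estimate_tokens(history[i]) for i in pinned_idx)

    # Walk backwards from the newest message, keeping whatever still fits.
    recent_idx = []
    for i in range(len(history) - 1, -1, -1):
        if i in pinned_idx:
            continue
        cost = estimate_tokens(history[i])
        if used + cost > budget:
            break
        recent_idx.append(i)
        used += cost

    # Restore chronological order before sending.
    kept = sorted(set(pinned_idx) | set(recent_idx))
    return [system] + [history[i] for i in kept]
```

With a tight budget, the oldest unpinned messages drop out first, while the role instructions and the user’s first message are always sent.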

I assume ChatGPT works the same way, and maybe they’ve built some “forgetting” into its “memory”. My best bet probably would have been to start a new conversation, but asking it to forget the earlier messages worked this time.

There’s a lot going on here, so let’s break that down:

I reset the conversation by asking ChatGPT to forget all prior messages and use this message as a baseline. I then introduced my problem (not making sales). Next, I introduced the key players in the conversation and their roles. Finally, I used a separator, then asked for what I was seeking: a title, a description, and 7 keywords.

As I mentioned, resetting the conversation may not always be effective, so it may be best to start fresh with a new conversation. What you should definitely do is introduce your problem, think about the people you’d want on your “team” if you were solving this problem together, and specify what each of them focuses on in the conversation.
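The structure described above (reset, problem, players, separator, request) can be assembled programmatically. The following is a sketch only: the function name `build_roleplay_prompt` is hypothetical, and the wording of each line is illustrative rather than the exact prompt used in this post.

```python
from typing import Dict


def build_roleplay_prompt(problem: str, roles: Dict[str, str], request: str) -> str:
    """Assemble a prompt: reset, problem statement, role list, separator, request."""
    lines = [
        # Illustrative wording (assumption), not the original prompt.
        "Forget all prior messages and use this message as a baseline.",
        "",
        f"Problem: {problem}",
        "",
        "Simulate a conversation between the following people:",
    ]
    # One line per player, naming who they are and what they focus on.
    for name, focus in roles.items():
        lines.append(f"- {name}: {focus}")
    # A separator keeps the setup visually apart from the actual request.
    lines += ["", "---", "", request]
    return "\n".join(lines)


roles = {
    "Kindergarten teacher": "focuses on what is exciting and engaging for children",
    "Marketing professional": "focuses on revenue by appealing to the parents who buy",
    "Business professional": "keeps the conversation on generating revenue",
    "Legal professional": "makes sure every suggestion is legally sound",
}

prompt = build_roleplay_prompt(
    problem="My princess coloring book is not making any sales.",
    roles=roles,
    request="Give me a title, a description, and 7 keywords for the book.",
)
print(prompt)
```

Keeping the roles in a dictionary makes it easy to swap in a different “team” for a different problem without touching the rest of the prompt.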

The Power of Directed Conversation

This strategy of directed conversation, using human language as a programming language, has the potential to greatly improve the problem-solving abilities of AI like ChatGPT. By providing clear direction and context, we can guide the AI towards more accurate and useful predictions. This can help to avoid the issue of “hallucinations” and make the AI a more reliable and effective tool.

In my case, this approach helped ChatGPT to generate more effective titles, descriptions, and keywords for my coloring books. It also helped me to avoid potential legal issues by ensuring that all suggestions were legally sound.

Testing This Approach

Ok, but was it really effective? What would happen for instance if someone purposefully asked one of the players to do something legally questionable? 😅

Looking Ahead

As AI continues to evolve, I believe that strategies like role-playing will become increasingly important. They allow us to harness the power of AI in a more controlled and directed way, leading to better outcomes and fewer errors. As software developers, we are in a unique position to leverage these strategies due to our well-honed ability to direct, shape, and create software applications using language (natural and otherwise).

So, while AI may be changing the landscape of software development, I see it as an opportunity rather than a threat. By embracing new strategies and approaches, we can continue to innovate and create value in this exciting field. And who knows? Maybe one day, we’ll all be having conversations with entire codebases, refactoring them to our heart’s content with a mere phrase such as “Make this codebase more modular”.
