An Analogy

Imagine you are starting a pie company. You want not only to make delicious pies, to build an efficient process for making delicious pies. You'll need to build machines that make pies. At a minimum, you'll need to measure a machine's performance. Ideally, you'll be able to reliably predict a machine's performance.

In this analogy, your code (more specifically, your algorithm) is your pie machine. Benchmarking tools are your way to measure performance, and Big-O is your way to predict performance.

An Overview

Big-O is a notation. It's a back-of-the-envelope way for engineers to communicate algorithm runtime complexity to each other. Don't be intimidated by runtime complexity. Some algorithms solve the same problem much more efficiently than others. The faster algorithm is said to have a lower runtime complexity than the other. Runtime complexities always reflect the worst case scenario of an algorithm, for example searching through an array and the item you were looking for was the last element in the array.

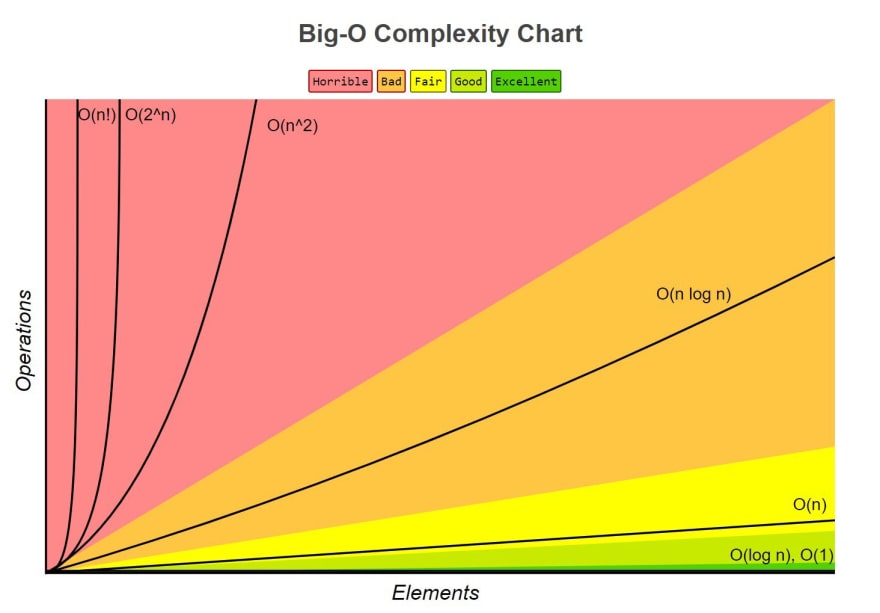

Take a look at the image below from the Big-O Cheatsheet. This site is a great resource for getting familiar with algorithms and their runtimes.

This chart compares the runtime complexities of seven different algorithm types at varying input lengths. n here represents the size of an input; a greater n value is a greater input length. This chart tells us that some algorithms' runtimes become immeasurably complex even for small inputs, and that some algorithms' runtimes remain 'simple' even for large inputs. Below, I'll provide some examples for O(1), O(log n), O(n), O(n log n), and O(n^2).

Some Examples

O(1)

O(1) has the lowest runtime complexity. It makes no difference what the size of the input is.

- Selecting the first or last element of an array.

O(log n)

For O(log n) logarithmic algorithms, the runtime will approach an asymptote and plateau as the data set increases in size.

- A binary search tree.

O(n)

O(n) algorithms are said to have a linear runtime. Their runtime is linearly proportional to their input size.

- Filtering an array.

O(n log n)

O(n log n), or linearithmic, algorithms are ones where you perform logarithmic algorithms n times.

- Sorting an array. Think: sorting is like running search a bunch of times in a row.

O(n^2 )

O(n^2 ) or quadratic algorithms have runtimes that are proportional to the square of the input size.

- Looking for duplicates in an array, bubble sorts, or any kind of nested iteration.

You may notice that I skipped over O(2^n ) and O(n!). I did this because you are less likely to encounter them than the other algorithm types. Because of their high runtime complexity, they are not a great choice for most tasks.

Top comments (0)